Ruyi-Models: open-source image-to-video models with camera (lens) control and motion amplitude control

General Introduction

Ruyi-Models is an open-source project for generating high-quality video from images. Developed by the IamCreateAI team, it generates cinematic-quality video at 768 resolution and 24 frames per second, for a total of 120 frames (5 seconds) per clip. Ruyi-Models supports both camera (shot) control and motion amplitude control. On an RTX 3090 or RTX 4090 graphics card it can generate 120 frames at 512 resolution (or about 72 frames at 768 resolution) without loss of quality. The project provides detailed installation and usage instructions for different user needs.
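The frame counts above follow from frames = fps × seconds. A minimal shell sketch of that arithmetic is shown below. It assumes the roughly 72-frame clips at 768 resolution also run at 24 fps, which the text does not state explicitly; `frames_for` and `seconds_for` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# frames = fps * seconds, using the figures quoted in the introduction.
# ASSUMPTION: the ~72-frame clips at 768 resolution also run at 24 fps.

# frames_for FPS SECONDS: print the number of frames in a clip
frames_for() {
  echo $(( $1 * $2 ))
}

# seconds_for FRAMES FPS: print the clip length in whole seconds
seconds_for() {
  echo $(( $1 / $2 ))
}

echo "5 s at 24 fps: $(frames_for 24 5) frames"     # prints: 5 s at 24 fps: 120 frames
echo "72 frames at 24 fps: $(seconds_for 72 24) s"  # prints: 72 frames at 24 fps: 3 s
```

Under that assumption, the 120-frame limit at 512 resolution corresponds to a 5-second clip, while about 72 frames at 768 resolution is roughly 3 seconds.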

Official website: https://www.iamcreate.ai/

Feature List

- Image-to-Video Generation: Generates high-quality, cinematic video from input images.

- Lens control: Supports left/right and up/down camera movement as well as a static camera.

- Motion amplitude control: Supports several levels of motion amplitude.

- GPU Memory Optimization: Provides several GPU memory optimization options to suit different hardware.

- Multiple installation methods: Supports installation via ComfyUI Manager or manual installation.

- Model Download: Supports automatic and manual model download.

How to Use

Installation process

- Clone the repository and install the required dependencies:

```shell
git clone https://github.com/IamCreateAI/Ruyi-Models
cd Ruyi-Models
pip install -r requirements.txt
```

- For ComfyUI users:

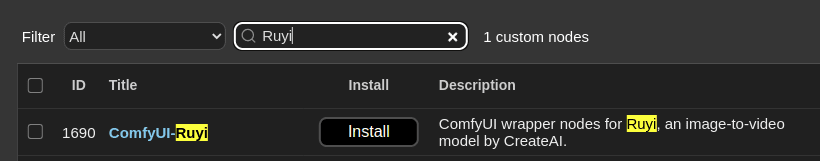

- Method 1: Installation through ComfyUI Manager.

Launch ComfyUI and open the Manager. Select Custom Nodes Manager and search for "Ruyi". Select ComfyUI-Ruyi in the search results (as shown in the screenshot) and click the "Install" button to install it.

Finally, search for "ComfyUI-VideoHelperSuite" and install it.

- Method 2: Manual installation:

```shell
cd ComfyUI/custom_nodes/
git clone https://github.com/IamCreateAI/Ruyi-Models.git
pip install -r Ruyi-Models/requirements.txt
```


- Install ComfyUI-VideoHelperSuite to display the video output:

```shell
cd ComfyUI/custom_nodes/
git clone https://github.com/Kosinkadink/ComfyUI-VideoHelperSuite.git
pip install -r ComfyUI-VideoHelperSuite/requirements.txt
```

- For Windows users:

- Use the ComfyUI_windows_portable_nvidia distribution:

```bat
cd ComfyUI_windows_portable/ComfyUI/custom_nodes
git clone https://github.com/IamCreateAI/Ruyi-Models.git
..\..\python_embeded\python.exe -m pip install -r Ruyi-Models/requirements.txt
```

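The manual ComfyUI steps can be combined into a single re-runnable script. The sketch below is hypothetical and not part of the project: it assumes bash, git, and a Python interpreter with pip, and the helper names `clone_if_missing` and `install_ruyi_nodes` are made up for illustration. Repositories that are already cloned are skipped rather than cloned again.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install the Ruyi and VideoHelperSuite custom nodes.
# Assumes bash, git, and a Python interpreter that has pip.
set -euo pipefail

# clone_if_missing URL DEST: clone URL into DEST unless DEST is already a git checkout
clone_if_missing() {
  local url="$1" dest="$2"
  if [ -d "$dest/.git" ]; then
    echo "skip: $dest already present"
    return 0
  fi
  git clone "$url" "$dest"
}

# install_ruyi_nodes NODES_DIR [PYTHON]: clone both repositories into
# NODES_DIR (ComfyUI/custom_nodes) and install their requirements with
# PYTHON -m pip (defaults to python3).
install_ruyi_nodes() {
  local nodes_dir="${1:?usage: install_ruyi_nodes NODES_DIR [PYTHON]}"
  local python="${2:-python3}"
  clone_if_missing https://github.com/IamCreateAI/Ruyi-Models.git \
    "$nodes_dir/Ruyi-Models"
  clone_if_missing https://github.com/Kosinkadink/ComfyUI-VideoHelperSuite.git \
    "$nodes_dir/ComfyUI-VideoHelperSuite"
  "$python" -m pip install -r "$nodes_dir/Ruyi-Models/requirements.txt"
  "$python" -m pip install -r "$nodes_dir/ComfyUI-VideoHelperSuite/requirements.txt"
}
```

For example, source the script and run `install_ruyi_nodes ComfyUI/custom_nodes` from the directory that contains `ComfyUI`. With the Windows portable build in Git Bash, you could pass the embedded interpreter as the second argument, e.g. `install_ruyi_nodes ComfyUI_windows_portable/ComfyUI/custom_nodes ComfyUI_windows_portable/python_embeded/python.exe`.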

Usage

- Download the model and save it to the specified path:

- For example, download the Ruyi-Mini-7B model and save it to the `Ruyi-Models/models` folder.

- For ComfyUI users, the path should be `ComfyUI/models/Ruyi`.
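For a manual download, a small shell sketch can pick the target folder for each setup and fetch the weights into it. Several parts are assumptions rather than documented facts: that the weights sit in a sub-folder named `Ruyi-Mini-7B`, and that they are published on Hugging Face under the repository id `IamCreateAI/Ruyi-Mini-7B` (check the project page before relying on it). `ruyi_model_dir` and `fetch_ruyi_weights` are hypothetical names; `huggingface-cli download` comes from the `huggingface_hub` package.

```shell
#!/usr/bin/env bash
# Sketch: prepare the model folder and optionally download the weights.
# ASSUMPTIONS: weights go in a "Ruyi-Mini-7B" sub-folder, and the Hugging Face
# repository id is "IamCreateAI/Ruyi-Mini-7B".
set -euo pipefail

# ruyi_model_dir MODE: print the target folder for "standalone" or "comfyui" use
ruyi_model_dir() {
  case "$1" in
    standalone) echo "Ruyi-Models/models/Ruyi-Mini-7B" ;;
    comfyui)    echo "ComfyUI/models/Ruyi/Ruyi-Mini-7B" ;;
    *) echo "unknown mode: $1" >&2; return 1 ;;
  esac
}

# fetch_ruyi_weights MODE: create the folder and download the weights into it
# (requires huggingface-cli from the huggingface_hub package)
fetch_ruyi_weights() {
  local dest
  dest="$(ruyi_model_dir "$1")"
  mkdir -p "$dest"
  huggingface-cli download IamCreateAI/Ruyi-Mini-7B --local-dir "$dest"
}
```

For example, running `fetch_ruyi_weights comfyui` from the directory that contains `ComfyUI` would place the files under `ComfyUI/models/Ruyi/Ruyi-Mini-7B`. For the standalone setup this step is optional, because `predict_i2v.py` downloads the model automatically.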

- Run the model:

- Run directly with Python code:

```shell
python3 predict_i2v.py
```

- The script downloads the model to the `Ruyi-Models/models` folder and uses the images in the `assets` folder as the start and end frames for video inference. You can modify variables in the script to change the input images and to set parameters such as video length and resolution.
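Before a long generation run, it can help to confirm that the input images are in place. The hypothetical wrapper below (`run_ruyi` is a made-up name) checks for the `assets` folder and then runs the documented `python3 predict_i2v.py` command from the repository root, mirroring the earlier `cd Ruyi-Models` step. It assumes `predict_i2v.py` sits at the repository root and resolves `assets` and `models` relative to the working directory.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the documented "python3 predict_i2v.py" step.
# ASSUMPTION: predict_i2v.py is at the repository root and reads "assets"
# and "models" relative to the current working directory.
set -euo pipefail

# run_ruyi [REPO_DIR]: check for the input images, then run inference
run_ruyi() {
  local repo="${1:-Ruyi-Models}"
  if [ ! -d "$repo/assets" ]; then
    echo "error: $repo/assets not found (start/end frame images go there)" >&2
    return 1
  fi
  # Run in a subshell from the repository root, as in the install steps
  (cd "$repo" && python3 predict_i2v.py)
}
```

Running it in a subshell leaves the caller's working directory unchanged after inference finishes.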

- GPU memory optimization:

- The script provides the `GPU_memory_mode` and `GPU_offload_steps` options to reduce GPU memory usage for different hardware. Using less GPU memory requires more RAM and results in longer generation times.
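To see how these two options are currently set before editing them, a short shell sketch can list their assignment lines with line numbers. It assumes both are assigned at the start of a line as `NAME = value` in `predict_i2v.py`, which the text does not state; `show_gpu_options` is a hypothetical helper, and no particular option values are implied.

```shell
#!/usr/bin/env bash
# Sketch: print the lines of predict_i2v.py that assign the GPU-memory options.
# ASSUMPTION: each option is assigned at the start of a line as "NAME = value".
set -euo pipefail

# show_gpu_options [SCRIPT]: grep the assignments, prefixed with line numbers
show_gpu_options() {
  local script="${1:-predict_i2v.py}"
  grep -nE '^[[:space:]]*(GPU_memory_mode|GPU_offload_steps)[[:space:]]*=' "$script"
}
```

Run `show_gpu_options` inside the `Ruyi-Models` folder, then read the comments next to the reported lines in the script for the accepted values.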

© Copyright notes

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
