Tutorial: How to create a chatbot with DeepSeek's ChatBox feature

ChatBox basics and preparation

The ChatBox functionality provided by the DeepSeek platform is essentially an API calling system: the user interacts with the model through the API. Complete the following preparation before creating a chatbot:

- Deploy Ollama locally: see the detailed tutorial below

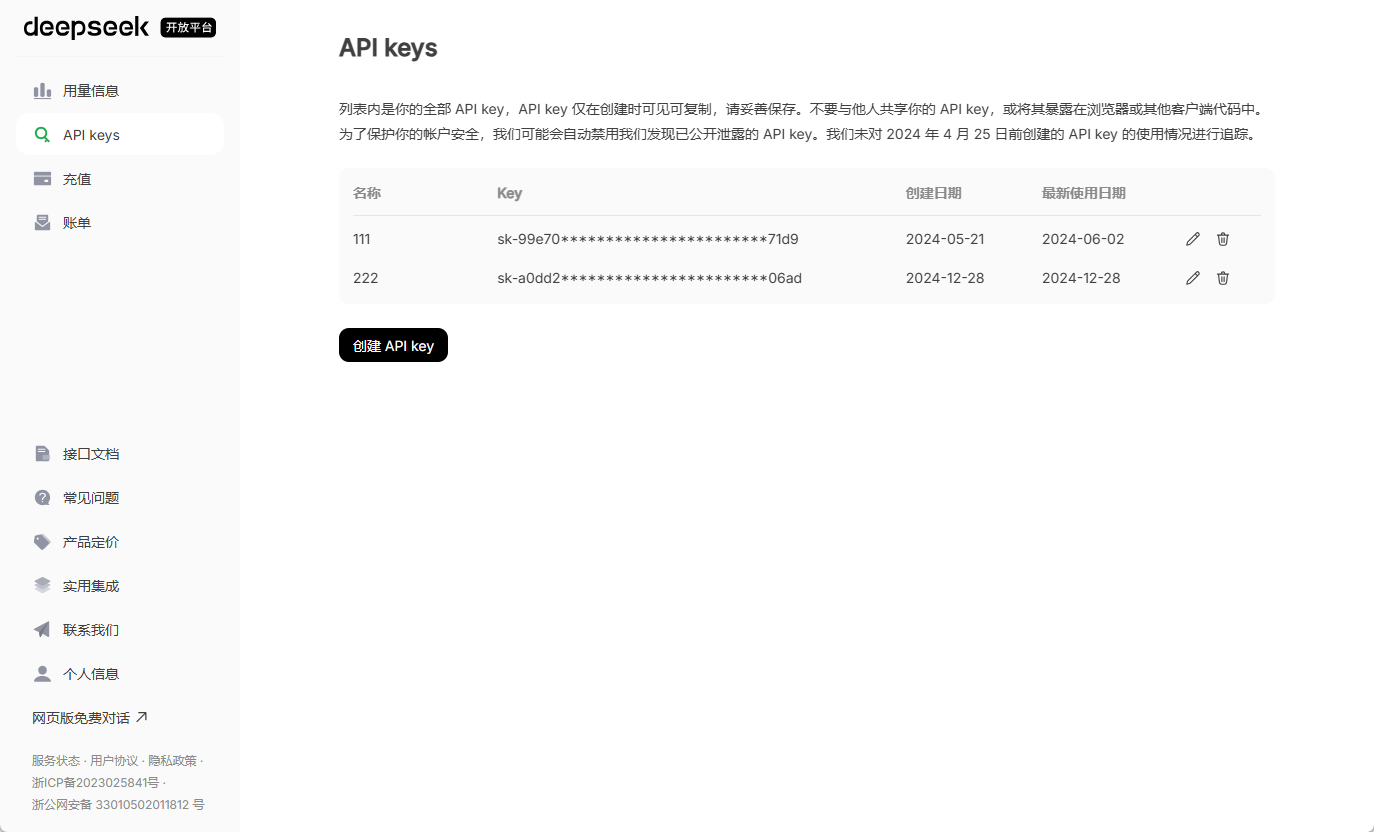

- Get the official API key: Visit DeepSeek's official website, create an account, and pass the enterprise authentication (individual developers choose the individual type). Then, in the console's "Credential Management" module, generate a dedicated API key, usually in the format ds-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx.
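As a rough illustration of what the API key is used for, the sketch below builds a single-turn chat request. It assumes an OpenAI-compatible endpoint at `https://api.deepseek.com/chat/completions` and the model name `deepseek-chat`, neither of which is stated in this tutorial, and it reads the key from a hypothetical `DEEPSEEK_API_KEY` environment variable. Check the official API documentation before relying on any of these values.

```python
import json
import os
import urllib.request

# Assumptions (not from this tutorial): the endpoint URL, the model name and
# the DEEPSEEK_API_KEY environment variable are illustrative only.
DEEPSEEK_URL = "https://api.deepseek.com/chat/completions"


def build_chat_request(api_key: str, user_text: str,
                       model: str = "deepseek-chat") -> urllib.request.Request:
    """Build (but do not send) an HTTP request for a single-turn chat."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
        "stream": False,
    }
    return urllib.request.Request(
        DEEPSEEK_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask_deepseek(user_text: str) -> str:
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(os.environ["DEEPSEEK_API_KEY"], user_text)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Keeping the key in an environment variable rather than in the script avoids committing it to version control by accident.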

To create a chatbot using DeepSeek's ChatBox feature, follow the steps below to combine local deployment with interface configuration for efficient interaction:

I. Basic environment setup

- Installation of the Ollama Framework

Ollama is a lightweight framework for running AI models locally, with support for open source models such as DeepSeek.

- Visit the official Ollama website and choose the installation package for your system (.exe for Windows, .dmg for Mac).

- After the installation is complete, run `ollama list` in the terminal to verify it. If a list of models is displayed (e.g. llama3), the installation succeeded.
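The verification step above can also be scripted. This is a minimal sketch, assuming `ollama list` prints a header row followed by one row per model with the model name in the first column; the helper names are hypothetical.

```python
import shutil
import subprocess
from typing import List, Optional


def parse_model_names(list_output: str) -> List[str]:
    """Extract model names from the table printed by `ollama list`.

    Assumes the first non-empty line is a header row and that the model
    name is the first whitespace-separated column on each later line.
    """
    lines = [line for line in list_output.splitlines() if line.strip()]
    return [line.split()[0] for line in lines[1:]]


def installed_models() -> Optional[List[str]]:
    """Return installed model names, or None if the check fails."""
    if shutil.which("ollama") is None:
        return None  # the ollama executable is not on PATH
    try:
        result = subprocess.run(
            ["ollama", "list"], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    return parse_model_names(result.stdout)
```

A return value of `None` suggests Ollama is missing or not working, while an empty list only means that no model has been downloaded yet.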

- Deploying the DeepSeek model

- Select the model version according to the hardware configuration:

- Low-end hardware: choose the 1.5B or 8B parameter version (requires 2GB or more of video memory).

- High-performance hardware: the 16B version or higher is recommended (requires 16GB or more of video memory).


- Run the command in the terminal to download the model:

`ollama run deepseek-r1:8b` (replace the number with the parameter version you selected)

The first run downloads approximately 5GB of model files, so a stable network connection is required.


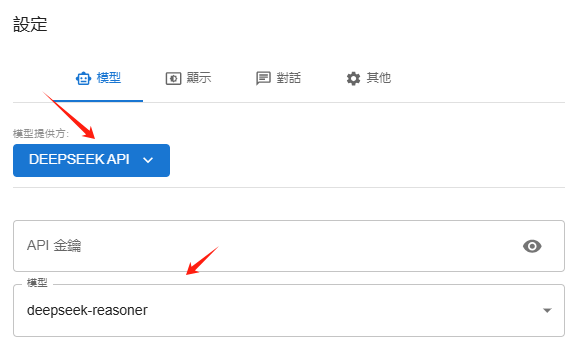
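The hardware guidance in this section can be written down as a small lookup. The thresholds below are the ones stated in this tutorial; the function name is hypothetical, and real memory requirements depend on quantization and context length, so treat the result as a starting point only.

```python
from typing import Optional

# (minimum video memory in GB, suggested parameter tier), as stated in the
# tutorial. Ordered from largest to smallest so the first match wins.
TIERS = [
    (16.0, "16B or higher"),
    (2.0, "1.5B or 8B"),
]


def suggest_parameter_tier(vram_gb: float) -> Optional[str]:
    """Map available video memory to the tiers described in the tutorial."""
    for minimum_gb, tier in TIERS:
        if vram_gb >= minimum_gb:
            return tier
    return None  # below the smallest requirement the tutorial mentions
```

For example, `suggest_parameter_tier(8)` falls into the low-end tier, while a value below 2 returns `None`.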

II. ChatBox interface configuration

- Installation of ChatBox Tool

- Visit the official ChatBox website and download the installation package for the corresponding system (Windows, Mac or Linux).

- Launch the software after installation and enter the setup interface.

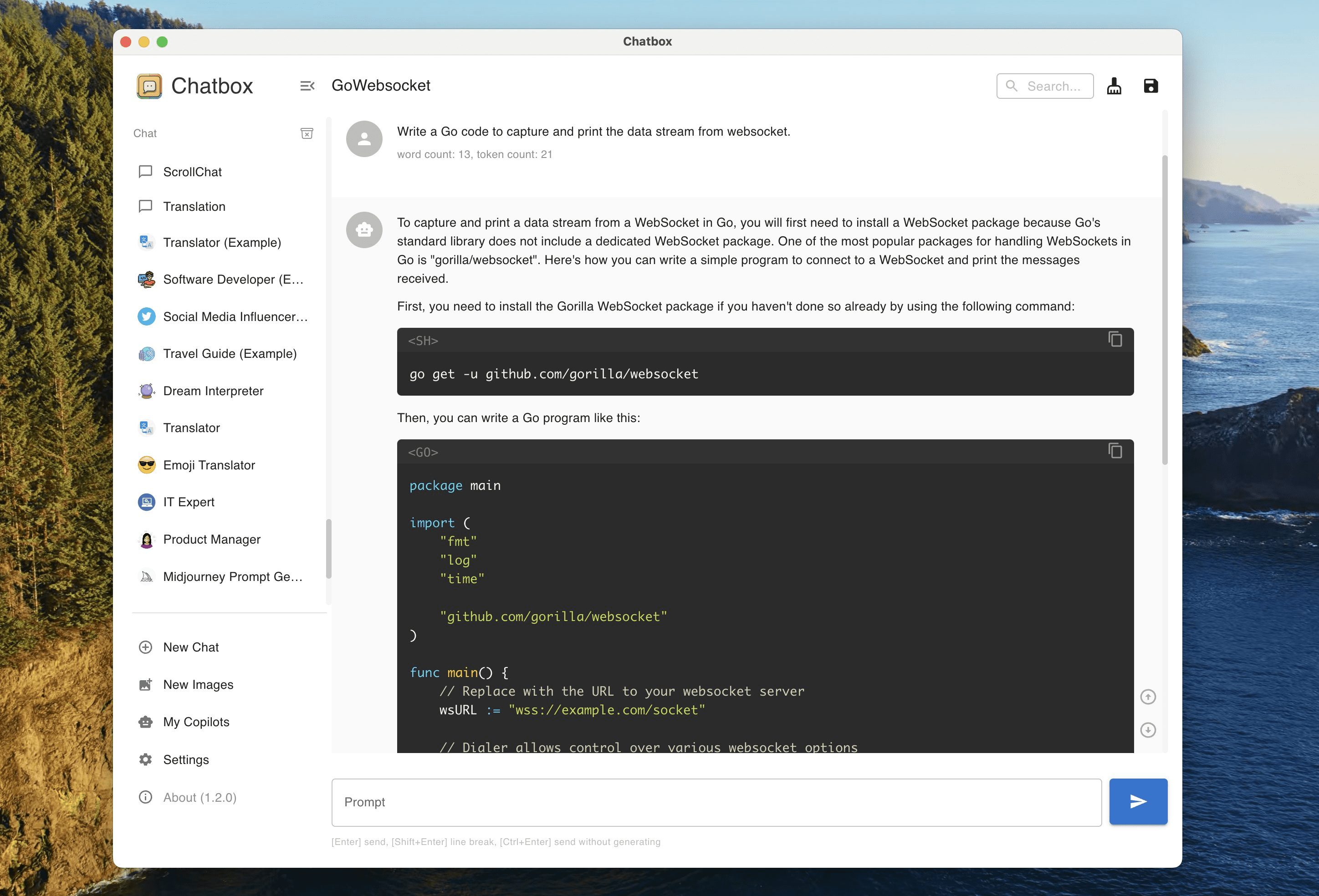

- Connecting Ollama with the DeepSeek Model

- In ChatBox, set "Model Provider" to OLLAMA API.

- Fill in the API address; the default is `http://localhost:11434` (if it is not auto-filled, enter it manually).

- Select the installed DeepSeek version in the "Model" drop-down menu (e.g. `deepseek-r1:8b`).

- Functional Testing

- Return to the main interface and enter a question (e.g. "Write a New Year's wish"). ChatBox generates the response through the local model, so the conversation works without a network connection.
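The same functional test can be run without the ChatBox interface by talking to the local Ollama server directly. The sketch below assumes Ollama's REST endpoint `/api/chat` at the default address given above; whether ChatBox issues exactly this request internally is an assumption, and the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default address from the tutorial


def build_chat_payload(history, user_text, model="deepseek-r1:8b"):
    """Append the user's turn and return the JSON body for /api/chat."""
    messages = list(history) + [{"role": "user", "content": user_text}]
    return {"model": model, "messages": messages, "stream": False}


def chat_once(history, user_text, model="deepseek-r1:8b"):
    """Send one turn to the local model; return (reply text, new history)."""
    payload = build_chat_payload(history, user_text, model)
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )  # supplying data makes urllib send a POST
    with urllib.request.urlopen(request, timeout=300) as response:
        reply = json.load(response)["message"]
    return reply["content"], payload["messages"] + [reply]
```

Calling `chat_once([], "Write a New Year's wish")` with the Ollama service running should return the model's reply together with a history list that can be passed into the next call to keep the conversation going.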


III. Advanced Functionality Expansion

- Multimodal Interaction and Knowledge Base Integration

- Use Page Assist or other browser plug-ins to add web search capability to local models, with support for retrieving real-time information from a specified search engine.

- After you upload documents such as PDF or TXT files to the knowledge base, the model can draw on local data to generate more accurate answers (an embedding model such as BERT needs to be configured).
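To make the knowledge base idea concrete, here is a minimal sketch of the retrieval step. It deliberately swaps the embedding model for a toy word-count vector, so it illustrates only the mechanism (embed, rank by cosine similarity, prepend the best chunk to the prompt) and not the quality a real embedding model would give. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list, top_k: int = 1) -> list:
    """Return the top_k document chunks most similar to the question."""
    query = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(query, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, chunks: list) -> str:
    """Prepend the retrieved chunk(s) to the question as context."""
    context = "\n".join(retrieve(question, chunks))
    return f"Answer using this context:\n{context}\n\nQuestion: {question}"
```

The string returned by `build_prompt` is what would be sent to the local model in place of the bare question.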

- Performance Optimization Recommendations

- Hardware adaptation: If dialog latency is high, switch to a model version with fewer parameters or upgrade the graphics card (RTX 4080 or higher recommended).

- Privacy: Local deployment avoids data uploads and is suitable for handling sensitive information.
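Whether latency is "high" is easier to judge with a number. The sketch below assumes that a non-streaming Ollama response carries the timing fields `eval_count` (generated tokens) and `eval_duration` (nanoseconds); the 5 tokens per second threshold is an arbitrary illustration, not a figure from this tutorial.

```python
def tokens_per_second(response: dict) -> float:
    """Compute generation speed from an Ollama response's timing fields."""
    duration_ns = response.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0  # timing data missing or unusable
    return response.get("eval_count", 0) * 1e9 / duration_ns


def is_too_slow(response: dict, min_tokens_per_second: float = 5.0) -> bool:
    """Flag responses generated slower than an (arbitrary) threshold."""
    return tokens_per_second(response) < min_tokens_per_second
```

If `is_too_slow` keeps returning `True` for typical prompts, that is a reasonable signal to try a smaller parameter version before considering a hardware upgrade.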

IV. Solutions to common problems

- Model download failed: Check your internet connection, and try temporarily turning off your firewall or antivirus program.

- ChatBox not responding: Confirm that the Ollama service is running (run `ollama serve` in the terminal).

- Insufficient video memory: Try a smaller model or increase virtual memory.
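When ChatBox does not respond, a quick way to tell whether the problem lies with ChatBox or with Ollama is to probe the service address directly. This is a minimal sketch, assuming the default address used earlier in this tutorial; the function name is hypothetical.

```python
import urllib.error
import urllib.request


def ollama_is_running(base_url: str = "http://localhost:11434",
                      timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or malformed URL: treat as not running.
        return False
```

If this returns `False`, start the service with `ollama serve` and try again; if it returns `True`, the address or model selection inside ChatBox is the more likely culprit.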

With the above steps, a localized, high-privacy DeepSeek chatbot can be quickly built. For further customization features (e.g. web integration), refer to the API development tutorial.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
