How do I convert the API provided by Dify to a format compatible with the OpenAI interface?

Anyone who knows Dify will agree that it is a great AI application platform, but the API it provides is not compatible with OpenAI's, so applications that can only talk to the OpenAI interface cannot connect to Dify.

What can be done about it?

One option is a relay ("transit") API. The best known is One API, a multi-model API management, load-balancing, and distribution system: it aggregates different AI models and exposes all of them through a unified OpenAI-compatible API. However, at the time of writing it does not support Dify, so although this approach points the way, it does not solve the problem above on its own.
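
To make the relay idea concrete, here is a toy Python sketch of how such a system might route OpenAI-format requests by model name, with simple round-robin load balancing across channels that serve the same model. It is not One API's code: the `Channel` and `Relay` classes, the channel names, and the URLs are hypothetical and only illustrate the concept.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Channel:
    """One upstream provider (hypothetical model), e.g. an OpenAI key or a Dify app."""
    name: str
    base_url: str
    models: list[str]


@dataclass
class Relay:
    """Toy relay: every request arrives in OpenAI format and is routed by its `model` field."""
    channels: list[Channel]
    _cycles: dict = field(default_factory=dict)

    def pick_channel(self, model: str) -> Channel:
        candidates = [c for c in self.channels if model in c.models]
        if not candidates:
            raise LookupError(f"no channel serves model {model!r}")
        # Round-robin over the channels serving this model (simple load balancing).
        # The cycle is built once per model, so channels added later are not picked up.
        if model not in self._cycles:
            self._cycles[model] = itertools.cycle(candidates)
        return next(self._cycles[model])


relay = Relay([
    Channel("openai-a", "https://api.openai.com", ["gpt-4o-mini"]),
    Channel("openai-b", "https://api.openai.com", ["gpt-4o-mini"]),
    Channel("my-dify", "http://host.docker.internal", ["dify"]),
])
print(relay.pick_channel("gpt-4o-mini").name)  # openai-a
print(relay.pick_channel("gpt-4o-mini").name)  # openai-b
print(relay.pick_channel("dify").name)         # my-dify
```

The client only ever sees one OpenAI-style endpoint; which upstream actually answers is decided by the `model` field. That is why a relay that knows how to talk to Dify can make Dify look like "just another model".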

Fortunately, an experienced developer built a secondary-development fork of One API called New API. This project fully supports Dify, so we can finally use the Dify API through an OpenAI-compatible interface!
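
The core job of such a relay is format translation. The Python sketch below assumes Dify's Service API `POST /v1/chat-messages` endpoint in blocking mode (request fields `inputs`, `query`, `response_mode`, `conversation_id`, `user`; reply field `answer`) and OpenAI's chat completion format. It is a simplified illustration, not New API's actual implementation: it forwards only the latest user message, ignores streaming, and the function names and default `user` value are hypothetical.

```python
import time
from typing import Any


def openai_to_dify(req: dict[str, Any], user: str = "new-api-user") -> dict[str, Any]:
    """Turn an OpenAI /v1/chat/completions body into a Dify /v1/chat-messages body.

    Simplification: only the most recent user message (assumed to be a plain string)
    becomes Dify's `query`; earlier turns are dropped, since Dify normally tracks
    history itself via `conversation_id`.
    """
    user_msgs = [m for m in req["messages"] if m.get("role") == "user"]
    if not user_msgs:
        raise ValueError("request contains no user message")
    return {
        "inputs": {},
        "query": user_msgs[-1]["content"],
        "response_mode": "blocking",  # streaming is not handled in this sketch
        "conversation_id": "",        # empty string starts a new conversation
        "user": user,
    }


def dify_to_openai(resp: dict[str, Any], model: str) -> dict[str, Any]:
    """Wrap a blocking Dify chat-messages reply in an OpenAI chat.completion object."""
    usage = resp.get("metadata", {}).get("usage", {})
    return {
        "id": resp.get("message_id", ""),
        "object": "chat.completion",
        "created": resp.get("created_at", int(time.time())),
        "model": model,
        "choices": [{
            "index": 0,
            "message": {"role": "assistant", "content": resp.get("answer", "")},
            "finish_reason": "stop",
        }],
        "usage": {
            "prompt_tokens": usage.get("prompt_tokens", 0),
            "completion_tokens": usage.get("completion_tokens", 0),
            "total_tokens": usage.get("total_tokens", 0),
        },
    }
```

A real relay also has to convert Dify's streaming events into OpenAI's `chat.completion.chunk` stream and decide how to carry conversation history; those details are where implementations such as New API differ from this sketch.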

After a successful deployment, open the New API console in a browser via IP + port and add a new channel:

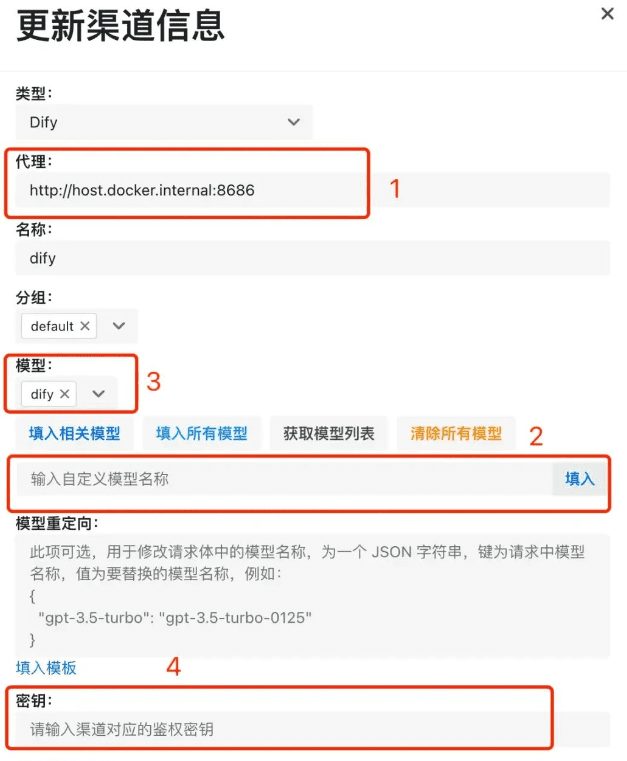

- After selecting the Dify type, enter the address of your Dify service. If New API and Dify are both deployed with Docker on the same machine, enter `http://host.docker.internal:<your port>` (the screenshot shows my own values, so don't copy them as-is). If you did not deploy with Docker, enter the Dify service's `http://<ip>:<port>` instead.

- Because we are connecting to Dify, model selection only requires entering a custom model name such as `dify`; be sure to click the "Fill in" button at the end of the field afterwards.

- Whether you entered a custom model or selected another model, it must appear in the area marked 3 in the screenshot; if it does not appear there, the model has not been configured.

- After submitting, a new entry appears in the channel list.
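
Once the channel exists, an OpenAI-style client should be able to reach Dify through New API simply by using the custom model name. Below is a minimal sketch using only Python's standard library. It assumes New API serves the usual OpenAI path `/v1/chat/completions` and that you have created an API token in the New API console; the helper names `build_chat_request` and `send` are hypothetical, and the address and token are placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, prompt: str,
                       model: str = "dify") -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at a New API instance."""
    body = {
        "model": model,  # must match the custom model name configured on the channel
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # token issued by New API (assumption)
        },
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 60) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

Usage might look like `send(build_chat_request("http://<ip>:<port>", "<your token>", "Hello"))`. Because the body is plain OpenAI format, pointing an existing OpenAI SDK or app at the same base URL with `model` set to `dify` should behave the same way.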

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
