Agents in Software Engineering: Survey, Landscape, and Vision

Original: https://arxiv.org/abs/2409.09030

Abstract

In recent years, Large Language Models (LLMs) have achieved remarkable success and have been widely used in a variety of downstream tasks, especially in the software engineering (SE) domain. We have found that the concept of agents is adopted, explicitly or implicitly, in many studies that combine LLMs with SE. However, there is no in-depth survey that traces the development lineage of existing work, analyzes how existing work combines LLM-based agent techniques to optimize various tasks, and clarifies the framework of LLM-based agents in SE. In this paper, we present the first survey of research on combining LLM-based agents with SE and propose a framework for LLM-based agents in SE that includes three key modules: perception, memory, and action. We also summarize the current challenges in combining these two domains and propose future opportunities to address them. We maintain a GitHub repository of related papers at: https://github.com/DeepSoftwareAnalytics/Awesome-Agent4SE.

1 Introduction

In recent years, Large Language Models (LLMs) have achieved remarkable success and have been widely used in many downstream tasks, especially in various tasks in the software engineering (SE) field (Zheng et al.), such as code summarization (Ahmed et al. 2024; Sun et al. 2023b; Haldar and Hockenmaier 2024; Mao et al. 2024; Guo et al. 2023; Wang et al. 2021), code generation (Jiang et al. 2023a; Hu et al. 2024b; Yang et al. 2023a; Tian and Chen 2023; Li et al. 2023e; Wang et al. 2024b), code translation (Pan et al. 2024), and vulnerability detection and repair (Zhou et al. 2024; Islam and Najafirad 2024; de Fitero-Dominguez et al. 2024; Le et al. 2024; Liu et al. 2024b; Chen et al. 2023a). The concept of agents has been brought over from the field of AI, explicitly or implicitly, into many studies that combine LLMs with SE. Explicit use means that a paper directly refers to the application of agent-related technologies, while implicit use means that the concept of agents is used but presented under different terminology or in some other form.

An agent (Wang et al. 2024c) is an intelligent entity capable of perceiving, reasoning, and executing actions. It serves as an important technological basis for accomplishing various tasks and goals: it senses the state of the environment and selects actions based on its goals and design to maximize specific performance metrics. LLM-based agents typically use an LLM as their cognitive core and excel in scenarios such as automation, intelligent control, and human-computer interaction, drawing on the strong capabilities of LLMs in language comprehension and generation, learning and reasoning, context awareness and memory, and multimodality. As various fields have developed, the concepts of traditional and LLM-based agents have gradually been clarified and are now widely used in natural language processing (NLP) (Xi et al. 2023). However, although existing work in SE uses this concept explicitly or implicitly, there is still no clear definition of agents in SE. There is also no in-depth survey that analyzes how existing work incorporates agent techniques to optimize various types of tasks, traces the development lineage of existing work, and clarifies the framework of agents in SE.

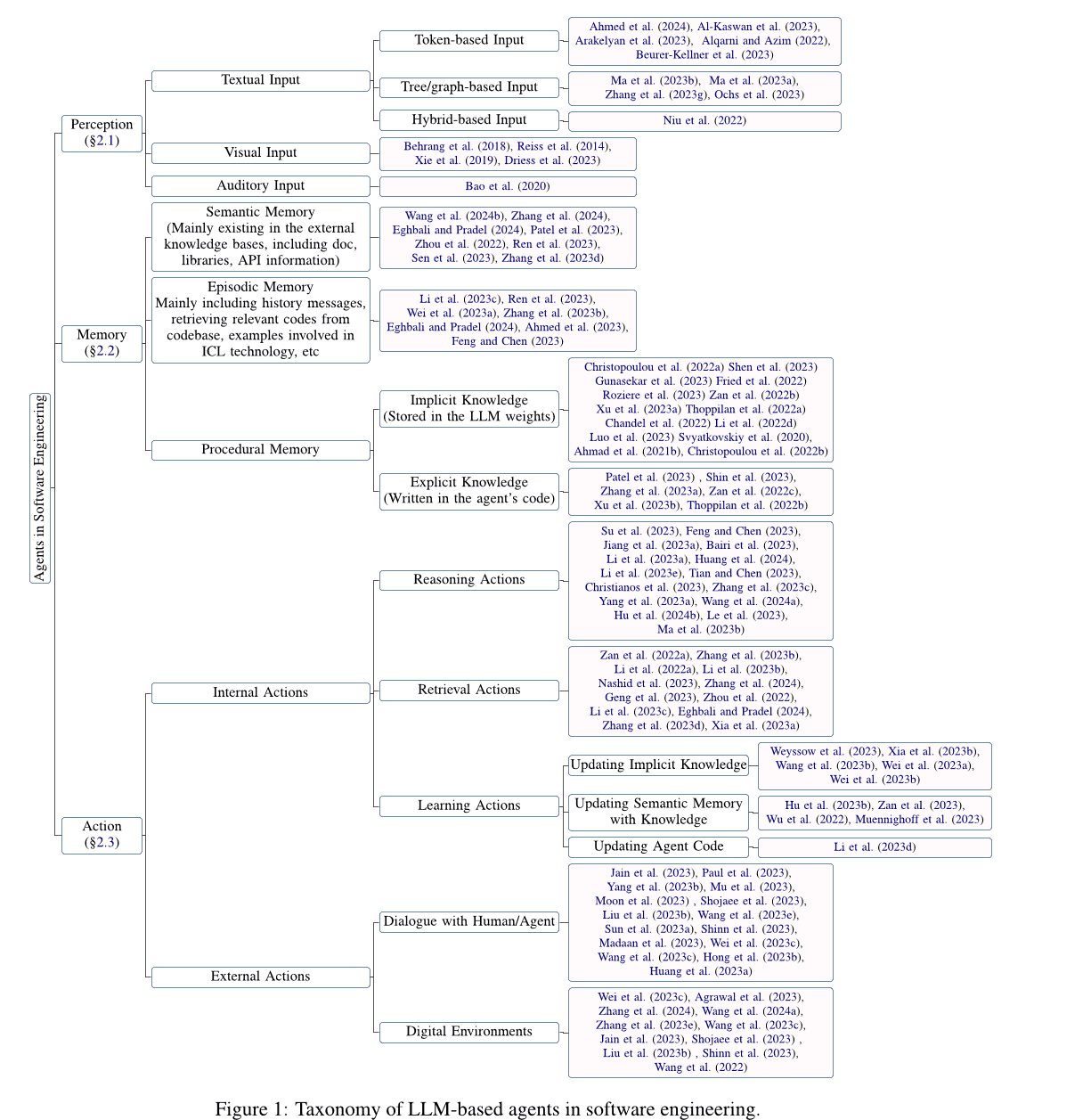

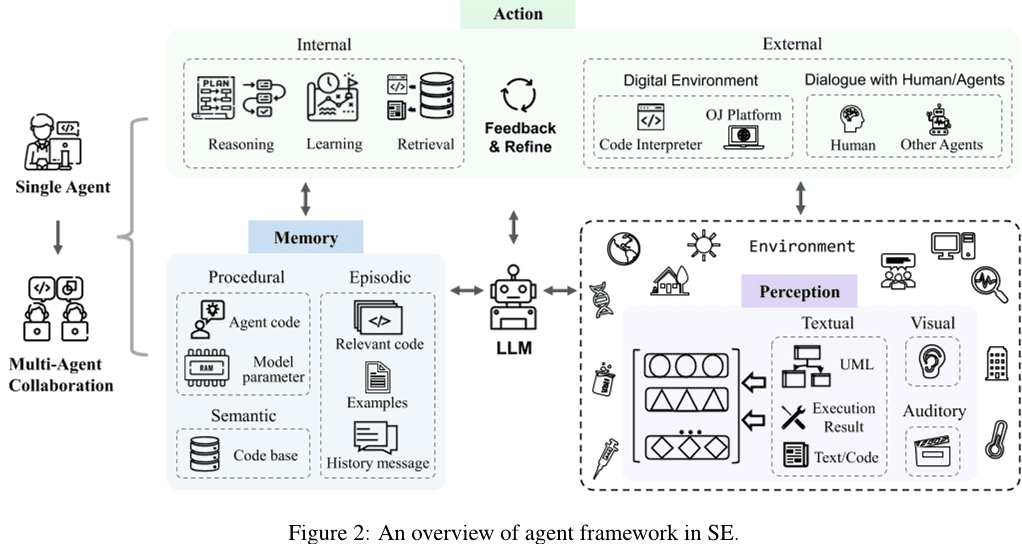

In this paper, we provide an in-depth analysis of research that combines Large Language Model (LLM)-based agents with software engineering (SE), summarize the current challenges in combining the two, and suggest opportunities for future research in response to these challenges. Specifically, we first collected papers on the application of LLM-based agent technology in SE and obtained 115 papers after filtering and quality assessment. Then, inspired by the traditional definition of agents (Wang et al. 2024c; Xi et al. 2023), we present a generalized conceptual framework for LLM-based agents in SE (see Section 2) comprising three key components: perception, memory, and action. We first introduce the perception module (see Section 2.1), which handles inputs of different modalities, such as textual, visual, and auditory input. Next, we introduce the memory module (see Section 2.2), which includes semantic memory, situational memory, and procedural memory to help the agent make reasoned decisions. Finally, we introduce the action module (see Section 2.3), which includes internal actions such as reasoning, retrieval, and learning, as well as external actions such as interacting with the environment.

We then detail the challenges and opportunities of LLM-based agents in SE (see Section 3). Specifically, we propose the following future research opportunities to address the current challenges of LLM-based agents in SE:

- Most existing studies explore only token-based text input in the perception module, while other modalities remain largely unexplored.

- Many new tasks lie outside the scope of what LLMs have learned, and complex tasks in the SE domain require agents with multiple capabilities. It is therefore critical to explore how LLM-based agents can take on new roles and effectively balance the capabilities of multiple roles.

- The SE domain lacks an authoritative, widely recognized knowledge base rich in code-related knowledge that could serve as a basis for external retrieval.

- Mitigating the hallucinations of LLM-based agents improves the agents' overall performance, and agent optimization in turn helps mitigate hallucination.

- Multi-agent collaboration requires significant computational resources and incurs additional communication overhead for synchronizing and sharing information. Techniques that improve the efficiency of multi-agent collaboration are therefore an opportunity for future work.

- Technologies in the SE field can also contribute to the agent field. Future work is needed to explore how to incorporate advanced SE technologies into agents and thereby advance both fields.

In addition, techniques in SE, especially code-related ones, can also advance the field of agents, demonstrating the mutually reinforcing relationship between these two very different fields. However, very little work has explored the application of SE techniques to agents, and research still focuses on simple, basic SE techniques such as function calls, HTTP requests, and other tools. Therefore, this paper focuses on agent-related work in the SE field, and Section 3.6 briefly discusses the application of SE techniques to agents as an opportunity for future research.

2 SE Agents Based on Large Language Models (LLMs)

After collating and analyzing the research obtained during data collection, we propose a framework for software engineering (SE) agents based on Large Language Models (LLMs). As shown in Fig. 2, a single agent contains three key modules: perception, memory, and action. Specifically, the perception module receives multimodal information from the external environment and converts it into an input form that the LLM can understand and process. The action module includes internal and external actions: internal actions reason and make decisions based on the inputs to the LLM, while external actions optimize those decisions based on feedback obtained from interaction with the external environment. The memory module includes semantic, situational, and procedural memory, which provide additional useful information to help the LLM make reasoned decisions. The action module can also update the different memories in the memory module through learning actions, providing more effective memory information for reasoning and retrieval. In addition, multi-agent collaboration involves multiple single agents, each responsible for part of a task, that cooperate to accomplish the whole task. This section presents the details of each module in the LLM-based SE agent framework.
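To make the module boundaries concrete, the following is a minimal Python sketch of a single agent under stated assumptions: the `llm` callable, the keyword-matching retrieval, and the environment callback are hypothetical stand-ins, not components of any surveyed system. It shows perception, the three memory stores, the internal actions (retrieval, reasoning, learning), and one external action per step. It requires Python 3.9 or later.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Memory:
    # Semantic memory: external knowledge, e.g. API name -> documentation text.
    semantic: dict[str, str] = field(default_factory=dict)
    # Situational memory: records of past observations and the feedback they received.
    situational: list[str] = field(default_factory=list)
    # Procedural memory (explicit part): the prompt template that drives the agent.
    procedural: str = "Solve the task step by step."


@dataclass
class Agent:
    llm: Callable[[str], str]  # hypothetical text-in/text-out model
    memory: Memory = field(default_factory=Memory)

    def perceive(self, raw_input: str) -> str:
        # Perception: text-only here; it just normalizes whitespace.
        return " ".join(raw_input.split())

    def retrieve(self, query: str) -> list[str]:
        # Internal retrieval action: naive keyword match over semantic memory.
        return [doc for key, doc in self.memory.semantic.items() if key in query]

    def reason(self, observation: str) -> str:
        # Internal reasoning action: build a prompt from all three memories, ask the LLM.
        context = "\n".join(self.retrieve(observation) + self.memory.situational[-3:])
        prompt = f"{self.memory.procedural}\nContext:\n{context}\nTask: {observation}"
        return self.llm(prompt)

    def learn(self, observation: str, feedback: str) -> None:
        # Internal learning action: store the outcome in situational memory.
        self.memory.situational.append(f"{observation} -> {feedback}")

    def step(self, raw_input: str, environment: Callable[[str], str]) -> str:
        observation = self.perceive(raw_input)
        action = self.reason(observation)
        feedback = environment(action)  # external action: act, then observe feedback
        self.learn(observation, feedback)
        return action
```

With an echoing model such as `Agent(llm=lambda p: p)`, each `step` returns the assembled prompt and appends one entry to situational memory. A multi-agent setup could wire several such agents together so that one agent's action becomes another agent's input.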

2.1 Perception

The perception module connects the LLM-based agent to the external environment and is central to processing external inputs. It processes inputs of different modalities, such as textual, visual, and auditory inputs, and converts them into an embedded format that the LLM agent can understand and process, laying the groundwork for the agent's reasoning and decision-making. Next, we present the details of the different input modalities in the perception module.

2.1.1 Text input

Unlike the text input format in Natural Language Processing (NLP), the text input format in SE takes the characteristics of code into account and comprises token-based inputs, tree/graph-based inputs, and hybrid inputs.

Token-based input. Token-based input (Ahmed et al. 2024; Al-Kaswan et al. 2023; Arakelyan et al. 2023; Beurer-Kellner et al. 2023; Alqarni and Azim 2022; Li et al. 2022b; Gu et al. 2022; Du et al. 2021) is the dominant input approach. It treats code directly as natural language text and feeds the token sequence directly to the LLM, ignoring the structural properties of code.
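As a rough illustration of token-based input, Python's standard `tokenize` module can flatten source code into a lexical token sequence. This is only an approximation: LLMs actually consume subword tokens produced by their own tokenizer (e.g. BPE), so the sketch shows the idea of treating code as a flat sequence, not any particular model's vocabulary.

```python
import io
import tokenize


def code_to_tokens(source: str) -> list[str]:
    """Flatten Python source into a token sequence, dropping layout-only tokens."""
    skip = {tokenize.NEWLINE, tokenize.NL, tokenize.INDENT, tokenize.DEDENT,
            tokenize.ENDMARKER, tokenize.COMMENT}
    tokens = tokenize.generate_tokens(io.StringIO(source).readline)
    return [tok.string for tok in tokens if tok.type not in skip]


print(code_to_tokens("def add(a, b):\n    return a + b\n"))
# ['def', 'add', '(', 'a', ',', 'b', ')', ':', 'return', 'a', '+', 'b']
```

Note that indentation, which carries block structure in Python, disappears from this sequence; that loss of structure is exactly what tree/graph-based inputs try to recover.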

Tree/graph-based input. In contrast to natural language, code follows strict structural and syntactic rules and is written according to the grammar of a particular programming language. Based on these characteristics, tree/graph-based input (Ma et al. 2023b,a; Zhang et al. 2023g; Ochs et al. 2023; Bi et al. 2024; Shi et al. 2023a, 2021; Wang and Li 2021) transforms code into tree structures such as abstract syntax trees, or graph structures such as control flow graphs, to model the structural information of code. However, current work on LLM-based SE agents has not explored this modality, which presents both challenges and opportunities.
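A tree-based view can be obtained with Python's standard `ast` module. The sketch below serializes an abstract syntax tree as a pre-order sequence of node-type names; this is one simple way to expose structure as model input, chosen here for illustration rather than prescribed by the cited works.

```python
import ast


def ast_node_sequence(source: str) -> list[str]:
    """Serialize the AST of Python source as a pre-order list of node type names."""
    sequence: list[str] = []

    def visit(node: ast.AST) -> None:
        sequence.append(type(node).__name__)    # record the parent first (pre-order)
        for child in ast.iter_child_nodes(node):
            visit(child)                        # then recurse into children in field order

    visit(ast.parse(source))
    return sequence


print(ast_node_sequence("x = a + 1"))
# ['Module', 'Assign', 'Name', 'Store', 'BinOp', 'Name', 'Load', 'Add', 'Constant']
```

Concatenating such a node sequence with the plain token sequence of the same snippet is one simple form of hybrid input.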

Hybrid input. Hybrid input (Niu et al. 2022; Hu et al. 2024a; Guo et al. 2022) combines multiple modalities to provide different types of information to the LLM. For example, combining token-based and tree-based inputs captures both the semantic and the structural information of code, leading to better modeling and understanding of the code. However, work on LLM-based agents in SE has not yet explored this modality either.

2.1.2 Visual input

Visual input uses image data, such as UI sketches or UML design diagrams, as model input and makes inferential decisions by modeling and analyzing the images. Many NLP works explore this modality. For example, Driess et al. (2023) proposed PaLM-E, an embodied multimodal language model whose inputs are multimodal sentences that interleave visual, continuous state-estimation, and textual input encodings. Traditional SE also has tasks with visual input, such as UI code search (Behrang et al. 2018; Reiss et al. 2014; Xie et al. 2019), which uses UI sketches as queries to find useful code snippets. However, little work has yet used the visual modality as LLM input.

2.1.3 Auditory input

Auditory input interacts with LLMs in the form of speech, using auditory data such as audio as input. Traditional SE also has tasks with auditory input, such as programming video search (Bao et al. 2020), which uses videos as a source of useful code snippets. However, work on auditory input for LLMs is likewise scarce.

2.2 Memory

The memory module includes semantic memory, situational memory, and procedural memory, which provide additional useful information to help the LLM make reasoned decisions. Next, we introduce each of these three types of memory in detail.

2.2.1 Semantic Memory

Semantic memory stores the recognized world knowledge of LLM agents, usually in the form of external knowledge repositories for retrieval, which include documents, libraries, APIs, or other knowledge. Many studies have explored the application of semantic memory (Wang et al. 2024b; Zhang et al. 2024; Eghbali and Pradel 2024; Patel et al. 2023; Zhou et al. 2022; Ren et al. 2023; Zhang et al. 2023d). Specifically, documentation and APIs are the most common information in external knowledge bases. For documentation, Zhou et al. (2022) proposed DocPrompting, a novel natural-language-to-code generation method that explicitly leverages documentation by retrieving document fragments relevant to the natural language intent. Zhang et al. (2024) constructed CODEAGENTBENCH, a manually curated benchmark for repository-level code generation that contains documentation, code dependencies, and runtime environment information. Ren et al. (2023) proposed KPC, a novel knowledge-driven, prompt-chaining-based code generation approach that uses fine-grained exception-handling knowledge extracted from API documentation to assist LLMs in code generation. For APIs, Eghbali and Pradel (2024) proposed De-Hallucinator, an LLM-based code completion technique that automatically identifies project-specific API references related to the code prefix and the model's initial predictions, and adds information about these references to the prompt. Zhang et al. (2023d) integrated an API search tool into the generation process, allowing the model to automatically select APIs and use the search tool to obtain suggestions. Some work has also addressed other information. For example, Patel et al. (2023) examined the capabilities and limitations of different LLMs when generating code based on context, and Wang et al. (2024b) fine-tuned selected code LLMs using augmented functions and their corresponding docstrings.

2.2.2 Situational memory

Situational memory records content relevant to the current case as well as experience gained from previous decision-making processes. Content related to the current case (e.g., relevant information retrieved from databases, or examples provided through in-context learning (ICL)) can provide additional knowledge for LLM reasoning, so many studies introduce this information into the LLM's reasoning process (Zhong et al. 2024; Li et al. 2023c; Feng and Chen 2023; Ahmed et al. 2023; Wei et al. 2023a; Ren et al. 2023; Zhang et al. 2023b; Eghbali and Pradel 2024; Shi et al. 2022). For example, Li et al. (2023c) proposed AceCoder, a new prompting technique that selects similar programs as examples in the prompt, supplying rich content (e.g., algorithms, APIs) related to the target code. Feng and Chen (2023) proposed AdbGPT, a lightweight approach requiring no training or hard-coding that automatically reproduces bugs from bug reports using in-context learning. Ahmed et al. (2023) found that including semantic facts helps LLMs improve performance on code summarization. Wei et al. (2023a) proposed Coeditor, a model that predicts edits to a code region based on recent changes to the same codebase in a multi-round automatic code editing setting. In addition, introducing experiential information such as interaction history can help LLM-based agents better understand the context and make correct decisions. Some work uses experience from past reasoning and decision-making to obtain more accurate answers by iteratively querying and revising answers. For example, Ren et al. (2023) proposed KPC, a knowledge-driven, prompt-chaining-based code generation approach that decomposes code generation into an AI chain with iterative check-rewrite steps. Zhang et al. (2023b) proposed RepoCoder, a simple, general, and effective framework that uses repository-level information for code completion through an iterative retrieval-generation pipeline. Eghbali and Pradel (2024) proposed De-Hallucinator, an LLM-based code completion technique that retrieves suitable API references by incrementally adding contextual information to the prompt and iteratively querying the model to make its predictions more accurate.
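The iterative retrieve-then-generate idea behind approaches such as RepoCoder can be sketched as follows. This is a simplified illustration, not RepoCoder's implementation: similarity is a toy Jaccard score over identifiers, and `generate` is a hypothetical model callable. The key step is that each draft completion is appended to the query for the next retrieval round, so experience from the previous round shapes what is retrieved next.

```python
import re
from typing import Callable


def identifiers(text: str) -> set[str]:
    # Crude lexical view of a snippet: the set of identifier-like words it contains.
    return set(re.findall(r"[A-Za-z_]\w*", text))


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def retrieve(query: str, snippets: list[str], k: int = 1) -> list[str]:
    q = identifiers(query)
    return sorted(snippets, key=lambda s: jaccard(q, identifiers(s)), reverse=True)[:k]


def iterative_complete(prefix: str, snippets: list[str],
                       generate: Callable[[str], str], rounds: int = 2) -> str:
    """Alternate retrieval and generation; each draft enriches the next query."""
    query, completion = prefix, ""
    for _ in range(rounds):
        context = "\n".join(retrieve(query, snippets))
        completion = generate(f"{context}\n{prefix}")  # retrieved context + current case
        query = prefix + completion                    # draft informs the next retrieval
    return completion
```

In practice `snippets` would be sliding windows over repository files and `generate` a code LLM; two rounds are used here only as a small default.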

2.2.3 Procedural memory

The procedural memory of an agent in software engineering consists of implicit knowledge stored in the Large Language Model (LLM) weights and explicit knowledge written in the agent's code.

Implicit knowledge is stored in the parameters of the LLM. Existing studies usually propose new LLMs with rich implicit knowledge for various downstream tasks by training models on large amounts of data. Zheng et al. (2023) organized the code LLMs in the SE domain by affiliation type (companies, universities, research teams and open-source communities, and individuals and anonymous contributors).

Explicit knowledge is written into the agent's code to enable the agent to run automatically. Several works (Patel et al. 2023; Shin et al. 2023; Zhang et al. 2023a) explored different ways of constructing agent code. Specifically, Patel et al. (2023) used three types of contextual supervision to specify library functions: demonstrations, descriptions, and implementations. Shin et al. (2023) compared three prompt engineering techniques (basic prompting, in-context learning, and task-specific prompting) against fine-tuned LLMs on three typical automated software engineering (ASE) tasks. Zhang et al. (2023a) explored how different prompt designs (basic prompts, prompts with supporting information, and chain-of-thought prompts) affect ChatGPT's performance on software vulnerability detection.
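Because explicit procedural knowledge lives in the agent's code, switching prompt designs is just a code change. The sketch below builds three prompt variants loosely modeled on the designs compared by Zhang et al. (2023a) for vulnerability detection; the exact wording of each template is a hypothetical example, not the prompts used in that study.

```python
def build_prompt(code: str, style: str = "basic", info: str = "") -> str:
    """Compose a vulnerability-detection prompt in one of three illustrative styles."""
    question = "Is the following code vulnerable? Answer yes or no."
    if style == "basic":    # basic prompt: question + code
        return f"{question}\n{code}"
    if style == "info":     # prompt with supporting information
        return f"{question}\nSupporting information: {info}\n{code}"
    if style == "cot":      # chain-of-thought prompt
        return (f"{question}\n{code}\n"
                "Think step by step: first describe what the code does, "
                "then look for unsafe operations, then give the answer.")
    raise ValueError(f"unknown prompt style: {style!r}")


snippet = "strcpy(buf, user_input);"
for style in ("basic", "info", "cot"):
    print(build_prompt(snippet, style, info="buf has 16 bytes"))
    print("---")
```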

2.3 Action

The action module comprises two types of actions: internal and external. External actions interact with the external environment to obtain feedback, including conversations with humans/agents and interactions with the digital environment. Internal actions reason and make decisions based on the inputs to the LLM and refine those decisions based on the feedback obtained; they include reasoning, retrieval, and learning actions. Next, we describe each action in detail.

2.3.1 Internal actions

Internal actions include reasoning, retrieval, and learning actions. Specifically, the reasoning action analyzes the problem, reasons, and makes decisions based on the inputs to the LLM agent. Retrieval actions retrieve relevant information from the knowledge base to help the reasoning action make correct decisions. Learning actions continuously acquire and update knowledge by updating semantic, procedural, and situational memory, thereby improving the quality and efficiency of reasoning and decision-making.

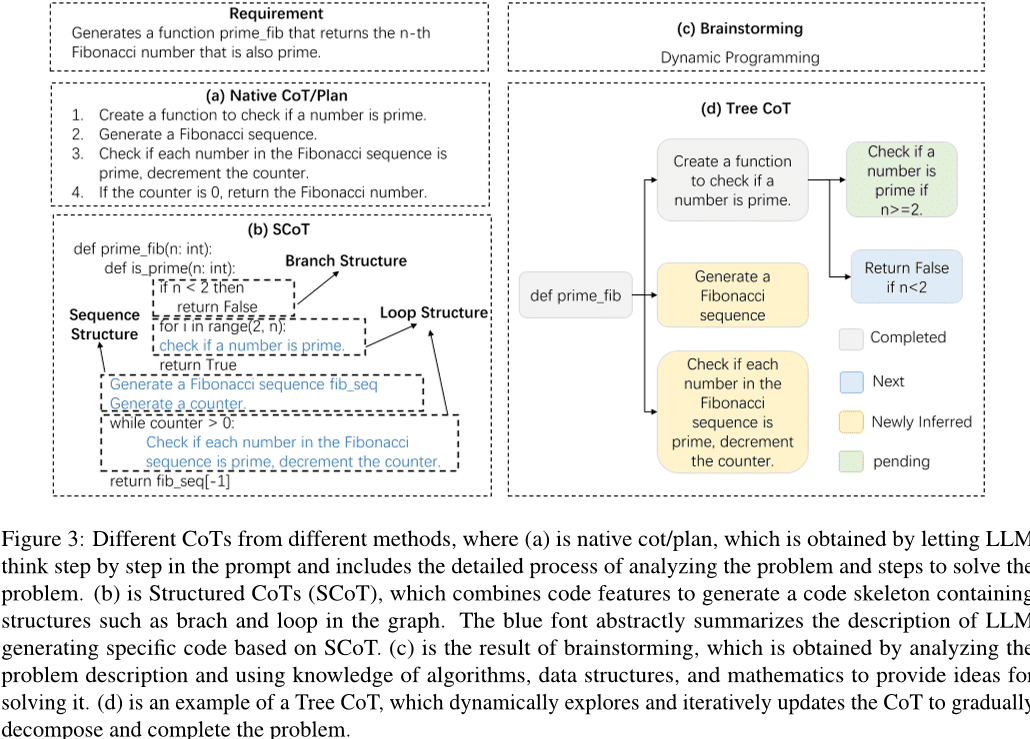

Reasoning actions. A rigorous reasoning process is key for LLM agents to accomplish their tasks, and Chain-of-Thought (CoT) is an effective reasoning method. With CoT, LLMs can understand problems deeply, decompose complex tasks, and generate high-quality answers. As shown in Fig. 3, existing research has explored different forms of CoT, including simple CoT/planning, structured CoT (SCoT), brainstorming, and tree CoT. Specifically, a simple CoT/plan is a paragraph in the prompt that describes the reasoning process for the problem. In early work, a simple sentence was included in the prompt to guide the LLM to generate a chain of thought for better problem solving. For example, Hu et al. (2024b) proposed an in-context learning approach that guides LLMs to debug using the "print debugging" method. As LLM technology has developed, CoT designs have become more complex. Inspired by how developers verify the feasibility of test scenarios, Su et al. (2023) designed Chain-of-Thought (CoT) reasoning to elicit human-like knowledge and logical reasoning from LLMs. Le et al. (2023) proposed CodeChain, a new framework that generates a chain of self-revisions guided by representative sub-modules generated in previous iterations. Huang et al. (2024) proposed CodeCoT, which generates test cases to check the code for syntax errors during execution and then integrates chain-of-thought reasoning with the self-checking process of code generation through a self-examination phase. Tian and Chen (2023) presented a novel prompting technique with carefully designed thought-eliciting prompts and prompt-based feedback, and explored for the first time how such prompting improves the code generation performance of LLMs.

Considering the characteristics of code, some studies have proposed structured CoT to introduce the structural information of code. As shown in Fig. 3(b), structured CoT presents the reasoning process in a pseudo-code-like form involving loops, branches, and other structures. For example, Li et al. (2023a) proposed Structured CoTs (SCoTs), which effectively use the rich structural information in source code, together with SCoT prompting, a new prompting technique for code generation. Christianos et al. (2023) proposed a general framework that uses the construction of intrinsic and extrinsic functions to improve understanding of reasoning structures and incorporates structured reasoning into the policies of AI agents. In addition, several studies have proposed other forms of CoT, such as brainstorming and tree CoT, as shown in Fig. 3(c) and (d). Brainstorming generates relevant keywords from the input. For example, Li et al. (2023e) proposed a novel brainstorming framework for code generation that uses a brainstorming step to generate and select diverse ideas, facilitating algorithmic reasoning before code is generated. Tree CoT, proposed by Feng and Chen (2023), dynamically explores and updates the CoT, with tree nodes in various states including completed, new, newly derived, and pending.
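Tree-shaped reasoning can be viewed as a search over partial chains of thought. The following is a generic best-first search sketch, not the algorithm of any cited work: `expand` and `score` are hypothetical stand-ins for LLM calls that propose and rate next steps. Pending nodes live on the frontier, newly derived nodes are the children pushed onto it, and the returned node is the first completed chain.

```python
import heapq
from typing import Callable, Optional


def tree_of_thought(root: str,
                    expand: Callable[[str], list[str]],
                    score: Callable[[str], float],
                    is_complete: Callable[[str], bool],
                    budget: int = 50) -> Optional[str]:
    """Best-first search over partial reasoning chains (illustrative)."""
    frontier = [(-score(root), 0, root)]  # pending nodes; heapq pops the smallest key
    counter = 1                           # tie-breaker so chains are never compared
    while frontier and budget > 0:
        _, _, node = heapq.heappop(frontier)
        budget -= 1
        if is_complete(node):
            return node                   # completed node: a full chain of thought
        for child in expand(node):        # newly derived nodes
            heapq.heappush(frontier, (-score(child), counter, child))
            counter += 1
    return None                           # budget exhausted without a complete chain


# Toy problem: reach 10 from 1, each thought applying "+1" or "*2" to the last value.
def last(chain: str) -> int:
    return int(chain.split(",")[-1])


result = tree_of_thought(
    "1",
    expand=lambda c: [f"{c},{last(c) + 1}", f"{c},{last(c) * 2}"] if last(c) < 10 else [],
    score=lambda c: -abs(10 - last(c)) - 0.1 * c.count(","),  # closer to 10, shorter chain
    is_complete=lambda c: last(c) == 10,
)
print(result)  # 1,2,4,8,9,10
```

The `budget` bounds the number of expansions, which in an LLM setting bounds the number of model calls.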

In addition, several studies have explored other techniques to improve the reasoning ability and efficiency of LLM-based agents. For example, Wang et al. (2024a) proposed TOOLGEN, which consists of a trigger insertion and model fine-tuning phase (offline) and a tool-integrated code generation phase (online). TOOLGEN augments a given code corpus by inferring the positions at which autocompletion tools should be triggered and marking them with a special token. Yang et al. (2023a) devised COTTON, a new method that uses a lightweight language model to automatically generate CoTs for code generation. Zhang et al. (2023c) proposed self-speculative decoding, a novel inference scheme that first generates draft tokens and then verifies them with the original LLM in a single forward pass. Zhou et al. (2023) introduced an adaptive solving framework that adjusts its solving strategy to the difficulty of the problem, improving both computational efficiency and overall performance.
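The verification step of speculative decoding can be illustrated for greedy decoding. In this sketch, `target_next` is a hypothetical callable standing in for one forward pass of the original model over the prefix plus the draft, returning its greedy next-token prediction at every position; how the draft is produced (in self-speculative decoding, by the same model skipping some layers) is not shown. Accepting the longest matching prefix and then emitting the target's own token yields exactly what greedy decoding with the original model would produce.

```python
from typing import Callable, Sequence


def verify_draft(prefix: list[int], draft: Sequence[int],
                 target_next: Callable[[list[int]], list[int]]) -> list[int]:
    """Greedy draft verification; returns the tokens to append to `prefix`.

    target_next(seq)[j] is the target model's greedy prediction for the token
    that follows seq[: j + 1]; one call corresponds to one forward pass.
    Assumes `prefix` is non-empty.
    """
    preds = target_next(prefix + list(draft))  # single pass over prefix + draft
    accepted: list[int] = []
    for i, token in enumerate(draft):
        expected = preds[len(prefix) + i - 1]  # target's choice at this draft position
        if token != expected:
            accepted.append(expected)          # first mismatch: take the target's token, stop
            return accepted
        accepted.append(token)                 # match: keep the draft token
    accepted.append(preds[-1])                 # whole draft accepted: one bonus token
    return accepted


# Toy target model: the next token is always the previous token plus one.
def toy(seq: list[int]) -> list[int]:
    return [t + 1 for t in seq]


print(verify_draft([1, 2], [3, 4, 9], toy))  # [3, 4, 5]
print(verify_draft([1, 2], [3, 4], toy))     # [3, 4, 5]
```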

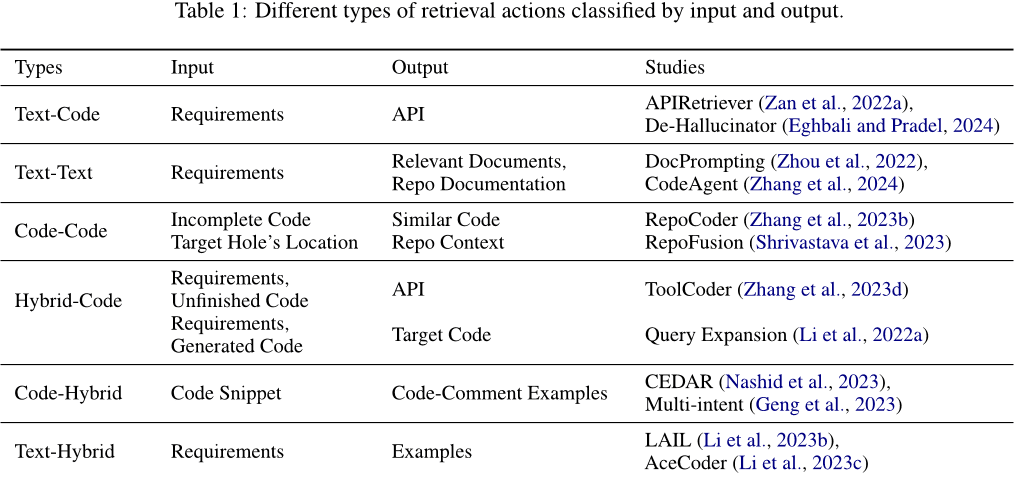

Retrieval actions. Retrieval actions retrieve relevant information from the knowledge base to help reasoning actions make correct decisions. The inputs used for retrieval and the types of output obtained vary. As shown in Table 1, inputs and outputs can be text, code, or hybrid information containing both text and code. They can be categorized as follows. (1) Text-Code. Requirements are usually used as inputs to retrieve relevant code or APIs, which are added to the prompt to generate the response code. For example, Zan et al. (2022a) proposed a new framework with APIRetriever and APICoder modules: APIRetriever retrieves useful APIs, and APICoder then uses them to generate code. De-Hallucinator (Eghbali and Pradel 2024) retrieves suitable API references and adds them to the prompt to iteratively query the model. (2) Text-Text. Requirements are also used as input to retrieve relevant documents or similar questions that help accomplish the task. For example, Zhou et al. (2022) introduced DocPrompting, a natural-language-to-code generation method that explicitly leverages documentation by retrieving document fragments relevant to a given NL intent. Zhang et al. (2024) proposed CodeAgent, a novel LLM-based agent framework for repository-level code generation that integrates external tools to retrieve relevant information and supports interaction with software artifacts for information retrieval, code symbol navigation, and code testing. (3) Code-Code. Code can also be used as input to retrieve similar or related code as a reference for generating the target code. For example, Zhang et al. (2023b) proposed RepoCoder, a simple, general, and effective framework that retrieves repository-level information based on similarity through an iterative retrieval-generation process. (4) Hybrid-Code. Instead of a single type of information (text or code), multiple types can be combined into a hybrid query to improve retrieval accuracy. For example, Li et al. (2022a) leveraged a powerful code generation model by augmenting a documentation query with code snippets produced by the generation model (its generated counterparts) and then using the augmented query to retrieve code. ToolCoder (Zhang et al. 2023d) uses online search engines and documentation search tools to obtain suitable API recommendations that aid API selection and code generation. Likewise, retrieval outputs are not limited to a single type of information. (5) Code-Hybrid. Code is used as input to retrieve various kinds of related information. For example, Nashid et al. (2023) proposed CEDAR, a new prompt-creation technique that automatically retrieves code demonstrations relevant to the developer's task based on embedding or frequency analysis. Geng et al. (2023) used an in-context learning paradigm to generate multi-intent comments for code by selecting diverse code-comment examples from an example pool. (6) Text-Hybrid. Requirements are used as input to retrieve relevant code and similar problems for reference. For example, Li et al. (2023b) proposed LAIL (LLM-Aware In-context Learning), a new learning-based method for selecting examples for code generation. Li et al. (2023c) introduced AceCoder, which retrieves similar programs based on the requirement and uses them as examples in the prompt to provide rich relevant content (e.g., algorithms, APIs).

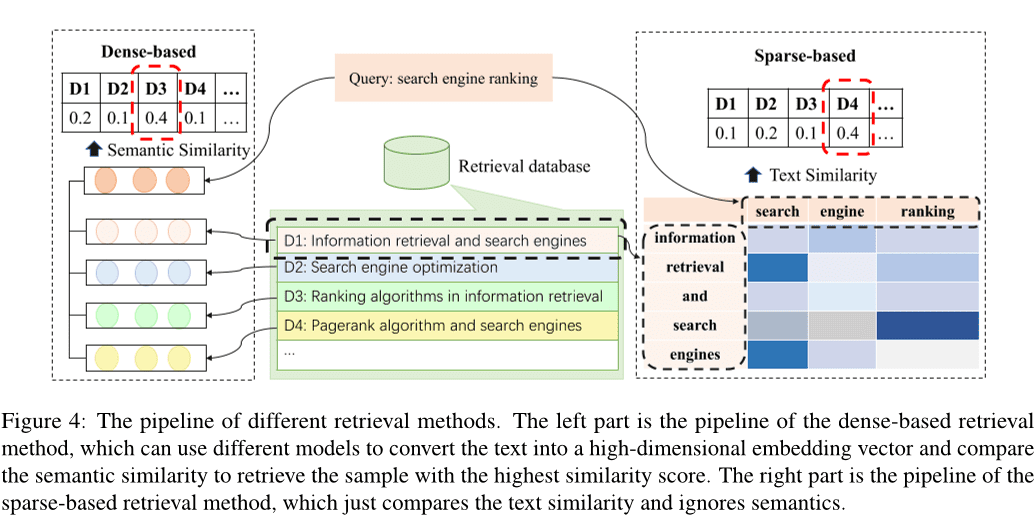

According to previous studies (Li et al. 2022c; Zhao et al. 2024; Hu et al. 2023a), existing retrieval methods can be categorized into sparse retrieval, dense retrieval (Wang et al. 2024e), and other methods (Hayati et al. 2018; Zhang et al. 2020; Poesia et al. 2022; Ye et al. 2021; Shu et al. 2022). Fig. 4 shows the pipelines for sparse and dense retrieval. Dense retrieval transforms the input into a high-dimensional vector and selects the k samples with the highest semantic similarity, while sparse retrieval computes metrics such as BM25 or TF-IDF to assess the textual similarity between samples. Various alternative retrieval methods have also been explored. For example, some studies compute the edit distance between natural language texts (Hayati et al. 2018) or between the abstract syntax trees (ASTs) of code fragments (Zhang et al. 2020; Poesia et al. 2022). Other methods use knowledge graphs for retrieval (Ye et al. 2021; Shu et al. 2022).
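A compact sparse retriever can be written directly from the standard Okapi BM25 formula. This sketch assumes pre-tokenized documents, the common defaults k1 = 1.5 and b = 0.75, and the Lucene-style IDF with a +1 inside the logarithm (which keeps weights positive); a real system would use an inverted index rather than scoring every document.

```python
import math
from collections import Counter


def bm25_scores(query: list[str], docs: list[list[str]],
                k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score every tokenized document against the query with Okapi BM25."""
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n
    df = Counter(term for d in docs for term in set(d))  # document frequency
    scores = []
    for d in docs:
        tf = Counter(d)  # term frequency within this document
        s = 0.0
        for term in query:
            if tf[term] == 0:
                continue
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1)
            norm = tf[term] + k1 * (1 - b + b * len(d) / avgdl)  # length normalization
            s += idf * tf[term] * (k1 + 1) / norm
        scores.append(s)
    return scores


def bm25_top_k(query: list[str], docs: list[list[str]], k: int = 1) -> list[int]:
    """Return the indices of the k highest-scoring documents."""
    scores = bm25_scores(query, docs)
    return sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)[:k]
```

For code, the tokenization step matters as much as the formula; splitting identifiers such as `readFile` into subwords is a common, but here unimplemented, refinement.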

Dense and sparse retrieval are the two dominant retrieval methods. Dense retrieval usually performs better than sparse retrieval. However, sparse retrieval tends to be more efficient and can achieve performance comparable to dense retrieval in some cases, so many studies choose sparse retrieval for efficiency reasons.
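For contrast with sparse scoring, the dense pipeline (encode the query and every candidate as vectors, then rank by cosine similarity) is sketched below. The `embed` function is a hypothetical placeholder, a hashed bag-of-words vector with no learned semantics; a real dense retriever would substitute a trained neural encoder, and running that encoder over the corpus is a large part of its extra cost.

```python
import math
import zlib


def embed(text: str, dim: int = 256) -> list[float]:
    """Placeholder encoder: hashed bag-of-words. A real system uses a learned model."""
    vec = [0.0] * dim
    for word in text.lower().split():
        vec[zlib.crc32(word.encode()) % dim] += 1.0  # deterministic bucket per word
    return vec


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def dense_top_k(query: str, corpus: list[str], k: int = 1) -> list[int]:
    index = [embed(doc) for doc in corpus]  # in practice precomputed once and stored
    q = embed(query)
    ranked = sorted(range(len(corpus)), key=lambda i: cosine(q, index[i]), reverse=True)
    return ranked[:k]
```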

Learning actions. Learning actions continuously acquire and update knowledge by updating semantic and procedural memory, improving the quality and efficiency of reasoning and decision-making. (1) Updating semantic memory with knowledge. Semantic memory resides mainly in the knowledge base, which stores underlying world knowledge; it can be updated by adding recognized code knowledge to an existing knowledge base or by constructing a new one. For example, Liao et al. (2023) proposed A3-CodGen, a novel code generation framework that generates higher-quality code by using three types of information obtained from the retrieved library: local-aware information from the current code file, global-aware information from other code files, and third-party library information. Du et al. (2024) proposed Vul-RAG, a novel LLM-based vulnerability detection technique that uses an LLM to extract multi-dimensional knowledge from existing CVE instances and build a vulnerability knowledge base. (2) Updating implicit knowledge. Since implicit knowledge is stored in the parameters of the LLM, it can be updated by fine-tuning the model. Earlier work typically constructs new data to fine-tune pre-trained models with supervision, updating all model parameters (Xia et al. 2023b; Wei et al. 2023a,b; Tao et al. 2024; Wang et al. 2024d; Liu et al. 2023a; Wang et al. 2023d; Shi et al. 2023b). However, the cost of fine-tuning grows as the parameter scale increases, so some work has explored parameter-efficient fine-tuning (PEFT) techniques (Weyssow et al. 2023; Shi et al. 2023c). For example, Weyssow et al. (2023) conducted a comprehensive study of PEFT techniques for LLMs in automated code generation scenarios. Wang et al. (2023b) inserted an adapter, a parameter-efficient fine-tuning structure, and fine-tuned it instead of the full pre-trained model. Much current work uses parameter-efficient techniques to fine-tune models (Guo et al. 2024; Shi et al. 2023d).
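A bottleneck adapter of the kind used in adapter-based PEFT can be sketched in plain Python. This follows the common down-project, nonlinearity, up-project, residual pattern; biases are omitted, and the dimensions in the example are hypothetical, not those of any cited model. Only the adapter's two small matrices are trained while the surrounding pre-trained weights stay frozen, which is why the trainable parameter count is far smaller than for full fine-tuning.

```python
def adapter_forward(h: list[float], w_down: list[list[float]],
                    w_up: list[list[float]]) -> list[float]:
    """Bottleneck adapter: h + W_up @ relu(W_down @ h) (biases omitted).

    w_down has shape (m, d) and w_up has shape (d, m), with bottleneck m << d.
    """
    z = [max(0.0, sum(w * x for w, x in zip(row, h))) for row in w_down]  # d -> m, ReLU
    up = [sum(w * zi for w, zi in zip(row, z)) for row in w_up]           # m -> d
    return [x + u for x, u in zip(h, up)]                                 # residual connection


def adapter_param_count(d: int, m: int) -> int:
    return 2 * d * m  # one (m, d) matrix plus one (d, m) matrix


d, m = 4096, 64  # hypothetical hidden size and bottleneck size
print(adapter_param_count(d, m))  # 524288 trainable weights per adapter
print(d * d)                      # 16777216 weights in a single full d x d matrix
```

Initializing `w_up` near zero makes the adapter start close to an identity mapping, so the pre-trained model's behavior is preserved at the start of fine-tuning.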

(3) Updating agent code. Agent code is the program or algorithm an agent runs to guide its behavior and decision-making. LLM-based agents construct appropriate prompts as agent code that specify how to perceive the environment, reason and make decisions, and perform operations. Much work uses instruction tuning to align the LLM's output with input instructions. For example, Muennighoff et al. (2023) leveraged the natural structure of Git commits, which pair code changes with human-written instructions, and used these pairs for instruction tuning. Hu et al. (2023b) constructed InstructCoder, the first instruction-tuning dataset designed to adapt LLMs to general-purpose code editing. Such high-quality data can bring new knowledge to LLMs and update their semantic memory. Zan et al. (2023) conducted extensive experiments on StarCoder with eight popular programming languages to explore whether programming languages can enhance each other through instruction tuning.
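The idea of turning commits into instruction-tuning data can be sketched as follows. The record layout (`instruction`/`input`/`output`) and the choice of the commit subject line as the instruction are assumptions for illustration; they do not reproduce the filtering or formatting used by Muennighoff et al. (2023).

```python
import json


def commit_to_instruction(message: str, old_code: str, new_code: str) -> dict[str, str]:
    """Turn one commit into an instruction-tuning record (hypothetical format)."""
    subject = message.strip().splitlines()[0]  # commit subject line used as the instruction
    return {"instruction": subject, "input": old_code, "output": new_code}


record = commit_to_instruction(
    "Handle empty list in mean()\n\nPreviously raised ZeroDivisionError.",
    "def mean(xs):\n    return sum(xs) / len(xs)\n",
    "def mean(xs):\n    return sum(xs) / len(xs) if xs else 0.0\n",
)
print(json.dumps(record, indent=2))
```

Real commit histories are noisy (messages such as "fix" or "wip"), so any practical pipeline would add filtering before records like this are used for training.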

2.3.2 External action

Conversations with Humans/Agents. Agents can interact with humans or other agents and receive rich feedback during the interaction, which expands the agent's knowledge and makes the LLM's answers more accurate. Many works use LLMs as interacting agents, e.g., Lu et al. (2024); Jain et al. (2023); Paul et al. (2023); Shojaee et al. (2023); Liu et al. (2023b); Wang et al. (2023e); Mu et al. (2023); Madaan et al. (2023). Jain et al. (2023) proposed RLCF, which uses feedback from other LLMs that compare the generated code with reference code, and uses this feedback to further train a pre-trained LLM through reinforcement learning. REFINER Paul et al. (2023) is a framework in which a model interacts with a critic model that provides automatic feedback. Yang et al. (2023b) examined how LLMs benefit from discriminative models. Moon et al. (2023) constructed a new dataset designed specifically for code repair and used it to obtain a model that automatically generates useful feedback through Preference-Optimized Tuning and Selection. PPOCoder Shojaee et al. (2023) consists of two components, an actor and a critic, and is optimized with PPO through the interaction between these two models. RLTF Liu et al. (2023b) uses real data together with online cached data generated through interaction with the compiler to compute losses, and updates model weights through gradient feedback. Wang et al. (2023e) proposed ChatCoder, which refines requirements by chatting with an LLM. Sun et al. (2023a) proposed Clover, a tool that checks the consistency among code, docstrings, and formal annotations. ClarifyGPT Mu et al. (2023) prompts another LLM to generate targeted clarifying questions that refine ambiguous user requirements. Reflexion Shinn et al. (2023) can interact with humans and other agents to obtain external feedback. Self-Refine Madaan et al. (2023) uses a single LLM as generator, refiner, and feedback provider, without any supervised training data, additional training, or reinforcement learning. Repilot Wei et al. (2023c) synthesizes candidate patches through the interaction between an LLM and a completion engine; specifically, Repilot uses the completion engine to prune infeasible tokens from those suggested by the LLM. Wang et al. (2023c) introduced MINT, a benchmark that evaluates how well LLMs solve multi-turn interactive tasks using natural-language feedback from users simulated by GPT-4. Hong et al. (2023b) proposed MetaGPT, a meta-programming framework that incorporates efficient human workflows into LLM-based multi-agent collaboration. Huang et al. (2023a) introduced AgentCoder, a code generation solution built on a multi-agent framework with specialized agents: a programmer agent, a test designer agent, and a test executor agent.
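Several of these works share a generate, critique, refine loop; Self-Refine is the most direct case, since one LLM plays all three roles. The sketch below shows that loop under stated assumptions: `llm` is a hypothetical prompt-to-text function standing in for any model client, and the prompt wording, the `STOP` convention, and the iteration cap are illustrative choices, not the prompts used by Madaan et al. (2023).

```python
from typing import Callable

# Hypothetical model interface: takes a prompt, returns a completion.
LLM = Callable[[str], str]


def self_refine(llm: LLM, task: str, max_iters: int = 3) -> str:
    """Generate a solution, then alternate feedback and revision with the same model."""
    answer = llm(f"Task: {task}\nWrite a solution.")
    for _ in range(max_iters):
        # The same model acts as feedback provider.
        feedback = llm(
            f"Task: {task}\nSolution:\n{answer}\n"
            "Give concrete feedback, or reply STOP if no changes are needed."
        )
        if feedback.strip() == "STOP":  # stopping convention is an assumption
            break
        # ...and as refiner, conditioned on its own feedback.
        answer = llm(
            f"Task: {task}\nSolution:\n{answer}\nFeedback:\n{feedback}\n"
            "Rewrite the solution to address the feedback."
        )
    return answer
```

Replacing the feedback call with a second, differently prompted model turns the same loop into the two-agent critic setups described above; the iteration cap bounds cost when the model never signals that it is done.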

Digital Environment. Agents can interact with digital systems such as online judge (OJ) platforms, web pages, compilers, and other external tools, and the information gained during the interaction can serve as feedback for self-optimization. Compilers are the most common external tool, e.g., Jain et al. (2023); Shojaee et al. (2023); Liu et al. (2023b); Wang et al. (2022); Zhang et al. (2023e). For example, RLCF Jain et al. (2023) trains a pre-trained LLM with compiler-derived feedback to check that the code it generates passes a set of correctness checks. PPOCoder Shojaee et al. (2023) incorporates compiler feedback and structural alignment as additional knowledge for model optimization, fine-tuning the code generation model through deep reinforcement learning (RL). RLTF Liu et al. (2023b) interacts with the compiler to generate training data pairs and stores them in an online cache. Wang et al. (2022) proposed COMPCODER, which uses compiler feedback for compilable code generation. Zhang et al. (2023e) proposed Self-Edit, a generate-and-edit approach that improves code quality in competitive programming tasks by using the execution results of LLM-generated code. In addition, many works build tools such as search engines and completion engines to extend the capabilities of agents, e.g., Wang et al. (2024a); Zhang et al. (2024); Agrawal et al. (2023); Wei et al. (2023c); Zhang et al. (2023f). Wang et al. (2024a) introduced TOOLGEN, a method that integrates auto-completion tools into the generation process of code LLMs to address dependency errors such as undefined variables and missing members. Zhang et al. (2024) introduced CodeAgent, a novel LLM agent framework that integrates five programming tools, enabling interaction with software documentation for information retrieval, code symbol navigation, and code testing for effective repository-level code generation. Agrawal et al. (2023) proposed MGD, a monitor-guided decoding method in which a monitor uses static analysis to guide decoding. Repilot Wei et al. (2023c) synthesizes candidate patches through the interaction between an LLM and a completion engine; specifically, Repilot completes tokens based on the suggestions provided by the completion engine.
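Most of the compiler-feedback methods above reduce an execution outcome either to a scalar signal for RL or to an error message fed back into the next prompt. The sketch below does both for Python candidates. The tiered reward values are illustrative assumptions, not the exact rewards of RLCF, PPOCoder, or RLTF, and `execution_feedback` is a hypothetical helper name. Running model output with `exec` is only acceptable inside a sandbox.

```python
from typing import List, Tuple


def execution_feedback(code: str, tests: List[str]) -> Tuple[float, str]:
    """Compile and test a candidate Python snippet; return (reward, feedback message).

    Reward tiers are illustrative assumptions:
      -1.0 syntax/compile error, -0.6 runtime error,
      -0.3 a unit test fails,    +1.0 all tests pass.
    WARNING: exec() runs arbitrary code; sandbox real model output.
    """
    # Stage 1: compilation, the signal that compiler-feedback methods rely on.
    try:
        compiled = compile(code, "<candidate>", "exec")
    except SyntaxError as e:
        return -1.0, f"SyntaxError: {e.msg} (line {e.lineno})"

    # Stage 2: load the definitions into a fresh namespace.
    namespace: dict = {}
    try:
        exec(compiled, namespace)
    except Exception as e:
        return -0.6, f"{type(e).__name__} while loading: {e}"

    # Stage 3: run each assertion-style test against the loaded code.
    for test in tests:
        try:
            exec(test, namespace)
        except AssertionError:
            return -0.3, f"Failed test: {test}"
        except Exception as e:
            return -0.6, f"{type(e).__name__} in test {test!r}: {e}"
    return 1.0, "All tests passed."
```

The reward can drive an RL update, while the message is what a Self-Edit-style editor would append to its prompt before generating a revised candidate.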

3 Challenges and Opportunities

Our analysis of work on large language model (LLM)-based agents in software engineering shows that the integration of these two domains still faces many challenges that limit its development. In this section, we discuss these challenges in detail and, based on this analysis, outline opportunities for future work.

3.1 Insufficient exploration of perceptual modules

As described in Section 2.1, the perception modules of LLM-based agents in software engineering remain insufficiently explored. Unlike natural language, code is a special kind of representation: it can be viewed as ordinary text or converted into code-specific intermediate representations such as Abstract Syntax Trees (ASTs) and Control Flow Graphs (CFGs). Existing work, such as Ahmed et al. (2024); Al-Kaswan et al. (2023); Arakelyan et al. (2023); Beurer-Kellner et al. (2023); Alqarni and Azim (2022), usually treats code as text, and research on tree- or graph-based input modalities for LLM-based agents in software engineering is still scarce. Visual and auditory input modalities also remain underexplored.
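To illustrate the difference between the two input modalities, the sketch below uses Python's standard `ast` module: the same snippet is seen either as a flat token sequence or as a syntax tree whose node types make structure (branches, comparisons, returns) explicit. The snippet and function names are hypothetical, and how an agent would actually consume such a tree (linearized, encoded as a graph, or otherwise) is left open.

```python
import ast
from typing import List

SNIPPET = """
def clamp_sum(a, b, limit):
    total = a + b
    if total > limit:
        return limit
    return total
"""


def as_text(code: str) -> List[str]:
    """Text modality: the snippet is a flat sequence of whitespace-separated tokens."""
    return code.split()


def as_tree(code: str) -> List[str]:
    """Tree modality: names of all AST node types (in the order ast.walk yields them)."""
    return [type(node).__name__ for node in ast.walk(ast.parse(code))]


def branch_count(code: str) -> int:
    """A structural feature that is explicit in the AST but only implicit in the text."""
    return sum(
        isinstance(node, (ast.If, ast.For, ast.While))
        for node in ast.walk(ast.parse(code))
    )


if __name__ == "__main__":
    print(as_text(SNIPPET)[:6])
    print(sorted(set(as_tree(SNIPPET))))
    print(branch_count(SNIPPET))
```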

3.2 Role-playing skills

LLM-based agents are often required to play different roles in different tasks, each requiring specific skills. For example, an agent may need to act as a code generator when asked to generate code and as a code tester when performing code testing. In some scenarios, an agent may also need several capabilities at once: in code generation, for instance, it must act as both a code generator and a tester, and therefore needs to be able to both generate and test code (Huang et al. 2023b). The software engineering domain contains many niche tasks on which LLMs have not been sufficiently trained, as well as complex tasks that require agents to combine multiple capabilities, such as test generation, front-end development, and repository-level problem solving. Advancing research on how agents can effectively adopt new roles and manage the demands of multi-role performance is therefore a promising direction for future work.

3.3 Inadequate knowledge retrieval base

External knowledge retrieval bases are an important part of semantic memory in the agent's memory module and an important external tool that agents can interact with. In Natural Language Processing (NLP), knowledge bases such as Wikipedia serve as external retrieval bases (Zhao et al. 2023). In software engineering, however, there is no widely recognized, authoritative knowledge base covering a wealth of code-related knowledge, such as the basic syntax of various programming languages, commonly used algorithms, and knowledge of data structures and operating systems. Future research could develop a comprehensive code knowledge base to serve as the foundation for agents' external retrieval. Such a knowledge base would enrich the information available to agents and thereby improve the quality and efficiency of their reasoning and decision-making.
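As a rough picture of how an agent might draw on such a code knowledge base, the sketch below ranks entries by word overlap with the query and prepends the best match to the prompt. The three-entry `KNOWLEDGE_BASE`, its keys, and the overlap scoring are hypothetical stand-ins; a real system would use a far larger corpus and a proper ranker such as BM25 or dense embeddings.

```python
import re
from collections import Counter
from typing import Dict, List

# Hypothetical miniature knowledge base: entry id -> text.
KNOWLEDGE_BASE: Dict[str, str] = {
    "py-list-sort": "Python list.sort() sorts a list in place; sorted() returns a new sorted list.",
    "algo-binary-search": "Binary search finds an item in a sorted array in O(log n) time.",
    "os-fork": "On POSIX systems, fork() creates a child process that duplicates the caller.",
}


def tokenize(text: str) -> List[str]:
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def retrieve(query: str, kb: Dict[str, str], k: int = 1) -> List[str]:
    """Return the ids of the k entries with the largest word overlap with the query."""
    q = Counter(tokenize(query))
    scores = {
        key: sum(min(q[word], count) for word, count in Counter(tokenize(text)).items())
        for key, text in kb.items()
    }
    return sorted(scores, key=lambda key: scores[key], reverse=True)[:k]


def augment_prompt(query: str, kb: Dict[str, str], k: int = 1) -> str:
    """Prepend retrieved entries to the agent's prompt as external knowledge."""
    context = "\n".join(kb[key] for key in retrieve(query, kb, k))
    return f"Context:\n{context}\n\nQuestion: {query}"
```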

3.4 Hallucinations in LLM-based agents

Many studies of LLM-based agents treat the LLM as the cognitive core of the agent, so the agent's overall performance is closely tied to the capabilities of the underlying LLM. Existing studies (Pan et al. 2024; Liu et al. 2024a) suggest that LLM-based agents can hallucinate, for example by generating non-existent APIs while completing software engineering tasks. Reducing these hallucinations can improve overall agent performance; conversely, optimizing the agent can in turn mitigate the hallucinations of LLM-based agents, which points to a bidirectional relationship between agent performance and hallucination mitigation. Although several studies have examined hallucinations in LLMs, addressing hallucinations in LLM-based agents remains a significant challenge. Characterizing the types of hallucinations in LLM-based agents, analyzing their causes in depth, and proposing effective mitigation methods are important opportunities for future research.
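One cheap guard against the non-existent-API hallucinations mentioned above is to check generated code against what is actually installed before running it. The sketch below is an illustrative check, not a method from the cited studies: it parses the code with Python's `ast` module, imports each referenced module with `importlib.import_module`, and reports `module.attr` references that `hasattr` cannot resolve. The function name is hypothetical; the check only covers plain `import` statements and single-level attribute access, and importing a module executes its top-level code, so it should be restricted to trusted packages.

```python
import ast
import importlib
from types import ModuleType
from typing import Dict, List


def find_unknown_module_attributes(code: str) -> List[str]:
    """Report `name.attr` references whose imported module has no attribute `attr`.

    Heuristic sketch: handles only `import x` / `import x as y` of importable
    modules and single-level attribute access; shadowed names are not tracked.
    """
    tree = ast.parse(code)

    # Pass 1: map local names to the module objects they are bound to.
    bound: Dict[str, ModuleType] = {}
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if alias.asname:
                    local, target = alias.asname, alias.name
                else:
                    # `import a.b` binds the top-level package `a`.
                    local = target = alias.name.split(".")[0]
                try:
                    bound[local] = importlib.import_module(target)
                except ImportError:
                    continue  # a missing module is a separate kind of hallucination

    # Pass 2: check every `local.attr` reference against the real module.
    unknown = set()
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Attribute)
            and isinstance(node.value, ast.Name)
            and node.value.id in bound
            and not hasattr(bound[node.value.id], node.attr)
        ):
            unknown.add(f"{node.value.id}.{node.attr}")
    return sorted(unknown)


if __name__ == "__main__":
    generated = "import json\nimport math as m\nprint(json.parse('1'), m.sqrt(4))\n"
    print(find_unknown_module_attributes(generated))
```

The flagged names can be returned to the agent as feedback, in the same way as compiler errors, before any code is executed.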

3.5 Efficiency of multi-agent collaboration

In multi-agent collaboration, each agent plays a different role to accomplish a specific task, and the agents' decisions are then combined to jointly tackle more complex goals Chen et al. (2023b); Hong et al. (2023a); Huang et al. (2023b); Wang et al. (2023a). However, this process usually requires substantial computational resources for each agent, which wastes resources and reduces efficiency. In addition, the agents need to synchronize and share various information, which adds communication costs and hurts the real-time performance and responsiveness of the collaboration. Efficiently managing and allocating computational resources, minimizing inter-agent communication costs, and reducing the reasoning overhead of individual agents are key challenges for improving the efficiency of multi-agent collaboration, and addressing them offers significant opportunities for future research.

3.6 Application of Software Engineering Techniques to LLM-based Agents

Software engineering techniques, particularly those for coding, have the potential to significantly advance the field of agents, suggesting a mutually beneficial relationship between the two fields. For example, software testing techniques can be adapted to identify anomalous behavior and potential defects in LLM-based agents. Improvements in software tools (e.g., APIs and libraries) can also enhance the performance of LLM-based agents, especially agents with tool-use capabilities. Further, package management techniques can be adapted to manage agent systems efficiently; for example, version control can be applied to monitor and coordinate updates of the different agents in an agent system, enhancing compatibility and system integrity.

However, research in this area remains limited. Exploring how to incorporate more sophisticated SE techniques into agent systems is therefore a promising direction for future research that may advance both fields.

4 Conclusion

To analyze in depth the work combining LLM-based agents with software engineering, we first collected a large number of studies that apply LLM-based agents to tasks in the software engineering field. After organizing and analyzing the collected studies, we presented a framework for LLM-based agents in software engineering that contains three key modules: perception, memory, and action. Finally, we described each module of the framework in detail, analyzed the current challenges faced by LLM-based agents in software engineering, and pointed out opportunities for future work.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.
