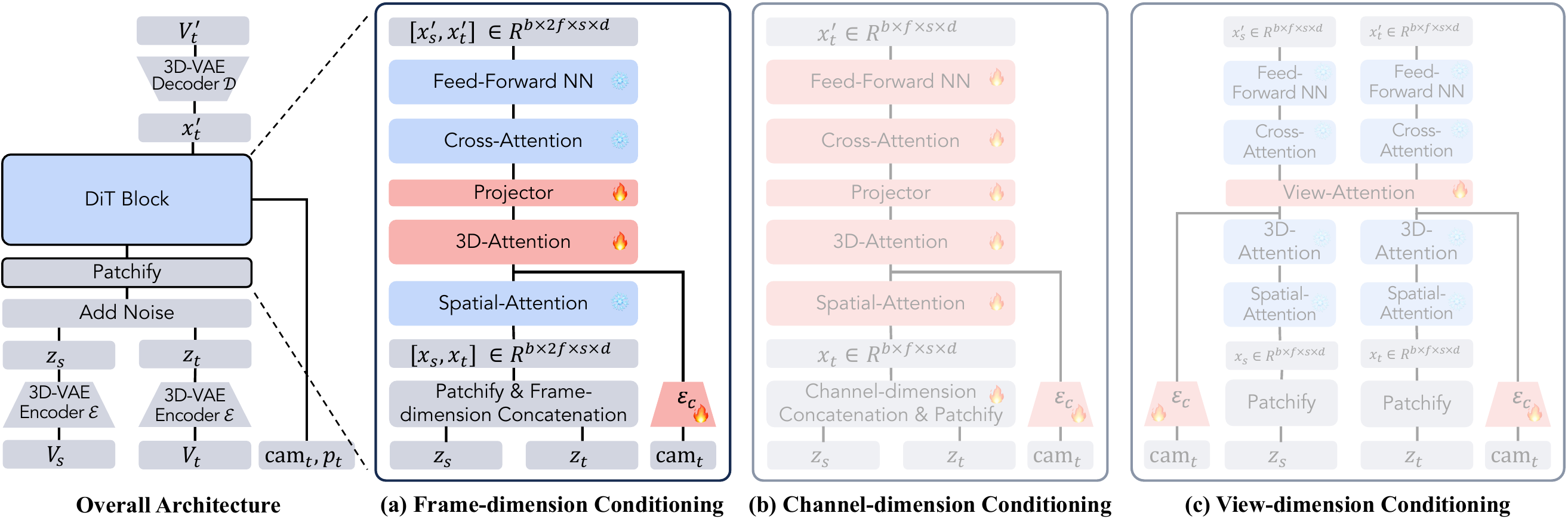

ReCamMaster: Rendering Tool for Generating Multi-View Videos from a Single Video

General Introduction

ReCamMaster is an open-source video processing tool whose core function is generating new camera views from a single video. Users specify a camera trajectory and the video is re-rendered along it, producing footage of the same dynamic scene from different angles. Developed by a team from Zhejiang University and Kuaishou Technology and built on a text-to-video diffusion model, ReCamMaster supports video stabilization, super-resolution enhancement, and frame extension, and is suited to video creation, autonomous-driving data generation, and virtual reality development. The open-source code is based on the Wan2.1 model; because of company policy restrictions the full model has not been released, so the best results are currently available only through the official trial link. The team has also released the MultiCamVideo dataset, which contains 136,000 multi-view videos, to support related research.

An online experience is reportedly coming soon to an international edition of the service. For now, you can only submit your video through the official trial form and wait for the team to send back the generated result.

Function List

- Camera Track Control: User-selected or customized camera paths to generate new perspective videos.

- Video Stabilization: Turns shaky footage into smooth video, suited to handheld shooting.

- Super-Resolution Enhancement: Improves video clarity by following a zoom-in trajectory, producing more detailed frames.

- Frame Extension: Extends the video boundary to generate scene content beyond the original frame.

- Data Augmentation: Generates multi-view training data for autonomous driving or robot vision.

- Batch Processing: Supports simultaneous processing of multiple videos and automatic output of new perspective results.

- Dataset Support: Provides MultiCamVideo dataset with synchronized multi-view video.

Usage Guide

At the heart of ReCamMaster is the ability to generate new viewpoints from a single video through camera trajectory control. Below is a detailed guide covering the online trial, local installation, and dataset usage to help users get started quickly.

Online Trial Process

ReCamMaster offers a convenient online trial that allows users to experience it without installation. The steps are as follows:

- Visit the trial link:

  - Open the official Google Forms link: https://docs.google.com/forms/d/e/1FAIpQLSezOzGPbm8JMXQDq6EINiDf6iXn7rV4ozj6KcbQCSAzE8Vsnw/viewform?usp=dialog

  - Enter a valid e-mail address for receiving the rendering results.

- Upload the video:

  - Prepare an MP4 video of at least 81 frames (about 3 seconds at roughly 30 FPS) with a resolution of at least 480p.

  - Make sure the content is clear and the scene is moderately dynamic; avoid footage that is very dark, overexposed, or completely static.

  - Upload the video in the form, keeping to the file size limit (usually 100MB, depending on the form instructions).

- Select the camera trajectory:

  - ReCamMaster offers 10 preset trajectories that cover common viewpoint changes:

    - Pan Right: Moves horizontally to the right to show the width of the scene.

    - Pan Left: Moves horizontally in the opposite direction.

    - Tilt Up: Tilts the camera upward to highlight details higher in the scene.

    - Tilt Down: Tilts the camera downward to show ground-level content.

    - Zoom In: Moves closer to the subject to enhance local sharpness.

    - Zoom Out: Pulls the camera back to widen the field of view.

    - Translate Up: Moves upward, with rotation.

    - Translate Down: Moves downward, with rotation.

    - Arc Left: Arcs to the left around the subject.

    - Arc Right: Arcs to the right around the subject.

  - Select a trajectory in the form, or describe a custom requirement (this needs to be confirmed with the team).

- Submit and receive the results:

  - After you submit the form, the system processes the video automatically. Processing usually takes from a few hours to a day, depending on server load.

  - The results are sent to your e-mail address in MP4 format with the subject line "Inference Results of ReCamMaster", and may come from jianhongbai@zju.edu.cn or cpurgicn@gmail.com.

  - Check your inbox and spam folder. If nothing arrives within a day, contact the team.

Notes:

- A free trial is available for each user, but frequent submissions may delay processing.

- The quality of the results depends on the video content, with dynamic scenes working better.

- In the future, the team plans to launch an online trial site, which will be even easier to operate.
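The upload requirements above (MP4, at least 81 frames, at least 480p, a file size cap) can be checked before filling in the form. The following is a minimal sketch, not part of ReCamMaster: `VideoInfo` and `check_upload` are hypothetical names, the metadata is assumed to have been read already with a tool of your choice, and the 100MB cap is the article's "usual" figure rather than a confirmed limit.

```python
from dataclasses import dataclass

# Thresholds taken from the trial instructions. The 100 MB cap is the
# article's "usual" figure and may differ on the actual form.
MIN_FRAMES = 81
MIN_HEIGHT = 480
MAX_SIZE_MB = 100


@dataclass
class VideoInfo:
    """Hypothetical container for metadata you have already read from a file."""
    filename: str
    frame_count: int
    height: int
    size_mb: float


def check_upload(video: VideoInfo) -> list[str]:
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if not video.filename.lower().endswith(".mp4"):
        problems.append("file is not an MP4")
    if video.frame_count < MIN_FRAMES:
        problems.append(f"only {video.frame_count} frames, need at least {MIN_FRAMES}")
    if video.height < MIN_HEIGHT:
        problems.append(f"height {video.height}px is below {MIN_HEIGHT}p")
    if video.size_mb > MAX_SIZE_MB:
        problems.append(f"{video.size_mb} MB exceeds the {MAX_SIZE_MB} MB limit")
    return problems


if __name__ == "__main__":
    clip = VideoInfo("walk.mp4", frame_count=90, height=720, size_mb=42.0)
    print(check_upload(clip) or "ready to upload")
```

Keeping the check as a pure function over already-extracted metadata avoids tying the sketch to any particular video library.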

Local Operation

The open source code is based on the Wan2.1 model and is suitable for users with a technical background. Below are the detailed installation and runtime steps:

1. Environment preparation

- Hardware requirements: a GPU-equipped machine (e.g., NVIDIA RTX 3060 or higher) is recommended; running on CPU will be much slower.

- Software requirements:

- Python 3.10

- Rust and Cargo (for compiling DiffSynth-Studio)

- Git and pip

2. Installation steps

- Clone the repository:

git clone https://github.com/KwaiVGI/ReCamMaster.git
cd ReCamMaster

- Install Rust:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh
source "$HOME/.cargo/env"

- Create a virtual environment:

conda create -n recammaster python=3.10
conda activate recammaster

- Install dependencies:

pip install -e .
pip install lightning pandas websockets

- Download the model weights:

  - Wan2.1 model:

python download_wan2.1.py

  - ReCamMaster pre-trained weights: download step20000.ckpt from https://huggingface.co/KwaiVGI/ReCamMaster-Wan2.1/blob/main/step20000.ckpt and put it in the models/ReCamMaster/checkpoints folder.

3. Test with the example video

- Run the following command to test with a preset trajectory (here cam_type 1, pan right):

python inference_recammaster.py --cam_type 1

- The output is saved in MP4 format in the output folder of the project directory.
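To avoid memorizing numbers, the preset names can be mapped to `--cam_type` values. This is a rough sketch under one assumption: that the values 1 to 10 follow the order in which the presets are listed in this article, which matches the examples given here (1 for pan right, 5 for zoom in, 9 for arc left) but should be confirmed against the repository. The helper `inference_command` is hypothetical and only builds the argument list; it does not run anything.

```python
# Preset names in the order the article lists them. Mapping that order to
# --cam_type values 1-10 is an assumption, not a documented guarantee.
PRESETS = [
    "Pan Right", "Pan Left", "Tilt Up", "Tilt Down", "Zoom In",
    "Zoom Out", "Translate Up", "Translate Down", "Arc Left", "Arc Right",
]
CAM_TYPE = {name.lower(): index for index, name in enumerate(PRESETS, start=1)}


def inference_command(preset: str) -> list[str]:
    """Build the argument list for one inference run with a named preset."""
    key = preset.strip().lower()
    if key not in CAM_TYPE:
        raise ValueError(f"unknown preset {preset!r}; choose one of {PRESETS}")
    return ["python", "inference_recammaster.py", "--cam_type", str(CAM_TYPE[key])]


if __name__ == "__main__":
    print(" ".join(inference_command("Arc Left")))
```

The returned list can be passed to `subprocess.run` from inside the project directory if you prefer to launch runs from Python.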

4. Test with custom videos

- Prepare the data:

  - Create a custom_test_data folder and put the MP4 videos (at least 81 frames each) in it.

  - Create metadata.csv, recording the video paths and descriptive captions. Example:

video_path,caption
test_video.mp4,"A person walking in the park"

  - Captions can be written manually or generated with tools such as LLaVA.

- Run inference:

python inference_recammaster.py --cam_type 1 --dataset_path custom_test_data
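The metadata file described above can be generated with a short script instead of by hand. This is a sketch, assuming the column names from the article's example (`video_path`, `caption`) and assuming the file sits inside the data folder; `write_metadata` is a hypothetical helper, so compare its output with the sample data in the repository before relying on it.

```python
import csv
from pathlib import Path


def write_metadata(data_dir: str, captions: dict[str, str]) -> Path:
    """Write metadata.csv listing each MP4 in data_dir with its caption.

    Column names follow the article's example; placing the file inside
    data_dir is an assumption.
    """
    folder = Path(data_dir)
    out_path = folder / "metadata.csv"
    with out_path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["video_path", "caption"])
        for video in sorted(folder.glob("*.mp4")):
            # Fall back to an empty caption so every video still gets a row.
            writer.writerow([video.name, captions.get(video.name, "")])
    return out_path


if __name__ == "__main__":
    Path("custom_test_data").mkdir(exist_ok=True)
    print(write_metadata(
        "custom_test_data",
        {"test_video.mp4": "A person walking in the park"},
    ))
```

Using the `csv` module rather than string formatting means captions containing commas or quotes are escaped correctly.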

Notes:

- Local runs may not match the quality of the online trial, because the open-source model differs from the model used in the paper.

- Make sure there is enough GPU memory (at least 12GB).

- If you get an error, check the dependency versions or consult GitHub Issues.

5. Training models

If you need a custom model, prepare the MultiCamVideo dataset and train on it:

- Download the dataset: get it from https://huggingface.co/datasets/KwaiVGI/MultiCamVideo-Dataset, then join the parts and unzip the archive:

cat MultiCamVideo-Dataset.part* > MultiCamVideo-Dataset.tar.gz
tar -xzvf MultiCamVideo-Dataset.tar.gz

- Extract features:

CUDA_VISIBLE_DEVICES="0,1,2,3,4,5,6,7" python train_recammaster.py \
  --task data_process \
  --dataset_path path/to/MultiCamVideo/Dataset \
  --output_path ./models \
  --text_encoder_path "models/Wan-AI/Wan2.1-T2V-1.3B/models_t5_umt5-xxl-enc-bf16.pth" \
  --vae_path "models/Wan-AI/Wan2.1-T2V-1.3B/Wan2.1_VAE.pth" \
  --tiled --num_frames 81 --height 480 --width 832 \
  --dataloader_num_workers 2

- Generate captions: use a tool such as LLaVA to generate a description for each video and save it to metadata.csv.

- Start training:

CUDA_VISIBLE_DEVICES="0,1,2,3,4,5,6,7" python train_recammaster.py \
  --task train \
  --dataset_path recam_train_data \
  --output_path ./models/train \
  --dit_path "models/Wan-AI/Wan2.1-T2V-1.3B/diffusion_pytorch_model.safetensors" \
  --steps_per_epoch 8000 --max_epochs 100 \
  --learning_rate 1e-4 --accumulate_grad_batches 1 \
  --use_gradient_checkpointing \
  --dataloader_num_workers 4

- Test the trained model:

python inference_recammaster.py --cam_type 1 --ckpt_path path/to/your/checkpoint

Notes:

- Training requires multiple high-performance GPUs; for single-GPU training the batch size may need to be adjusted.

- Hyperparameters (e.g., the learning rate) can be tuned to improve results.
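On systems without `cat` and `tar` (or when the download step is scripted), the join-and-extract step for the dataset can be done in Python. This is a sketch, assuming the parts are named `<archive stem>.part*` as in the download command and that lexicographic order is the correct join order; `reassemble_and_extract` is a hypothetical helper.

```python
import shutil
import tarfile
from pathlib import Path


def reassemble_and_extract(parts_dir: str, archive_name: str, dest_dir: str) -> Path:
    """Join <stem>.part* files in name order, then extract the .tar.gz."""
    parts_path = Path(parts_dir)
    stem = archive_name.removesuffix(".tar.gz")
    # sorted() mirrors the lexicographic order a shell glob would produce.
    parts = sorted(parts_path.glob(f"{stem}.part*"))
    if not parts:
        raise FileNotFoundError(f"no {stem}.part* files in {parts_path}")

    archive = parts_path / archive_name
    with archive.open("wb") as joined:
        for part in parts:
            with part.open("rb") as chunk:
                # Stream each part so large files never sit fully in memory.
                shutil.copyfileobj(chunk, joined)

    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        tar.extractall(dest)
    return dest


if __name__ == "__main__":
    reassemble_and_extract(".", "MultiCamVideo-Dataset.tar.gz", "MultiCamVideo-Dataset")
```

Note that the joined archive and the extracted files coexist on disk, so the storage needed during extraction is larger than the final dataset size.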

MultiCamVideo Dataset Usage

The MultiCamVideo dataset contains 136,000 videos covering 13,600 dynamic scenes, each captured by 10 synchronized cameras. The following is a guide to its use:

1. Structure of the dataset

MultiCamVideo-Dataset
├── train
│   ├── f18_aperture10
│   │   ├── scene1
│   │   │   ├── videos
│   │   │   │   ├── cam01.mp4
│   │   │   │   ├── ...
│   │   │   └── cameras
│   │   │       └── camera_extrinsics.json
│   │   ├── ...
│   ├── f24_aperture5
│   ├── f35_aperture2.4
│   ├── f50_aperture2.4
└── val
    └── 10basic_trajectories
        ├── videos
        │   ├── cam01.mp4
        │   ├── ...
        └── cameras
            └── camera_extrinsics.json

- Video format: 1280x1280 resolution, 81 frames, 15 FPS.

- Camera parameters: focal lengths (18mm, 24mm, 35mm, 50mm), apertures (10, 5, 2.4).

- Trajectory file: camera_extrinsics.json records the camera position and rotation.
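The folder layout above is regular enough to index with a few lines of code. The sketch below walks the `train` split and reads the focal length and aperture from directory names such as `f35_aperture2.4`; it relies only on the tree shown in this article, does not parse `camera_extrinsics.json` (its schema is not described here), and `list_train_scenes` is a hypothetical helper.

```python
import re
from pathlib import Path

FRAMES_PER_CLIP = 81
FPS = 15
CLIP_SECONDS = FRAMES_PER_CLIP / FPS  # 81 frames at 15 FPS is 5.4 seconds

# Directory names such as "f18_aperture10" or "f35_aperture2.4".
_LENS_DIR = re.compile(r"^f(?P<focal>\d+)_aperture(?P<aperture>\d+(?:\.\d+)?)$")


def list_train_scenes(root: str) -> list[dict]:
    """Summarize every scene under <root>/train using only the folder layout."""
    scenes = []
    for lens_dir in sorted((Path(root) / "train").iterdir()):
        match = _LENS_DIR.match(lens_dir.name)
        if not (lens_dir.is_dir() and match):
            continue  # skip files and folders that do not follow the naming
        for scene_dir in sorted(p for p in lens_dir.iterdir() if p.is_dir()):
            scenes.append({
                "focal_mm": int(match["focal"]),
                "aperture": float(match["aperture"]),
                "scene": scene_dir.name,
                "videos": sorted((scene_dir / "videos").glob("cam*.mp4")),
                "extrinsics": scene_dir / "cameras" / "camera_extrinsics.json",
            })
    return scenes


if __name__ == "__main__":
    for entry in list_train_scenes("MultiCamVideo-Dataset"):
        print(entry["scene"], entry["focal_mm"], len(entry["videos"]))
```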

2. Uses of the dataset

- Train video generation models and verify how well camera control works.

- Study synchronized video production or 3D/4D reconstruction.

- Test camera trajectory generation algorithms.

3. Examples of operations

- Unzip the dataset:

tar -xzvf MultiCamVideo-Dataset.tar.gz

- Visualize the camera trajectories:

python vis_cam.py

This outputs a trajectory plot showing the camera movement paths.

Notes:

- The dataset is large (reserving 1TB of storage is recommended).

- Videos can be cropped to another aspect ratio (e.g., 16:9) to fit the model.
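The cropping mentioned above comes down to simple arithmetic. As an illustration, the sketch below computes the largest centered crop of a given aspect ratio from a source frame; for the dataset's 1280x1280 videos and a 16:9 target it yields a 1280x720 window starting 280 pixels from the top. `center_crop_box` is a hypothetical helper, and centering the crop is an assumption, not the project's documented preprocessing.

```python
def center_crop_box(
    width: int, height: int, ratio_w: int, ratio_h: int
) -> tuple[int, int, int, int]:
    """Return (left, top, crop_width, crop_height) of the largest centered crop."""
    if width * ratio_h >= height * ratio_w:
        # Source is at least as wide as the target ratio: keep full height.
        crop_h = height
        crop_w = height * ratio_w // ratio_h
    else:
        # Source is taller than the target ratio: keep full width.
        crop_w = width
        crop_h = width * ratio_h // ratio_w
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, crop_w, crop_h


if __name__ == "__main__":
    print(center_crop_box(1280, 1280, 16, 9))  # (0, 280, 1280, 720)
```

Integer arithmetic keeps the result in whole pixels; the crop can then be resized to whatever resolution the model expects.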

Feature Details

1. New viewpoint generation

- Function: generates new viewpoints from a single video, e.g., turns a frontal shot into a side or orbiting view.

- Online:

  - Upload the video and select a trajectory (e.g., Arc Right).

  - The system re-renders the video along the trajectory and produces an MP4 file.

- Local:

  - Run inference_recammaster.py and specify --cam_type (e.g., 9 for Arc Left).

  - Check the results in the output folder.

- Note: dynamic scenes (e.g., people walking) work best; static backgrounds may come out looking unnatural.

2. Video stabilization

- Function: removes handheld camera shake and produces smooth footage.

- Operation:

  - Online: upload the video and note the "stabilization" requirement in the form.

  - Local: there is currently no dedicated stabilization command; it has to be approximated with a translation trajectory.

- Effect: for example, shaky footage shot while running comes out close to gimbal-stabilized footage.

3. Super-resolution and outpainting

- Super-resolution:

  - Select the zoom-in trajectory to enhance picture detail.

  - Suited to low-resolution videos; generates sharper local content.

- Outpainting:

  - Select the zoom-out trajectory to extend the frame boundary.

  - Suited to tightly framed scenes; generates additional background.

- Operation:

  - Online: select the "Zoom In" or "Zoom Out" trajectory.

  - Local: set --cam_type 5 (zoom in) or --cam_type 6 (zoom out).

4. Data augmentation

- Function: generates multi-view videos for autonomous driving or robot training.

- Operation:

  - Upload videos and select multiple trajectories in bulk.

  - Or train the model directly on the dataset to generate diverse data.

- Typical example: generating surround-view road videos for self-driving models.
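For local augmentation runs, one simple approach is to run inference once per preset trajectory over the same data folder. The sketch below only builds the command strings, using the `--cam_type` and `--dataset_path` flags shown earlier in this article; `augmentation_commands` is a hypothetical helper, and whether successive runs overwrite each other's files in the output folder is not covered here, so check the results between runs.

```python
import shlex


def augmentation_commands(dataset_path: str, cam_types=range(1, 11)) -> list[str]:
    """Build one inference command per requested preset trajectory (1-10)."""
    commands = []
    for cam_type in cam_types:
        if not 1 <= cam_type <= 10:
            raise ValueError(f"cam_type must be between 1 and 10, got {cam_type}")
        args = [
            "python", "inference_recammaster.py",
            "--cam_type", str(cam_type),
            "--dataset_path", dataset_path,
        ]
        # shlex.join quotes arguments that contain spaces or shell metacharacters.
        commands.append(shlex.join(args))
    return commands


if __name__ == "__main__":
    for command in augmentation_commands("custom_test_data"):
        print(command)
```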

Frequently Asked Questions

- Results delayed: check your spam folder, or contact jianhongbai@zju.edu.cn.

- Poor results: make sure the video has enough frames (at least 81) and that the scene is dynamic.

- Local errors: verify the Python version (3.10) and the Rust installation, or update the dependencies.

- Missing model weights: download the correct files from Hugging Face and check the paths.
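Several of the checks above can be automated before digging into error messages. A minimal sketch using only the standard library follows: it verifies that the interpreter is Python 3.10 and that `git`, `cargo`, and `rustc` are on the PATH. The tool list is taken from the software requirements in this article, and `check_environment` is a hypothetical helper, not part of the project.

```python
import shutil
import sys

REQUIRED_PYTHON = (3, 10)
REQUIRED_TOOLS = ("git", "cargo", "rustc")


def check_environment() -> dict[str, bool]:
    """Report whether the interpreter version and command-line tools look right."""
    report = {"python_3_10": sys.version_info[:2] == REQUIRED_PYTHON}
    for tool in REQUIRED_TOOLS:
        # shutil.which returns None when the executable is not on the PATH.
        report[tool] = shutil.which(tool) is not None
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'MISSING'}")
```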

ReCamMaster is easy to use, and the online trial is especially suitable for novices. The dataset provides a rich resource for researchers, and future functionality extensions are to be expected.

Application Scenarios

- Video creation

ReCamMaster adds dynamic viewpoints to short videos, commercials, or movie trailers. For example, it can turn a fixed shot into an orbiting shot to enhance the visual impact.

- Autonomous driving

Generate multi-view driving scene videos to train autonomous driving models. It can simulate road conditions from different angles and reduce the cost of data collection.

- Virtual reality

Provide multi-view content for VR/AR development, suited to immersive scenarios. For example, generate dynamic environment videos for VR games.

- Research and education

Researchers can validate algorithms using the MultiCamVideo dataset. Educators can use new-viewpoint videos to demonstrate experimental procedures and improve teaching.

- 3D/4D reconstruction

The dataset supports research on synchronized video and helps reconstruct 3D models of dynamic scenes.

QA

- What video formats does ReCamMaster support?

MP4 is currently supported. At least 81 frames and a resolution of 480p or above are recommended, and dynamic scenes give better results.

- Is the online trial free?

It is currently free, but processing speed depends on server load. Paid acceleration services may be offered in the future.

- What configuration is required to run locally?

An NVIDIA RTX 3060 or higher with at least 12GB of video memory and a Python 3.10 environment are recommended.

- How can I download the dataset?

Download it from https://huggingface.co/datasets/KwaiVGI/MultiCamVideo-Dataset; it takes up about 1TB of storage when unzipped.

- Can I customize the trajectory?

The online trial supports 10 preset trajectories; customization requires contacting the team. When running locally, trajectory parameters can be modified in code.

© Copyright notice

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
