Qwen2.5-VL: an open-source multimodal large model supporting image, video, and document parsing

General Introduction

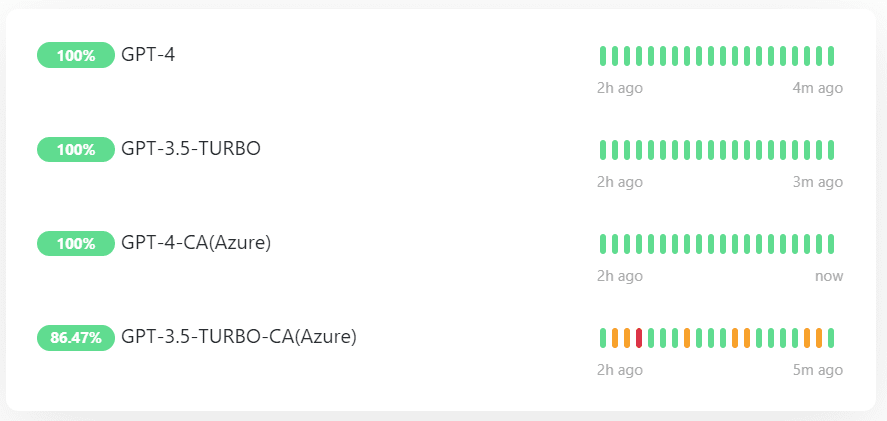

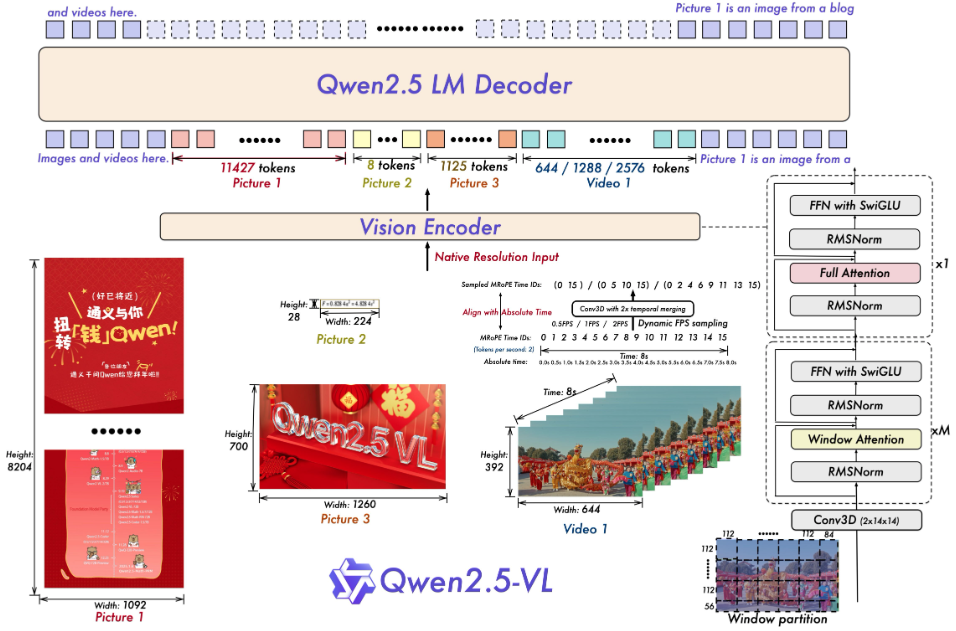

Qwen2.5-VL is an open-source multimodal large model developed by the Qwen team at Alibaba Cloud. It processes text, images, videos, and documents, and is the upgraded successor to Qwen2-VL, built on the Qwen2.5 language model. According to the team, it improves substantially on document parsing, video comprehension, and agent capabilities, and it comes in four parameter sizes (3B, 7B, 32B, and 72B) to cover needs ranging from personal computers to servers. The project is hosted on GitHub under the Apache 2.0 license and is free and open source. Qwen2.5-VL performs well on multiple benchmarks, exceeding closed-source models on some metrics, and suits developers building intelligent tools such as document extractors, video analyzers, or device-operation assistants.

Feature List

- Recognize objects, text and layouts in images with support for natural scenes and multiple languages.

- Understand very long videos (over an hour) and locate event clips with second-level precision.

- Parse complex documents and extract handwritten text, tables, charts, and chemical formulas.

- Control a computer or cell phone through visual and textual commands to perform intelligent agent tasks.

- Output structured data, such as coordinate or attribute information in JSON format.

- Support dynamic resolution and frame rate adjustment to optimize video processing efficiency.
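The structured output mentioned in the list can be consumed with ordinary JSON tooling. The sketch below assumes the model replies with a JSON array of objects carrying `bbox_2d` and `label` keys, possibly surrounded by extra text. The key names, the helper functions, and the sample reply are assumptions for illustration, so adjust them to whatever your prompt requests.

```python
import json
import re


def extract_json_array(reply: str) -> list:
    """Return the first JSON array found in a model reply, or [] if none parses."""
    match = re.search(r"\[.*\]", reply, flags=re.DOTALL)
    if match is None:
        return []
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError:
        return []
    return data if isinstance(data, list) else []


def box_area(box: list) -> int:
    """Area of an [x1, y1, x2, y2] box in pixels."""
    x1, y1, x2, y2 = box
    return max(0, x2 - x1) * max(0, y2 - y1)


# Hypothetical reply text; a real reply depends on the prompt and the image.
sample_reply = 'Here are the objects: [{"bbox_2d": [10, 20, 110, 220], "label": "cat"}]'
objects = extract_json_array(sample_reply)
for obj in objects:
    print(obj["label"], box_area(obj["bbox_2d"]))
```

Returning an empty list on a parse failure keeps downstream code simple, at the cost of hiding malformed replies; log the raw text if you need to debug prompts.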

Usage Guide

Installation process

To run Qwen2.5-VL locally, you need to prepare the software environment. The following are the detailed steps:

- Check the basic environment

Python 3.8+ and Git are required. Verify both by entering the following commands in a terminal:

python --version

git --version

If either is missing, download it from the official Python or Git website.
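The same check can be scripted. This is a minimal sketch using only the standard library; the function name `check_environment` is made up for this example, and the minimum version is the one stated in this guide.

```python
import shutil
import sys

MIN_PYTHON = (3, 8)  # minimum version stated in this guide


def check_environment() -> dict:
    """Report whether the interpreter and Git meet the stated requirements."""
    return {
        "python_ok": sys.version_info >= MIN_PYTHON,
        "git_path": shutil.which("git"),  # None when git is not on PATH
    }


status = check_environment()
print("Python OK:", status["python_ok"])
print("Git found:", status["git_path"] is not None)
```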

- Download the code

Run the following commands in a terminal to clone the GitHub repository:

git clone https://github.com/QwenLM/Qwen2.5-VL.git

cd Qwen2.5-VL

- Install dependencies

The project requires specific Python libraries. Install them with the following commands:

pip install git+https://github.com/huggingface/transformers@f3f6c86582611976e72be054675e2bf0abb5f775

pip install accelerate

pip install qwen-vl-utils[decord]

pip install 'vllm>0.7.2'

If you have a GPU, install PyTorch with CUDA support:

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu124

Note: decord accelerates video loading. Non-Linux users who cannot install it from PyPI can build it from source using the decord GitHub repository.

- Start the model

Download and run the model, e.g. the 7B version:

vllm serve Qwen/Qwen2.5-VL-7B-Instruct --port 8000 --host 0.0.0.0 --dtype bfloat16

The model weights are downloaded automatically from Hugging Face, and the local service then starts.
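Once the service is running, it can be queried over HTTP. The sketch below assumes the server exposes vLLM's OpenAI-compatible `/v1/chat/completions` route on port 8000, as in the command above. The helper names `build_payload` and `ask` and the example image URL are placeholders introduced here, not part of the project.

```python
import json
import urllib.request

# Assumed endpoint: matches the --port 8000 used in the serve command.
API_URL = "http://localhost:8000/v1/chat/completions"
MODEL_NAME = "Qwen/Qwen2.5-VL-7B-Instruct"


def build_payload(image_url: str, prompt: str, max_tokens: int = 128) -> dict:
    """Build an OpenAI-style chat request with one image part and one text part."""
    return {
        "model": MODEL_NAME,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": image_url}},
                {"type": "text", "text": prompt},
            ],
        }],
        "max_tokens": max_tokens,
    }


def ask(image_url: str, prompt: str) -> str:
    """Send the request to the local server and return the reply text."""
    body = json.dumps(build_payload(image_url, prompt)).encode("utf-8")
    request = urllib.request.Request(
        API_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


# Placeholder URL; replace it with an image the server can reach.
payload = build_payload("https://example.com/cat.jpg", "Describe this image")
print(json.dumps(payload, indent=2))
```

Calling `ask(...)` requires the server to be up; printing the payload first is a cheap way to confirm the request shape before sending anything.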

How to use the main features

After installation, Qwen2.5-VL can be used from code or through the web interface.

Image recognition

To have the model describe a picture, create a new file named image_test.py and enter the following code:

from transformers import Qwen2_5_VLForConditionalGeneration, AutoProcessor

from qwen_vl_utils import process_vision_info

model = Qwen2_5_VLForConditionalGeneration.from_pretrained("Qwen/Qwen2.5-VL-7B-Instruct", torch_dtype="auto", device_map="auto")

processor = AutoProcessor.from_pretrained("Qwen/Qwen2.5-VL-7B-Instruct")

messages = [{"role": "user", "content": [{"type": "image", "image": "image path or URL"}, {"type": "text", "text": "Describe this image"}]}]

text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

image_inputs, video_inputs = process_vision_info(messages)  # loads local paths and URLs

inputs = processor(text=[text], images=image_inputs, videos=video_inputs, padding=True, return_tensors="pt").to(model.device)

generated_ids = model.generate(**inputs, max_new_tokens=128)

trimmed_ids = [out[len(inp):] for inp, out in zip(inputs.input_ids, generated_ids)]  # drop the prompt tokens

output = processor.batch_decode(trimmed_ids, skip_special_tokens=True)[0]

print(output)

When run, the model outputs a description, e.g., "The picture shows a cat sitting on a windowsill."

Video comprehension

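Analyzing a video requires a local file (e.g. video.mp4). Reusing the model and processor loaded in the image example, run the following code:

messages = [{"role": "user", "content": [{"type": "video", "video": "video.mp4"}, {"type": "text", "text": "Summarize the video content"}]}]

text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

image_inputs, video_inputs = process_vision_info(messages)  # decodes and samples the video frames

inputs = processor(text=[text], images=image_inputs, videos=video_inputs, padding=True, return_tensors="pt").to(model.device)

generated_ids = model.generate(**inputs, max_new_tokens=128)

trimmed_ids = [out[len(inp):] for inp, out in zip(inputs.input_ids, generated_ids)]  # drop the prompt tokens

output = processor.batch_decode(trimmed_ids, skip_special_tokens=True)[0]

print(output)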

The output might be, "The video shows a cooking competition where the contestants make pizzas."
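For longer videos it can help to control how densely frames are sampled. The sketch below builds a message that carries per-video `fps` and `max_pixels` hints. These keys follow the message format used in the project's README as far as I know, so treat them as an assumption and check them against your installed qwen-vl-utils version. The helper name `build_video_message` is invented for this example.

```python
def build_video_message(path: str, prompt: str, fps: float = 1.0,
                        max_pixels: int = 360 * 420) -> list:
    """Build a chat message that asks the video loader to sample at `fps`."""
    video_part = {
        "type": "video",
        "video": path,
        "fps": fps,                # frames sampled per second of video
        "max_pixels": max_pixels,  # per-frame pixel budget
    }
    return [{"role": "user", "content": [video_part, {"type": "text", "text": prompt}]}]


messages = build_video_message("video.mp4", "Summarize the video content", fps=0.5)
print(messages[0]["content"][0])
```

A lower `fps` or `max_pixels` reduces the number of visual tokens, trading temporal or spatial detail for speed and memory.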

Document parsing

Supply a document page as an image (convert PDF pages to images first), reuse the image recognition code, and change the prompt to "Extract table data". The model returns structured results such as:

[{"Column 1": "Value 1", "Column 2": "Value 2"}]
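A result in this shape can be turned into CSV with the standard library. The sketch below assumes the reply is a clean JSON array of flat objects; the function name `rows_to_csv` and the sample string are illustrative only.

```python
import csv
import io
import json


def rows_to_csv(reply: str) -> str:
    """Convert a JSON array of flat row objects into CSV text."""
    rows = json.loads(reply)
    if not rows:
        return ""
    # Collect column names in first-seen order so rows with missing keys are tolerated.
    columns = []
    for row in rows:
        for key in row:
            if key not in columns:
                columns.append(key)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical reply; real output depends on the document and the prompt.
sample = '[{"Column 1": "Value 1", "Column 2": "Value 2"}]'
print(rows_to_csv(sample))
```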

Intelligent agents

To control a device, use the cookbooks/computer_use.ipynb example. After running it, enter an instruction such as "Open Notepad and type 'Hello'"; the model simulates the operation and returns the result.

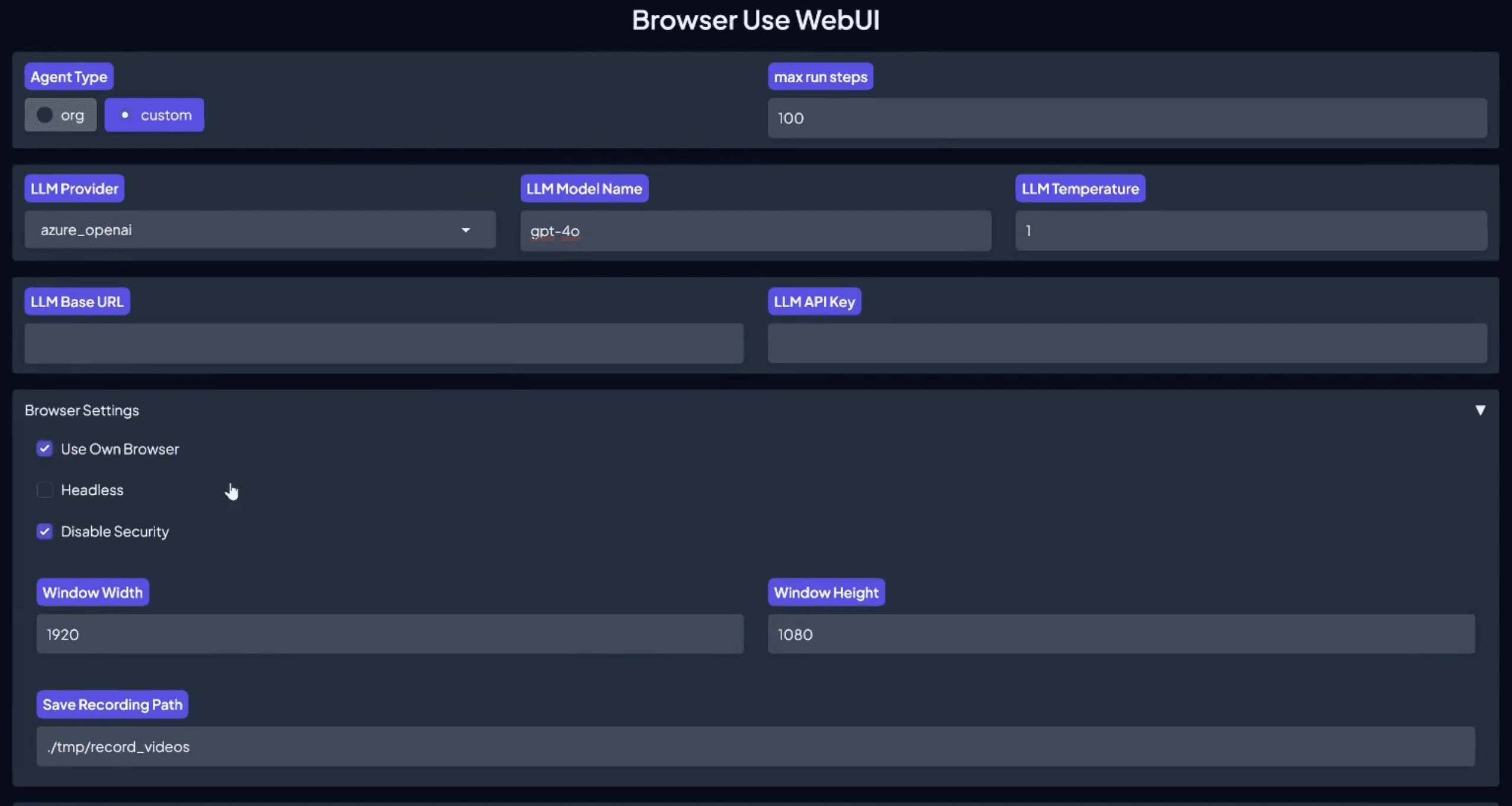

Web interface usage

Run web_demo_mm.py to launch the web interface:

python web_demo_mm.py

Open http://127.0.0.1:7860 in a browser. Upload a file or enter a command, and the model processes it directly.

Performance optimization

- Flash Attention 2: speeds up inference. Install it, then enable it when launching the demo:

pip install -U flash-attn --no-build-isolation

python web_demo_mm.py --flash-attn2

- Resolution adjustment: set min_pixels and max_pixels to control image size, for example a range of 256-1280 visual tokens, to balance speed and memory.
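To make the resolution setting concrete, here is a sketch that converts a token range into pixel limits. It assumes the 256-1280 range counts visual tokens and that each token covers 28x28 pixels, which matches the project's README as I understand it. The helper names are introduced for this example.

```python
PATCH_PIXELS = 28 * 28  # assumed pixels covered by one visual token


def pixel_budget(min_tokens: int, max_tokens: int) -> tuple:
    """Translate a visual-token range into (min_pixels, max_pixels)."""
    if not 0 < min_tokens <= max_tokens:
        raise ValueError("expected 0 < min_tokens <= max_tokens")
    return min_tokens * PATCH_PIXELS, max_tokens * PATCH_PIXELS


def load_processor(min_tokens: int = 256, max_tokens: int = 1280):
    """Load the processor with the pixel limits applied (downloads on first use)."""
    from transformers import AutoProcessor  # imported lazily; heavy dependency

    min_pixels, max_pixels = pixel_budget(min_tokens, max_tokens)
    return AutoProcessor.from_pretrained(
        "Qwen/Qwen2.5-VL-7B-Instruct", min_pixels=min_pixels, max_pixels=max_pixels
    )


min_pixels, max_pixels = pixel_budget(256, 1280)
print(min_pixels, max_pixels)
```

Narrowing the range lowers memory use and latency, while widening it preserves fine detail such as small text in documents.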

Application scenarios

- Academic research

Students upload images of their papers; the model extracts formulas and data and generates an analysis report.

- Video editing

A creator inputs a long video; the model extracts key clips and generates a summary.

- Enterprise document management

An employee uploads a scanned contract; the model extracts the clauses and outputs them as a table.

- Intelligent assistant

A user combines pictures and voice commands to have the model look up flight information on their phone.

QA

- What languages are supported?

Chinese, English, and other languages (e.g. French and German) are supported, including recognition of multilingual text and handwritten content.

- What are the hardware requirements?

The 3B model requires 8GB of GPU memory and the 7B requires 16GB. For the 32B and 72B, a professional device with 24GB+ of GPU memory is recommended.

- How are very long videos handled?

The model samples video at dynamic frame rates, which lets it understand hours-long videos and pinpoint events with second-level precision.
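The idea behind frame-rate-limited sampling can be illustrated with a small sketch. This is not the library's actual algorithm: it only shows how a frame cap forces a lower effective frame rate on long videos. The function name and the cap of 768 frames are arbitrary choices for this example.

```python
def sample_timestamps(duration_s: float, target_fps: float, max_frames: int) -> list:
    """Pick evenly spaced timestamps, lowering the frame rate if the cap is hit."""
    if duration_s <= 0 or target_fps <= 0 or max_frames <= 0:
        return []
    wanted = max(1, int(duration_s * target_fps))
    count = min(wanted, max_frames)
    step = duration_s / count  # effective seconds between sampled frames
    # Sample the middle of each interval so no timestamp exceeds the duration.
    return [round((i + 0.5) * step, 3) for i in range(count)]


short_clip = sample_timestamps(10, target_fps=2.0, max_frames=768)
long_video = sample_timestamps(3600, target_fps=2.0, max_frames=768)
print(len(short_clip), len(long_video))
```

In this sketch a 10-second clip keeps the requested rate, while a one-hour video is capped and therefore sampled several seconds apart.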

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
