Qwen2.5-Max, built on the MoE architecture, outperforms DeepSeek V3

Model overview

In recent years, training large models on the Mixture of Experts (MoE) architecture has become an important research direction in artificial intelligence. The Qwen team recently released Qwen2.5-Max, which was pre-trained on more than 20 trillion tokens and refined with a carefully designed post-training scheme, marking significant progress in applying the MoE architecture at scale. The model is now available through the API or on the Qwen Chat platform.

Technical characteristics

1. Innovations in model architecture

- Mixture-of-Experts optimization: a dynamic routing mechanism allocates computing resources efficiently

- Multimodal scalability: Supports multiple types of inputs and outputs such as text, structured data, etc.

- Context enhancement: the maximum input is 30,720 tokens, and the model can generate up to 8,192 tokens of continuous text.
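The article does not describe how Qwen2.5-Max's router actually works, so the following is only a generic sketch of top-k gating, a common way to implement dynamic routing in MoE layers. All names here (`route_top_k`, `moe_forward`, the toy experts and gate weights) are hypothetical and chosen for illustration.

```python
import math
from typing import Callable, List, Sequence, Tuple

# An expert maps an input vector to an output vector of the same length.
Expert = Callable[[Sequence[float]], List[float]]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights."""
    if not 1 <= k <= len(gate_logits):
        raise ValueError("k must be between 1 and the number of experts")
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(
    x: Sequence[float],
    gate_weights: Sequence[Sequence[float]],
    experts: Sequence[Expert],
    k: int = 2,
) -> List[float]:
    """Run only the selected experts and mix their outputs by gate weight."""
    # Assumed linear router: one dot product per expert gives its logit.
    logits = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    output = [0.0] * len(x)
    for idx, weight in route_top_k(logits, k):
        expert_out = experts[idx](x)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output


# Toy setup: four experts that simply scale their input by 1, 2, 3, or 4.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
y = moe_forward([2.0, 1.0], gate_weights, experts, k=2)
```

Only the two selected experts are evaluated for this input, which is where the compute savings of sparse MoE layers come from: the parameter count grows with the number of experts, while per-token cost grows only with `k`.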

2. Core functional matrix

| Capability | Technical details |
|---|---|
| Multi-language support | Covers 29 languages (including Chinese, English, French, and Spanish) |
| Computation | Complex math operations and code generation |
| Structured processing | JSON and table data generation and parsing |
| Contextual understanding | Continuous long-text generation up to 8K tokens |
| Application adaptability | Dialogue systems, data analysis, knowledge-based reasoning |
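For the structured-processing capability, an application usually has to turn the model's text reply back into data. The sketch below shows one possible way to do that with Python's standard `json` module. The helper name `extract_json` and the sample reply are made up for illustration and are not output from Qwen2.5-Max.

```python
import json
from typing import Any


def extract_json(reply: str) -> Any:
    """Parse the first JSON object found in a model reply.

    Replies sometimes wrap JSON in extra prose, so we try each "{" in turn
    and let the decoder consume one complete JSON value from that position.
    """
    decoder = json.JSONDecoder()
    start = reply.find("{")
    while start != -1:
        try:
            value, _end = decoder.raw_decode(reply, start)
            return value
        except json.JSONDecodeError:
            # Not valid JSON from here; move on to the next candidate brace.
            start = reply.find("{", start + 1)
    raise ValueError("no JSON object found in reply")


# Hypothetical reply text, used only to exercise the helper.
sample_reply = 'Here is the result: {"language": "French", "tokens": 128} Hope that helps.'
parsed = extract_json(sample_reply)
```

Raising `ValueError` when nothing parses lets the caller decide whether to retry the request or fall back to plain text.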

Performance Evaluation

Instruct Model Comparison

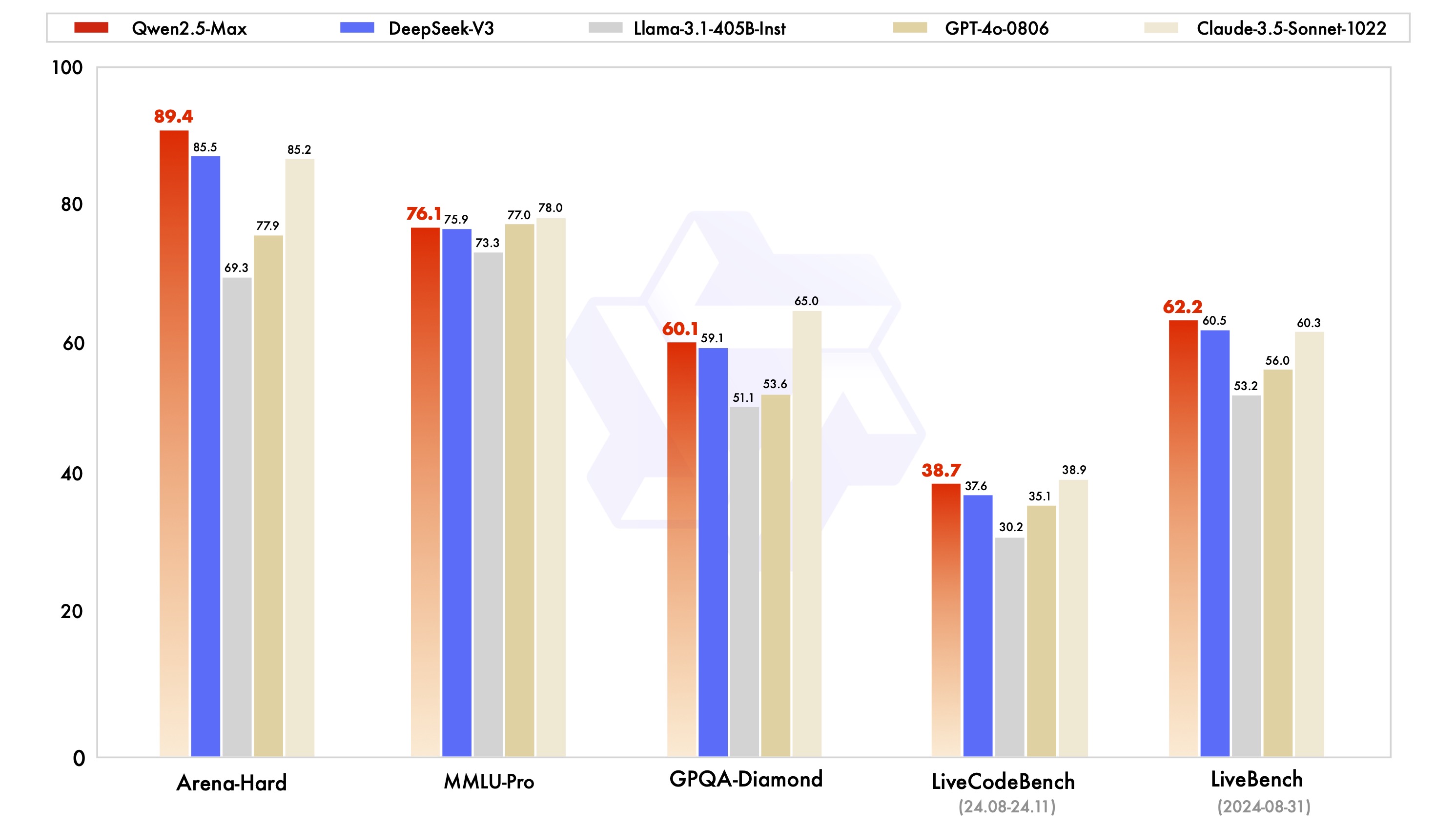

Qwen2.5-Max is strongly competitive on benchmarks such as MMLU-Pro (college-level knowledge), LiveCodeBench (coding ability), and Arena-Hard (approximating human preferences):

The test data show that the model outperforms DeepSeek V3 on coding ability (LiveCodeBench) and comprehensive capability (LiveBench), and ranks among the top models on the GPQA-Diamond higher-order reasoning test.

Base Model Comparison

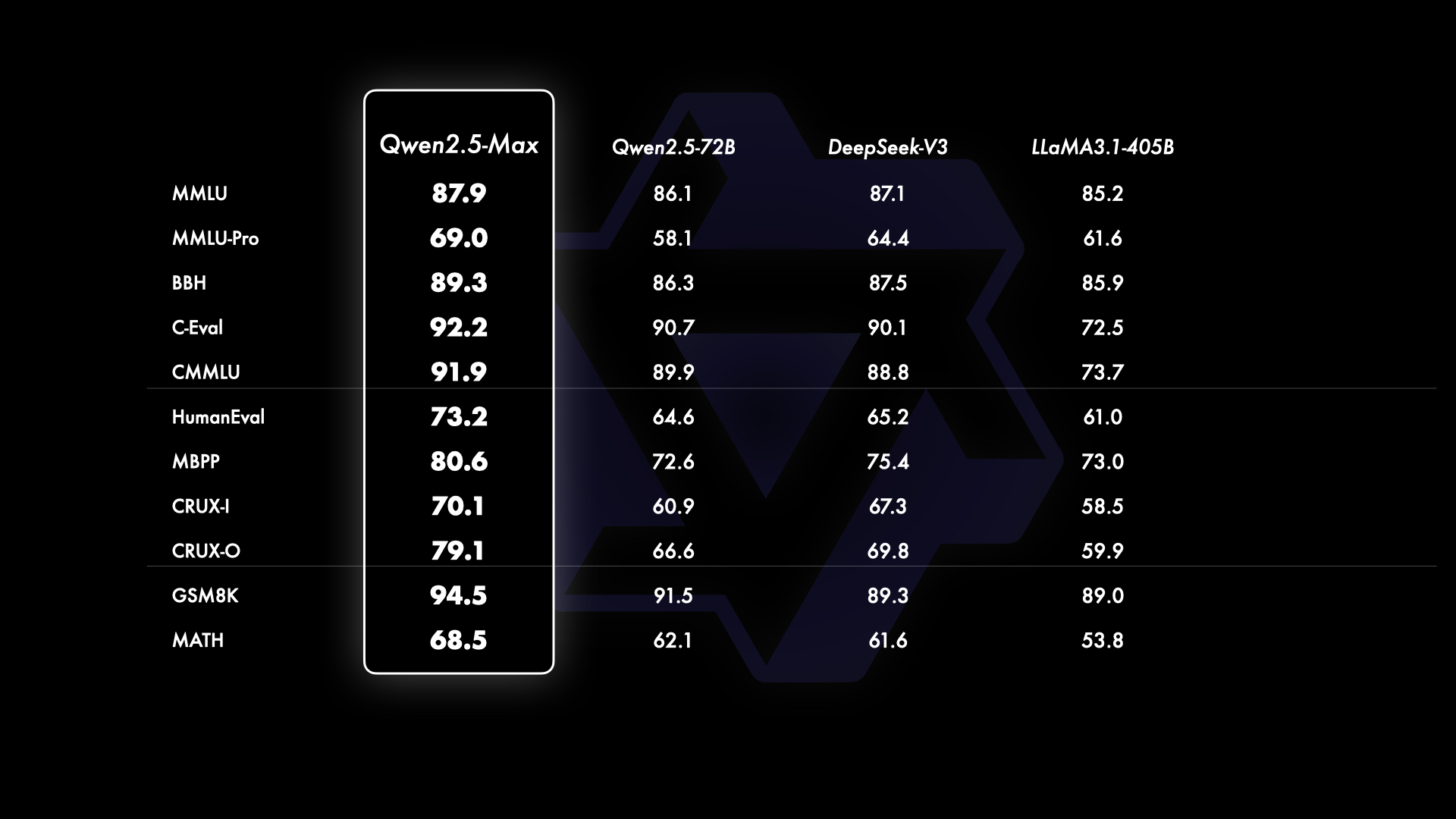

Compared with mainstream open-source models, Qwen2.5-Max also shows advantages at the base-model level:

Against Llama-3.1-405B (405B parameters) and Qwen2.5-72B (72B parameters), Qwen2.5-Max leads on most benchmarks, which supports the effectiveness of the MoE architecture for model scaling.

Access and Use

1. Cloud API access

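from openai import OpenAI
import os

# Read the API key from the environment rather than hard-coding it.
client = OpenAI(
    api_key=os.getenv("API_KEY"),
    base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
)

response = client.chat.completions.create(
    model="qwen-max-2025-01-25",
    messages=[
        {'role': 'system', 'content': 'Set the AI assistant role'},
        {'role': 'user', 'content': 'Enter your query'},
    ],
)

# Print the assistant's reply text.
print(response.choices[0].message.content)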
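When the call above is reused across an application, it can help to build the request arguments in one place and keep the requested output length within the 8,192-token generation limit stated in this article. The following is a minimal sketch under that assumption. `build_request` and `MAX_OUTPUT_TOKENS` are hypothetical names, and the default of 1,024 tokens is an arbitrary choice.

```python
from typing import Dict

# Generation limit as stated in this article (assumed to apply to this model).
MAX_OUTPUT_TOKENS = 8192


def build_request(
    system_prompt: str,
    user_prompt: str,
    max_tokens: int = 1024,
    model: str = "qwen-max-2025-01-25",
) -> Dict[str, object]:
    """Build keyword arguments for client.chat.completions.create()."""
    if max_tokens < 1:
        raise ValueError("max_tokens must be positive")
    messages = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]
    return {
        "model": model,
        "messages": messages,
        # Clamp the requested length to the stated output limit.
        "max_tokens": min(max_tokens, MAX_OUTPUT_TOKENS),
    }


kwargs = build_request("You are a helpful assistant.", "Summarize MoE in one sentence.")
# With a configured client, the request would then be sent as:
# response = client.chat.completions.create(**kwargs)
```

Keeping the builder free of network calls makes it easy to unit-test separately from the API client.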

2. Interactive experience

- Visit the Hugging Face demo Space

- Click the Run button to load the model

- Interact in real time through the text input box

3. Enterprise-level deployment

- Register an Alibaba Cloud (Aliyun) account

- Activate the large model service platform

- Create an API key for system integration
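Once a key has been created, an integration typically reads it from the environment instead of embedding it in source code. A minimal sketch follows. The helper name `load_api_key` is hypothetical, and `API_KEY` simply mirrors the variable name used in the API example in this article.

```python
import os


def load_api_key(env_var: str = "API_KEY") -> str:
    """Return the API key from the environment, failing fast if it is unset."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"environment variable {env_var} is not set")
    return key
```

Failing at startup with a clear message is usually easier to diagnose than an authentication error returned later by the service.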

Direction of technology evolution

The current version is being continuously optimized in the following areas:

- Strategies for improving post-training data quality

- Efficiency optimization for multi-expert collaboration

- Low-resource inference acceleration

- Development of multimodal extension interfaces

Future outlook

Continuously scaling up data and model parameters can effectively improve a model's level of intelligence. Going forward, beyond scaling pre-training, we will invest heavily in scaling reinforcement learning, in the hope of achieving intelligence that surpasses humans and of driving AI to explore uncharted territory.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
