Qwen2.5-Coder Full Series: Powerful, Versatile, and Practical

Summary

Today, we are pleased to open-source the full Qwen2.5-Coder series of powerful, versatile, and practical models, and we remain committed to advancing the development of open CodeLLMs.

- Powerful: Qwen2.5-Coder-32B-Instruct has become the current SOTA open-source code model, with coding abilities that match GPT-4o. It shows strong and comprehensive coding skills along with good general and mathematical abilities.

- Versatile: Qwen2.5-Coder now covers six mainstream model sizes to meet the needs of different developers. Last month we open-sourced the 1.5B and 7B sizes; this release adds the 0.5B, 3B, 14B, and 32B sizes.

- Practical: We explore how useful Qwen2.5-Coder is in two scenarios, code assistants and Artifacts, and use several examples to demonstrate its potential in real-world applications.

Powerful: code capabilities reach open-source SOTA

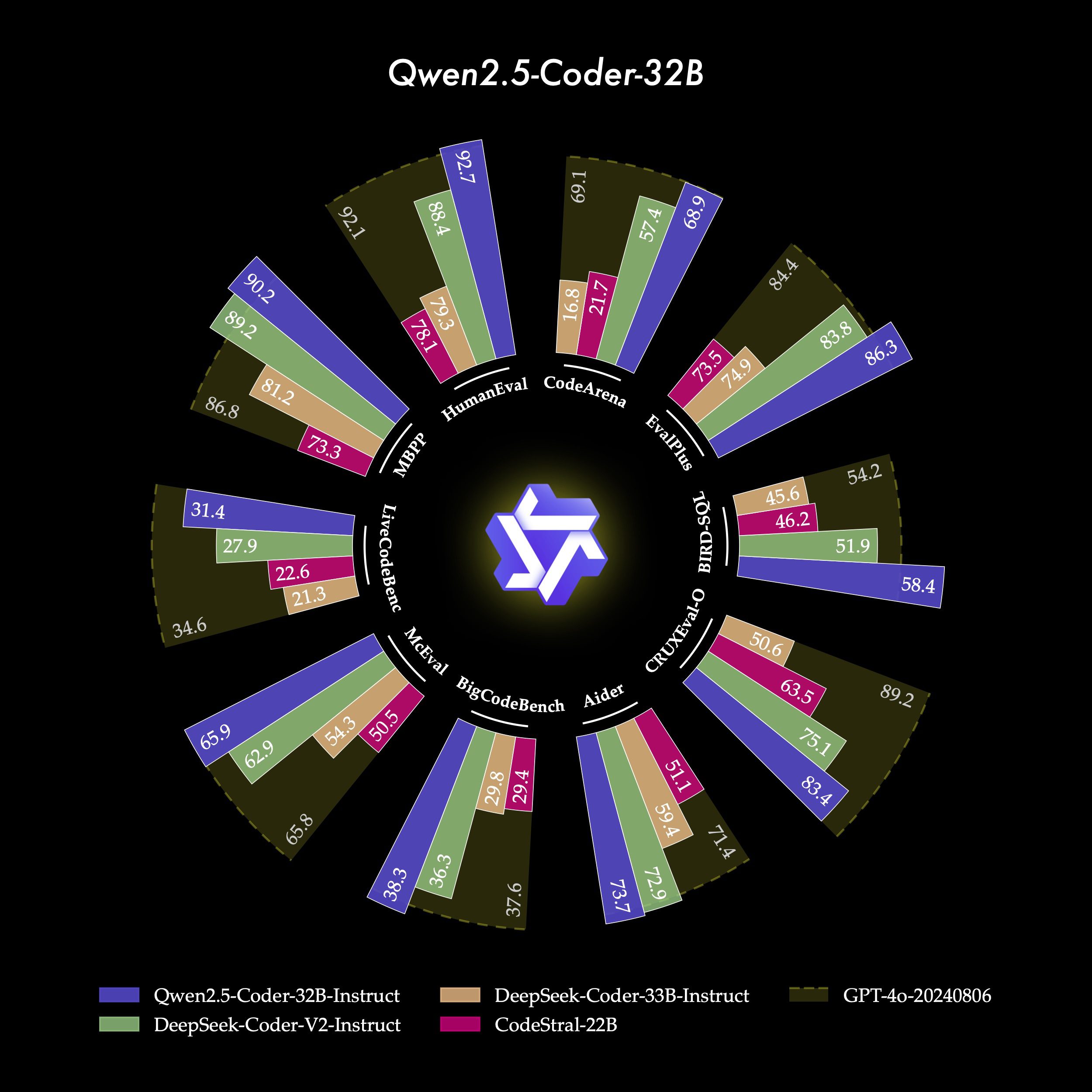

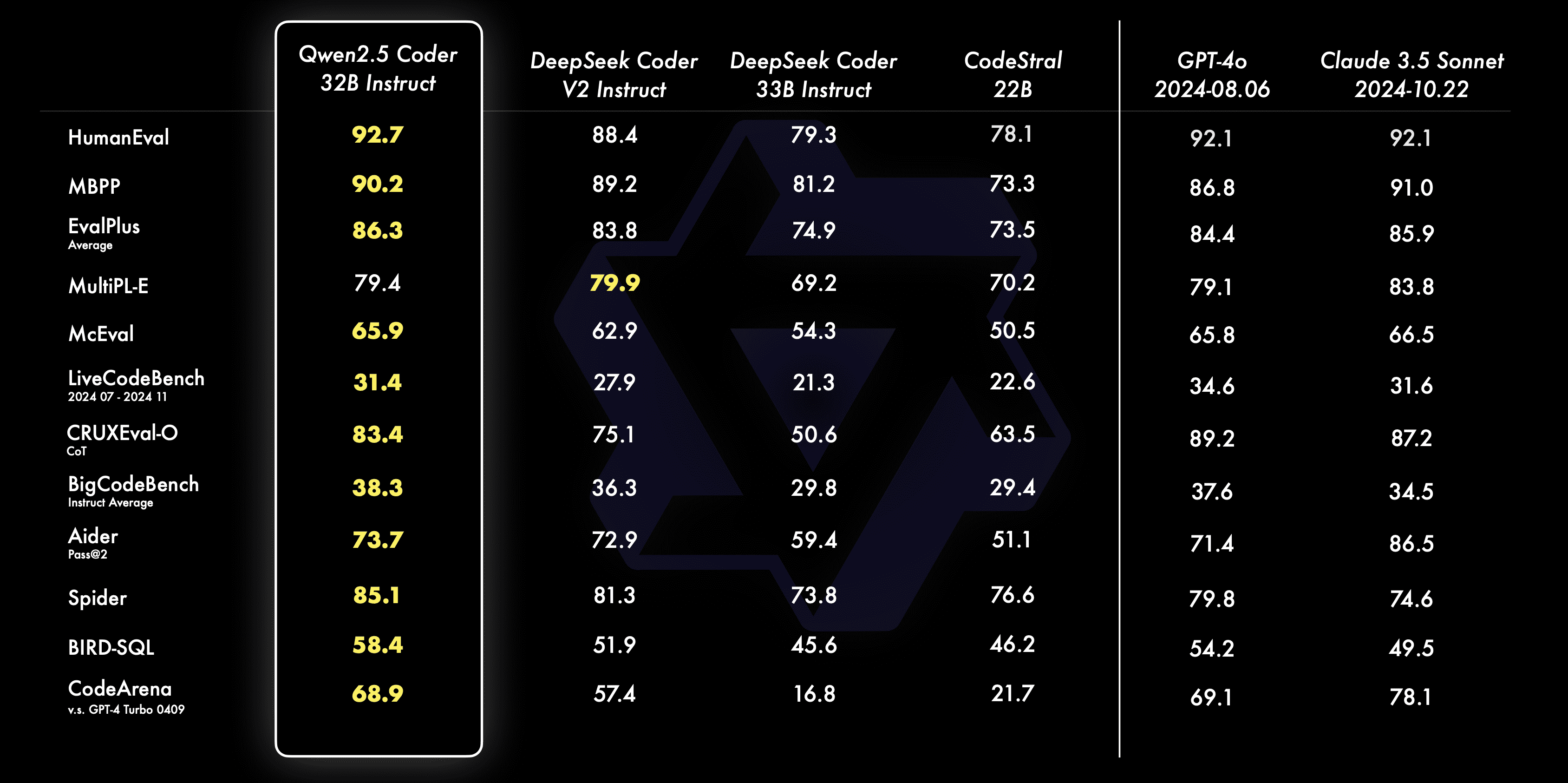

- Code generation: Qwen2.5-Coder-32B-Instruct, the flagship model of this release, achieves the best performance among open-source models on several popular code generation benchmarks (such as EvalPlus, LiveCodeBench, and BigCodeBench) and performs competitively with GPT-4o.

- Code repair: Code repair is an important programming skill. Qwen2.5-Coder-32B-Instruct can help users fix errors in their code, making programming more efficient. On Aider, a popular code repair benchmark, Qwen2.5-Coder-32B-Instruct scores 73.7, performing on par with GPT-4o.

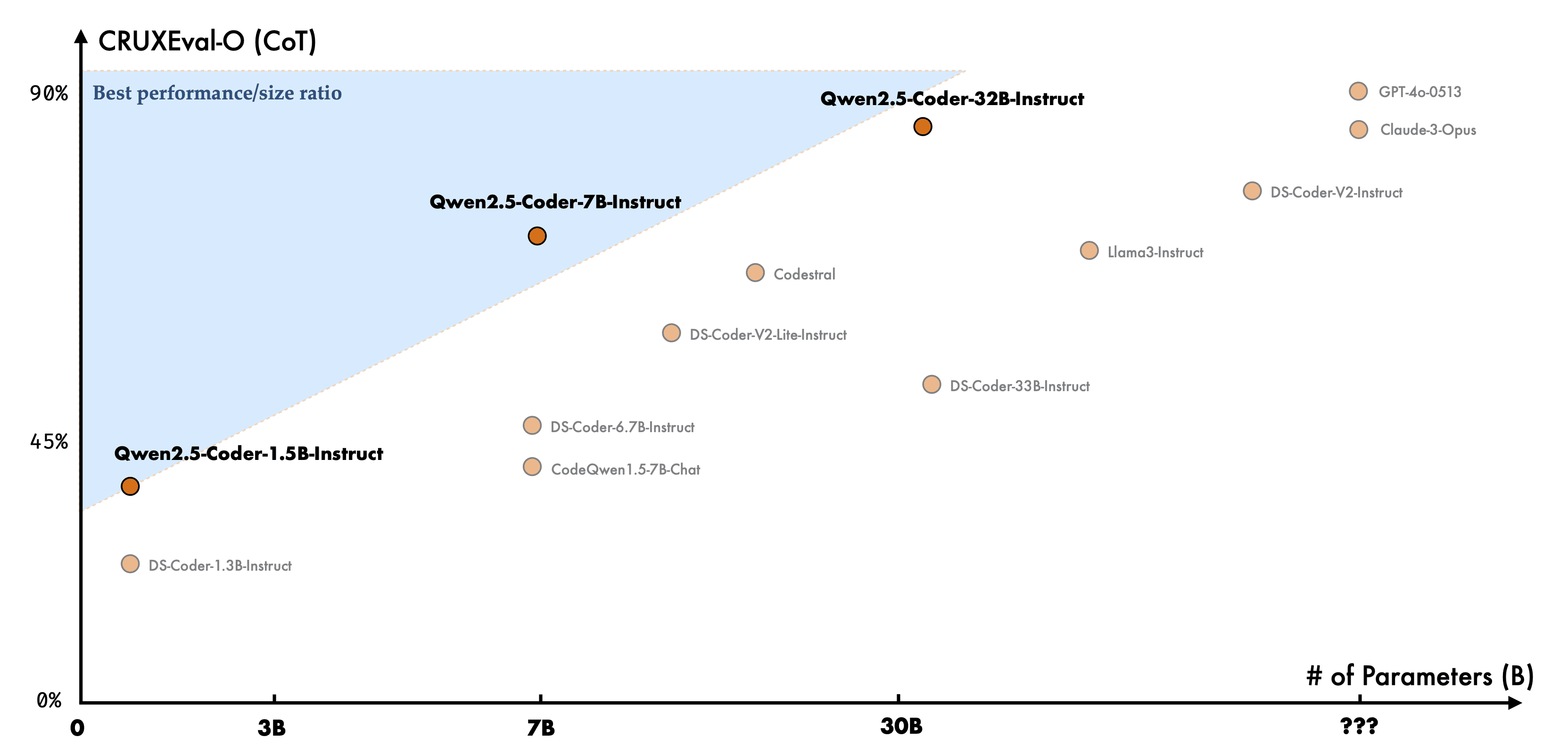

- Code reasoning: Code reasoning is a model's ability to learn how code executes and to accurately predict a program's inputs and outputs. Qwen2.5-Coder-7B-Instruct, released last month, already showed good code reasoning performance, and the 32B model improves on it further.
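To make the code reasoning task concrete: an output-prediction item pairs a short program with an input and asks the model for the result, while an input-prediction item gives an output and asks for an input that produces it. The minimal sketch below scores both kinds of prediction by actually running the program. The program, the entry-point name `f`, and the helper names are hypothetical illustrations, not the format of any specific benchmark, and real evaluations run such code in a sandbox.

```python
from typing import Any

# Hypothetical reasoning item: a short program whose entry point is `f`.
PROGRAM = """
def f(nums):
    seen = set()
    out = []
    for n in nums:
        if n not in seen:
            seen.add(n)
            out.append(n * 2)
    return out
"""
TEST_INPUT = [3, 1, 3, 2, 1]


def run_program(source: str, arg: Any) -> Any:
    """Execute the program source and call its entry point `f` on `arg`."""
    namespace: dict[str, Any] = {}
    exec(source, namespace)  # acceptable for this trusted toy program only
    return namespace["f"](arg)


def score_output_prediction(source: str, arg: Any, predicted_output: Any) -> bool:
    """Correct only if the predicted output equals the real execution result."""
    return run_program(source, arg) == predicted_output


def score_input_prediction(source: str, predicted_input: Any, expected_output: Any) -> bool:
    """Correct if running the program on the predicted input yields the given output."""
    return run_program(source, predicted_input) == expected_output


# A model that traces the loop correctly should predict [6, 2, 4].
print(score_output_prediction(PROGRAM, TEST_INPUT, [6, 2, 4]))
print(score_input_prediction(PROGRAM, [3, 1, 2], [6, 2, 4]))
```

Checking input predictions by execution rather than string comparison matters because many different inputs can produce the same output.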

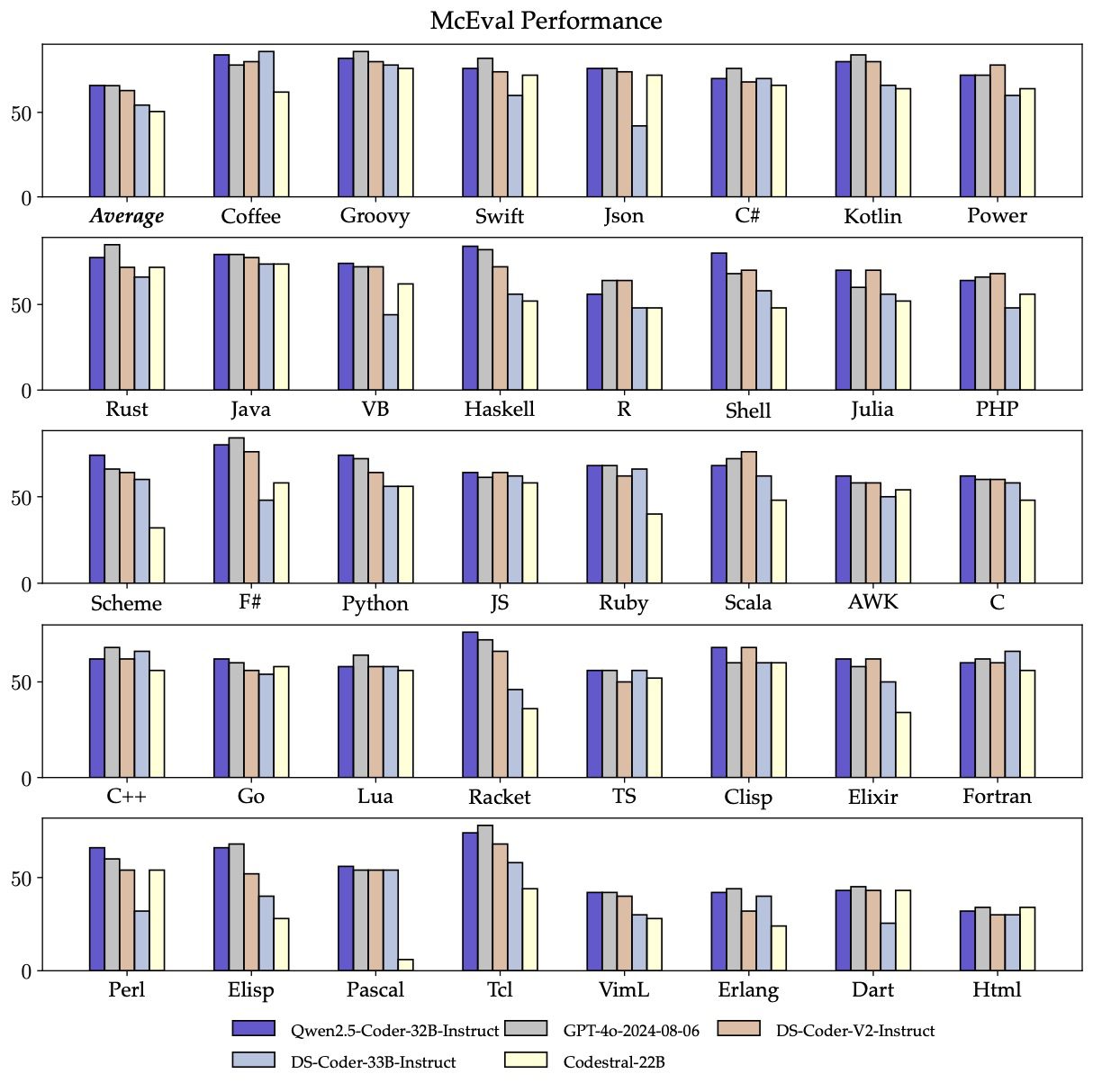

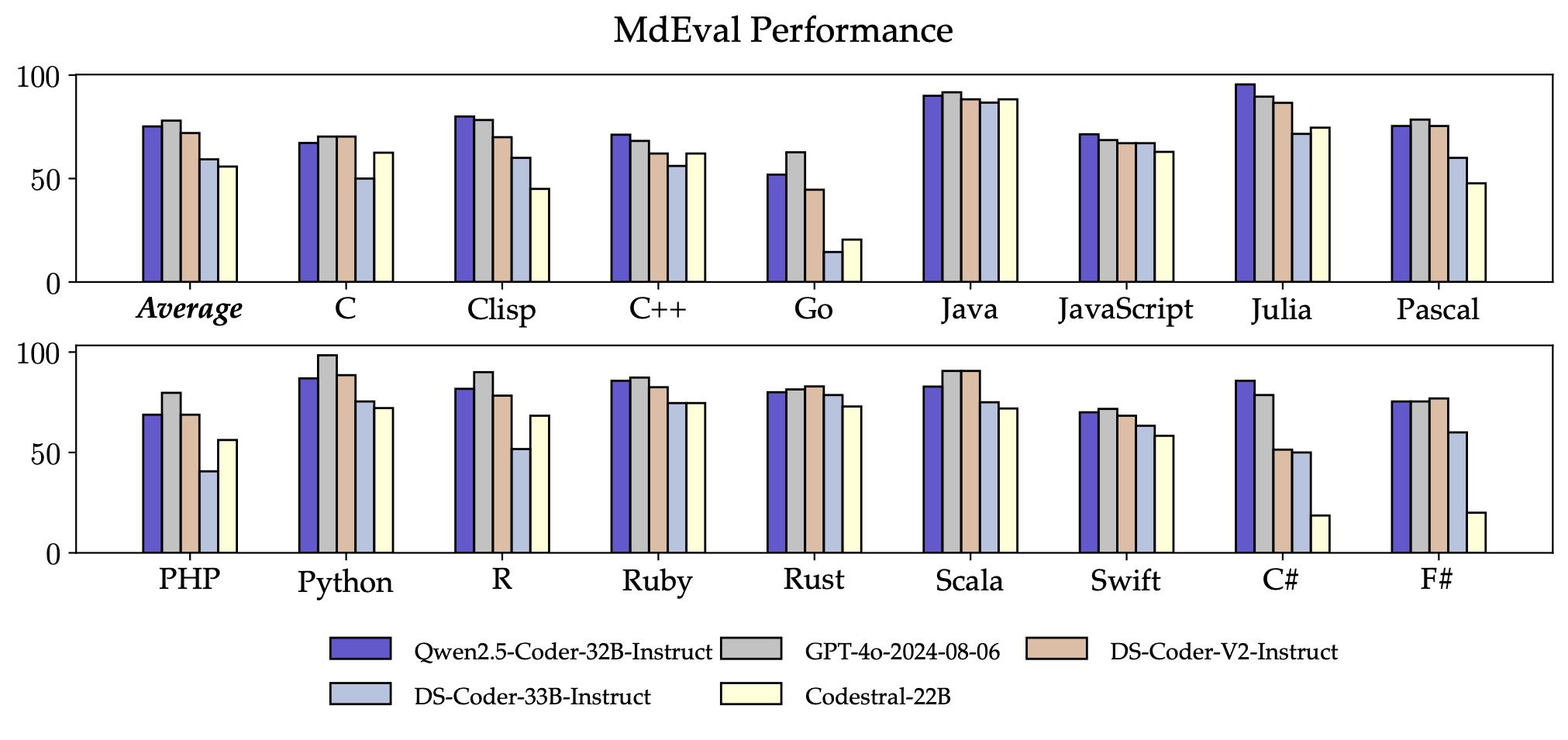

- Multiple programming languages: An intelligent programming assistant should be familiar with all programming languages. Qwen2.5-Coder-32B-Instruct performs well on more than 40 programming languages, scoring 65.9 on McEval, with impressive results on languages such as Haskell and Racket, thanks to our distinctive data cleaning and data-mixing ratios during pre-training.

In addition, Qwen2.5-Coder-32B-Instruct shows surprisingly strong code repair ability across multiple programming languages. This helps users understand and modify code in languages they already know and greatly lowers the cost of learning unfamiliar ones.

Similar to McEval, MdEval is a multilingual code repair benchmark. Qwen2.5-Coder-32B-Instruct scores 75.2 on MdEval, the highest score among all open-source models.

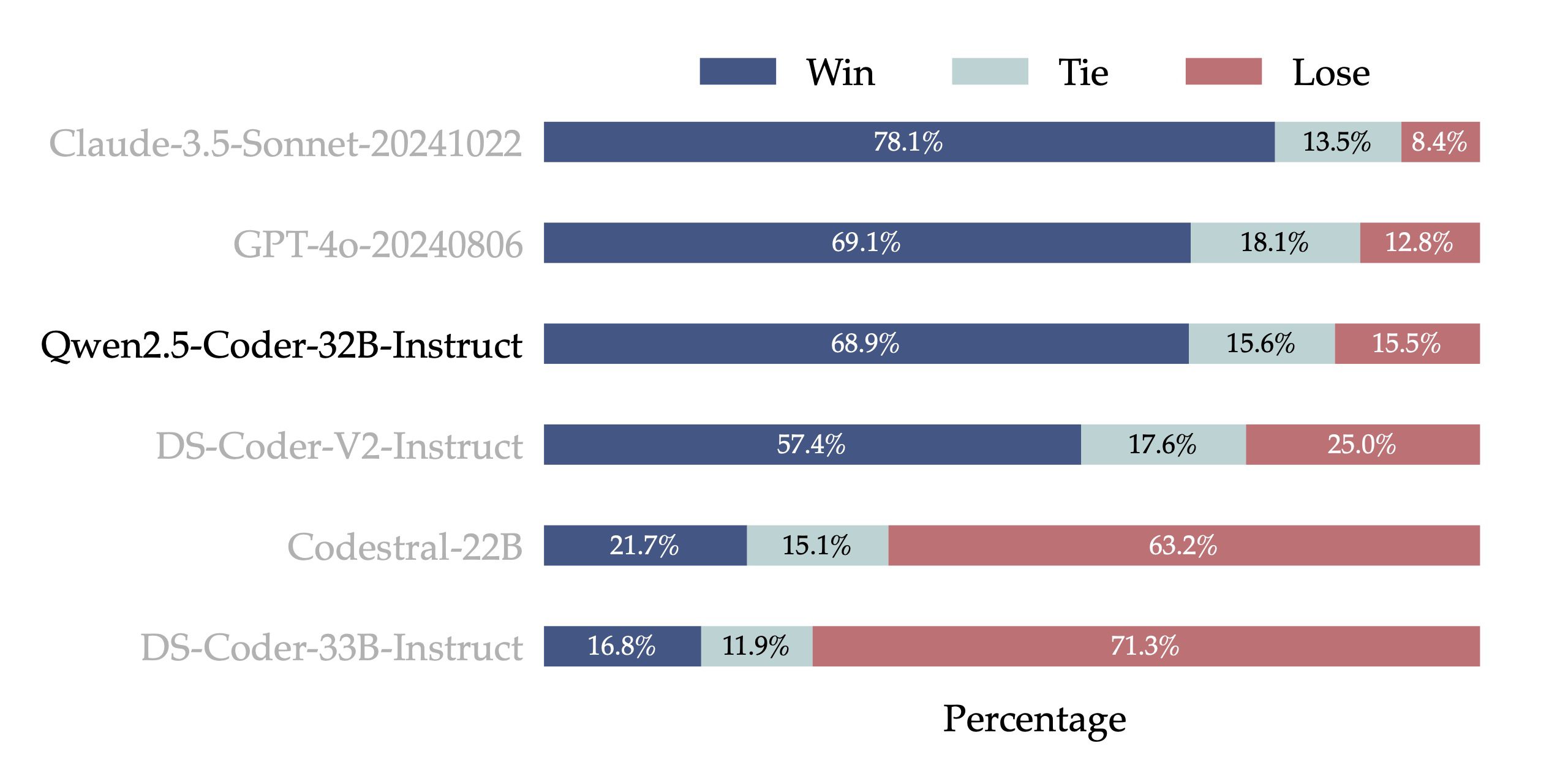

- Human preference alignment: To evaluate how well Qwen2.5-Coder-32B-Instruct aligns with human preferences, we built Code Arena (similar to Arena Hard), a benchmark for code preferences based on internal annotations. We use GPT-4o as the judge model with an "A vs. B" win-rate metric, i.e., the percentage of test-set instances on which model A's score exceeds model B's. The results show Qwen2.5-Coder-32B-Instruct's advantage in preference alignment.
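The "A vs. B" win rate defined above reduces to a simple count. The sketch below assumes the judge has already assigned a numeric score to each model's answer on every instance; the scores are made up, and counting ties as non-wins is our reading of "exceeds", not a documented Code Arena rule.

```python
from typing import Sequence


def win_rate(scores_a: Sequence[float], scores_b: Sequence[float]) -> float:
    """Percentage of instances on which model A's judged score exceeds model B's.

    Ties count as non-wins for A (an assumption based on the "exceeds" wording).
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("both models must be scored on the same instances")
    if not scores_a:
        raise ValueError("need at least one instance")
    wins = sum(1 for a, b in zip(scores_a, scores_b) if a > b)
    return 100.0 * wins / len(scores_a)


# Hypothetical judge scores for five instances (not real Code Arena data).
model_a_scores = [8, 7, 9, 5, 6]
model_b_scores = [6, 7, 8, 6, 4]
print(win_rate(model_a_scores, model_b_scores))  # 60.0
```

Here A wins on three of the five instances, ties on one, and loses on one, giving a 60.0% win rate.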

Versatile: a rich range of model sizes

The open-source Qwen2.5-Coder family comes in six sizes: 0.5B, 1.5B, 3B, 7B, 14B, and 32B. This range meets the needs of developers with different resource budgets and also gives the research community a good testbed for experiments. The table below lists detailed model information:

| Model | Params | Non-Embedding Params | Layers | Heads (Q / KV) | Tie Embedding | Context Length | License |
|---|---|---|---|---|---|---|---|
| Qwen2.5-Coder-0.5B | 0.49B | 0.36B | 24 | 14 / 2 | Yes | 32K | Apache 2.0 |
| Qwen2.5-Coder-1.5B | 1.54B | 1.31B | 28 | 12 / 2 | Yes | 32K | Apache 2.0 |
| Qwen2.5-Coder-3B | 3.09B | 2.77B | 36 | 16 / 2 | Yes | 32K | Qwen Research |
| Qwen2.5-Coder-7B | 7.61B | 6.53B | 28 | 28 / 4 | No | 128K | Apache 2.0 |
| Qwen2.5-Coder-14B | 14.7B | 13.1B | 48 | 40 / 8 | No | 128K | Apache 2.0 |
| Qwen2.5-Coder-32B | 32.5B | 31.0B | 64 | 40 / 8 | No | 128K | Apache 2.0 |
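A few useful quantities follow directly from the table with simple arithmetic: the embedding parameter count is the total minus the non-embedding count, and dividing the first head count by the second gives how many query heads share each key/value head. The sketch below only re-uses the published numbers; the `ModelSpec` record and its field names are our own illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    name: str
    params_b: float          # total parameters, in billions (from the table)
    non_emb_params_b: float  # non-embedding parameters, in billions (from the table)
    q_heads: int             # query attention heads (first number in the heads column)
    kv_heads: int            # key/value heads (second number in the heads column)

    @property
    def emb_params_b(self) -> float:
        # Embedding parameters = total parameters minus non-embedding parameters.
        return self.params_b - self.non_emb_params_b

    @property
    def emb_share(self) -> float:
        # Fraction of all parameters that sit in the embeddings.
        return self.emb_params_b / self.params_b

    @property
    def q_per_kv(self) -> int:
        # How many query heads share each key/value head.
        return self.q_heads // self.kv_heads


SPECS = [
    ModelSpec("Qwen2.5-Coder-0.5B", 0.49, 0.36, 14, 2),
    ModelSpec("Qwen2.5-Coder-1.5B", 1.54, 1.31, 12, 2),
    ModelSpec("Qwen2.5-Coder-3B", 3.09, 2.77, 16, 2),
    ModelSpec("Qwen2.5-Coder-7B", 7.61, 6.53, 28, 4),
    ModelSpec("Qwen2.5-Coder-14B", 14.7, 13.1, 40, 8),
    ModelSpec("Qwen2.5-Coder-32B", 32.5, 31.0, 40, 8),
]

for spec in SPECS:
    print(
        f"{spec.name:<20} embeddings ~{spec.emb_params_b:.2f}B "
        f"({spec.emb_share:.1%} of total), {spec.q_per_kv} query heads per KV head"
    )
```

Because the table's figures are rounded, the derived values are approximate. They show the embeddings taking up roughly a quarter of the 0.5B model but under 5% of the 32B model, which is one reason the smaller models tie their input and output embeddings.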

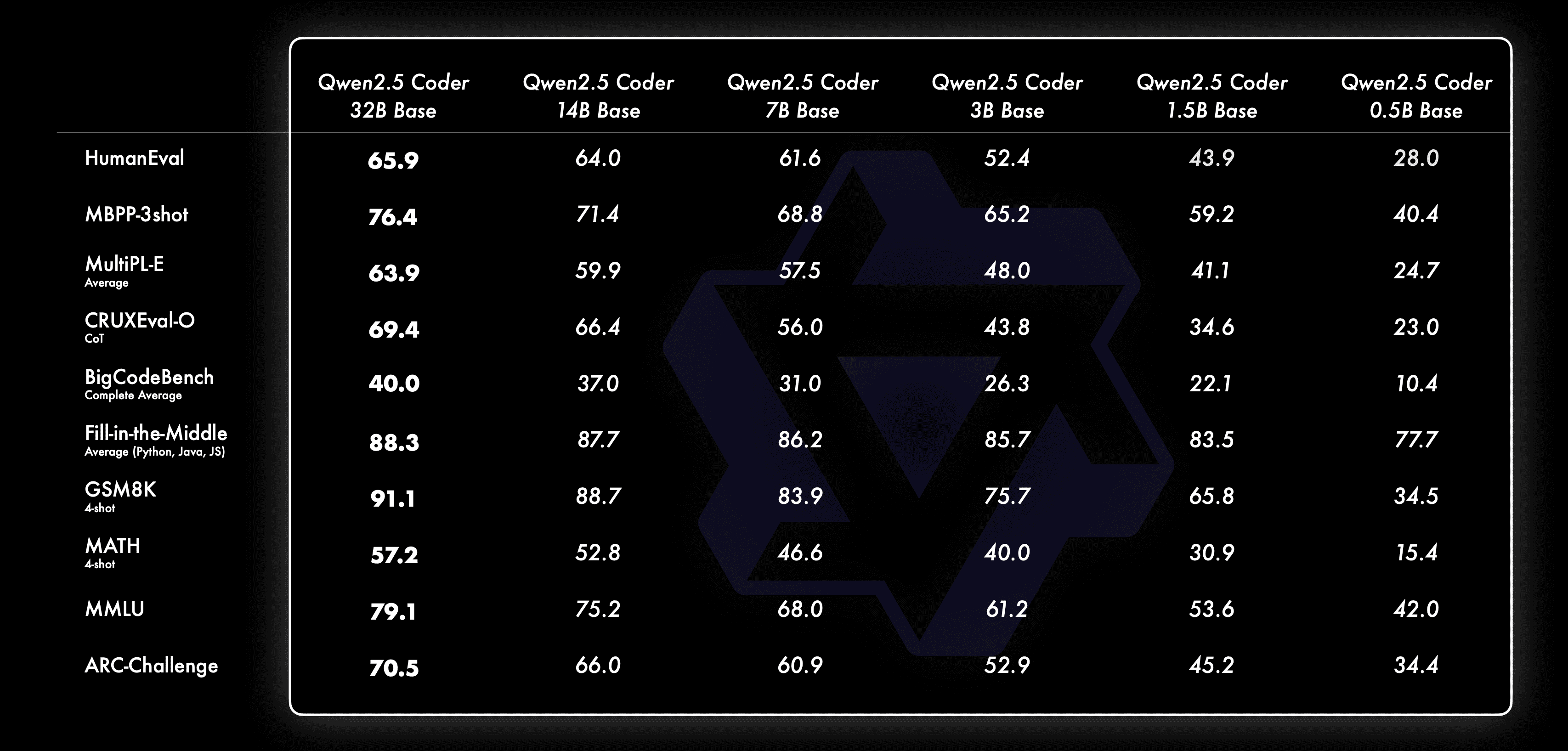

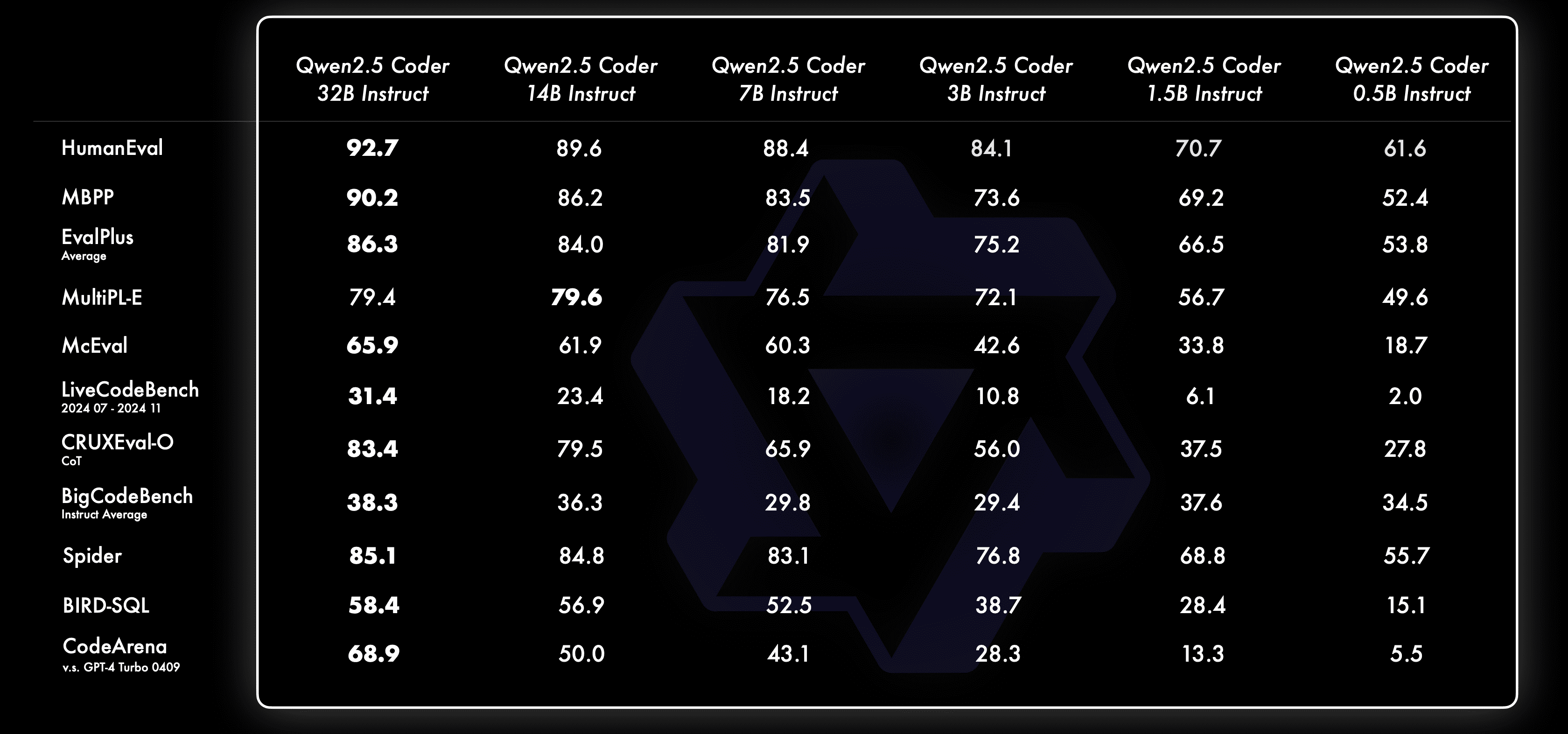

We have always believed in the philosophy of scaling laws. We evaluated Qwen2.5-Coder models of every size on all datasets to verify the effectiveness of scaling for code LLMs.

For each size, we open-source both Base and Instruct models: the Base model serves as a foundation for developers to fine-tune their own models, and the Instruct model is the official aligned model, ready for direct chat.

Here is how the Base models perform at different sizes:

Here is how the Instruct models perform at different sizes:

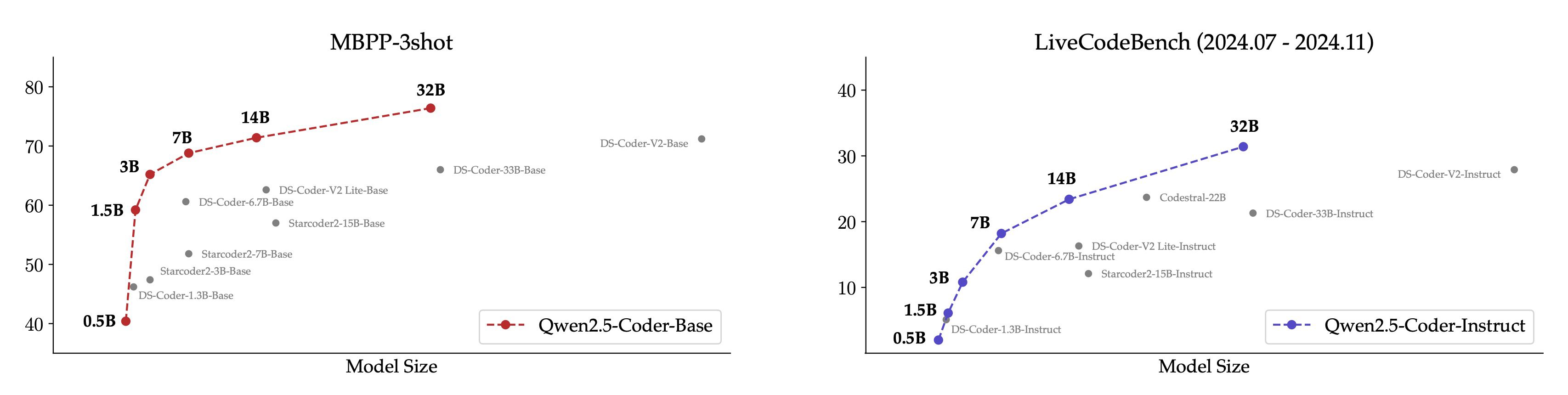

For a more intuitive view, we compare Qwen2.5-Coder models of different sizes with other open-source models on the core datasets.

- For the Base models, we use MBPP-3shot as the evaluation metric. Our extensive experiments show that MBPP-3shot is better suited to evaluating Base models and correlates well with their real-world performance.

- For the Instruct models, we use LiveCodeBench problems from the last four months (2024.07 - 2024.11). These recently published problems are unlikely to have leaked into the training data, so they reflect the model's out-of-distribution (OOD) capability.
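The contamination argument for LiveCodeBench rests on filtering problems by release date. A minimal sketch of that filter follows; the `Problem` record, the problem IDs, and the dates are hypothetical, and the exact window boundaries (start inclusive, end exclusive, 2024-07-01 to 2024-11-01) are our assumption, since the text only gives "2024.07 - 2024.11".

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List


@dataclass(frozen=True)
class Problem:
    problem_id: str     # hypothetical identifier
    release_date: date  # date the problem was first published


def in_eval_window(problems: Iterable[Problem], start: date, end: date) -> List[Problem]:
    """Keep problems released in [start, end).

    Problems published after a model's training data was collected are
    unlikely to appear in that data, so they probe out-of-distribution ability.
    """
    return [p for p in problems if start <= p.release_date < end]


# Illustrative problems only; not real LiveCodeBench entries.
problems = [
    Problem("p1", date(2024, 5, 20)),
    Problem("p2", date(2024, 7, 3)),
    Problem("p3", date(2024, 9, 15)),
    Problem("p4", date(2024, 12, 5)),
]
window = in_eval_window(problems, date(2024, 7, 1), date(2024, 11, 1))
print([p.problem_id for p in window])  # ['p2', 'p3']
```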

As expected, model size and performance are positively correlated, and Qwen2.5-Coder achieves SOTA performance at every size. These results encourage us to keep exploring larger Coder models.

Practical: Meet Cursor and Artifacts

A practical Coder has always been our vision. To this end, we have explored the practical application of the Qwen2.5-Coder model in the context of code assistants and Artifacts.

Qwen2.5-Coder 🤝 Cursor

Intelligent code assistants are already widely used, but they currently rely mostly on closed-source models. We hope Qwen2.5-Coder will give developers a friendly and powerful alternative.

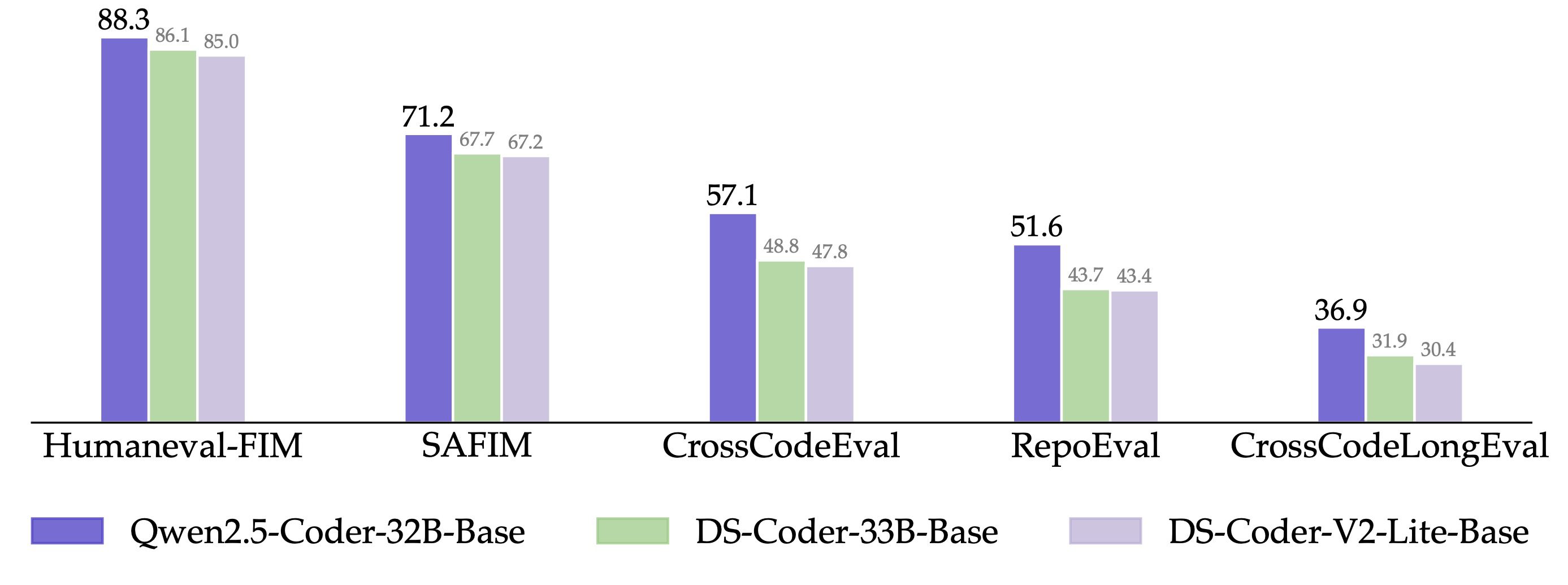

In addition, the pre-trained (Base) Qwen2.5-Coder-32B model demonstrates strong code completion ability, achieving SOTA performance on five benchmarks: HumanEval-Infilling, CrossCodeEval, CrossCodeLongEval, RepoEval, and SAFIM.

To keep the comparison fair, we cap the maximum sequence length at 8K and test in Fill-in-the-Middle (FIM) mode. On four of the benchmarks (CrossCodeEval, CrossCodeLongEval, RepoEval, and HumanEval-Infilling), we measure whether the generated content exactly matches the ground-truth label (Exact Match); on SAFIM, we use the single-attempt execution success rate (Pass@1).
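The FIM setup and the two metrics described above can be sketched in a few lines. The special tokens below are the ones given in the Qwen2.5-Coder model card for prefix-suffix-middle prompting; whether the benchmark harness used exactly this layout, and whether it strips surrounding whitespace before comparing, are assumptions. Truncation to the 8K limit happens at the token level and is not shown.

```python
from typing import Sequence

# FIM special tokens as documented for Qwen2.5-Coder; other models use different tokens.
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Place the code before and after the gap; the model generates the middle after FIM_MIDDLE."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


def exact_match(predictions: Sequence[str], references: Sequence[str]) -> float:
    """Fraction of completions identical to the ground-truth middle.

    Stripping leading/trailing whitespace is an assumption; harnesses differ here.
    """
    if len(predictions) != len(references) or not references:
        raise ValueError("need equal-length, non-empty prediction and reference lists")
    hits = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return hits / len(references)


def pass_at_1(passed: Sequence[bool]) -> float:
    """With one sample per problem, Pass@1 is the share of problems whose sample passes its tests."""
    if not passed:
        raise ValueError("need at least one result")
    return sum(passed) / len(passed)


prompt = build_fim_prompt("def add(a, b):\n    ", "\n\nprint(add(2, 3))\n")
print(prompt)
print(exact_match(["return a + b", "return a - b "], ["return a + b", "return a * b"]))  # 0.5
print(pass_at_1([True, False, True, True]))  # 0.75
```

Exact Match is strict: a completion that is functionally correct but formatted differently scores zero, which is why execution-based Pass@1 is used where tests are available, as with SAFIM.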

Qwen2.5-Coder 🤝 Artifacts

Artifacts is one of the most important applications of code generation, helping users create content suited to visualization. We chose Open WebUI to explore Qwen2.5-Coder's potential in Artifacts scenarios. Here are some concrete examples:

Code mode will soon go live on Tongyi's official website, https://tongyi.aliyun.com, supporting all kinds of visual applications such as generating a website from a single sentence, mini-games, and data charts. You are welcome to try it!

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
