Simple, effective RAG retrieval strategy: sparse + dense hybrid search and rearrangement, and use "cue caching" to generate overall document-relevant context for text chunks.

In order for an AI model to be useful in a particular scenario, it usually needs access to background knowledge. For example, a customer support chatbot needs to understand the specific business it serves, while a legal analysis bot needs to have access to a large number of past cases.

Developers often augment the knowledge of AI models using Retrieval-Augmented Generation (RAG), a method of retrieving relevant information from a knowledge base and attaching it to user prompts to significantly improve the responsiveness of the model. The problem is that traditional RAG Programs lose context when encoding information, which often results in the system being unable to retrieve relevant information from the knowledge base.

In this paper, we outline an approach that can significantly improve the retrieval step in RAG. This approach is called Contextual Retrieval and uses two subtechniques: Contextual Embeddings and Contextual BM25. This approach reduces the number of retrieval failures by 491 TP3T, and 671 TP3T when combined with reranking. these improvements dramatically increase retrieval accuracy, which directly translates into improved performance on downstream tasks.

The essence is a mixture of semantically similar and word frequency similar results, sometimes the semantic results do not represent the true intent. Read the link provided at the end of the text, it's been 2 years since this "old" strategy was released and the method is still rarely used, either by falling into extremely complex RAG strategies or by using only embedding + reordering.

In this article, a small improvement on this old strategy is to use "cache hints" to generate context for blocks of text that fits the overall context of the document at low cost. It's a small change, but the results are impressive!

You can do this by Our sample code utilization Claude Deploy your own contextual retrieval solution.

A note on the simple use of longer tips

Sometimes the simplest solution is also the best. If your Knowledge Base is less than 200,000 Token (about 500 pages of material), you can include the entire Knowledge Base directly in the prompts provided to the model, eliminating the need for a RAG or similar method.

A couple of weeks ago, we released for Claude Cue CacheIn addition to the new API, we've significantly accelerated and reduced the cost of this approach. Developers can now cache frequently used hints between API calls, reducing latency by more than 2x and cost by up to 90% (you can read more about this in our Hint Cache Sample Code (Understanding how it works).

However, as your knowledge base grows, you will need a more scalable solution, and that's where contextual search comes in. With that background out of the way, let's get down to business.

RAG Basics: Expanding to a Larger Knowledge Base

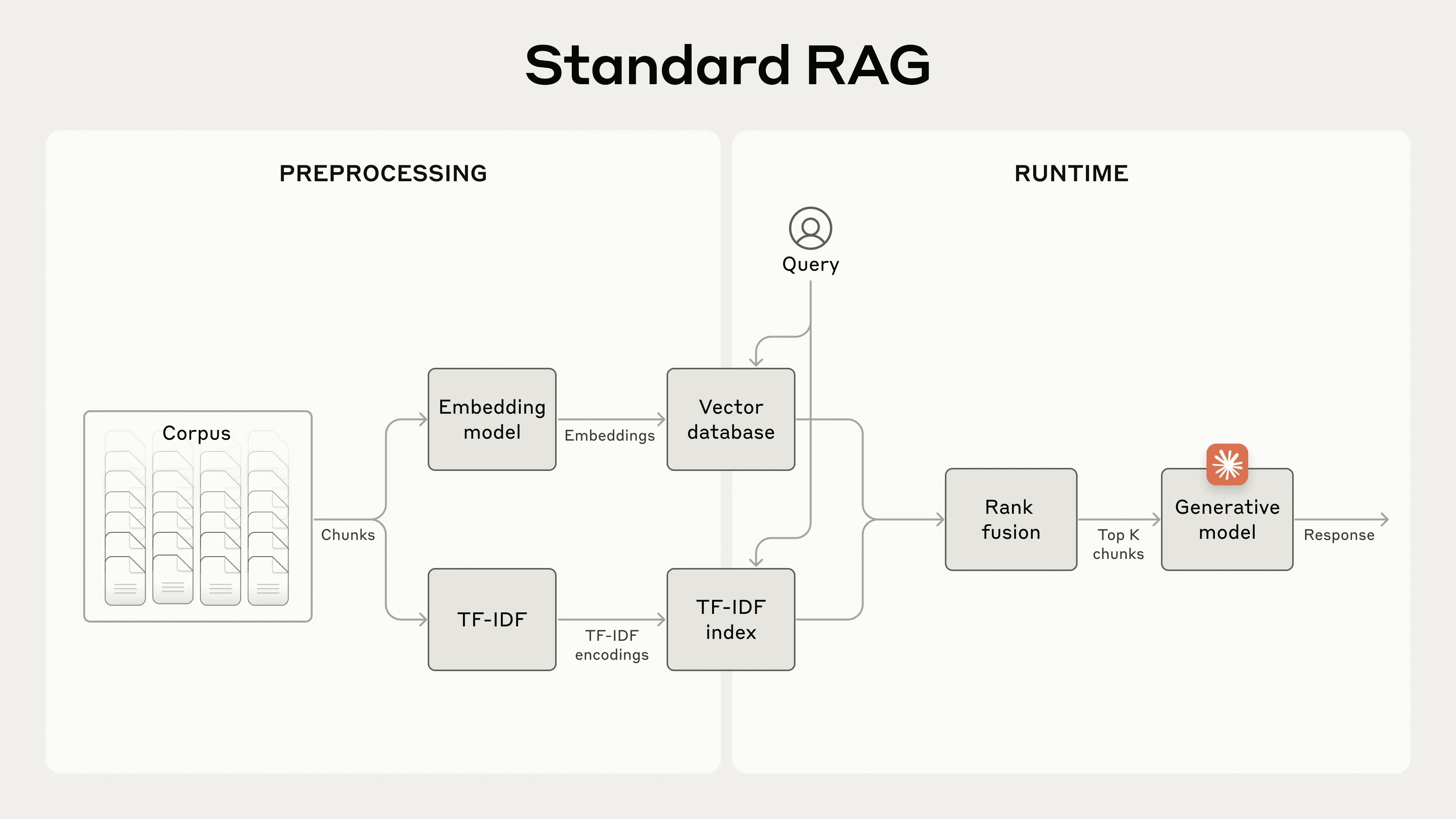

RAG is the typical solution for large knowledge bases that do not fit into a context window.RAG preprocesses the knowledge base in the following steps:

- Decomposition of the knowledge base (the document "corpus") into smaller text fragments, usually no more than a few hundred tokens; (excessively long blocks of text express more meaning, i.e., are too semantically rich)

- Use the embedding model to convert these segments into vector embeddings that encode meaning;

- Store these embeddings in a vector database for searching by semantic similarity.

At runtime, when the user inputs a query to the model, the vector database finds the most relevant fragment based on the semantic similarity of the query. The most relevant fragment is then added to the prompt sent to the generative model (answering the question as the context of the larger model reference).

While embedding models are good at capturing semantic relationships, they can miss critical exact matches. Fortunately, an older technique can help in such cases.BM25 is a ranking function that finds exact word or phrase matches through lexical matching. It is particularly effective for queries containing unique identifiers or technical terms.

BM25 Improved based on the concept of TF-IDF (Word Frequency-Inverse Document Frequency), which measures the importance of a word in a collection of documents.BM25 prevents common words from dominating the results by taking into account the length of the document and applying a saturation function to the word frequency.

Here's how BM25 works when semantic embedding fails: Suppose a user queries the technical support database for "error code TS-999". (The (vector) embedding model may find generic content about the error code, but may miss the exact "TS-999" match. Instead, BM25 looks for that specific text string to identify the relevant document.

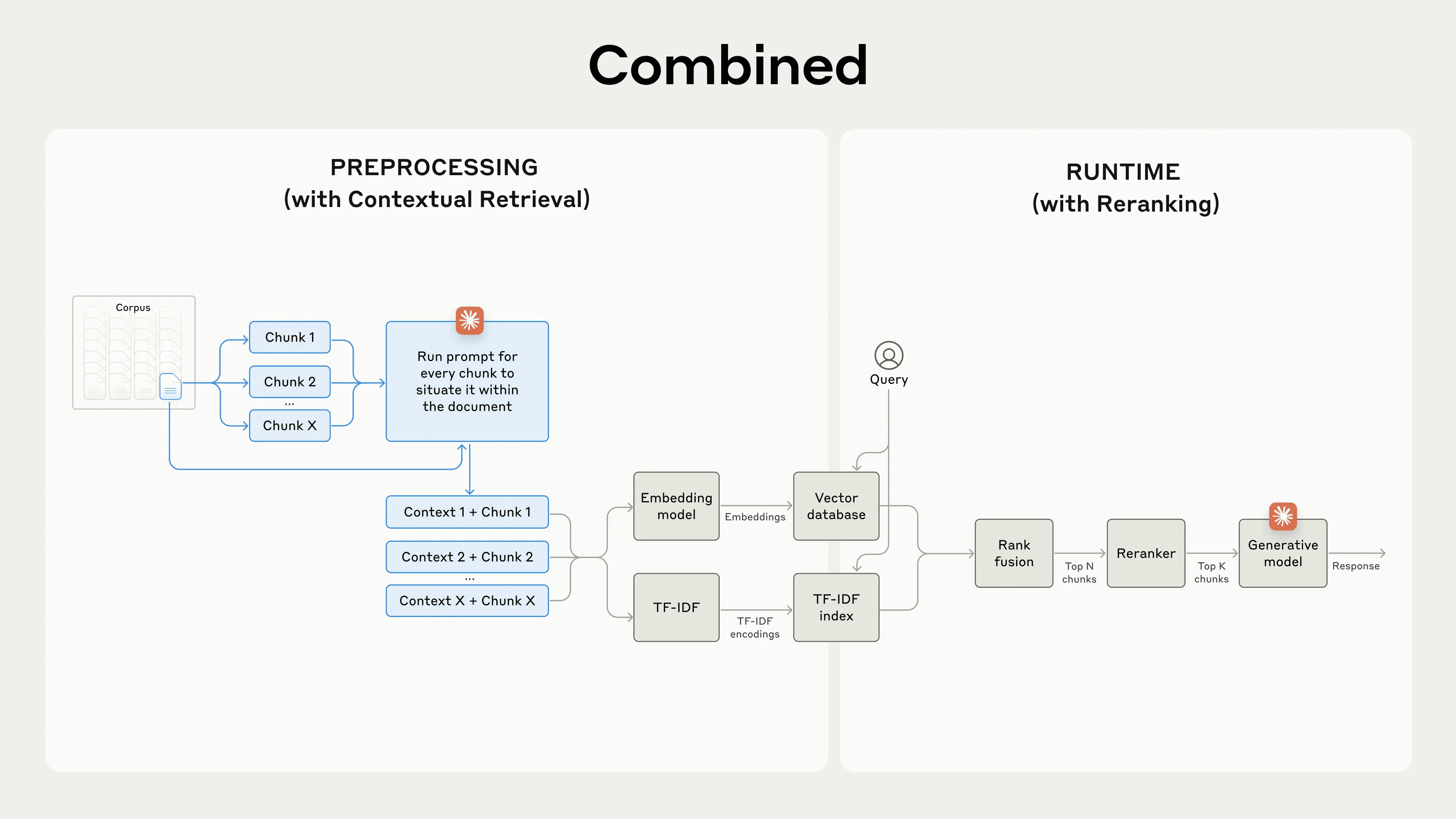

By combining embedding and BM25 techniques, the RAG scheme can retrieve the most relevant fragments more accurately, as follows:

- The knowledge base (the document "corpus") is broken down into smaller pieces of text, usually no more than a few hundred tokens;

- Create TF-IDF encodings and semantic (vector) embeddings for these segments;

- Use BM25 to find the best fragment based on an exact match;

- Use (vector) embedding to find the segments with the highest semantic similarity;

- The results of steps (3) and (4) are merged and de-emphasized using a sort fusion technique; e.g., the specialized reordering model Rerank 3.5 .

- Add the first K segments to the prompt to generate a response.

By combining BM25 and embedding models, traditional RAG systems are able to strike a balance between precise term matching and broader semantic understanding to provide more comprehensive and accurate results.

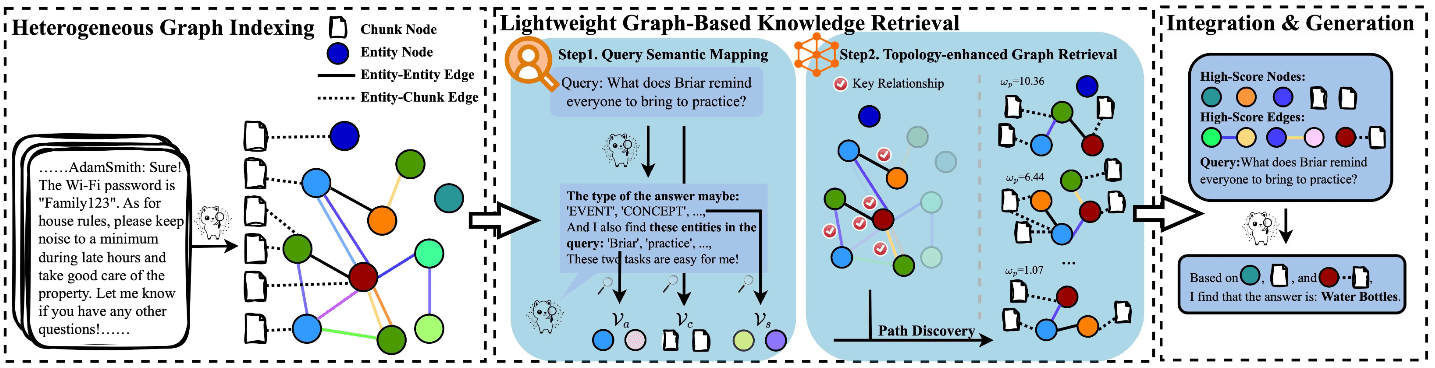

A standard Retrieval Augmentation Generation (RAG) system that combines embedding and Best Match 25 (BM25) to retrieve information.TF-IDF (Word Frequency-Inverse Document Frequency) measures the importance of words and forms the basis of BM25.

This approach allows you to scale to a much larger knowledge base than can be accommodated by a single prompt, at a low cost. However, these traditional RAG systems have a significant limitation: they often break context.

Speaking here on the basis of the retrieval program to make a reasonable design, has not yet spoken on the truncated text block view, the truncated text block is to express the same content, should never be truncated, but the above RAG programInevitably, the context is truncated. This is a problem that is both simple and complex. Let's get to the point of this article.

Contextual challenges in traditional RAG

In traditional RAG, documents are often split into smaller chunks for efficient retrieval. This approach is suitable for many application scenarios, but can lead to problems when individual chunks lack sufficient context.

For example, suppose you have some financial information embedded in your knowledge base (e.g., a U.S. SEC report) and receive the following question:"What is ACME Corporation's revenue growth in Q2 2023?"

A related block may contain the following text:"The company's revenues increased 3% from the previous quarter." However, the block itself does not explicitly mention specific companies or relevant time periods, making it difficult to retrieve the correct information or use it effectively.

Introducing Contextual Search

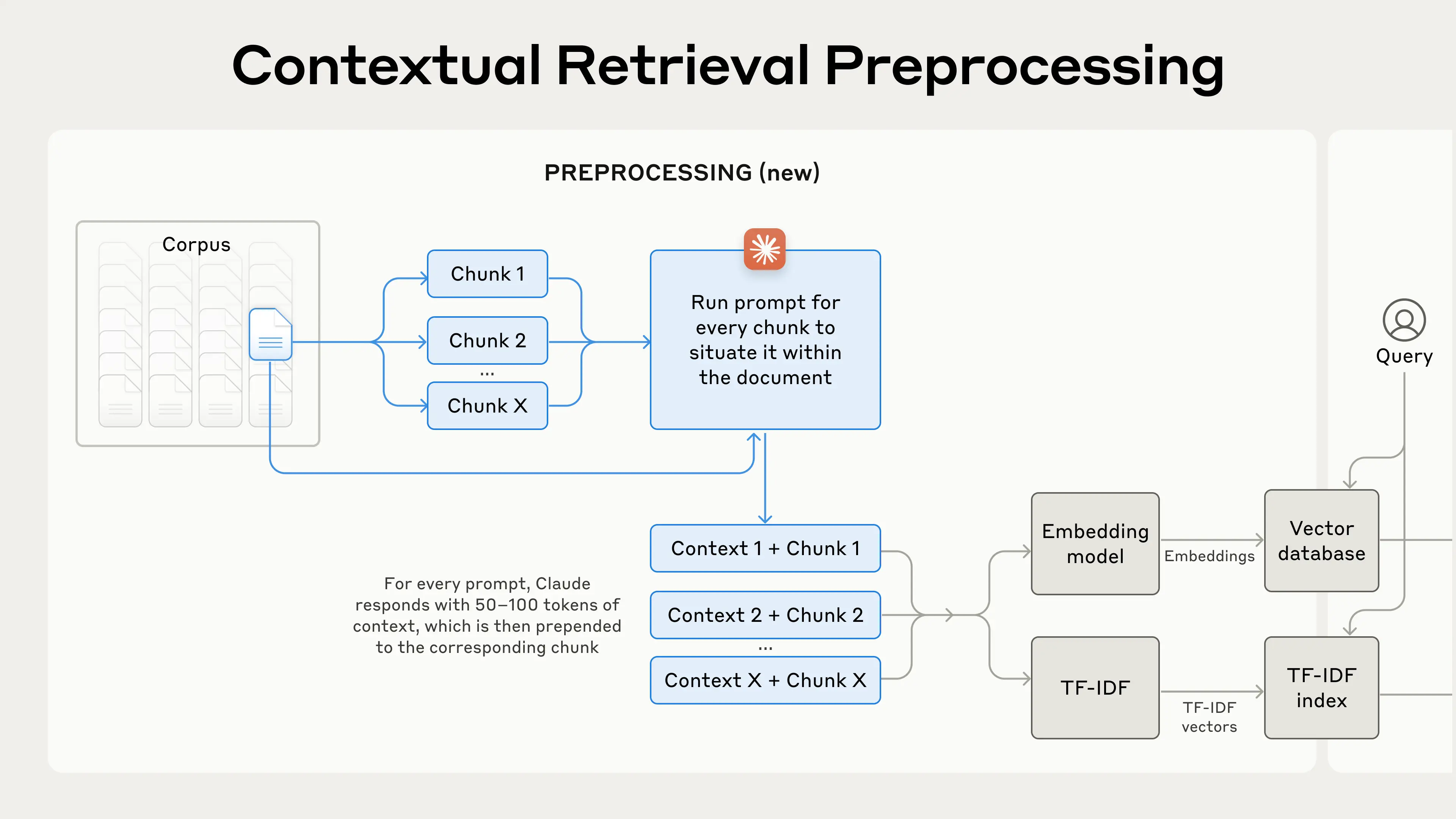

Contextual retrieval is accomplished by adding a specific block to each block prior to embedding theInterpretive context("Context Embedding") and creating a BM25 index ("Context BM25") solves this problem.

Let's go back to the SEC report collection example. Here is an example of how a block is converted:

original_chunk = "该公司的收入比上一季度增长了 3%。"

contextualized_chunk = "该块来自一份关于 ACME 公司 2023 年第二季度表现的 SEC 报告;上一季度的收入为 3.14 亿美元。该公司的收入比上一季度增长了 3%。"

It is worth noting that a number of other ways of using context to improve retrieval have been suggested in the past. Other suggestions include:Adding a generic document summary to a block(We experimented and found the gain to be very limited),Hypothetical document embedding cap (a poem) Digest-based indexing(We evaluated it and found that the performance is low). These methods are different from the one proposed in this paper.

Many of the methods for improving context quality have been experimentally shown to have limited gain, and even the relative best methods mentioned above are still questionable.Because adding explanatory context to this conversion process results in a loss of more or less informationThe

Even if a complete paragraph is sliced up into text blocks and multiple levels of headings are added to the content of the complete paragraph, this paragraph in isolation and out of context may not convey knowledge accurately, as the above examples have said dream.

This method effectively solves the problem that when the content of a text block exists alone, the lack of context causes the content to be isolated and meaningless.

Enabling Contextual Search

Of course, manually annotating context for thousands or even millions of blocks in a knowledge base is too much work. To enable contextual retrieval, we turned to Claude, where we wrote a hint that instructs the model to provide concise, block-specific context based on the context of the entire document. Here's how we used Claude 3 Haiku's hints to generate context for each block:

<document>

{{WHOLE_DOCUMENT}}

</document>

这是我们希望置于整个文档中的块

<chunk>

{{CHUNK_CONTENT}}

</chunk>

请提供一个简短且简明的上下文,以便将该块置于整个文档的上下文中,从而改进块的搜索检索。仅回答简洁的上下文,不要包含其他内容。

The generated context text is typically 50-100 Token and is added to the block before embedding and before creating the BM25 index.

This cue run has to reference the full document corresponding to the text block (which shouldn't be more than 500 pages, right?) , as a cue cache, in order to accurately generate the context associated with the text block relative to the full document.

This relies on Claude caching capabilities, the full document for the prompt cached input does not need to pay every time, caching, just pay once, so the program to achieve the prerequisites areLarge model allows long document cachingModels such as DeepSeek have similar capabilities.

The following is the pretreatment process in practice:

Contextual searching is a preprocessing technique that improves search accuracy.

If you are interested in using contextual search, you can refer to our operation manual Start.

Reducing the Cost of Contextual Retrieval with Hint Caching

Retrieval through Claude contexts can be accomplished at a low cost thanks to the special hint caching feature we mentioned. With hint caching, you don't need to pass in reference documents for each block. Simply load the document into the cache once and then reference the previously cached content. Assuming 800 Token per block, 8k Token per document, 50 Token per context instruction, and 100 Token per block of context.The one-time cost of generating contextualized blocks is $1.02 per million document TokenThe

methodology

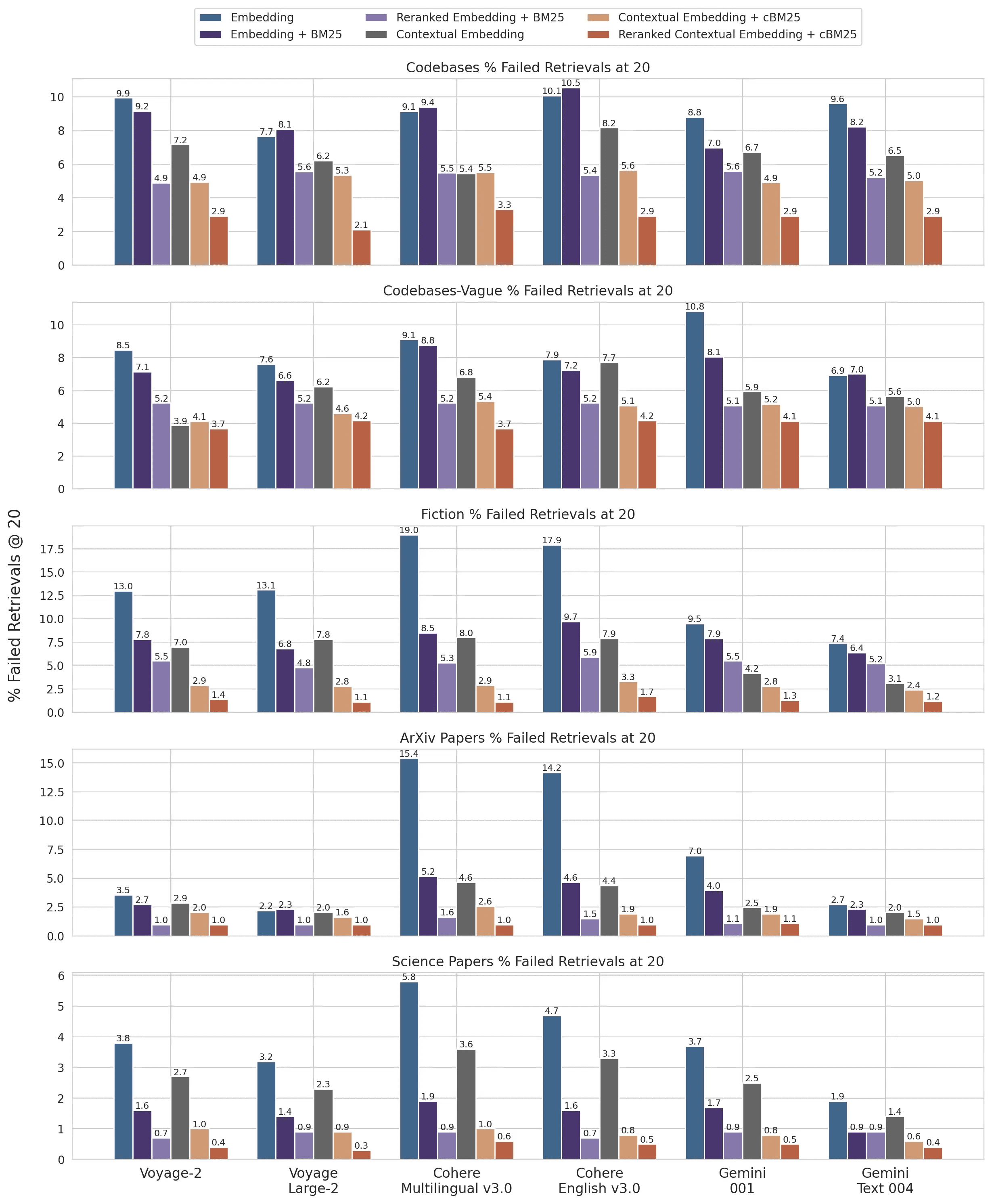

We conducted experiments in multiple knowledge domains (codebase, novels, ArXiv papers, scientific papers), embedding models, retrieval strategies, and evaluation metrics. We conducted our experiments in APPENDIX II Some examples of the questions and answers we used for each domain are listed in.

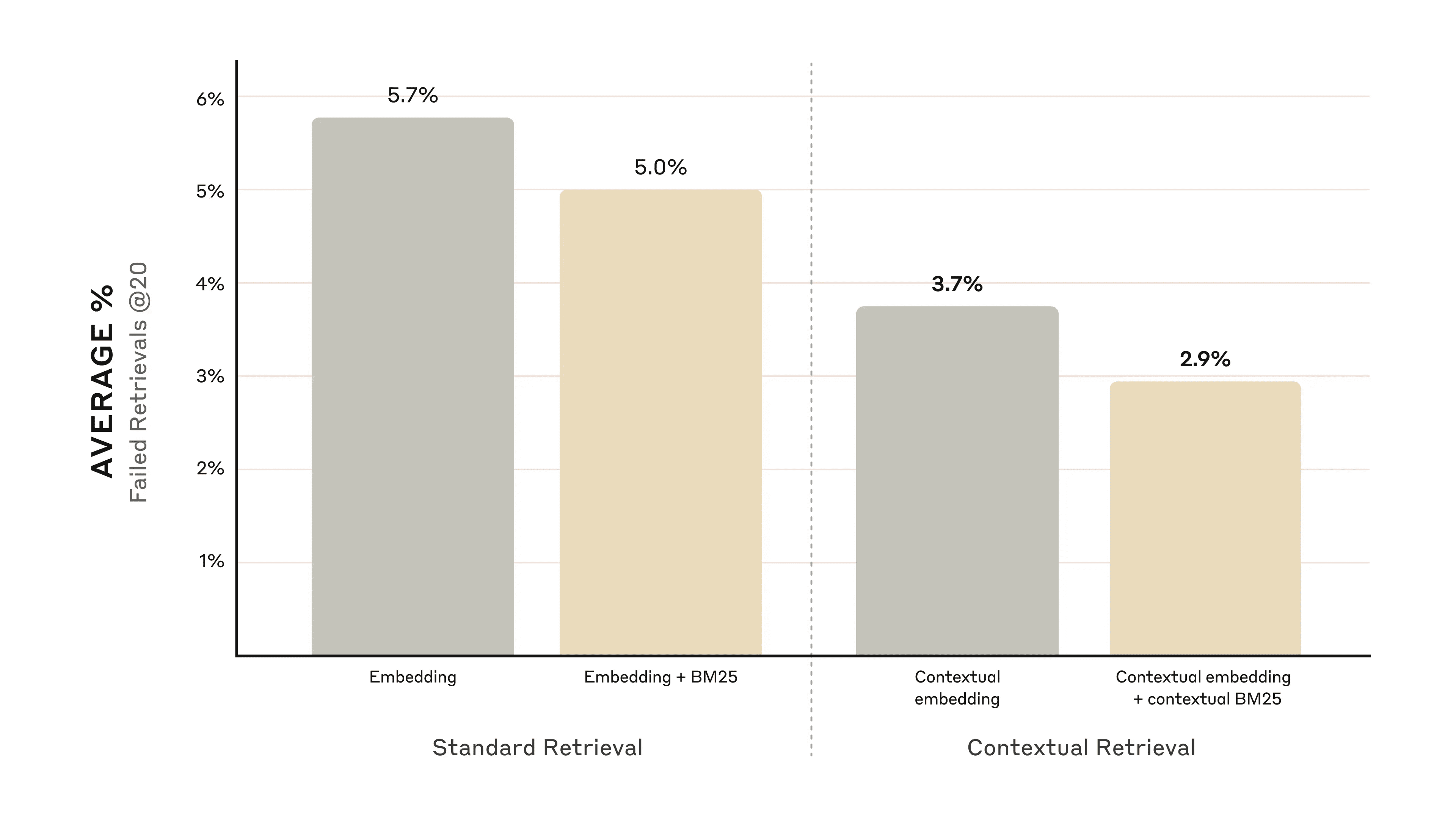

The following figure shows the average performance across all knowledge domains when using the embedding configuration with the best performance (Gemini Text 004) and retrieving the first 20 fragments. We use 1 minus recall@20 as an evaluation metric, which measures the percentage of relevant documents that failed to be retrieved in the first 20 snippets. The full results can be viewed in the Appendix - Contextualization improves performance in every combination of embedded sources we evaluated.

performance enhancement

Our experiments show that:

- Contextual embedding reduced the search failure rate for the first 20 fragments by 35%(5.7% → 3.7%).

- Combining contextual embedding and contextual BM25 reduced the search failure rate for the first 20 fragments by 49%(5.7% → 2.9%).

Combining contextual embedding and contextual BM25 reduced the retrieval failure rate of the first 20 fragments by 49%.

Realization Considerations

The following points need to be kept in mind when implementing contextual search:

- Segment boundaries: Consider how the document will be split into segments. The choice of fragment size, boundaries and overlap can affect retrieval performance ^1^.

- Embedding models: While contextual retrieval improves the performance of all the embedding models we tested, certain models may benefit more. We found that Gemini cap (a poem) Voyage Embedding is particularly effective.

- Customize contextual cues: While the generic prompts we provide work well, better results can be obtained by customizing the prompts for specific domains or use cases (e.g., by including a glossary of key terms that may be defined in other documents in the knowledge base).

- Number of clips: Adding more fragments to the context window can improve the chances of including relevant information. However, too much information may distract the model, so the number needs to be controlled. We tried 5, 10, and 20 snippets and found that using 20 snippets performed best with these options (see the Appendix for details), but it is worthwhile to experiment based on your use case.

Always run assessments: Response generation can be improved by passing contextualized snippets and distinguishing between context and snippets.

Using reordering to further improve performance

In the last step, we can combine contextual retrieval with another technique to further improve performance. In traditional RAG (Retrieval-Augmented Generation), the AI system searches its knowledge base for potentially relevant pieces of information. For large knowledge bases, the initial search typically returns a large number of fragments-sometimes as many as hundreds-with varying relevance and importance.

Reordering is a common filtering technique that ensures that only the most relevant pieces are passed to the model. Reordering provides a better response while reducing cost and latency because the model processes less information. The key steps are as follows:

- An initial search was performed to obtain the most likely relevant segments (we used the first 150);

- Pass the first N segments and the user query to the reordering model;

- A reordering model was used to score each segment based on relevance and importance to the cue, and then the top K segments were selected (we used the top 20);

- The first K segments are passed as context to the model to generate the final result.

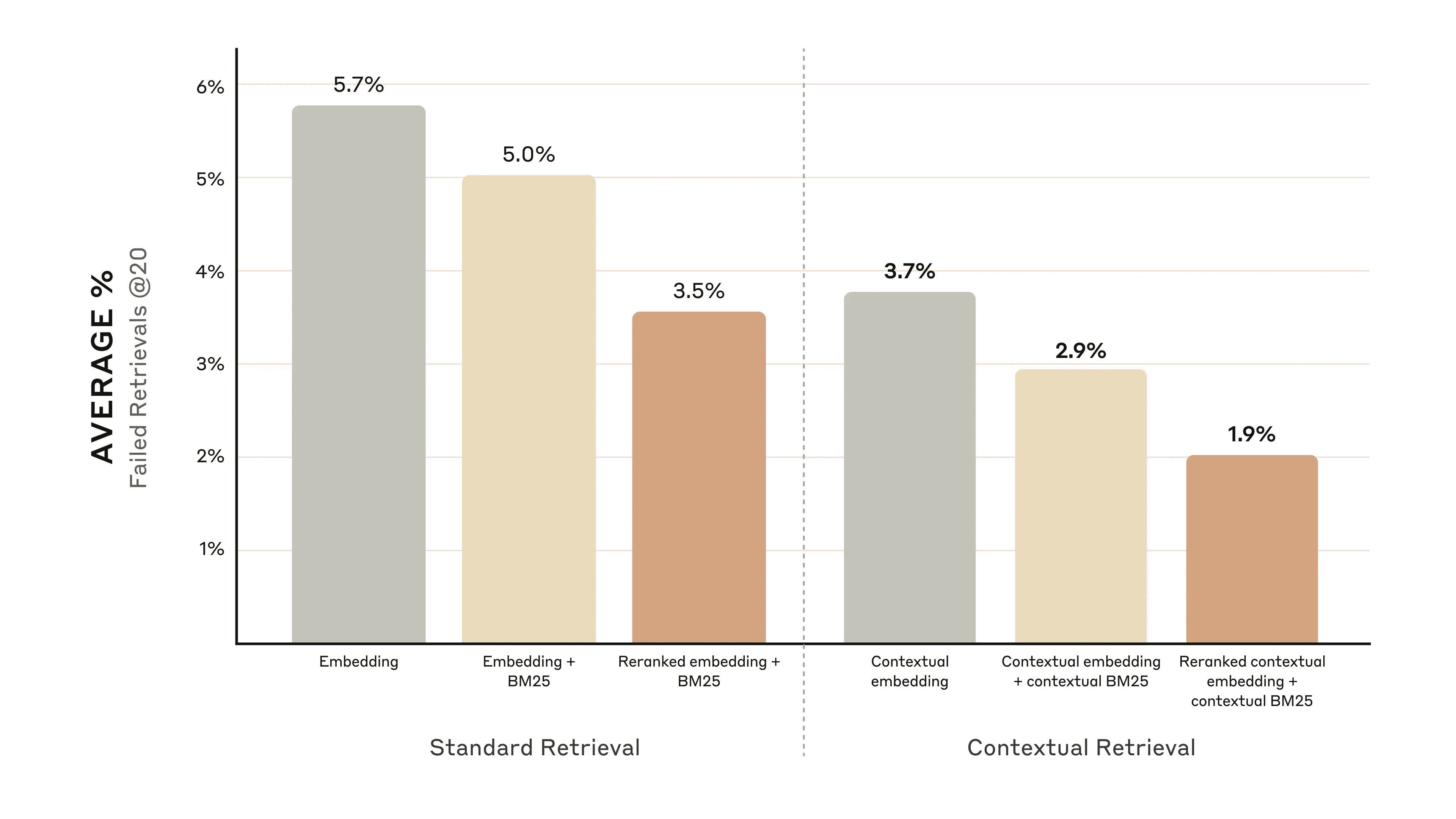

Combining contextual search and reordering maximizes search accuracy.

Combining contextual search and reordering maximizes search accuracy.

performance enhancement

There are various reordering models available in the market. We use the Cohere reorderer Ran the test. voyage A reorderer is also provided, but we did not have time to test it. Our experiments show that adding a reordering step can further optimize retrieval in a variety of domains.

Specifically, we find that reordering contextual embeddings and contextual BM25 reduces the retrieval failure rate of the top 20 fragments by 671 TP3T (5.71 TP3T → 1.91 TP3T).

Reordering contextual embeddings and contextual BM25 reduced the retrieval failure rate of the first 20 fragments by 67%.

Reordering contextual embeddings and contextual BM25 reduced the retrieval failure rate of the first 20 fragments by 67%.

Cost and delay considerations

An important consideration for reordering is the impact on latency and cost, especially when reordering a large number of fragments. Since reordering adds an extra step at runtime, it inevitably adds some latency, even though the reorderer is parallelized to score all fragments. There is a tradeoff between reordering more fragments for higher performance and reordering fewer fragments for lower latency and cost. We recommend experimenting with different settings for your specific use case to find the optimal balance.

reach a verdict

We ran a number of tests comparing different combinations of all of the above techniques (embedding models, use of BM25, use of contextual search, use of reorderers, and number of top K retrieved results) and conducted experiments across a variety of dataset types. A summary of our findings is presented below:

- Embedding + BM25 is better than just using embedding;

- Voyage and Gemini is the embedding model that worked best in our tests;

- Passing the first 20 segments to the model is more effective than passing only the first 10 or 5;

- Adding context to segments greatly improves retrieval accuracy;

- Reordering is better than not reordering;

- All these advantages can be stacked: To maximize performance improvements, we can use a combination of contextual embedding (from Voyage or Gemini), contextual BM25, reordering steps, and adding 20 snippets to the prompt.

We encourage all developers using the knowledge base to use the Our Practice Manual Experiment with these methods to unlock new levels of performance.

Appendix I

Below is a breakdown of Retrievals @ 20 results across datasets, embedded providers, BM25 used in conjunction with embedding, use of contextual retrieval, and use of reordering.

For a breakdown of Retrievals @ 10 and @ 5 and sample questions and answers for each dataset, see the APPENDIX IIThe

1 minus recall @ 20 for the dataset and embedded provider results.

1 minus recall @ 20 for the dataset and embedded provider results.

footnotes

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...