Proxy Lite: A Web Automation Tool Driven by a 3B-Parameter Vision-Language Model

General Introduction

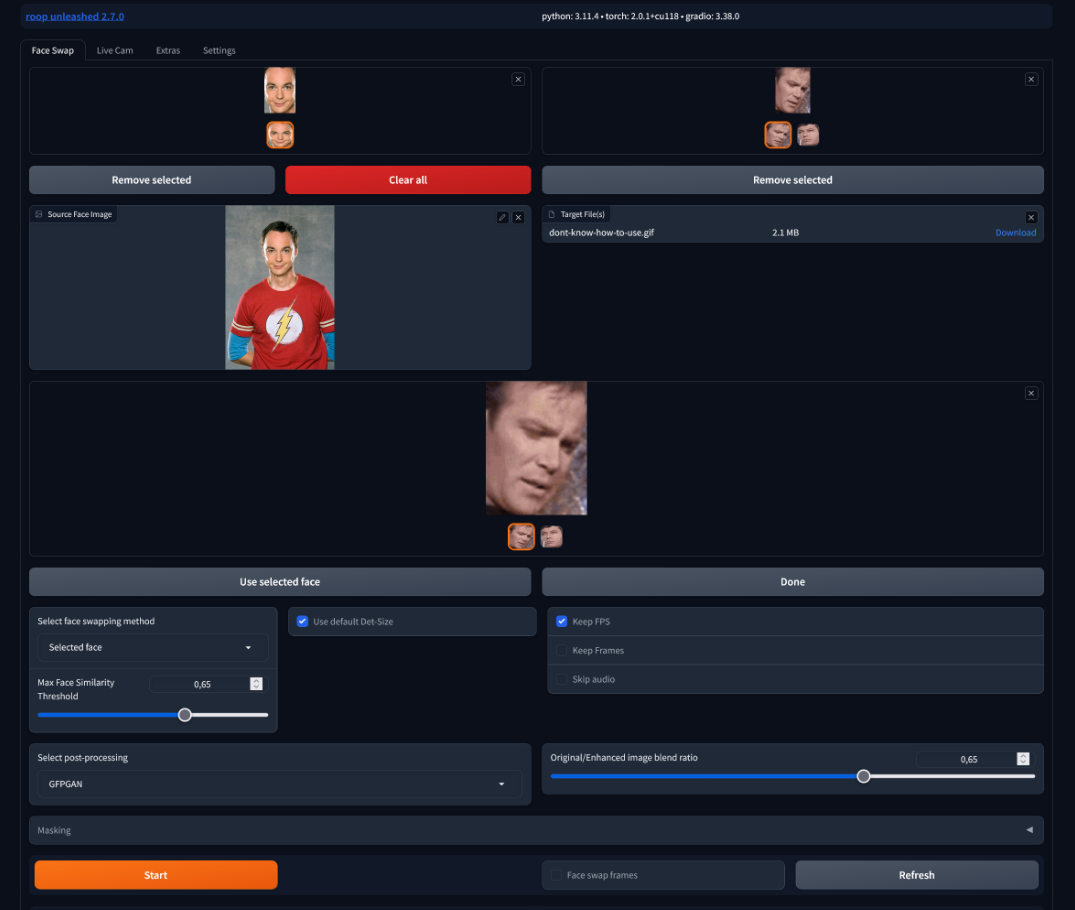

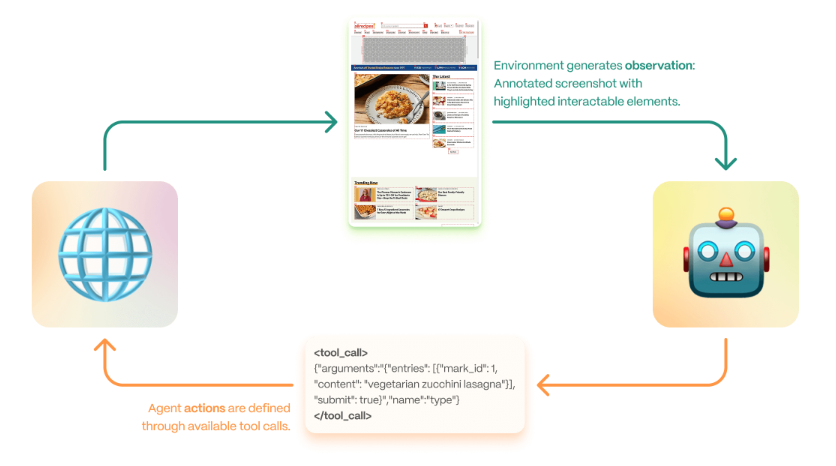

Proxy Lite is an open-source, open-weights, lightweight web automation tool from Convergence AI, released as a scaled-down version of Convergence's Proxy agent. It is built on a 3B-parameter vision-language model (VLM) and can autonomously navigate web pages and carry out tasks such as finding information or operating a browser. The project is hosted on GitHub, and users are free to download the code and customize it. By default, Proxy Lite connects to a demo endpoint hosted on Hugging Face Spaces, but the developers recommend deploying the model locally with vLLM for the best performance. Its resource footprint is small enough for developers to run it on their own machines, and it is designed for autonomous task execution rather than interactive use.

Feature List

- Web automation: automatically navigates web pages and performs actions such as clicking, typing, and scrolling.

- Task execution: completes specific tasks from instructions, such as searching for markets and returning their ratings.

- Local runtime support: can be deployed and run on personal devices via vLLM.

- Open source and extensible: the full code is provided, so users can adjust the model or environment configuration.

- Low resource footprint: a lightweight design that runs on consumer-grade hardware.

- Browser interaction: drives a Chromium browser through Playwright, with headless mode supported.

Usage Guide

Proxy Lite is an open-source web automation tool aimed at developers and technology enthusiasts. The guide below covers installation and usage in detail so that you can deploy and run it smoothly.

Installation process

Proxy Lite runs in a local environment. The officially recommended installation steps are as follows:

1. Prepare the environment

- Operating system: Windows, Linux, or macOS.

- Hardware: ordinary consumer-grade devices are sufficient; 8 GB of RAM or more is recommended.

- Software dependencies:

  - Python 3.11.

  - Git (for cloning the repository).

  - Playwright (browser automation library).

  - vLLM (model inference framework).

  - Transformers (must be installed from source to support Qwen-2.5-VL).
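Before you install anything, a short script can check the prerequisites listed above. The following is a minimal sketch. It assumes the tools appear on PATH under the executable names `git`, `playwright`, and `vllm`. That is an assumption: Playwright's CLI only appears after its Python package is installed, and vLLM is only needed for local deployment. The helper names `python_ok`, `missing_tools`, and `report` are hypothetical. A Python mismatch is informational only, because `uv venv --python 3.11 --python-preference managed` in step 3 can provision a managed 3.11 interpreter for you.

```python
import shutil
import sys

# Executable names are assumptions about how each prerequisite appears on PATH.
REQUIRED_TOOLS = ["git"]
LATER_TOOLS = ["playwright", "vllm"]  # installed during setup / local deployment


def python_ok(version=sys.version_info) -> bool:
    """Return True if the given interpreter version is Python 3.11.x."""
    return tuple(version[:2]) == (3, 11)


def missing_tools(names, which=shutil.which) -> list:
    """Return the names from `names` that cannot be found on PATH."""
    return [name for name in names if which(name) is None]


def report() -> None:
    """Print a short prerequisite summary for the current machine."""
    found = ".".join(str(part) for part in sys.version_info[:3])
    print("Python 3.11:", "ok" if python_ok() else f"no (found {found})")
    for name in missing_tools(REQUIRED_TOOLS):
        print("missing required tool:", name)
    for name in missing_tools(LATER_TOOLS):
        print("not on PATH yet:", name)


if __name__ == "__main__":
    report()
```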

2. Clone the repository

Run the following commands in a terminal to download the code:

git clone https://github.com/convergence-ai/proxy-lite.git

cd proxy-lite

3. Set up the environment

- Quick installation:

make proxy

- Manual installation:

pip install uv
uv venv --python 3.11 --python-preference managed
uv sync
uv pip install -e .
playwright install

- Note: if you are deploying the model locally, you also need to install vLLM and Transformers:

uv sync --all-extras

Transformers must be installed from source to support Qwen-2.5-VL; the required version is specified in pyproject.toml.

4. Local deployment (recommended)

The developers recommend serving the model locally with vLLM rather than relying on the demo endpoint:

vllm serve convergence-ai/proxy-lite-3b --trust-remote-code --enable-auto-tool-choice --tool-call-parser hermes --port 8008

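- Parameter descriptions:

  - --trust-remote-code: allows the model repository's custom code to be loaded.

  - --enable-auto-tool-choice: enables automatic tool selection, so the model decides when to call tools.

  - --tool-call-parser hermes: parses the model's tool calls using the Hermes format.

  - --port 8008: serves the API on port 8008.

- Once the server is running, the endpoint is available at http://localhost:8008/v1.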
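Before pointing Proxy Lite at the server, you can confirm that it is up by querying its model list. The vLLM server is OpenAI-compatible and exposes `GET /v1/models`. The sketch below assumes that endpoint and the default served model name `convergence-ai/proxy-lite-3b`, since vLLM uses the model path as the name unless `--served-model-name` is set. The helper names are hypothetical, and the sketch uses only the standard library.

```python
import json
import urllib.request

DEFAULT_API_BASE = "http://localhost:8008/v1"  # matches `vllm serve ... --port 8008`


def models_url(api_base: str) -> str:
    """Build the OpenAI-compatible model-listing URL for an API base."""
    return api_base.rstrip("/") + "/models"


def served_model_ids(payload: dict) -> list:
    """Extract model ids from a /v1/models JSON response body."""
    return [entry["id"] for entry in payload.get("data", [])]


def check_endpoint(api_base: str = DEFAULT_API_BASE, timeout: float = 5.0) -> list:
    """Query the server and return the ids of the models it serves.

    Raises urllib.error.URLError if the server cannot be reached.
    """
    with urllib.request.urlopen(models_url(api_base), timeout=timeout) as resp:
        return served_model_ids(json.load(resp))
```

For example, `"convergence-ai/proxy-lite-3b" in check_endpoint()` should be true once the model has finished loading. A `URLError` means the server is not reachable yet.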

Usage

Proxy Lite can be used in three ways: from the command line, through a web UI, or via Python integration.

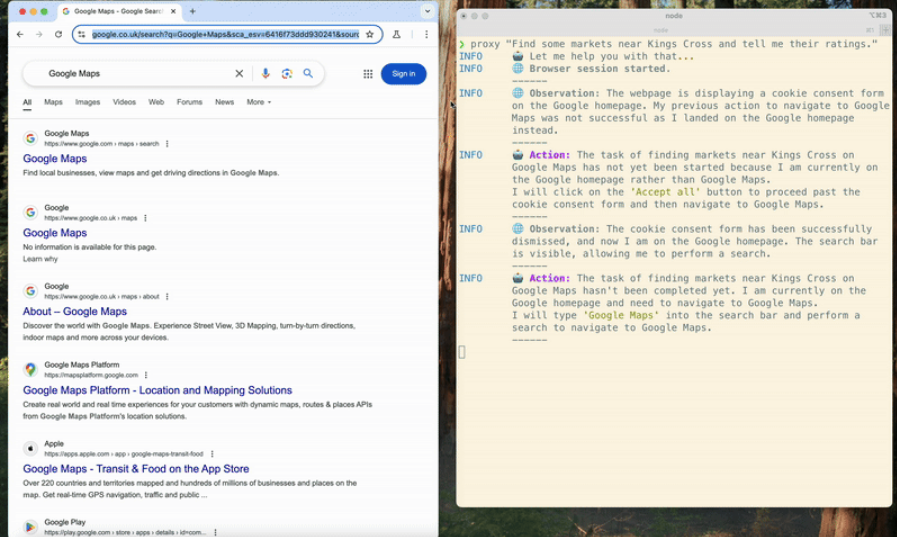

Method 1: Command line

- Run a task:

proxy "Find some markets near Kings Cross and tell me their ratings."

By default this uses the Hugging Face demo endpoint, which may be slow.

- Use a local endpoint:

proxy --api-base http://localhost:8008/v1 "Find some markets near Kings Cross and tell me their ratings."

- Alternatively, set an environment variable:

export PROXY_LITE_API_BASE=http://localhost:8008/v1
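You may want to trigger the same CLI from a Python script, for example to run several tasks in sequence. One option is to call it as a subprocess. This sketch reproduces only the two options shown above: the `--api-base` flag and the `PROXY_LITE_API_BASE` variable. `proxy_command`, `env_with_api_base`, and `run_proxy` are hypothetical helper names.

```python
import os
import subprocess
from typing import Mapping, Optional


def proxy_command(task: str, api_base: Optional[str] = None) -> list:
    """Build the argv for the `proxy` CLI; add --api-base only when given."""
    argv = ["proxy"]
    if api_base:
        argv += ["--api-base", api_base]
    argv.append(task)
    return argv


def env_with_api_base(api_base: str, base_env: Optional[Mapping[str, str]] = None) -> dict:
    """Copy the environment and set PROXY_LITE_API_BASE, as `export` does in a shell."""
    env = dict(os.environ if base_env is None else base_env)
    env["PROXY_LITE_API_BASE"] = api_base
    return env


def run_proxy(task: str, api_base: Optional[str] = None,
              env: Optional[Mapping[str, str]] = None) -> int:
    """Run the CLI and return its exit code (requires `proxy` on PATH)."""
    return subprocess.run(proxy_command(task, api_base), env=env).returncode
```

Usage: `run_proxy("Find some markets near Kings Cross and tell me their ratings.", api_base="http://localhost:8008/v1")`. Alternatively, pass `env=env_with_api_base("http://localhost:8008/v1")` to use the environment-variable route.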

Method 2: Web UI

- Launch the interface:

make app

- Access it: open http://localhost:8501 in your browser and enter tasks through the interface.
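After `make app` starts, the page on port 8501 may take a few seconds to respond. If you script the launch, a small helper can wait until the port accepts connections before it opens the browser. This sketch uses only the standard library. `wait_for_port` and `launch_ui` are hypothetical names, and the sketch assumes `make app` is run from the repository root.

```python
import socket
import subprocess
import time
import webbrowser


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll host:port until a TCP connection succeeds; return False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable, or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


def launch_ui(port: int = 8501, timeout: float = 60.0) -> subprocess.Popen:
    """Start `make app`, wait for the UI port, then open it in the default browser."""
    proc = subprocess.Popen(["make", "app"])
    if wait_for_port("localhost", port, timeout):
        webbrowser.open(f"http://localhost:{port}")
    return proc
```

Polling the TCP port keeps the helper independent of the web framework. Note that it confirms only that something is listening on the port, not that the page has fully rendered.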

Method 3: Python integration

- Code example:

import asyncio

from proxy_lite import Runner, RunnerConfig

config = RunnerConfig.from_dict({
    # Browser environment: start page, headless Chromium
    "environment": {"name": "webbrowser", "homepage": "https://www.google.com", "headless": True},
    # Agent and model client; api_base points at the local vLLM endpoint
    "solver": {
        "name": "simple",
        "agent": {
            "name": "proxy_lite",
            "client": {
                "name": "convergence",
                "model_id": "convergence-ai/proxy-lite-3b",
                "api_base": "http://localhost:8008/v1",
            },
        },
    },
    # Step budget, timeouts, and log verbosity
    "max_steps": 50,
    "action_timeout": 1800,
    "environment_timeout": 1800,
    "task_timeout": 18000,
    "logger_level": "DEBUG",
})

proxy = Runner(config=config)
result = asyncio.run(proxy.run("Book a table for 2 at an Italian restaurant in Kings Cross tonight at 7pm."))
print(result)

- Explanation: the Runner class executes tasks in a browser environment and supports custom configuration.
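If you run many tasks with slightly different settings, generating the configuration dictionary from a function can be easier than editing it inline. The sketch below copies the dictionary from the example above and overrides selected fields. `build_config` is a hypothetical helper. Its fallback to the `PROXY_LITE_API_BASE` environment variable is this sketch's own convention, not documented `Runner` behavior.

```python
import copy
import os

# Base configuration copied from the Runner example above.
BASE_CONFIG = {
    "environment": {"name": "webbrowser", "homepage": "https://www.google.com", "headless": True},
    "solver": {
        "name": "simple",
        "agent": {
            "name": "proxy_lite",
            "client": {
                "name": "convergence",
                "model_id": "convergence-ai/proxy-lite-3b",
                "api_base": "http://localhost:8008/v1",
            },
        },
    },
    "max_steps": 50,
    "action_timeout": 1800,
    "environment_timeout": 1800,
    "task_timeout": 18000,
    "logger_level": "DEBUG",
}


def build_config(api_base=None, headless=True, logger_level="DEBUG", **overrides) -> dict:
    """Return a fresh config dict with selected fields overridden.

    api_base falls back to PROXY_LITE_API_BASE (sketch convention), then to the
    value in BASE_CONFIG. Extra keyword arguments replace top-level keys,
    e.g. max_steps=20.
    """
    config = copy.deepcopy(BASE_CONFIG)  # never mutate the shared template
    client = config["solver"]["agent"]["client"]
    client["api_base"] = api_base or os.environ.get("PROXY_LITE_API_BASE", client["api_base"])
    config["environment"]["headless"] = headless
    config["logger_level"] = logger_level
    config.update(overrides)
    return config
```

You can pass the result to the library exactly as in the example above, e.g. `Runner(config=RunnerConfig.from_dict(build_config(headless=False, max_steps=20)))`.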

Main features in practice

Feature 1: Web automation

- Steps:

  - Start the local endpoint (see step 4 of the installation).

  - Enter the task:

proxy --api-base http://localhost:8008/v1 "Search for markets near Kings Cross."

- Proxy Lite then uses Playwright to control the browser and carry out the navigation and actions.

- Note: state tasks clearly and avoid complex operations that require user interaction.
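Conceptually, each web automation step turns an action chosen by the model into a browser operation. The sketch below illustrates that routing with a fake browser that only records calls, so it runs without Playwright. The action format `{"name": ..., "args": {...}}` and the class and function names are hypothetical; they are not Proxy Lite's actual tool schema. In a real implementation, the handlers would call Playwright `Page` methods such as `page.click`, `page.fill`, or `page.mouse.wheel`.

```python
from dataclasses import dataclass, field


@dataclass
class FakeBrowser:
    """Stand-in for a Playwright page: records actions instead of performing them."""
    log: list = field(default_factory=list)

    def click(self, selector: str) -> None:
        self.log.append(("click", selector))

    def type_text(self, selector: str, text: str) -> None:
        self.log.append(("type", selector, text))

    def scroll(self, dy: int) -> None:
        self.log.append(("scroll", dy))


def dispatch(browser, action: dict) -> None:
    """Route one hypothetical {"name": ..., "args": {...}} action to the browser."""
    handlers = {
        "click": browser.click,
        "type": browser.type_text,
        "scroll": browser.scroll,
    }
    name = action["name"]
    if name not in handlers:
        raise ValueError(f"unsupported action: {name}")
    handlers[name](**action.get("args", {}))
```

Rejecting unknown action names, instead of silently ignoring them, makes malformed model output visible early.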

Feature 2: Task execution

- Steps:

  - Enter a task from the command line or from Python:

result = asyncio.run(proxy.run("Find some markets near Kings Cross and tell me their ratings."))

  - The model works through an Observe-Think-Act cycle and returns the result.

- Limitation: tasks that require logins or complex interactions are not supported unless all the necessary information is provided up front.
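The Observe-Think-Act cycle can be pictured as a bounded loop. The agent observes the page, lets the model decide, and acts. It stops when the model produces an answer or when the step budget (`max_steps` in the configuration above) runs out. This is a minimal, framework-free sketch of that idea, not Proxy Lite's implementation. `run_loop` and the `(thought, action, answer)` decision tuple are hypothetical.

```python
from typing import Callable, Optional, Tuple

# Hypothetical decision format: (thought, action or None, final answer or None).
Decision = Tuple[str, Optional[str], Optional[str]]


def run_loop(observe: Callable[[], object],
             think: Callable[[object, list], Decision],
             act: Callable[[str], None],
             max_steps: int = 50) -> Optional[str]:
    """Run observe -> think -> act until an answer appears or max_steps is used up.

    Returns the final answer, or None if the step budget runs out.
    """
    history = []
    for _ in range(max_steps):
        observation = observe()                                # Observe
        thought, action, answer = think(observation, history)  # Think
        history.append((thought, action))
        if answer is not None:
            return answer
        if action is not None:
            act(action)                                        # Act
    return None
```

In practice, `observe` would capture a screenshot, `think` would call the model, and `act` would drive the browser.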

Feature 3: Local runtime support

- Steps:

  - Deploy the vLLM server.

  - Configure the endpoint and run the task.

- Advantage: avoids the instability and latency of the demo endpoint.

Caveats

- Demo endpoint limits: the default endpoint (Hugging Face Spaces) is for demonstration only and is not suitable for production or frequent use; local deployment is recommended.

- Anti-bot measures: the browser environment uses playwright_stealth to reduce the risk of detection, but it may still be blocked by CAPTCHAs; pairing it with a web proxy is recommended.

- Functional limitations: Proxy Lite specializes in autonomous task execution and is not suitable for tasks that require real-time user interaction or logging in with credentials.

- Debugging: set logger_level="DEBUG" to view detailed logs.

With these steps, you can quickly deploy Proxy Lite and use it to automate web tasks.

© Copyright notice

Article copyright © AI Sharing Circle. Please do not reproduce without permission.
