Promptimizer: An Experimental Library for Automatically Optimizing Large-Model Prompts

General Introduction

Promptimizer is an experimental prompt optimization library designed to help users systematically improve the prompts used in their AI systems. Because it automates the optimization process, Promptimizer can improve how well a prompt performs on a specific task. Users provide an initial prompt, a dataset, and a custom evaluator (optionally supplemented with human feedback). Promptimizer then runs an optimization loop that produces an optimized prompt intended to outperform the original.
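The general shape of such a loop can be sketched as greedy hill-climbing: score the current prompt on the dataset, propose a rewrite, and keep the rewrite only if it scores higher. This is not Promptimizer's implementation. The names `optimize`, `mean_score`, and the `propose` callback are hypothetical; in a real system `propose` would typically be an LLM asked to rewrite the prompt.

```python
from typing import Callable

# Hypothetical signatures used only for this sketch.
Model = Callable[[str], str]             # filled-in prompt -> model output text
Evaluator = Callable[[str, str], float]  # (dataset input, output) -> score in [0, 1]
Proposer = Callable[[str], str]          # current best prompt -> candidate rewrite


def mean_score(prompt: str, dataset: list[str], model: Model, evaluator: Evaluator) -> float:
    """Average evaluator score of one prompt template over every dataset input."""
    scores = [evaluator(x, model(prompt.format(input=x))) for x in dataset]
    return sum(scores) / len(scores)


def optimize(
    initial_prompt: str,
    dataset: list[str],
    model: Model,
    evaluator: Evaluator,
    propose: Proposer,
    rounds: int = 3,
) -> tuple[str, float]:
    """Greedy hill-climbing: accept a candidate only if it beats the current best."""
    best_prompt = initial_prompt
    best_score = mean_score(best_prompt, dataset, model, evaluator)
    for _ in range(rounds):
        candidate = propose(best_prompt)
        score = mean_score(candidate, dataset, model, evaluator)
        if score > best_score:
            best_prompt, best_score = candidate, score
    return best_prompt, best_score


if __name__ == "__main__":
    # Toy stand-ins: the "model" echoes its prompt, the evaluator rejects hashtags,
    # and the proposer strips a trailing hashtag from the template.
    best, score = optimize(
        "Write a tweet about {input} #trending",
        ["cats", "dogs"],
        model=lambda filled: filled,
        evaluator=lambda x, out: 0.0 if "#" in out else 1.0,
        propose=lambda p: p.replace(" #trending", ""),
    )
    print(best, score)  # Write a tweet about {input} 1.0
```

A production optimizer would usually also score candidates on held-out examples, so that the prompt does not simply overfit the training set.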

Feature List

- Prompt optimization: automatically optimizes prompts to improve the AI system's performance on specific tasks.

- Dataset support: supports a variety of dataset formats, making prompt optimization easy to set up.

- Custom evaluators: users can define their own evaluators to quantify how well a prompt performs.

- Human feedback: human feedback is supported to further guide prompt optimization.

- Quick start guide: a detailed quick start guide helps users get up and running quickly.

Usage Guide

Installation

- First, install the CLI tool:

pip install -U promptim

- Ensure that your environment contains a valid LangSmith API key, along with an Anthropic API key:

export LANGSMITH_API_KEY=YOUR_API_KEY
export ANTHROPIC_API_KEY=YOUR_API_KEY
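Before running any promptim command, it can help to confirm that both variables are actually visible to Python. The helper below is a hypothetical convenience, not part of promptim; it only checks that each variable is set and non-empty, not that the key is valid.

```python
import os
from typing import Mapping, Optional

# The two variables exported in the step above.
REQUIRED_KEYS = ("LANGSMITH_API_KEY", "ANTHROPIC_API_KEY")


def missing_keys(env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return the required API key variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_KEYS if not env.get(name)]
```

Calling `missing_keys()` with no arguments inspects the real environment; an empty list means both keys are present.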

Creating Tasks

- Create an optimization task:

promptim create task ./my-tweet-task \
  --name my-tweet-task \
  --prompt langchain-ai/tweet-generator-example-with-nothing:starter \
  --dataset https://smith.langchain.com/public/6ed521df-c0d8-42b7-a0db-48dd73a0c680/d \
  --description "Write informative tweets on any subject." \
  -y

This command generates a directory containing the task's configuration file and task code.
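The `--prompt` value appears to follow the LangSmith prompt-hub convention `owner/name:tag`, where the part after the colon (here `starter`) selects a specific commit or tag. The helper below is a hypothetical illustration of that format, not part of promptim, and simply splits such an identifier into its parts.

```python
from typing import NamedTuple, Optional


class PromptRef(NamedTuple):
    owner: str
    name: str
    tag: Optional[str]  # commit or tag such as "starter"; None if omitted


def parse_prompt_ref(ref: str) -> PromptRef:
    """Split an 'owner/name[:tag]' prompt identifier into its parts."""
    path, sep, tag = ref.partition(":")
    owner, slash, name = path.partition("/")
    if not slash or not owner or not name:
        raise ValueError(f"expected 'owner/name[:tag]', got {ref!r}")
    return PromptRef(owner, name, tag if sep else None)
```

For the command above, `parse_prompt_ref("langchain-ai/tweet-generator-example-with-nothing:starter")` yields owner `langchain-ai`, name `tweet-generator-example-with-nothing`, and tag `starter`.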

Defining the Evaluator

- Open the task.py file in the generated task directory and find the evaluation logic:

score = len(str(predicted.content)) < 180

- Modify the evaluation logic, for example to penalize outputs that contain hashtags:

score = int("#" not in result)
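The two checks can also be combined into a single score. The sketch below assumes that `result` in the snippet above holds the text of the model's output (that is, `str(predicted.content)`), and it takes that text directly as a plain string. The returned `key`/`score` dictionary mirrors the shape LangSmith evaluators commonly return, but `tweet_evaluator` and the metric name are hypothetical; the exact function signature `task.py` expects should be taken from the generated file.

```python
def tweet_evaluator(output_text: str) -> dict:
    """Score a generated tweet: 1 if it passes both checks, otherwise 0."""
    under_limit = len(output_text) < 180  # the template's original length check
    no_hashtags = "#" not in output_text  # the modified hashtag check
    return {
        "key": "tweet_quality",  # hypothetical metric name
        "score": int(under_limit and no_hashtags),
        "comment": f"under_limit={under_limit}, no_hashtags={no_hashtags}",
    }
```

Keeping the individual checks in the `comment` field makes it easier to see why a given output failed.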

Training

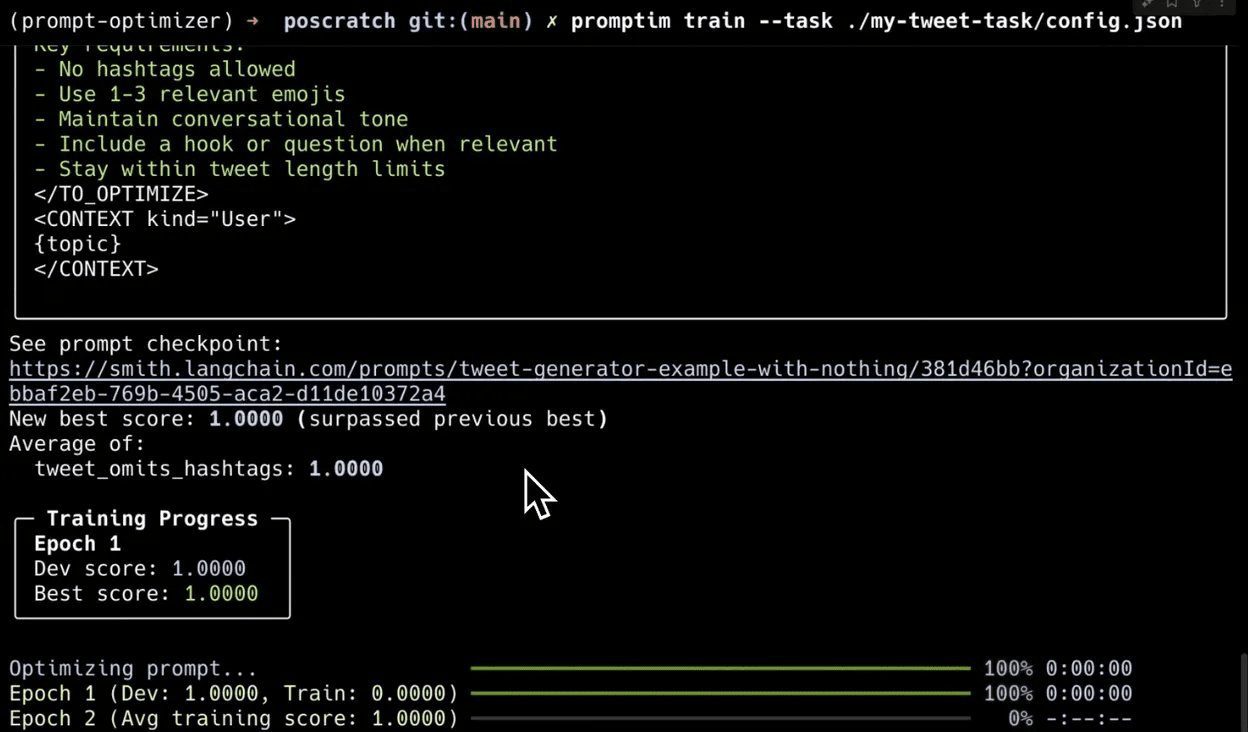

- Run the training command to start optimizing the prompt:

promptim train --task ./my-tweet-task/config.json

Once training is complete, the terminal prints the final optimized prompt.

Adding Human Labels

- Set up an annotation queue:

promptim train --task ./my-tweet-task/config.json --annotation-queue my_queue

- Open the LangSmith UI and navigate to the specified queue to label outputs manually.
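This section does not describe how Promptimizer uses the labels collected in the queue. Purely as an illustration of how human feedback can be quantified next to automated scores, the sketch below blends a mean evaluator score with the fraction of outputs a reviewer approved. `Annotation`, `combined_score`, and the `human_weight` parameter are hypothetical and do not reflect promptim's internals.

```python
from dataclasses import dataclass


@dataclass
class Annotation:
    example_id: str
    approved: bool  # reviewer's thumbs-up (True) or thumbs-down (False)
    note: str = ""  # optional free-text feedback


def combined_score(
    auto_scores: dict[str, float],
    annotations: list[Annotation],
    human_weight: float = 0.5,
) -> float:
    """Weighted blend of the mean automated score and the human approval rate."""
    if not auto_scores:
        raise ValueError("need at least one automated score")
    if not 0.0 <= human_weight <= 1.0:
        raise ValueError("human_weight must be in [0, 1]")
    auto = sum(auto_scores.values()) / len(auto_scores)
    if not annotations:
        return auto  # no human labels yet: fall back to automated scores only
    human = sum(a.approved for a in annotations) / len(annotations)
    return (1 - human_weight) * auto + human_weight * human
```

A higher `human_weight` lets reviewer judgments dominate when the automated evaluator only captures part of what makes an output good.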

© Copyright Notice

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
