PixVerse V4 released: video realism, sound, and speed improved across the board

Since the start of 2025, the AI video space has seen a technology race moving faster than expected. Shortly after the release of OmniHuman-1, PixVerse followed suit with a model update, officially announcing PixVerse V4. As an observer of AI technology, I was given early access to PixVerse V4 five days ago. After comprehensive testing, I found visible progress in text-to-video, image-to-video, and new features, and the underlying model has taken a qualitative leap.

Significant improvement in text-to-video generation

PixVerse V4's text-to-video capability is impressive. Below are a few cases I tested to show what the new model produces:

Text-to-Video Case 1: Tornado Disaster Movie

Prompt: Tornadoes, high-speed movement, tension and excitement, a retro '80s orange sports car on a city highway moving and drifting at high speed. Disaster movie atmosphere.

Text-to-Video Case 2: Foggy Stag

Prompt: An ethereal stag with a body made of silvery mist that shimmers faintly in the moonlight. Its antlers are adorned with floating orbs of light, and it moves silently, leaving shimmering trails of mist in its wake.

Text-to-Video Case 3: Space-Time Warp Leap

Prompt: Space-time warping leap sequence: Einstein field equations are applied to simulate space-time bending, and the camera moves along the Kerr black hole view interface to activate the time dilation visual effect. A spacetime singularity is set at the leap point, and the Penrose solver algorithm is introduced to compute the light-cone distortion (distortion factor 145%).

PixVerse V4 handles sci-fi and hard-physics effects particularly well, with stunning results. To illustrate this, here is one more case, generated from the following prompt:

Prompt: Hyperspherical dimension-leaping lens: 11-dimensional spatial projection of lens motion using a Riemannian manifold trajectory algorithm to activate a visual early warning system for topological defects during dimensional collapse.

In the hyperspherical dimension-leaping case, text appears in the video. My tests show, however, that on-screen text is still hard to control in the current version. Text can be rendered in text-to-video output but is not yet supported in image-to-video output, and only English text is supported.

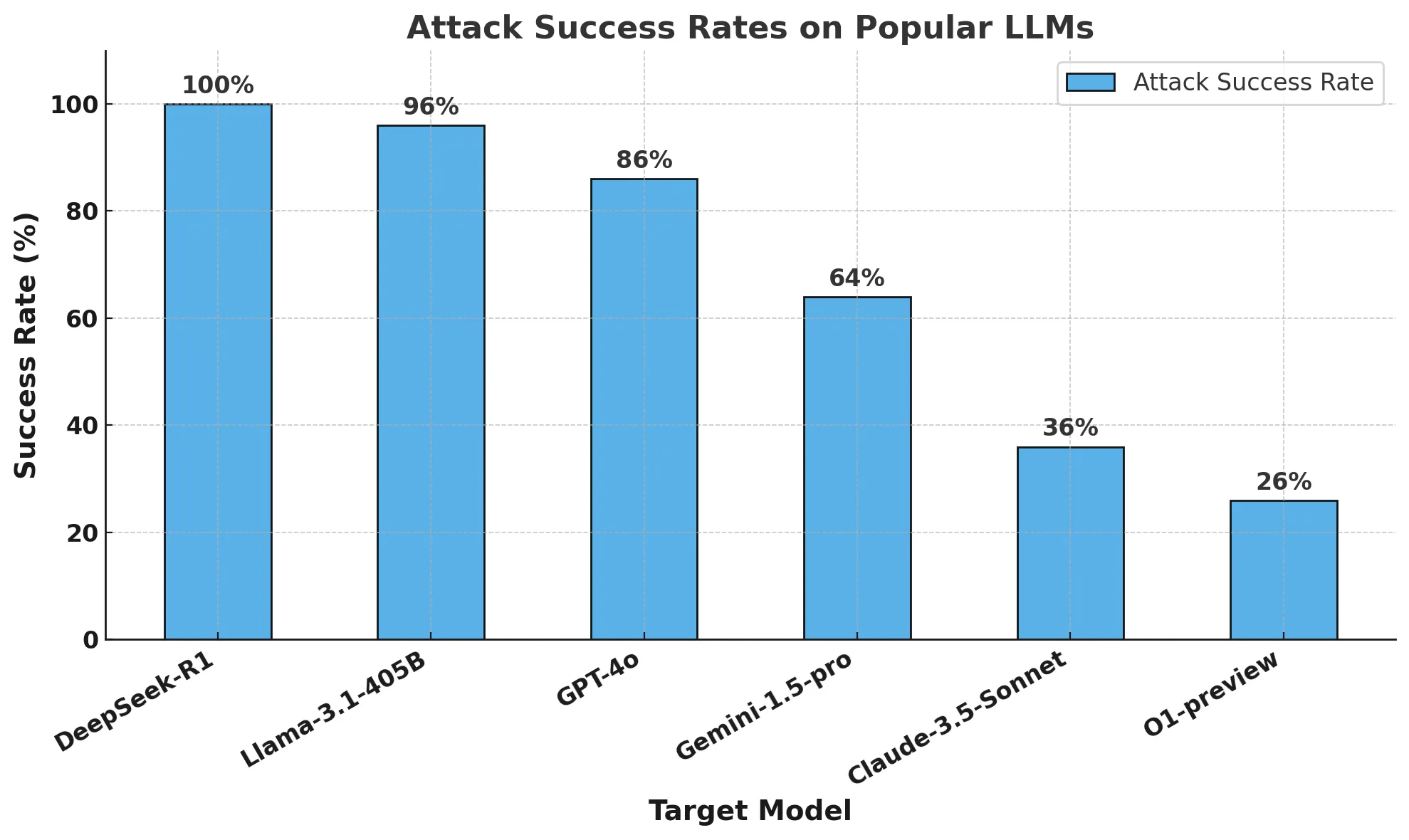

Generation speed increases dramatically, and V4 is firmly in the top tier

Beyond the gains in output quality, PixVerse V4 is also dramatically faster. In Extreme Mode, a video can be generated in about 5 seconds. Overall, V4 improves on V3.5 in every respect, and the new model clearly belongs in the first tier of today's AI video field.

PixVerse's history shows a remarkable pace of iteration. V1 went live on January 15, 2024, followed by V2 on July 24, V2.5 on August 22, V3 on October 29, and V3.5 on December 29. The latest version, V4, was released on February 24. PixVerse's strategy of iterating quickly on model capability is the right one. Its effects templates have generated plenty of buzz on social media, and the app has even topped the App Store charts in some Middle Eastern countries. Despite this success, PixVerse has not slowed model iteration. If anything, it has accelerated it.

Since DeepSeek attracted widespread attention, there has been a general consensus in the AI field that "the only application of AI is intelligence itself." Model capability is the cornerstone of AI development. Application-level features and user-experience improvements lower the barrier to using AI, and they act as a multiplier on model capability. Suppose the model capability is 10 points and an engaging effects-template feature acts as a multiplier of 10. The final application can then reach 100 points. If the model capability is only 1 point, however, even a powerful application feature cannot deliver a satisfying result.
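The multiplier analogy above can be written as a simple product. This is only a sketch that assumes the two factors combine multiplicatively, as the analogy implies. The symbols $M$ (model capability), $k$ (application-feature multiplier), and $P$ (final application performance) are introduced here for illustration and are not from any formal metric:

```latex
P = M \times k
\qquad
\begin{aligned}
M = 10,\; k = 10 &\;\Rightarrow\; P = 100 \\
M = 1,\; k = 10 &\;\Rightarrow\; P = 10
\end{aligned}
```

Under this reading, the same feature multiplier produces a tenfold gap in the final result. That gap is why model capability is treated as the cornerstone.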

By contrast, Runway, another company in the AI video space, deserves recognition for feature innovations such as Act-One and Super Lens Motion. Over the past six months, however, Runway's model does not appear to have received a significant update. Anyone in the industry knows how long six months is in a field moving this fast. PixVerse has struck a balance between model iteration and feature innovation. By steadily improving the model's underlying capabilities at a rapid pace, it makes each new feature more valuable.

Sound Features & Effects Templates Upgraded

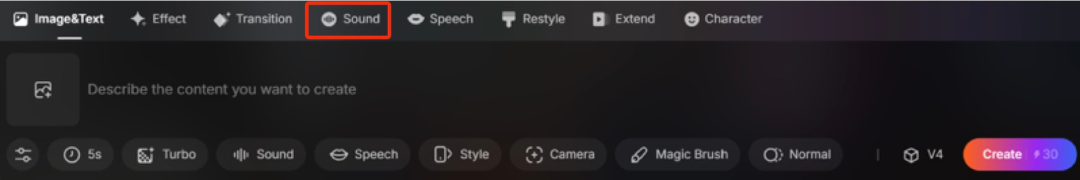

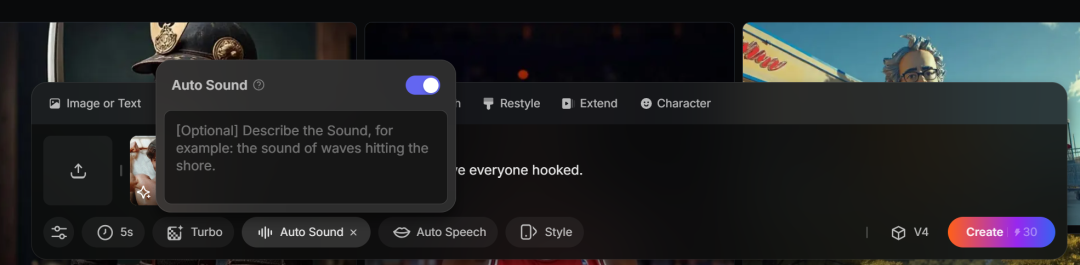

PixVerse V4 introduces a compelling new feature: Sound.

Careful readers may have noticed that most of the examples in the previous section are shown as videos, not GIFs. This is because videos generated by PixVerse V4 already come with natural, smooth sound effects. (Any earlier text-to-video examples that are not shown as videos are presented that way only because the platform limits the number of videos per article.) Simply enable the Sound function, and the generated video is automatically matched with sound effects.

PixVerse V4 also adds new features such as Speech (lip sync) and Restyle (style transfer), which you can try for yourself. As noted above, a strong base model is a prerequisite for each of these features to work well.

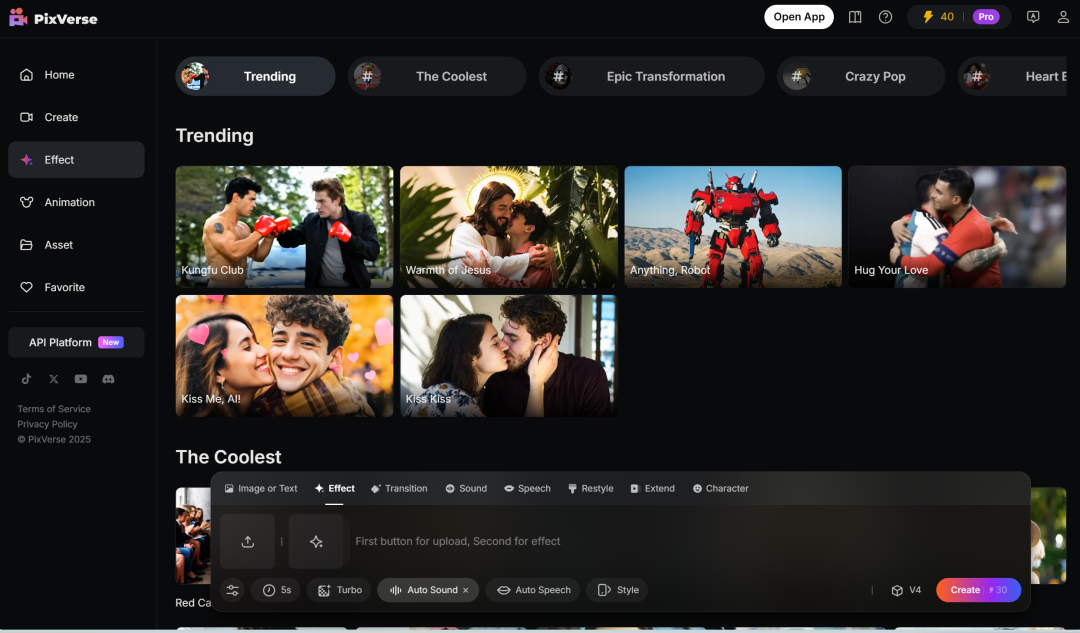

Last but not least, there is PixVerse's star feature: effects templates. PixVerse pioneered effects templates and has been widely praised for them. With V4's model capabilities, it has taken their quality to the next level, and the texture and motion of template-generated videos have improved once again.

In short, strong model capability is the cornerstone of AI video development. Only by continuously improving the model's intelligence can effects, sound, style transfer, and other application features realize their full potential and give users innovative experiences. Competition in AI video will only intensify, and technological innovation will be the key for vendors such as PixVerse to keep their lead.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
