PilottAI: An Open Source Project for Building Enterprise-Class Multi-Agent Applications

General Introduction

PilottAI is an open source Python framework hosted on GitHub and created by developer anuj0456. It helps users build enterprise-class multi-agent systems and provides large language model (LLM) integration, task scheduling, dynamic scaling, and fault tolerance. PilottAI's goal is to let developers build scalable AI applications with simple code, for example to automate document processing, manage customer service, or analyze data. It is completely free, and its code is open to individual programmers and enterprise users. The official documentation is detailed, the framework supports Python 3.10+, and it is released under the MIT license.

Feature List

- Hierarchical Agent System: Supports division of labor between manager and worker agents, with intelligent task assignment.

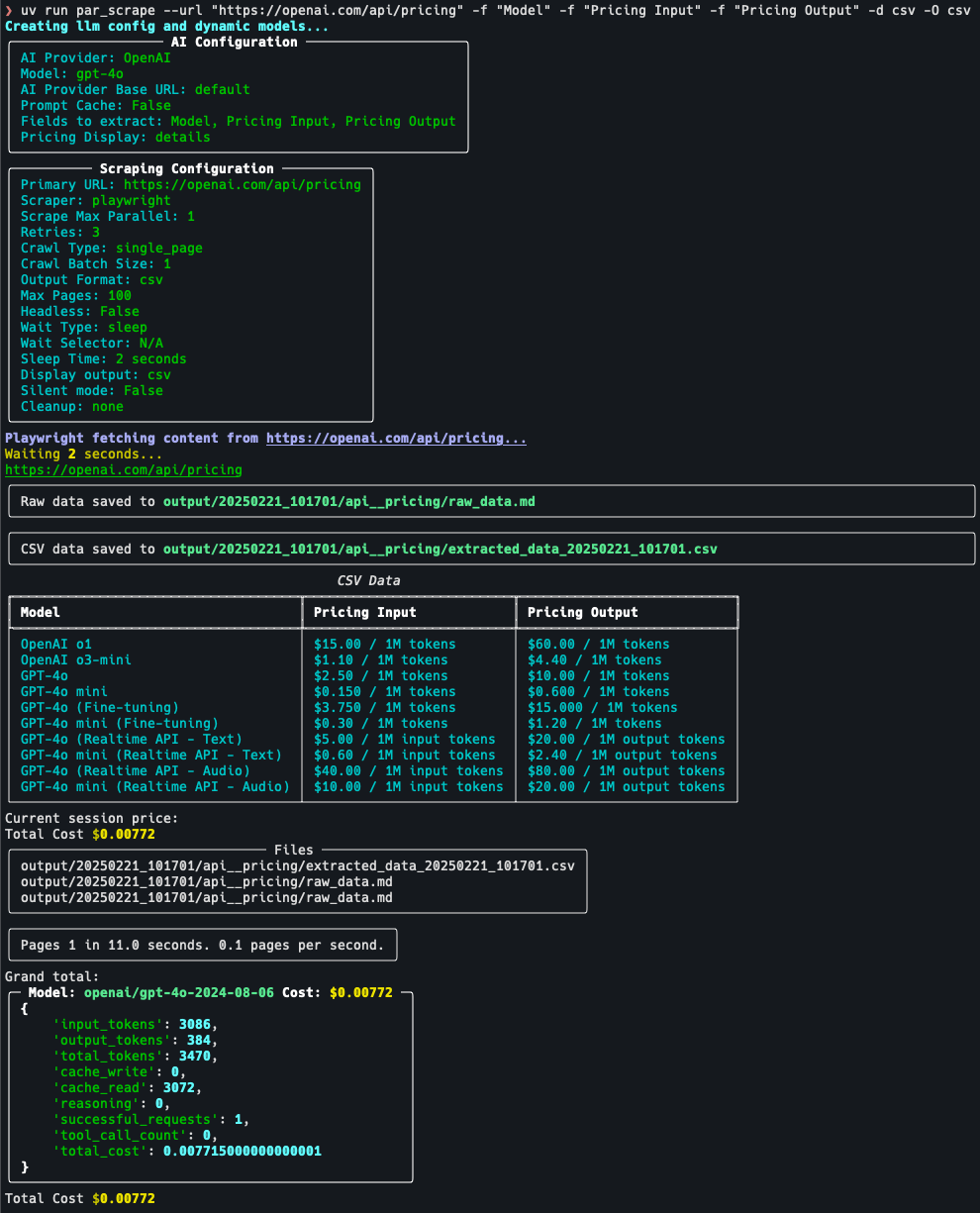

- Large Language Model Integration: Compatible with OpenAI, Anthropic, Google, and many other LLM providers.

- Dynamic Scaling: Automatically adjusts the number of agents according to the task load.

- Fault Tolerance: Recovers automatically from system errors to keep operation stable.

- Load Balancing: Distributes tasks sensibly to avoid overload.

- Task Orchestration: Supports multi-step workflows such as extraction, analysis, and summarization.

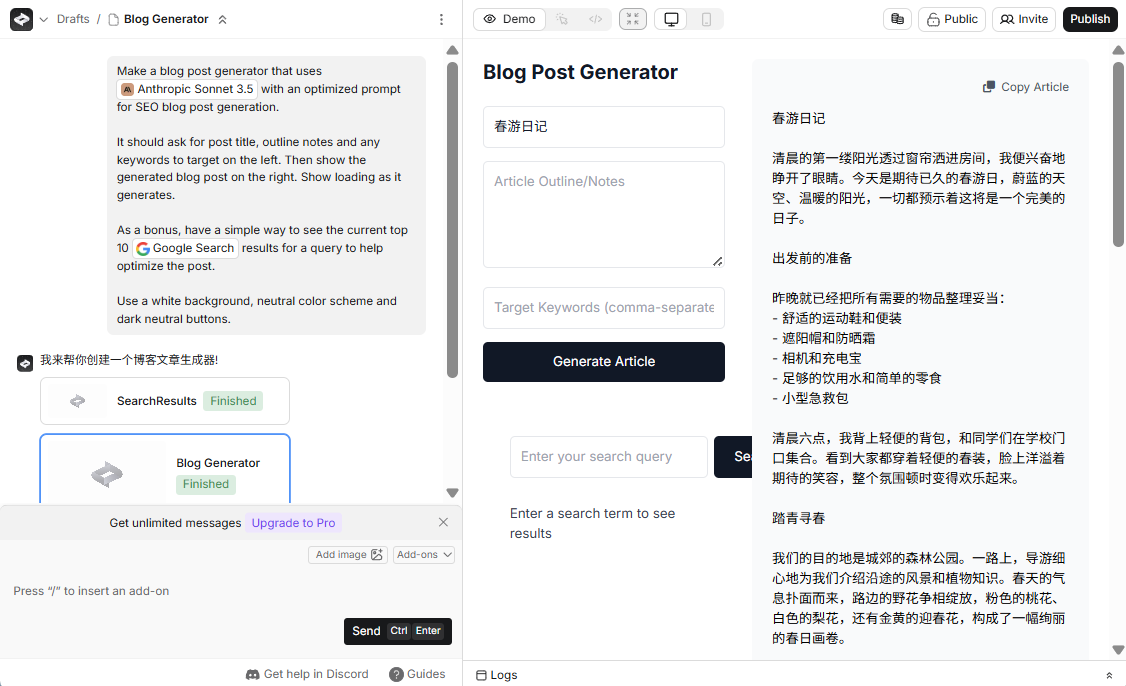

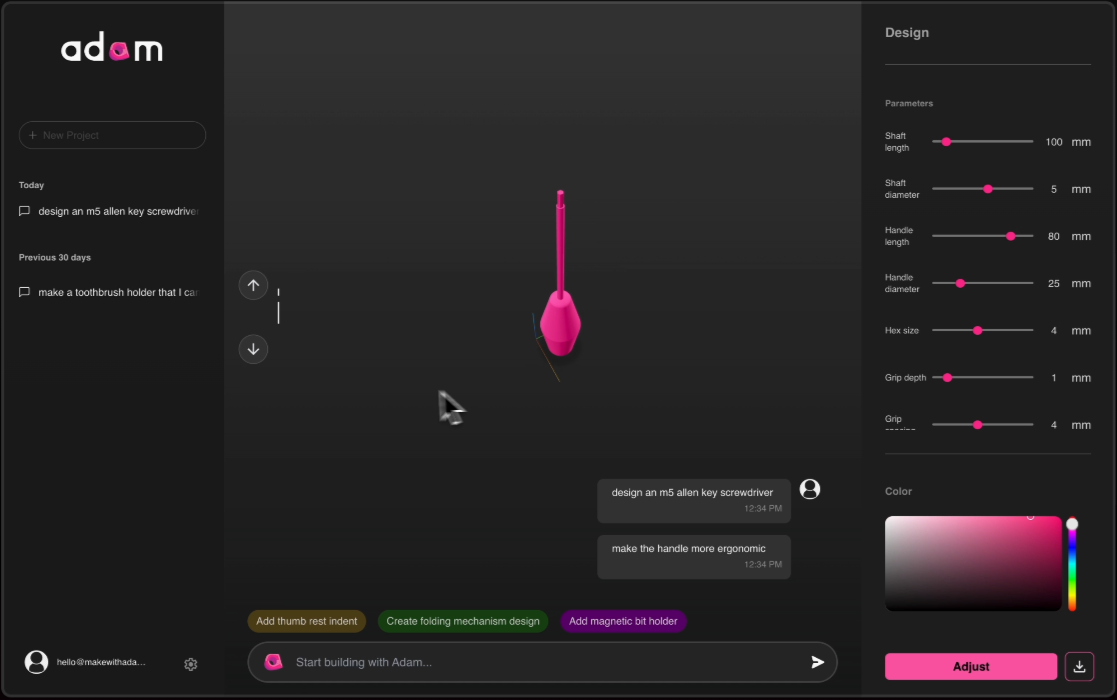

- Specialized Agents: Built-in agents for customer service, document processing, email management, and research analysis.

- Advanced Memory Management: Stores task context to support semantic retrieval.

- Tool Support: Integrates document processing, WebSocket, and custom tools.
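
To make the manager/worker division of labor in the feature list more concrete, here is a minimal sketch of the general pattern using only Python's standard asyncio library. It is not PilottAI's implementation and does not call its API. The names `manager` and `worker` are illustrative, and upper-casing a string stands in for real work such as an LLM call.

```python
import asyncio


async def worker(name: str, queue: asyncio.Queue, results: list) -> None:
    """Worker agent: pull tasks from the shared queue until cancelled."""
    while True:
        task = await queue.get()
        try:
            # Stand-in for real work such as an LLM call (assumption).
            await asyncio.sleep(0)
            results.append((name, task.upper()))
        finally:
            queue.task_done()


async def manager(tasks: list[str], num_workers: int = 2) -> list:
    """Manager agent: enqueue tasks, start workers, wait for completion."""
    queue: asyncio.Queue = asyncio.Queue()
    results: list = []
    workers = [
        asyncio.create_task(worker(f"worker-{i}", queue, results))
        for i in range(num_workers)
    ]
    for task in tasks:
        queue.put_nowait(task)
    await queue.join()  # block until every queued task has been processed
    for w in workers:
        w.cancel()  # workers loop forever, so stop them explicitly
    await asyncio.gather(*workers, return_exceptions=True)
    return results


if __name__ == "__main__":
    done = asyncio.run(manager(["extract", "analyze", "summarize"]))
    print(sorted(result for _, result in done))
```

A shared queue gives a simple form of load balancing: whichever worker is idle picks up the next task. Changing `num_workers` is the simplest way to picture scaling the pool up or down.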

Usage Guide

Installation process

PilottAI requires a Python environment to run. Here are the detailed steps:

- Check the Python version

Make sure Python 3.10 or later is installed. Type in the terminal:

python --version

If your version is lower than 3.10, download and install a newer release from the Python website.

- Download the code

Clone the PilottAI repository with Git:

git clone https://github.com/anuj0456/pilottai.git

Go to the project directory:

cd pilottai

- Install dependencies

Run in the terminal:

pip install pilott

This automatically installs the required libraries. If you need to install dependencies manually, see the official documentation; the framework builds on asyncio (part of the Python standard library) and LLM-related libraries.

- Verify the installation

Run the test code:

python -m pilott.test

If there are no error messages, the installation was successful.
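
As a complement to the steps above, the following sketch checks the two prerequisites from inside Python: the interpreter version, and whether a package named `pilott` can be found. It uses only the standard library. The helper names `python_ok` and `package_installed` are hypothetical, and the package name is taken from the import statements in this article.

```python
import importlib.util
import sys

MIN_PYTHON = (3, 10)


def python_ok(version=None) -> bool:
    """Return True if the given (or current) version meets the 3.10+ requirement."""
    current = tuple(version) if version is not None else tuple(sys.version_info[:2])
    return current[:2] >= MIN_PYTHON


def package_installed(name: str) -> bool:
    """Return True if a top-level package can be located without importing it."""
    return importlib.util.find_spec(name) is not None


if __name__ == "__main__":
    print("Python 3.10+:", python_ok())
    print("pilott importable:", package_installed("pilott"))
```

If the second check prints `False`, the package is not visible to the interpreter you are running, which often means it was installed into a different environment.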

How to use the main features

1. Configure and start the system

PilottAI needs to be configured with an LLM and agents before it can work. Example code:

    import asyncio

    from pilott import Pilott
    from pilott.core import AgentConfig, AgentRole, LLMConfig

    # Configure the LLM
    llm_config = LLMConfig(
        model_name="gpt-4",
        provider="openai",
        api_key="your-api-key"  # Replace with your API key
    )

    # Configure the agent
    config = AgentConfig(
        role="processor",
        role_type=AgentRole.WORKER,
        goal="Process documents efficiently",
        description="Document processing worker",
        max_queue_size=100
    )

    async def main():
        # Initialize the system
        pilott = Pilott(name="DocumentProcessor")
        await pilott.start()

        # Add the agent
        agent = await pilott.add_agent(
            agent_type="processor",
            config=config,
            llm_config=llm_config
        )

        # Stop the system
        await pilott.stop()

    if __name__ == "__main__":
        asyncio.run(main())

- Note: Replace api_key with the key you obtained from a provider such as OpenAI. When run, the system starts a document processing agent.
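
Rather than pasting the key into source code, a common approach is to read it from an environment variable. The sketch below assumes the variable is named `OPENAI_API_KEY`, the conventional name for OpenAI keys. The helper `load_api_key` is hypothetical and is not part of PilottAI.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the API key from the environment and fail early if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return key
```

The returned string can then be passed as the `api_key` argument shown in the configuration example, which keeps the secret out of version control.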

2. Processing PDF documents

PilottAI can process PDF documents by running a task. Steps:

- Place a PDF file (e.g. report.pdf) in the project directory.

- Run the following code, passing in the system instance started in step 1:

    async def process_pdf(pilott):
        # Submit a PDF processing task and wait for the result
        result = await pilott.execute_task({
            "type": "process_pdf",
            "file_path": "report.pdf"
        })
        print("Result:", result)

- The system extracts the contents of the file and returns the result.
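
Before submitting the task, it can help to confirm that the file really exists and has a .pdf extension. The sketch below builds the same task dictionary as the example above after those checks. `build_pdf_task` is a hypothetical helper and not a PilottAI function; the dictionary keys are copied from this article.

```python
from pathlib import Path


def build_pdf_task(file_path: str) -> dict:
    """Validate the path, then return a task dictionary for PDF processing."""
    path = Path(file_path)
    if not path.is_file():
        raise FileNotFoundError(f"No such file: {path}")
    if path.suffix.lower() != ".pdf":
        raise ValueError(f"Expected a .pdf file, got: {path.name}")
    # Keys taken from the article's example; their meaning is defined by PilottAI.
    return {"type": "process_pdf", "file_path": str(path)}
```

Failing early with a clear error is usually easier to debug than a failure reported from inside an agent.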

3. Creating specialized agents

PilottAI offers a range of prebuilt agents, such as the research analysis agent:

- Add the agent (inside an async function such as main()):

    researcher = await pilott.add_agent(
        agent_type="researcher",
        config=AgentConfig(
            role="researcher",
            goal="Analyze data and generate reports",
            description="Research analysis assistant"
        ),
        llm_config=llm_config
    )

- Use the agent to perform a task:

    result = await pilott.execute_task({
        "type": "analyze_data",
        "data": "Market sales data"
    })
    print("Analysis result:", result)

4. Configure load balancing and fault tolerance

- Load balancing: Set the check interval and overload threshold:

    from pilott.core import LoadBalancerConfig

    load_balancer_config = LoadBalancerConfig(
        check_interval=30,      # Check every 30 seconds
        overload_threshold=0.8  # Treat 80% load as overloaded
    )

- Fault tolerance: Set the number of recovery attempts and the heartbeat timeout:

    from pilott.core import FaultToleranceConfig

    fault_tolerance_config = FaultToleranceConfig(
        recovery_attempts=3,   # Attempt recovery 3 times
        heartbeat_timeout=60   # Treat 60 seconds without a response as a failure
    )
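
To illustrate what these settings could mean in practice, here is a plain-Python sketch of the three checks. It is not PilottAI's internal logic. It assumes that "load" means queue length divided by maximum queue size, that a heartbeat is a timestamp in seconds, and that `recovery_attempts` counts total tries. All function names are hypothetical.

```python
def is_overloaded(queued: int, max_queue_size: int, threshold: float = 0.8) -> bool:
    """Treat an agent as overloaded once its queue is at least `threshold` full."""
    return queued / max_queue_size >= threshold


def is_unresponsive(last_heartbeat: float, now: float, timeout: float = 60) -> bool:
    """Treat an agent as failed if its last heartbeat is older than `timeout` seconds."""
    return now - last_heartbeat > timeout


def run_with_recovery(action, attempts: int = 3):
    """Call `action` up to `attempts` times and re-raise the last error if all fail."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return action()
        except Exception as exc:  # broad on purpose: any failure triggers a retry
            last_error = exc
    raise last_error
```

With `max_queue_size=100` from the agent configuration in step 1, an agent holding 90 queued tasks would count as overloaded under a 0.8 threshold, while one holding 50 would not.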

Notes

- Network requirement: Using an LLM requires a network connection; make sure the API key is valid.

- Documentation reference: For detailed configuration, see the official documentation.

- Debugging: When something goes wrong, check the terminal logs, or submit an issue on GitHub.

Application Scenarios

- Enterprise Document Processing

Use the document processing agent to analyze contracts or reports, extract key terms, and improve efficiency.

- Customer Support Automation

Customer service agents can handle inquiries and generate responses, reducing manual workload.

- Research Data Analysis

The research analysis agent organizes information and analyzes trends, which suits academic and business research.

- Email Management

The email agent automatically sorts emails, generates templates, and streamlines communication.

QA

- What LLMs does PilottAI support?

It supports OpenAI, Anthropic, Google, and many other providers; see the documentation for specific models.

- Do I have to pay for it?

The framework is free, but using an LLM service may incur API fees.

- How do I customize an agent?

Use the add_agent method to set its role and goal, as shown in the documentation examples.

- What should I do about runtime errors?

Check the Python version, dependencies, and network connection, or consult the GitHub issues page.

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
