PengChengStarling: A Multilingual Speech-to-Text Tool Smaller and Faster than Whisper-Large v3

General Introduction

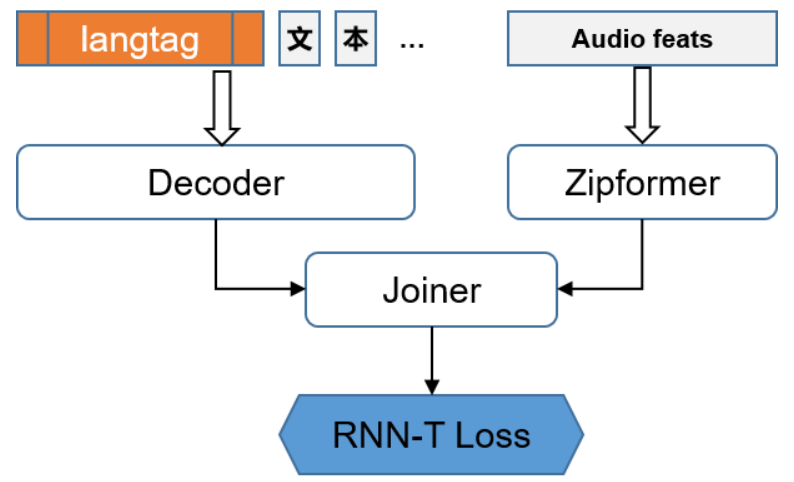

PengChengStarling (from PengCheng Labs) is a multilingual automatic speech recognition (ASR) toolkit that converts speech in different languages into the corresponding text. Built on the icefall project, it covers the complete speech recognition pipeline: data processing, model training, inference, fine-tuning, and deployment. PengChengStarling supports streaming speech recognition in eight languages: Chinese, English, Russian, Vietnamese, Japanese, Thai, Indonesian, and Arabic. Its main application scenarios include voice assistants, translation tools, subtitle generation, and voice search. The model is 20% the size of Whisper-Large v3, and its inference is 7 times faster than Whisper-Large v3.

Its defining features are that it handles multilingual speech input within a single unified framework and supports real-time (streaming) recognition, transcribing while the speaker is still talking. This makes it suitable for transcribing international conferences, automatically generating subtitles for multilingual videos, and building cross-language customer service systems.

Function List

- Data processing: supports preprocessing of multiple datasets into the required input format.

- Model training: provides flexible training configurations for multilingual speech recognition tasks.

- Inference: fast inference with support for streaming speech recognition.

- Fine-tuning: supports fine-tuning models to fit specific task requirements.

- Deployment: provides models in PyTorch and ONNX formats for easy deployment.

Usage Guide

Installation process

- Clone the project repository:

git clone https://github.com/yangb05/PengChengStarling

cd PengChengStarling

- Install the dependencies:

pip install -r requirements.txt

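export PYTHONPATH=/tmp/PengChengStarling:$PYTHONPATH

This line assumes the repository was cloned into /tmp; if you cloned it elsewhere, replace /tmp/PengChengStarling with the path to your clone.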
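The export command hardcodes /tmp/PengChengStarling. A path-independent variant, as a minimal sketch assuming a POSIX shell and that you are in the root of the cloned repository, derives the directory from pwd instead:

```sh
# Run from the root of the cloned PengChengStarling repository.
# Prepend the current directory to PYTHONPATH. ${PYTHONPATH:+...} adds the
# ":" separator only when PYTHONPATH already has a value, so no trailing
# colon is left behind when it was empty or unset.
export PYTHONPATH="$(pwd)${PYTHONPATH:+:$PYTHONPATH}"
```

Paste or source this in the shell you will train from; running it as a separate script would not change the parent shell's environment.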

Data preparation

Before starting the training process, the raw data must first be preprocessed into the required input format. Typically, this involves adapting the make_*_list method in zipformer/prepare.py to generate the data.list file. Upon completion, the script generates the corresponding cuts and fbank features for each dataset, which serve as the input data for PengChengStarling.
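The exact layout of data.list is defined by zipformer/prepare.py, so check the existing make_*_list methods there before adapting one. Purely as an illustration, the POSIX shell sketch below assumes a WeNet-style JSON-lines format with "key", "wav" and "txt" fields, a transcript file of utt_id<TAB>text lines, and audio stored as <wav_dir>/<utt_id>.wav. The function name make_data_list and all of these layouts are hypothetical.

```sh
# Hypothetical sketch of a make_*_list-style step; not PengChengStarling code.
# ASSUMED inputs: a TSV of "utt_id<TAB>transcript" lines and audio stored
# as <wav_dir>/<utt_id>.wav. ASSUMED output: JSON lines with key/wav/txt.
# Usage: make_data_list TRANSCRIPTS_TSV WAV_DIR OUTPUT_LIST
make_data_list() (
  tsv=$1 wav_dir=$2 out=$3
  tab=$(printf '\t')
  : > "$out"                                   # create or truncate output
  # "|| [ -n "$key" ]" keeps a final line that lacks a trailing newline.
  while IFS="$tab" read -r key text || [ -n "$key" ]; do
    [ -n "$key" ] || continue                  # skip blank lines
    # JSON-escape backslashes, double quotes and tabs in the transcript.
    text=$(printf '%s' "$text" |
      sed -e 's/\\/\\\\/g' -e 's/"/\\"/g' -e "s/$tab/\\\\t/g")
    printf '{"key": "%s", "wav": "%s/%s.wav", "txt": "%s"}\n' \
      "$key" "$wav_dir" "$key" "$text" >> "$out"
  done < "$tsv"
)
```

For example, make_data_list transcripts.tsv /data/wavs data.list writes one JSON object per utterance. The function body runs in a subshell so its variables do not leak into the calling shell.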

Model training

- Configure the training parameters: set the parameters required for training in the config_train directory.

- Initiate training:

./train.sh
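Since the training parameters live in config_train, it can help to keep a copy of the configuration next to each run's log. The sketch below is one way to do that, assuming config_train and train.sh both sit in the repository root; run_training and the runs/<timestamp>/ layout are hypothetical, and any arguments are forwarded to train.sh unchanged.

```sh
# Hypothetical wrapper; run_training and the runs/<timestamp>/ layout are
# illustrative, not part of PengChengStarling.
# Usage (from the repository root): run_training [ARGS...]
run_training() (
  run_dir="runs/$(date +%Y%m%d-%H%M%S)"
  mkdir -p "$run_dir" || exit 1
  # Snapshot the configuration this run was launched with.
  cp -R config_train "$run_dir/" || exit 1
  # Forward any arguments to train.sh unchanged and capture all output.
  ./train.sh "$@" > "$run_dir/train.log" 2>&1
  status=$?
  echo "train.sh exited with status $status; log: $run_dir/train.log"
  exit "$status"                               # propagate train.sh's status
)
```

Run it as run_training (or run_training --finetune to wrap the fine-tuning command) and follow progress with tail -f on the printed log path.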

Inference

- Prepare inference data: preprocess the data into the desired format.

- Run inference:

./eval.sh
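Besides running eval.sh, you may want to spot-check decoded output yourself. Word error rate (WER) is the word-level edit distance (substitutions, insertions and deletions) between reference and hypothesis, divided by the number of reference words. The sketch assumes two hypothetical plain-text files with one utterance per line in the same order and whitespace-separated words; for Chinese, Japanese or Thai, which are not written with spaces between words, insert spaces between characters first to get a character error rate instead.

```sh
# Word error rate between two line-aligned files: wer REF_FILE HYP_FILE
# Hypothetical helper; words are split on whitespace.
wer() {
  paste "$1" "$2" | awk -F '\t' '
    function min3(a, b, c) { return (a < b) ? ((a < c) ? a : c) : ((b < c) ? b : c) }
    # Word-level Levenshtein distance (substitutions, insertions, deletions).
    function dist(r, h,    x, y, n, m, i, j, d) {
      n = split(r, x, " "); m = split(h, y, " ")
      for (i = 0; i <= n; i++) d[i, 0] = i
      for (j = 0; j <= m; j++) d[0, j] = j
      for (i = 1; i <= n; i++)
        for (j = 1; j <= m; j++)
          d[i, j] = min3(d[i-1, j] + 1, d[i, j-1] + 1, d[i-1, j-1] + (x[i] != y[j]))
      return d[n, m]
    }
    { errs += dist($1, $2); words += split($1, w, " ") }
    END {
      printf "WER: %.2f%% (%d errors / %d words)\n", (words ? 100 * errs / words : 0), errs, words
    }'
}
```

For example, wer ref.txt hyp.txt prints a single summary line with the aggregate WER over all utterances.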

Fine-tuning

- Prepare fine-tuning data: preprocess the data into the required format.

- Initiate fine-tuning:

./train.sh --finetune

Deployment

PengChengStarling provides models in two formats: a PyTorch state dictionary and ONNX. Choose the format that suits your deployment environment.

© Copyright notice

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
