par_scrape: a crawler tool to intelligently extract data from web pages

General Introduction

par_scrape is an open source, Python-based web scraping tool published on GitHub by developer Paul Robello. It is designed to help users intelligently extract data from web pages. It integrates two browser automation technologies, Selenium and Playwright, and combines them with AI processing to handle everything from simple static pages to complex dynamic websites. Whether the target is prices, headlines, or other structured information, you specify the fields to extract and par_scrape outputs the results as Markdown, JSON, or CSV. The project is aimed at developers, data analysts, and anyone who wants to automate collecting information from the web. It is easy to install, flexible to configure, and popular in the open source community.

Function List

- Intelligent Data Extraction: Analyze web content with AI models such as OpenAI or Anthropic to accurately extract user-specified fields.

- Dual Crawler Support: Supports both Selenium and Playwright, adapting to the needs of different website architectures.

- Multiple Output Formats: Capture results can be exported to Markdown, JSON, CSV or Excel for easy subsequent processing.

- Custom Field Grabbing: Users can specify the fields to be extracted, such as title, description, price, etc., to meet personalized needs.

- Parallel Capture: Supports multi-threaded crawling to improve the efficiency of large-scale data collection.

- Waiting Mechanism: Provides several page-load waiting methods (e.g., pause, selector waiting) so that dynamic content is captured successfully.

- AI Model Selection: Supports multiple AI providers (e.g., OpenAI, Anthropic, XAI) for flexible adaptation to different tasks.

- Cache Optimization: Built-in prompt caching reduces the cost of duplicate requests and improves efficiency.

Usage Guide

Installation process

Before using par_scrape, complete the following installation steps to prepare your environment:

1. Environment preparation

- Python version: Ensure that Python 3.11 or later is installed on your system. Check with the command:

python --version

- Git: Used to clone the code from GitHub. If it is not installed, install it with the following command (Linux) or download it from the official website:

sudo apt install git

- UV: Using UV to manage dependencies is recommended. The installation commands are:

- Linux/Mac:

curl -LsSf https://astral.sh/uv/install.sh | sh

- Windows:

powershell -ExecutionPolicy ByPass -c "irm https://astral.sh/uv/install.ps1 | iex"
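For scripted setups, the Python version requirement can also be checked programmatically. The following is a minimal sketch; the helper name meets_minimum is hypothetical and not part of par_scrape.

```python
import sys

# Minimum version stated in this guide.
MIN_PYTHON = (3, 11)


def meets_minimum(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True if the (major, minor) version is at least `minimum`."""
    return tuple(version_info[:2]) >= tuple(minimum)


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    if meets_minimum():
        print(f"Python {current} is new enough for par_scrape.")
    else:
        print(f"Python {current} is too old; 3.11 or later is required.")
```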

2. Cloning projects

Clone the par_scrape project locally by entering the following command in the terminal:

git clone https://github.com/paulrobello/par_scrape.git

cd par_scrape

3. Installation of dependencies

Use UV to install project dependencies:

uv sync

Or install it directly from PyPI:

uv tool install par_scrape

# Or use pipx

pipx install par_scrape

4. Installation of Playwright (optional)

If you choose Playwright as your crawler, you also need to install Playwright and its browser:

uv tool install playwright

playwright install chromium

5. Configure the API key

par_scrape supports multiple AI providers, and you need to configure the corresponding key as an environment variable. Edit the ~/.par_scrape.env file and add the following (choose the ones you need):

OPENAI_API_KEY=your_openai_key

ANTHROPIC_API_KEY=your_anthropic_key

XAI_API_KEY=your_xai_key

Or set the environment variable before running the command:

export OPENAI_API_KEY=your_openai_key
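Before running the tool, it can help to confirm which provider keys are actually configured. The sketch below assumes that ~/.par_scrape.env contains plain KEY=value lines like the ones shown in this step. The function names parse_env_text and configured_providers are hypothetical helpers, not part of par_scrape.

```python
import os
from pathlib import Path

# Key names taken from the configuration example in this guide.
PROVIDER_KEYS = ("OPENAI_API_KEY", "ANTHROPIC_API_KEY", "XAI_API_KEY")


def parse_env_text(text):
    """Parse simple KEY=value lines, skipping blanks, comments, and bad lines."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def configured_providers(file_values, environ=None):
    """Return provider key names set in the file or in the environment."""
    environ = os.environ if environ is None else environ
    return [
        name for name in PROVIDER_KEYS
        if file_values.get(name) or environ.get(name)
    ]


if __name__ == "__main__":
    env_path = Path.home() / ".par_scrape.env"
    text = env_path.read_text() if env_path.exists() else ""
    print(configured_providers(parse_env_text(text)))
```

An empty list means no key was found in either place, in which case AI extraction is unlikely to work.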

Usage

Once the installation is complete, you can run par_scrape from the command line. The detailed procedure is as follows:

Basic Usage Example

Suppose you want to extract the title, description and price from the OpenAI pricing page:

par_scrape --url "https://openai.com/api/pricing/" -f "Title" -f "Description" -f "Price" --model gpt-4o-mini --display-output md

- --url: The address of the target web page.

- -f: Specifies a field to extract; can be used multiple times.

- --model: Selects the AI model (e.g. gpt-4o-mini).

- --display-output: Output format (md, json, csv, etc.).
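When the same extraction is run against many pages, the command can be assembled programmatically. The sketch below builds the argument list using only the flags from the basic usage example. build_command is a hypothetical helper, and the command is printed rather than executed.

```python
import shlex


def build_command(url, fields, model="gpt-4o-mini", display_output="md"):
    """Build a par_scrape argument list from the flags shown in this guide."""
    if not fields:
        raise ValueError("at least one field is required")
    args = ["par_scrape", "--url", url]
    for field in fields:
        # -f may be repeated, once per field to extract.
        args += ["-f", field]
    args += ["--model", model, "--display-output", display_output]
    return args


if __name__ == "__main__":
    cmd = build_command(
        "https://openai.com/api/pricing/",
        ["Title", "Description", "Price"],
    )
    # shlex.join quotes each argument so the line can be pasted into a shell.
    print(shlex.join(cmd))
```

Once par_scrape is installed, the same list could be passed to subprocess.run(cmd, check=True) instead of being printed.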

Featured Operations

- Switching Crawler Tools

Playwright is used by default. To use Selenium instead, add the --scraper parameter:

par_scrape --url "https://example.com" -f "Title" --scraper selenium

- Parallel Capture

Set the maximum number of parallel requests to improve efficiency:

par_scrape --url "https://example.com" -f "Data" --scrape-max-parallel 5

- Dynamic Page Waiting

For dynamically loaded content, you can set the wait type and selector:

par_scrape --url "https://example.com" -f "Content" --wait-type selector --wait-selector ".dynamic-content"

Supported wait types include none, pause, sleep, idle, selector, and text.

- Customizing the Output Path

Save the results to the specified folder:

par_scrape --url "https://example.com" -f "Title" --output-folder ./my_data
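The wait options can be validated before a command is launched. This sketch treats the wait types listed in this section as the complete set, and it assumes that the selector wait type is only useful together with --wait-selector. Both points are assumptions rather than confirmed behavior, and wait_args is a hypothetical helper.

```python
# Wait types listed in this guide; treated here as the full set (assumption).
WAIT_TYPES = ("none", "pause", "sleep", "idle", "selector", "text")


def wait_args(wait_type, wait_selector=None):
    """Return the --wait-type / --wait-selector arguments for par_scrape."""
    if wait_type not in WAIT_TYPES:
        raise ValueError(f"unsupported wait type: {wait_type!r}")
    if wait_type == "selector" and not wait_selector:
        # Assumption: a selector wait needs a selector to wait for.
        raise ValueError("wait type 'selector' needs a wait selector")
    args = ["--wait-type", wait_type]
    if wait_selector:
        args += ["--wait-selector", wait_selector]
    return args


if __name__ == "__main__":
    print(wait_args("selector", ".dynamic-content"))
```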

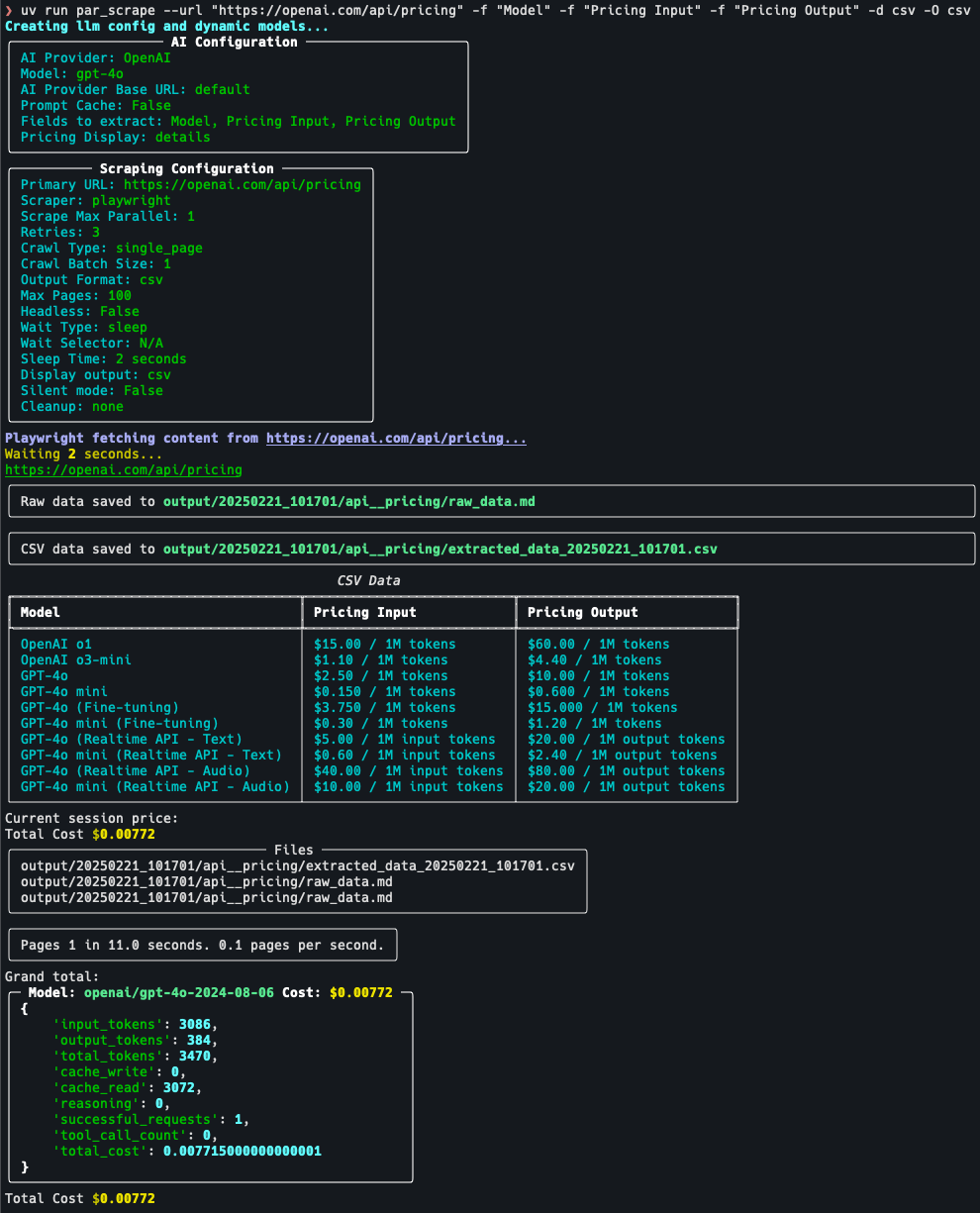

Detailed Walkthrough

Take scraping the pricing page as an example:

- Identify the target: Go to https://openai.com/api/pricing/ and confirm that you need to extract "Model", "Pricing Input", and "Pricing Output".

- Run the command:

par_scrape --url "https://openai.com/api/pricing/" -f "Model" -f "Pricing Input" -f "Pricing Output" --model gpt-4o-mini --display-output json

- View the results: After the command runs, the terminal displays the data in JSON format or saves it to the default output file.

- Adjust parameters: If the data is incomplete, try adding --retries 5 (number of retries) or adjusting --sleep-time 5 (wait time).
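Once results are available as JSON, they can be post-processed with the Python standard library. The sketch below assumes the output is a JSON array of objects keyed by the requested field names. That shape is an assumption for illustration, json_rows_to_csv is a hypothetical helper, and the sample values are placeholders rather than real scraped data.

```python
import csv
import io
import json

# Field names from the walkthrough command.
FIELDS = ["Model", "Pricing Input", "Pricing Output"]


def json_rows_to_csv(json_text, fields=FIELDS):
    """Convert a JSON array of objects into CSV text with the given columns."""
    rows = json.loads(json_text)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        # Keep only the requested fields; missing ones become empty cells.
        writer.writerow({name: row.get(name, "") for name in fields})
    return buffer.getvalue()


if __name__ == "__main__":
    # Placeholder data, not actual scraped output.
    sample = json.dumps([
        {"Model": "example-model", "Pricing Input": "N/A", "Pricing Output": "N/A"},
    ])
    print(json_rows_to_csv(sample))
```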

Notes

- API key: Ensure that the key is valid; otherwise the AI extraction feature is unavailable.

- Website restrictions: Some sites have anti-crawling mechanisms. It is recommended to use --headless (headless mode) or to adjust the crawl frequency.

- Cache usage: If you crawl the same page multiple times, enable --prompt-cache to reduce costs.

With the above steps, users can quickly get started with par_scrape and easily accomplish web page data extraction tasks.

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
