A survey of LLM frameworks similar to Ollama: options for deploying large models locally

Ollama has attracted a great deal of attention in the field of artificial intelligence and Large Language Models (LLMs). This open-source framework focuses on simplifying the local deployment and operation of LLMs, making it easy for more developers to try them out. Ollama is not alone, however: several other tools in the same category give developers a wider range of options. In this article, we take an in-depth look at several LLM framework tools similar to Ollama, in the hope of giving readers a more complete picture of the ecosystem and helping them find the tool that suits them best.

Introduction to the Ollama Framework

Ollama is designed to simplify the deployment and operation of Large Language Models (LLMs) in local environments. It supports a wide range of mainstream LLMs, such as Llama 2, Code Llama, Mistral, and Gemma, and lets users customize and create their own models. Its ChatGPT-like chat interface lets users interact with a model directly, without additional development. Ollama's clean codebase and low runtime resource usage also make it well suited to running on a local computer.
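As a rough illustration of talking to a locally running model from code, the sketch below builds a request for Ollama's local REST endpoint. It assumes Ollama's default address (http://localhost:11434), the /api/generate route, and a model named llama2 that has already been pulled; the helper names build_generate_request and generate are hypothetical and used only for this example.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request and return the generated text from the JSON reply."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


# Usage (needs a running Ollama server and a pulled model):
#   print(generate("llama2", "Why is the sky blue?"))
```

Separating request construction from sending keeps the payload easy to inspect without a running server.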

Tools similar to Ollama

Introduction to vLLM

vLLM (Vectorized Large Language Model Serving System) is an efficient inference and serving engine built for LLMs. It significantly improves LLM inference performance through its PagedAttention technique, continuous batching, optimized CUDA kernels, and distributed inference support.
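The idea behind PagedAttention, storing each sequence's key/value cache in fixed-size blocks that need not be contiguous, can be sketched with a toy allocator. This is not vLLM's implementation: the class PagedKVCache, the block size of 4, and the method names are all assumptions made for illustration, and no attention computation is performed.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 4  # tokens per KV-cache block (toy value, chosen for readability)


@dataclass
class PagedKVCache:
    """Toy allocator mapping each sequence to a list of fixed-size blocks."""
    num_blocks: int
    free_blocks: list = field(default_factory=list)
    block_tables: dict = field(default_factory=dict)  # seq_id -> [block ids]
    lengths: dict = field(default_factory=dict)       # seq_id -> tokens stored

    def __post_init__(self):
        self.free_blocks = list(range(self.num_blocks))

    def append_token(self, seq_id: str) -> tuple:
        """Reserve a slot for one new token; return (physical_block, offset)."""
        n = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if n % BLOCK_SIZE == 0:  # no block yet, or the last block is full
            if not self.free_blocks:
                raise MemoryError("no free KV blocks")
            table.append(self.free_blocks.pop(0))
        self.lengths[seq_id] = n + 1
        return table[-1], n % BLOCK_SIZE

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the shared pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)
```

Because blocks are allocated on demand, a sequence wastes at most one partially filled block, rather than reserving memory for its maximum possible length up front.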

Features

vLLM supports many popular open-source model architectures, primarily PyTorch-based models in Hugging Face format, so users can choose flexibly according to their needs. It also has a high-performance inference engine that supports both online and batch inference, responds quickly to large numbers of concurrent requests, and continues to perform well under heavy load.

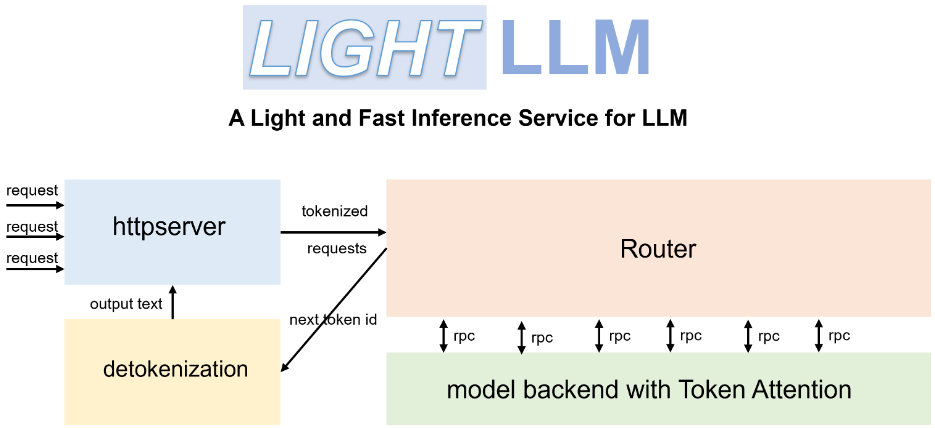

Introduction to LightLLM

LightLLM is a lightweight, high-performance, Python-based LLM inference and serving framework. It draws on the strengths of open-source implementations such as FasterTransformer, TGI, vLLM, and FlashAttention, and offers users a new approach to LLM serving.

Features

LightLLM's distinctive three-process architecture decouples the three major steps of tokenization, model inference, and detokenization, and runs them in parallel through an asynchronous collaboration mechanism. This design significantly improves GPU utilization and reduces the latency caused by data transfer, which improves inference efficiency. LightLLM also supports Nopad (no-padding) operation, which handles requests of very different lengths more efficiently and avoids wasted padding, thus improving resource utilization.
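The decoupling of tokenize, inference, and detokenize stages can be sketched as a small producer/consumer pipeline. This is only an analogy under stated assumptions: it uses asyncio tasks and queues in a single process rather than LightLLM's separate processes, and the "tokenizer" (whitespace split) and "model" (token reversal) are stand-ins invented for the example.

```python
import asyncio

STOP = None  # sentinel that shuts each stage down in turn


async def tokenize_stage(prompts, out_q):
    for p in prompts:
        await out_q.put(p.split())      # stand-in tokenizer: whitespace split
    await out_q.put(STOP)


async def inference_stage(in_q, out_q):
    while (tokens := await in_q.get()) is not STOP:
        await asyncio.sleep(0)          # yield control, as a real model call would
        await out_q.put(tokens[::-1])   # stand-in "model": reverse the tokens
    await out_q.put(STOP)


async def detokenize_stage(in_q, results):
    while (tokens := await in_q.get()) is not STOP:
        results.append(" ".join(tokens))


async def run_pipeline(prompts):
    """Run the three stages concurrently, connected by two queues."""
    q1, q2, results = asyncio.Queue(), asyncio.Queue(), []
    await asyncio.gather(
        tokenize_stage(prompts, q1),
        inference_stage(q1, q2),
        detokenize_stage(q2, results),
    )
    return results


# Usage: asyncio.run(run_pipeline(["hello world", "a b c"]))
```

Each stage only waits on its own queue, so tokenizing the next request can overlap with inference on the current one.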

Introduction to llama.cpp

llama.cpp is an LLM inference engine written in C and C++. It is heavily optimized for Apple silicon and can efficiently run Meta's Llama 2 models on Apple devices.

Features

The main goal of llama.cpp is to implement LLM inference on a wide range of hardware platforms, providing top performance with minimal configuration. To further enhance performance, llama.cpp provides a variety of quantization options such as 1.5-bit, 2-bit, 3-bit, 4-bit, 5-bit, 6-bit, and 8-bit integer quantization, which are designed to speed up inference and reduce memory footprint. Furthermore, llama.cpp supports mixed CPU/GPU inference, which further enhances inference flexibility and efficiency.
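To make the trade-off behind integer quantization concrete, here is a minimal sketch of symmetric block quantization plus a weights-only size estimate. It is a simplified illustration, not llama.cpp's actual quantization formats; the function names and the 7-billion-parameter figure in the usage comment are assumptions for the example.

```python
def quantize_block(values, bits=4):
    """Quantize one block of floats to signed integers sharing a single scale."""
    qmax = 2 ** (bits - 1) - 1                      # 7 for 4-bit
    amax = max(abs(v) for v in values)
    scale = amax / qmax if amax else 1.0            # avoid dividing by zero
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, q


def dequantize_block(scale, q):
    """Recover approximate floats from the integers and the shared scale."""
    return [scale * x for x in q]


def approx_model_size_gb(n_params, bits_per_weight):
    """Weights-only estimate; ignores per-block scales and runtime buffers."""
    return n_params * bits_per_weight / 8 / 1e9


# Usage, for a hypothetical 7-billion-parameter model:
#   approx_model_size_gb(7e9, 16)  # 16-bit weights
#   approx_model_size_gb(7e9, 4)   # 4-bit weights, a quarter of the above
```

Fewer bits shrink the memory footprint proportionally, at the cost of a rounding error of up to half a quantization step per weight.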

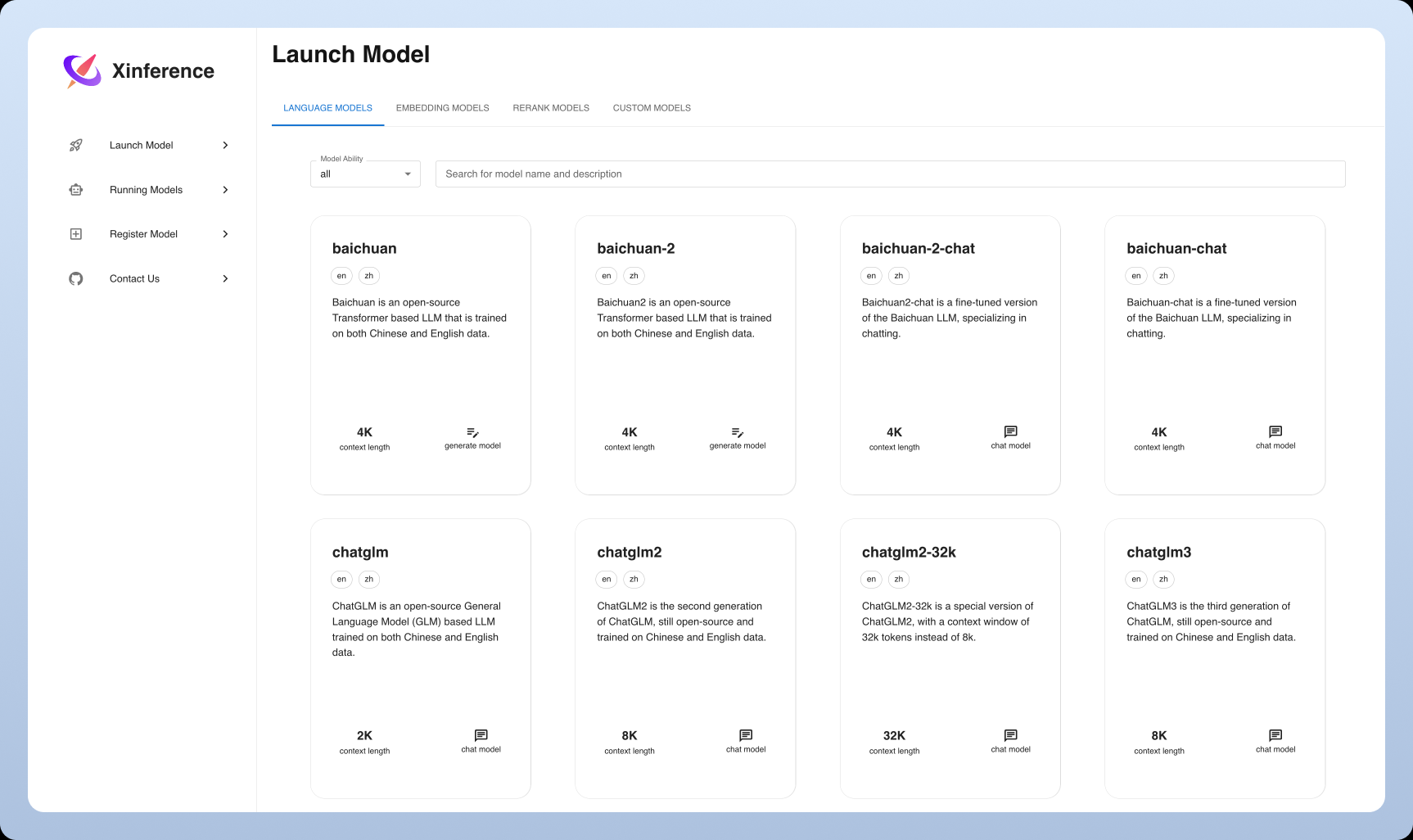

Introduction to Xinference

Xinference is an emerging general-purpose inference framework that supports not only LLMs but also models in other modalities, including image and speech. It aims to lower the barrier to deploying and using models by providing a unified interface and a friendly user experience.

Features

The highlight of Xinference is its extensive support for multimodal models, which lets users deploy and try different types of models on the same platform. Xinference also emphasizes ease of use, offering several ways to interact with it, such as a Web UI and a Python client, and supporting unified management and flexible scaling of models.

Tool Comparison and Analysis

Installation and Deployment

Ollama. Installation is extremely easy: Ollama provides a clear and concise installation guide and supports the major operating systems. Users can get a large language model up and running with just a few simple command-line operations.

vLLM. In contrast, installing vLLM is slightly more complicated, requiring the user to configure a Python environment and install a set of dependency libraries beforehand. Once deployed, however, vLLM's efficient inference delivers significant gains, especially in performance-critical scenarios.

LightLLM. LightLLM is relatively easy to install, and its detailed installation and configuration guides help users get started quickly. Users can choose the appropriate model format for deployment according to their needs.

llama.cpp. Deploying llama.cpp requires a certain level of technical knowledge, and the user needs to configure a C++ development environment in advance. For technology enthusiasts and developers, however, once it is configured, llama.cpp's high-performance inference engine offers an excellent experience, with deep control over every aspect of model inference.

Xinference. Xinference is also relatively easy to install and deploy, providing Docker images and Python packages so users can set up their environments quickly. It also provides a comprehensive Web UI, which lowers the barrier to entry.

Model Support and Compatibility

Ollama. In terms of model support, Ollama shows good openness, supporting a variety of open source models including Llama 2, Code Llama, etc. and allowing users to upload customized models. In addition, Ollama provides a library of pre-built models, which makes it easy for users to quickly get started with various models.

vLLM. vLLM covers a broad range of popular open-source models, primarily PyTorch-based models in Hugging Face format, giving users a great deal of flexibility in model selection. It is worth mentioning that vLLM also supports distributed inference, which makes full use of the compute power of multiple GPUs by running a model across them in parallel, further improving inference efficiency.

LightLLM. LightLLM also has excellent model format compatibility, supporting a variety of commonly used model formats. It also provides rich APIs and tools that make in-depth customization easier and meet the needs of specialized application scenarios.

llama.cpp. llama.cpp focuses on highly optimized inference for Llama models, but it also supports other models. Its efficient inference engine allows llama.cpp to maintain excellent performance when dealing with large datasets.

Xinference. One of the highlights of Xinference is its model support, which not only supports various LLMs, but also supports image generation models such as Stable Diffusion, as well as Whisper and other speech models, demonstrating strong multimodal model compatibility and providing users with a broader application space.

Performance and Optimization

Ollama. Ollama is known for its clean API and efficient inference, but it may hit performance bottlenecks when handling a large number of concurrent requests. It remains a good choice for users who value ease of use and fast deployment.

vLLM. vLLM is built for maximum performance. Techniques such as PagedAttention significantly improve its LLM inference performance, especially when handling a large number of concurrent requests, so it can serve application scenarios with stringent service performance requirements.
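One reason continuous batching helps under concurrent load can be shown with a toy step counter. The sketch compares a static scheme, where each batch runs until its longest request finishes, with a continuous scheme, where a finished request frees its slot immediately. It is a simplified model under stated assumptions (one token per request per step, every request needs at least one token), not vLLM's scheduler, and the function names are hypothetical.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Each batch runs until its longest request finishes."""
    return sum(max(lengths[i:i + batch_size])
               for i in range(0, len(lengths), batch_size))


def continuous_batching_steps(lengths, batch_size):
    """A finished request frees its slot for a waiting one at the next step."""
    waiting, running, steps = deque(lengths), [], 0
    while waiting or running:
        # Fill any free slots from the waiting queue.
        while waiting and len(running) < batch_size:
            running.append(waiting.popleft())
        # One decode step: each running request emits a token;
        # requests on their last token (r == 1) leave the batch.
        running = [r - 1 for r in running if r > 1]
        steps += 1
    return steps


# Usage: request lengths are the number of tokens each request must generate.
#   static_batching_steps([2, 8, 2, 2], batch_size=2)
#   continuous_batching_steps([2, 8, 2, 2], batch_size=2)
```

In the continuous scheme, short requests no longer wait for the longest member of their batch, so the same work finishes in fewer decode steps.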

LightLLM. LightLLM also puts a lot of effort into performance optimization. Thanks to its three-process architecture and asynchronous collaboration mechanism, LightLLM improves GPU utilization and inference speed. Its support for Nopad (no-padding) operation further improves resource utilization and contributes to overall performance.
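The saving from avoiding padding can be estimated with simple arithmetic. The sketch below counts token slots for a padded batch versus a packed one and shows one way to pack requests back to back with recorded start offsets. The function names and the example lengths are assumptions for illustration; this is not LightLLM's actual data layout.

```python
def padded_slots(lengths):
    """Token slots used when every request is padded to the longest one."""
    return len(lengths) * max(lengths)


def nopad_slots(lengths):
    """Token slots used when requests are packed back to back."""
    return sum(lengths)


def pack(requests):
    """Concatenate token lists and record where each request starts."""
    flat, starts = [], []
    for tokens in requests:
        starts.append(len(flat))
        flat.extend(tokens)
    return flat, starts


# Usage with hypothetical request lengths of 5, 120, 30 and 1000 tokens:
#   padded_slots([5, 120, 30, 1000])  # 4 * 1000 slots
#   nopad_slots([5, 120, 30, 1000])   # only the tokens that exist
```

The larger the spread in request lengths, the more of a padded batch is wasted on filler tokens.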

llama.cpp. llama.cpp also excels in terms of performance. Besides its efficient inference engine, it offers a variety of quantization options that let users strike a balance between inference speed and memory footprint. llama.cpp also supports mixed CPU/GPU inference, which further improves flexibility and efficiency and lets users choose the best configuration for their actual hardware.

Xinference. Xinference is also optimized for performance, supporting techniques such as quantization and pruning of models to improve inference efficiency and reduce resource consumption. In addition, Xinference is also being iteratively optimized to improve the performance of multimodal model inference.

Summary and outlook

Tools similar to Ollama each have their own features and advantages, and users can choose the right framework according to their application scenarios and actual needs. As artificial intelligence and large language model technology continue to develop, these tools will keep improving and provide users with more efficient and convenient solutions. Looking ahead, they will play a greater role in natural language processing, intelligent customer service, text generation, and other areas, further promoting the development and application of AI and ultimately helping to realize the vision of artificial intelligence for all.

Recommendations and Suggestions

Ollama may be a good choice for novices and individual users. It provides a simple API and a friendly user interface, so users can interact with a model directly without additional development. Ollama also supports a variety of open-source models, which makes it easy to get started, try out different application scenarios, and quickly experience the fun of deploying LLMs locally.

For business users who need to handle highly concurrent requests, vLLM may be a better choice. Its efficient inference and distributed inference support can meet the demands of highly concurrent scenarios and provide a more stable and reliable solution that helps ensure business stability and continuity.

LightLLM and llama.cpp are better suited to users with some technical background who want to do custom development and deep optimization. These two tools give developers greater flexibility and lower-level control, allowing them to build more personalized, high-performance LLM applications according to their own requirements.

Xinference is also an option for those who want to experiment with multimodal models and are looking for an out-of-the-box experience. Xinference lowers the barriers to multimodal modeling, allowing users to experience the power of a wide range of AI models on a single platform.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
