Orchestra: Building AI Agent Teams for Simpler, More Efficient Multi-Agent Development

Overview

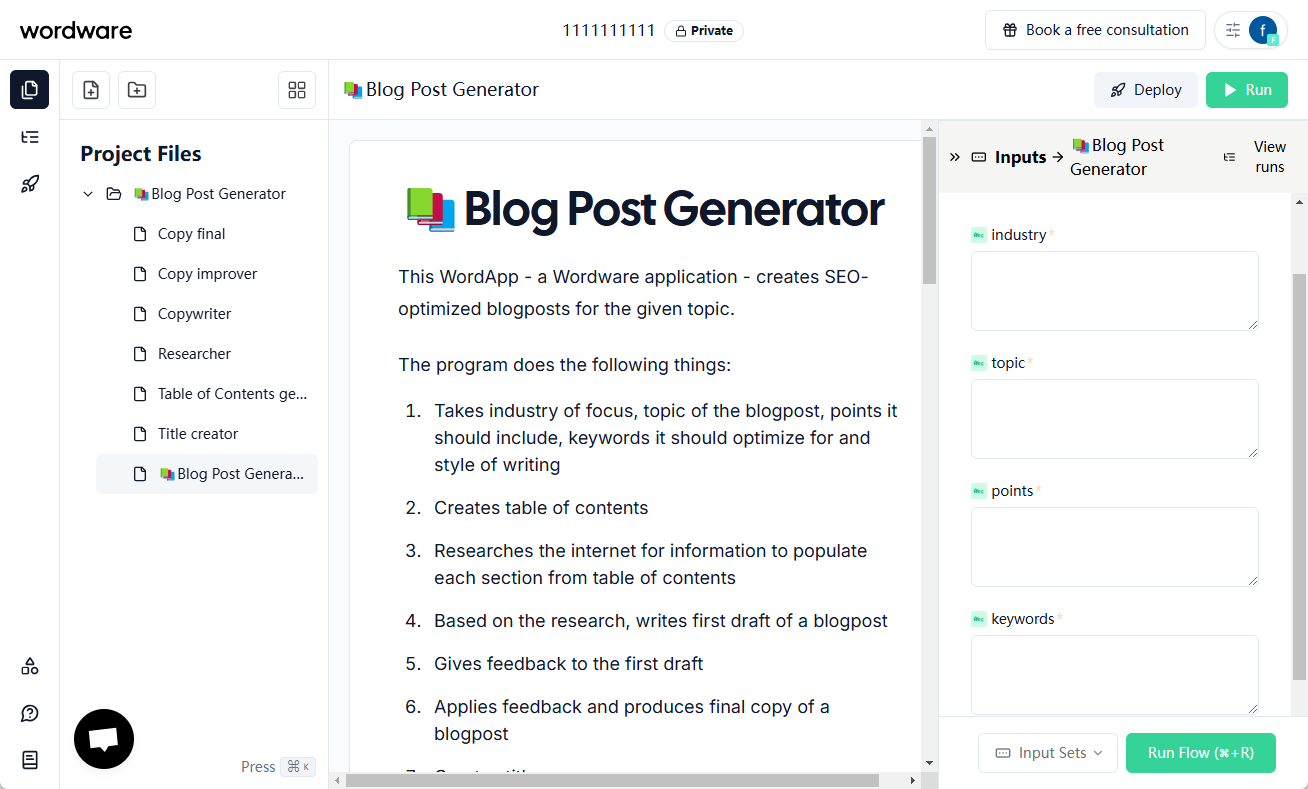

Orchestra is a lightweight Python framework for building multi-agent systems on top of large language models (LLMs). Its agent orchestration approach lets multiple AI agents work together the way the sections of a symphony orchestra do. The modular architecture lets developers create, extend, and integrate different kinds of agents so that complex tasks can be decomposed and handled collaboratively. Orchestra supports GPT-4, Claude 3, and other mainstream LLMs, and ships with built-in tools for web crawling, file processing, GitHub interaction, and more. Its notable features are concise tool definitions, real-time streaming output, graceful error handling, and a task execution flow based on structured thinking patterns. As the more advanced successor to TaskflowAI, Orchestra aims to give developers a more powerful and flexible framework for building AI applications.

Feature List

- Agent orchestration: Agents can act as both executors and conductors, enabling dynamic task decomposition and coordination between agents.

- Modular architecture: An extensible, component-based design that makes it easy to build and integrate custom functionality.

- Multi-model support: Integrates with OpenAI, Anthropic, OpenRouter, Ollama, Groq, and other LLM providers.

- Built-in toolset: Includes web tools, file tools, GitHub tools, calculation tools, and other utilities.

- Real-time streaming: Supports synchronous and asynchronous streaming of real-time output.

- Error handling: Built-in failure handling and configurable fallback chains.

- Structured task execution: Reduces the LLM's cognitive load by executing tasks in phases.

- Simple tool definitions: Tools are defined with docstrings, with no need for complex JSON schemas.

Usage Guide

1. Installation

Orchestra installs with a single pip command:

    pip install mainframe-orchestra
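
To confirm that the install succeeded, one option is to ask Python's packaging metadata for the installed version. This is a minimal sketch using only the standard library; the helper name `installed_version` is made up for illustration, and the distribution name is the one from the pip command above.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(distribution)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("mainframe-orchestra")
    if found is None:
        print("mainframe-orchestra is not installed in this environment")
    else:
        print(f"mainframe-orchestra {found} is installed")
```

If the script reports that the package is missing, the usual cause is that pip installed it into a different interpreter or virtual environment than the one running the check.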

2. Basic usage

2.1 Creating a single agent

    from mainframe_orchestra import Agent, Task, OpenaiModels, WebTools

    # Create a research assistant agent
    research_agent = Agent(
        role="Research Assistant",
        goal="Answer user queries",
        llm=OpenaiModels.gpt_4o,
        tools={WebTools.exa_search}
    )

    # Define the research task
    def research_task(topic):
        return Task.create(
            agent=research_agent,
            instruction=f"Use the search tool to research {topic} and explain it in plain language"
        )

2.2 Building multi-agent teams

Orchestra supports teams of specialized agents that work together. A financial analysis team, for example, might consist of:

- Market analyst - responsible for market microstructure analysis

- Fundamental analyst - responsible for analyzing company financials

- Technical analyst - responsible for price chart analysis

- Sentiment analyst - responsible for market sentiment analysis

- Conductor agent - responsible for coordinating the other agents
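
The division of labor in this team can be sketched in plain Python. This is not Orchestra's API: the `Specialist` and `Conductor` classes and the `make_stub` helper are hypothetical stand-ins, and each stub returns a canned string where a real agent would call an LLM and its tools. The sketch only shows the pattern of one coordinating agent delegating a request to specialists and collecting their findings.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Specialist:
    """Hypothetical specialist: a role name plus a function that does the work."""
    role: str
    analyze: Callable[[str], str]


@dataclass
class Conductor:
    """Hypothetical coordinator that delegates one request to every specialist."""
    team: List[Specialist] = field(default_factory=list)

    def run(self, ticker: str) -> Dict[str, str]:
        # Ask each specialist in turn and collect the findings by role.
        return {member.role: member.analyze(ticker) for member in self.team}


def make_stub(focus: str) -> Callable[[str], str]:
    """Build a stub analysis function; a real agent would call an LLM here."""
    def analyze(ticker: str) -> str:
        return f"{ticker}: {focus} reviewed"
    return analyze


conductor = Conductor([
    Specialist("Market Analyst", make_stub("market microstructure")),
    Specialist("Fundamental Analyst", make_stub("company financials")),
    Specialist("Technical Analyst", make_stub("price charts")),
    Specialist("Sentiment Analyst", make_stub("market sentiment")),
])

report = conductor.run("ACME")
for role, finding in report.items():
    print(f"{role}: {finding}")
```

Keeping each specialist behind the same one-argument interface is what lets the coordinator stay small: adding a fifth analyst means adding one entry to the team list, not changing the delegation logic.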

3. Advanced features

3.1 Tool integration

Orchestra ships with a variety of built-in tools:

- WebTools: web crawling, search, weather API, and more

- FileTools: operations on CSV, JSON, XML, and other files

- GitHubTools: tools for interacting with code repositories

- CalculatorTools: date and time calculations

- WikipediaTools: Wikipedia information retrieval

- AmadeusTools: flight information search

3.2 Custom tool development

You can define your own tools using nothing more than a docstring:

    def custom_tool(param1: str, param2: int) -> str:
        """Tool description.

        Args:
            param1: Description of parameter 1
            param2: Description of parameter 2

        Returns:
            Description of the return value
        """
        # Tool implementation goes here
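
To see why a docstring can stand in for a JSON schema, note that Python already exposes a function's name, parameter types, and documentation at runtime. The sketch below uses the standard `inspect` and `typing` modules to collect that information into a simple description. The `describe_tool` helper and the `word_count` example tool are hypothetical, and this illustrates the general idea rather than how Orchestra reads tools internally.

```python
import inspect
from typing import Any, Callable, Dict, get_type_hints


def word_count(text: str, min_length: int) -> str:
    """Count the words in a text that have at least a minimum length.

    Args:
        text: The text to scan.
        min_length: Minimum number of characters a word must have to be counted.

    Returns:
        A sentence reporting the count.
    """
    count = sum(1 for word in text.split() if len(word) >= min_length)
    return f"{count} words with at least {min_length} characters"


def describe_tool(func: Callable[..., Any]) -> Dict[str, Any]:
    """Collect a function's name, parameter types, and docstring in one dict."""
    hints = get_type_hints(func)
    return_type = hints.pop("return", None)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        # Fall back to str() for annotations that have no __name__.
        "parameters": {
            name: getattr(hint, "__name__", str(hint))
            for name, hint in hints.items()
        },
        "returns": getattr(return_type, "__name__", None),
    }


description = describe_tool(word_count)
print(description["name"], description["parameters"])
print(word_count("the quick brown fox", 4))
```

Because the description is derived from the function itself, the type hints and docstring are the single source of truth; there is no separate schema file to keep in sync.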

3.3 Error handling and flow control

Orchestra provides robust error handling mechanisms:

- Configurable fallback chains for handling LLM call failures

- Real-time monitoring of agent status

- Timeout control for task execution

- Result validation and retry mechanisms
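
The fallback, validation, and retry ideas in this list can be illustrated without any framework. The sketch below tries a list of model callables in order, retries each a fixed number of times, and accepts only a result that passes a validation check. Every name here is hypothetical (`call_with_fallback`, `flaky_primary`, `steady_backup`); it shows the fallback-chain pattern in general, not Orchestra's configuration syntax.

```python
from typing import Callable, List, Sequence


def call_with_fallback(
    models: Sequence[Callable[[str], str]],
    prompt: str,
    is_valid: Callable[[str], bool],
    retries_per_model: int = 2,
) -> str:
    """Try each model in order; retry on errors or invalid output, then move on."""
    errors: List[str] = []
    for model in models:
        for attempt in range(1, retries_per_model + 1):
            try:
                result = model(prompt)
            except Exception as exc:  # a real system would catch narrower error types
                errors.append(f"{model.__name__} attempt {attempt}: {exc}")
                continue
            if is_valid(result):
                return result
            errors.append(f"{model.__name__} attempt {attempt}: invalid output")
    raise RuntimeError("all models failed: " + "; ".join(errors))


def flaky_primary(prompt: str) -> str:
    # Stand-in for a provider that is currently unavailable.
    raise ConnectionError("primary model unavailable")


def steady_backup(prompt: str) -> str:
    # Stand-in for a fallback provider that answers normally.
    return f"answer to: {prompt}"


answer = call_with_fallback(
    [flaky_primary, steady_backup],
    "summarize the report",
    is_valid=lambda text: bool(text.strip()),
)
print(answer)
```

When every model in the chain fails, the raised error carries the per-attempt history, which makes it easier to tell an outage apart from output that repeatedly failed validation.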

4. Best practices

- Divide responsibilities clearly among agents to avoid overlap

- Decompose tasks in a structured way

- Use the built-in tools to improve efficiency

- Implement the error handling your application needs

- Keep code modular and maintainable

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
