OpenManus New WebUI and Domestic Search Engine Configuration Guide

OpenManus has been updated frequently of late. In addition to supporting local Ollama and Web API providers, it has added support for domestic (Chinese) search engines and several WebUI adaptations. This article introduces a few community-contributed OpenManus WebUIs and shows how to configure domestic search engines.

Introduction to OpenManus WebUI

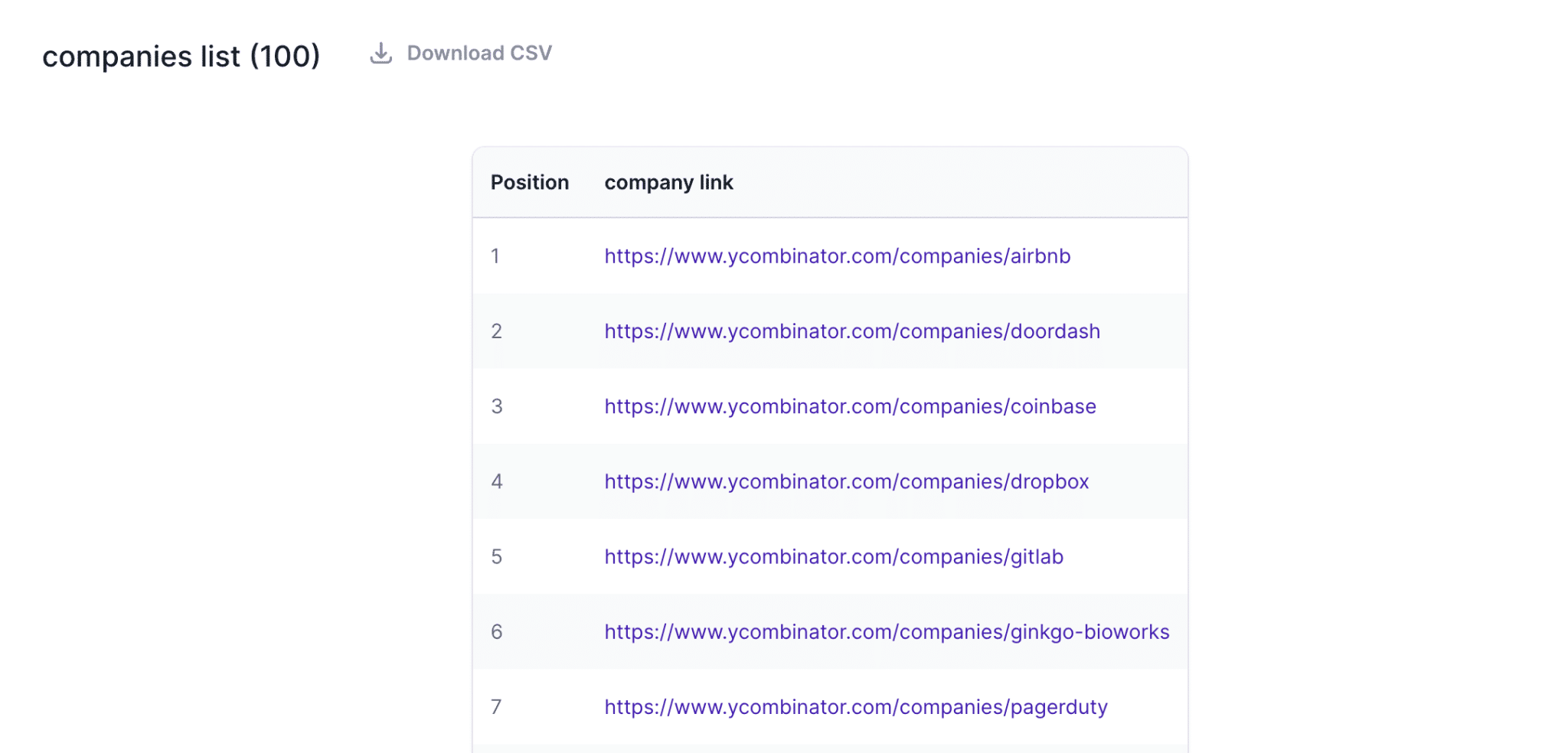

There are a number of WebUI projects in the community that are adapted to OpenManus, a few of which are listed below for reference:

Each of these WebUI programs has its own characteristics, so you can choose to use them according to your own preferences.

The official OpenManus website has also been updated (https://openmanus.github.io/). However, if you follow the website link on the right side of the GitHub repository and then click the documentation link on the site, you land back on the GitHub repository, which is a bit confusing.

Configuration interface update

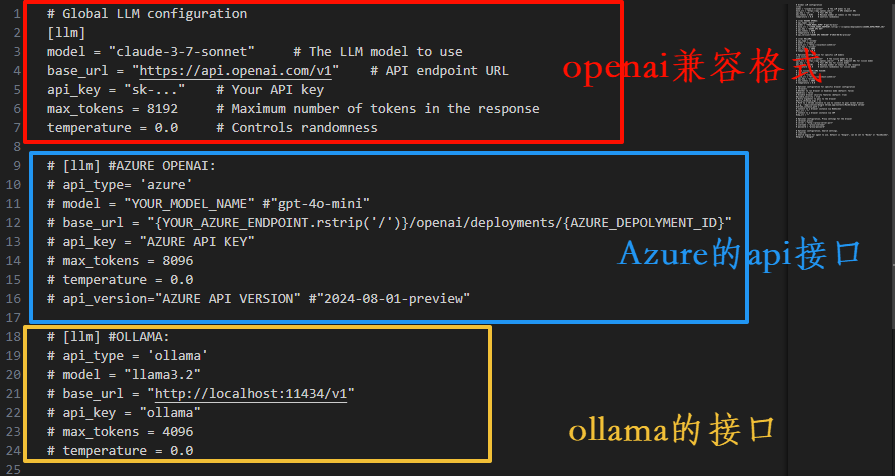

The new version of the configuration file includes more comments. Configuration items such as the UI, URL, and search engine are explained more clearly, which makes it friendlier to novice users.

The vision model is configured in a similar way and is not covered again here.

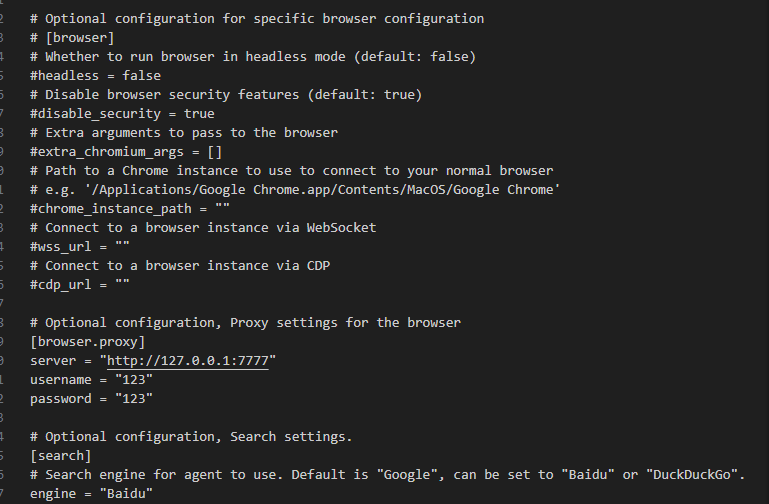

Search engine and browser configuration

OpenManus now supports configuration of search engines and browsers. Users can choose according to their needs. If you are not familiar with the English options, you can use a translation tool to aid in your understanding.

Using the WebUI

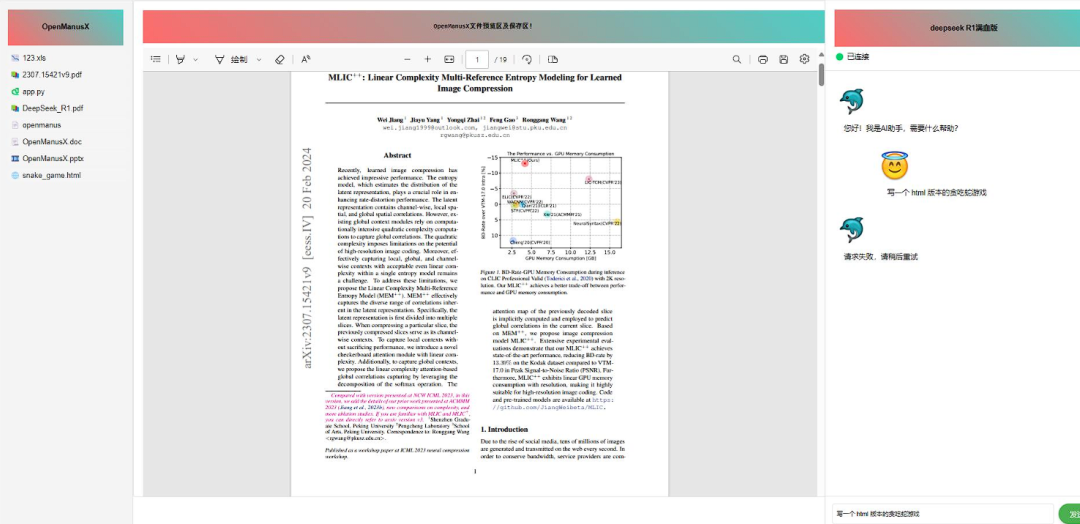

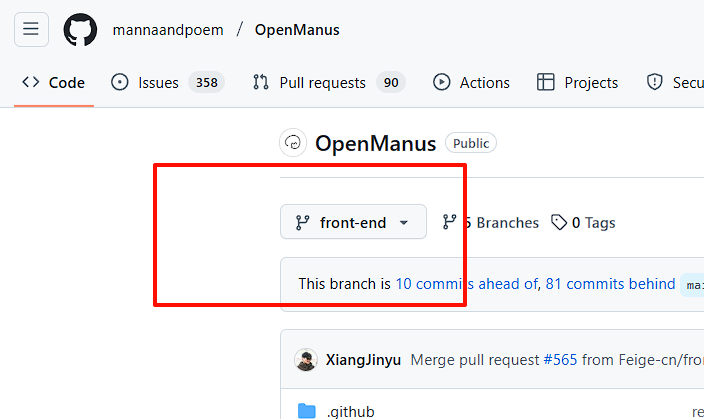

To use the WebUI, you need to switch to the repository's front-end branch.

Environment Configuration

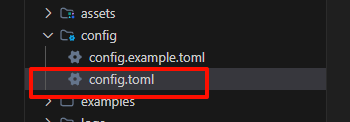

Before starting, you need to set up the configuration. The example here uses a local Ollama instance. First, make a copy of the config file:

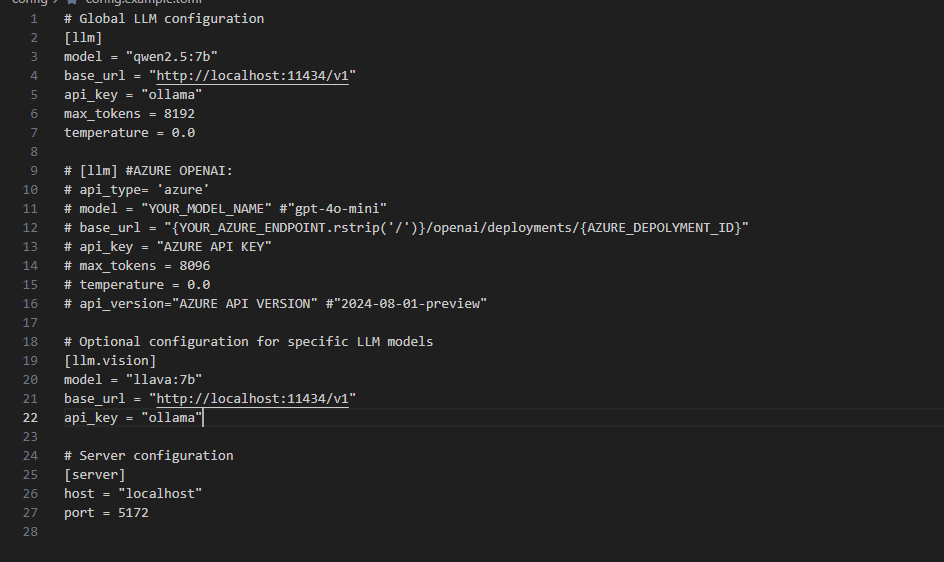

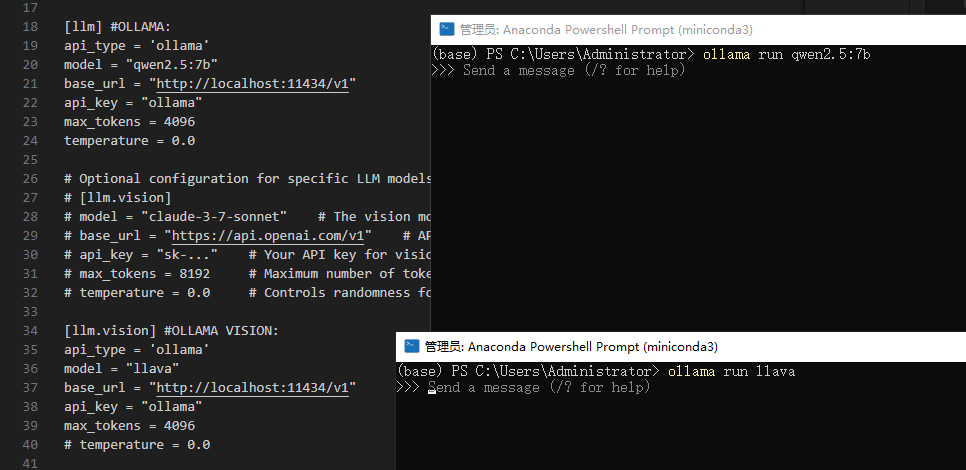

Then, make your changes in the copied file. Delete the original API configuration and point it at the local Ollama port instead.

Note: api_key cannot be empty. Any arbitrary value will do.

When selecting a model, choose one that supports function (tool) calling; the Ollama website lists the models that support tool calls.

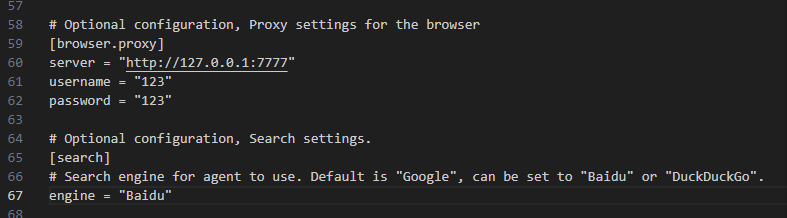

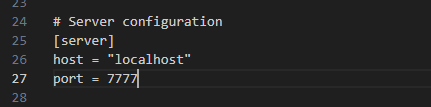

Set the port and the search engine (the UI branch is not yet fully adapted to domestic search engines). To use Baidu, for example, enter Baidu.

Command-line (terminal) version configuration:

UI version configuration:

Save the configuration file.

Launch Ollama

Start Ollama from the command line. This example uses two models: qwen2.5:7b and llava.

Installing dependencies

Install the newly added dependency packages, such as fastapi, duckduckgo and baidusearch. Run the following in the project root directory:

pip install -r requirements.txt
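After the install finishes, a quick check like the one below can confirm that the new packages are importable in the active environment. The import names in `ASSUMED_MODULES` are assumptions (an import name can differ from the name used with pip), so adjust them to match the entries in requirements.txt.

```python
import importlib.util

# Import names assumed for the packages mentioned above; the import name can
# differ from the name used on the pip command line.
ASSUMED_MODULES = ["fastapi", "duckduckgo_search", "baidusearch"]


def check_modules(names: list[str]) -> dict[str, bool]:
    """Map each top-level module name to whether it can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules(ASSUMED_MODULES).items():
        print(f"{name}: {'ok' if found else 'MISSING'}")
```

`find_spec` only locates a module without importing it, so the check stays fast and has no side effects.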

Starting the project

After the installation is complete, start the project.

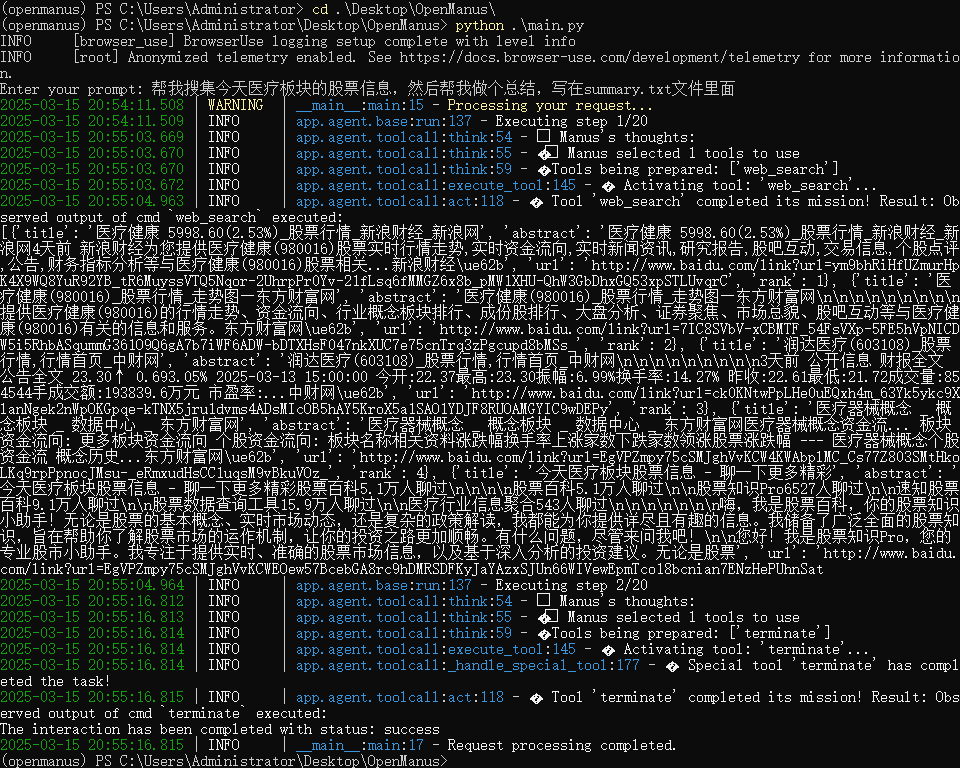

The command-line (terminal) version can be started with either run_flow.py or main.py:

python run_flow.py

# or

python main.py

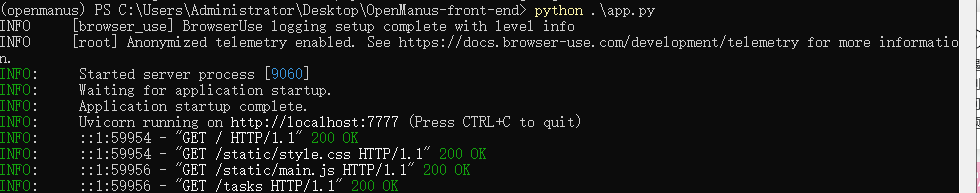

The UI version is started using the following command:

python app.py

Starting a task

The command-line (terminal) version looks like this when running:

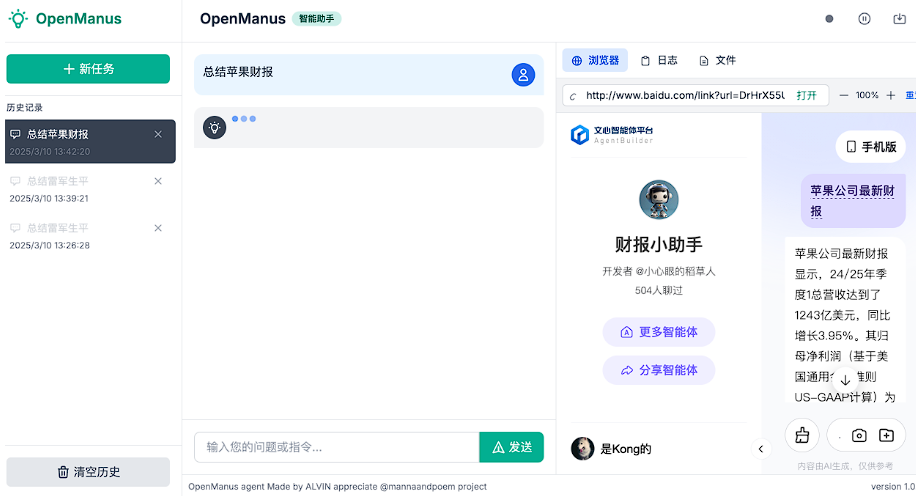

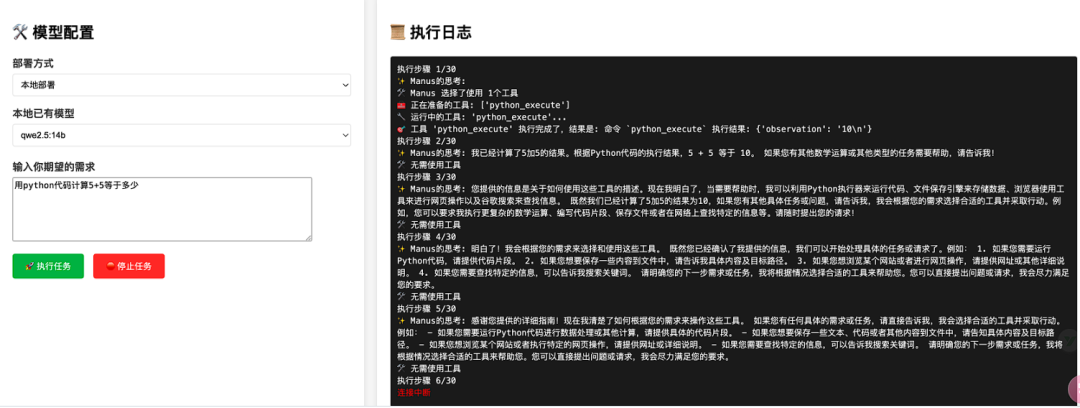

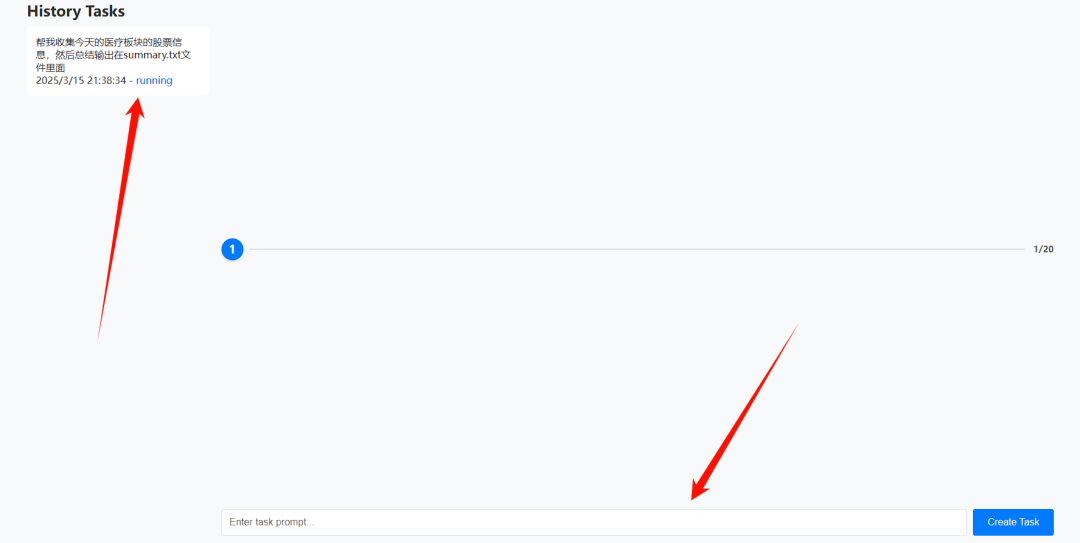

When the UI version is launched, it automatically opens the web page in the browser.

Enter the task in the input box and click "Create Task" to create the task.

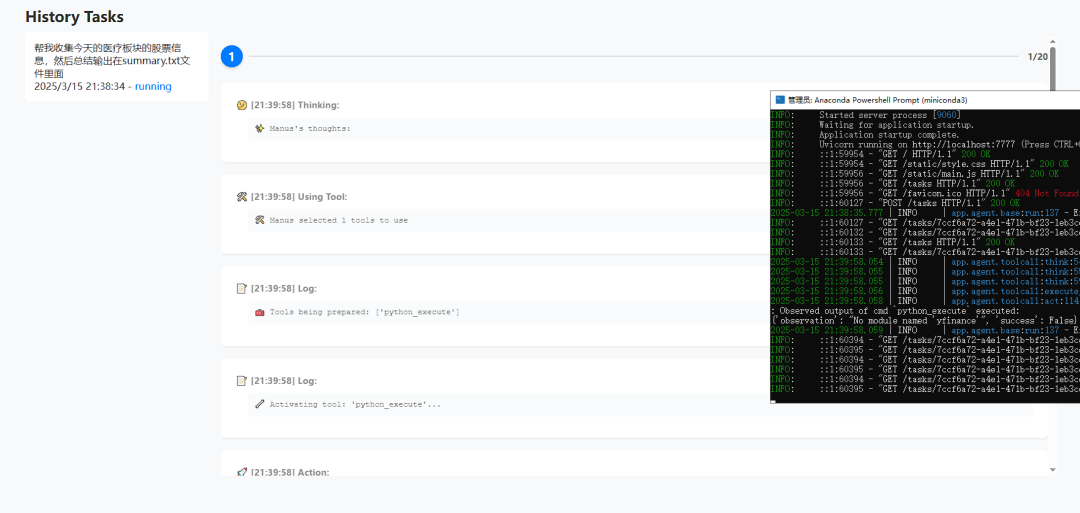

The progress of a task can be viewed in both the back-end console and the front-end interface.

WeChat search support (experimental)

A developer has submitted a Pull Request that adds WeChat search: https://github.com/mannaandpoem/OpenManus/pull/483

This feature is built on Sogou WeChat search and web-crawling techniques. Because the OpenManus search interface has since been reworked, the paths used in the original Pull Request may need to be reconfigured. Interested users can try adapting it themselves.

© Copyright notes

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
