OpenAOE: Large Model Group Chat Framework: Chatting with Multiple Large Language Models Simultaneously

General Introduction

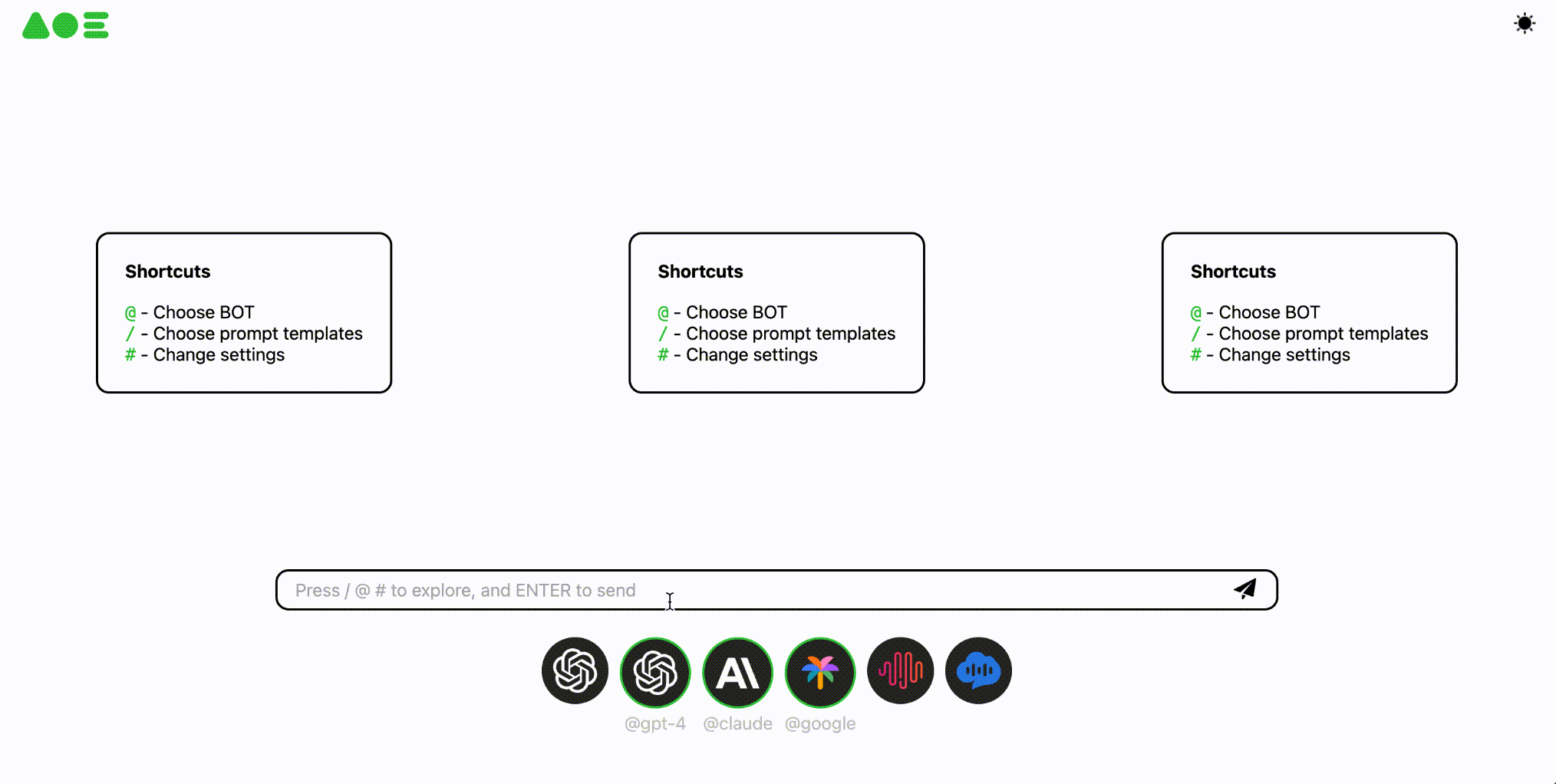

OpenAOE is an open-source LLM group chat framework. It fills a gap among existing chat tools, few of which can query several models in parallel: with OpenAOE, users can talk to multiple Large Language Models (LLMs) at the same time and receive their outputs side by side. The framework supports several commercial and open-source LLM APIs, such as GPT-4, Google PaLM, MiniMax and Claude, and lets users add access to other LLM APIs. OpenAOE provides both a back-end API and a Web-UI, making it suitable for LLM researchers, evaluators, engineers, developers and non-specialists alike.

Function List

- Multi-model parallel response: Supports conversations with multiple large language models at the same time to obtain parallel output.

- Commercial and open-source model access: Supports GPT-4, Google PaLM, MiniMax, Claude and other commercial and open-source LLM APIs.

- Custom model access: Lets users add access to other LLM APIs.

- Back-end API and Web-UI: Provides both a back-end API and a Web-UI to suit different users.

- Multiple deployment options: OpenAOE can be run via pip, Docker, or from source.
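OpenAOE's own way of adding models is handled through its configuration file, and its internal plugin interface is not covered in this article. As a rough illustration of the general idea behind custom model access, the Python sketch below keeps a registry of adapter functions behind a common prompt-in, reply-out interface. `ModelRegistry`, `register`, `ask` and the stub models are hypothetical names, not OpenAOE APIs.

```python
from typing import Callable, Dict, List

# Hypothetical adapter type: takes a prompt, returns the model's reply.
ModelFn = Callable[[str], str]


class ModelRegistry:
    """Illustrative registry mapping model names to adapter functions.

    This is NOT OpenAOE's actual plugin API; it only sketches the idea of
    plugging extra LLM backends in behind one common interface.
    """

    def __init__(self) -> None:
        self._models: Dict[str, ModelFn] = {}

    def register(self, name: str, fn: ModelFn) -> None:
        # Refuse silent overwrites so two backends cannot share a name.
        if name in self._models:
            raise ValueError(f"model {name!r} is already registered")
        self._models[name] = fn

    def names(self) -> List[str]:
        return sorted(self._models)

    def ask(self, name: str, prompt: str) -> str:
        try:
            fn = self._models[name]
        except KeyError:
            raise KeyError(f"unknown model {name!r}") from None
        return fn(prompt)


# Stub adapters standing in for real API clients (hypothetical).
def echo_model(prompt: str) -> str:
    return f"echo: {prompt}"


def upper_model(prompt: str) -> str:
    return prompt.upper()


registry = ModelRegistry()
registry.register("echo", echo_model)
registry.register("upper", upper_model)
print(registry.names())
print(registry.ask("upper", "hi"))
```

Keeping every backend behind the same signature is what lets a front end treat a newly added model exactly like the built-in ones.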

How to Use

Installation process

Installation via pip

- Make sure Python version >= 3.9.

- Run the following command to install OpenAOE:

pip install -U openaoe

- Start OpenAOE using the configuration file:

openaoe -f /path/to/your/config-template.yaml
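Because the pip route requires Python 3.9 or newer, a quick pre-flight check can save a confusing install failure. The following is a small illustrative helper, not part of OpenAOE; `python_ok` and `MIN_VERSION` are hypothetical names.

```python
import sys

MIN_VERSION = (3, 9)  # minimum Python version stated in the installation notes


def python_ok(version_info=sys.version_info, minimum=MIN_VERSION) -> bool:
    """Return True if the given (major, minor, ...) version meets the minimum."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    if python_ok():
        print("Python version OK, you can run: pip install -U openaoe")
    else:
        found = ".".join(map(str, sys.version_info[:3]))
        sys.exit(f"OpenAOE needs Python >= 3.9, found {found}")
```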

Installation via docker

- Get the OpenAOE docker image:

docker pull opensealion/openaoe:latest

Or build the Docker image yourself from source:

git clone https://github.com/internlm/OpenAOE

cd OpenAOE

docker build . -f docker/Dockerfile -t opensealion/openaoe:latest

- Start the docker container:

docker run -p 10099:10099 -v /path/to/your/config-template.yaml:/app/config.yaml --name OpenAOE opensealion/openaoe:latest
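After `docker run`, the container publishes port 10099 on the host. A minimal way to confirm that something is listening there is a plain TCP connection attempt; the sketch below assumes the default `127.0.0.1:10099` mapping from the command above, and `service_is_up` is a hypothetical helper. It only checks that the port accepts connections, not that the Web-UI or API responds correctly.

```python
import socket


def service_is_up(host: str = "127.0.0.1", port: int = 10099, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection raises OSError (e.g. ConnectionRefusedError, timeout) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    state = "up" if service_is_up() else "not reachable"
    print(f"Port 10099 is {state}")
```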

Guidelines for use

- Configuration file: Before starting OpenAOE, prepare the configuration file config-template.yaml, which controls both the back-end and front-end settings.

- Model API access: In the configuration file, users configure the model APIs to connect to, such as GPT-4, Google PaLM, MiniMax and Claude. Access to other LLM APIs can also be added.

- Starting the service: Once OpenAOE is running (see the installation steps above), use it through the Web-UI or integrate it into other tools via the back-end API.

- Parallel dialogue: In the Web-UI, the user enters a prompt and OpenAOE sends it to multiple LLMs at the same time, returning their responses in parallel.

- Analysis of results: Users can compare the responses of different models side by side for analysis and evaluation.
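The parallel dialogue and comparison steps above follow a common fan-out pattern: send one prompt to every model concurrently, then compare what comes back. The Python sketch below illustrates that pattern with `asyncio.gather` and a simple `difflib` similarity score. It is not OpenAOE's implementation; the stub models, `fan_out` and `compare` are hypothetical, and a real setup would call the configured model APIs instead.

```python
import asyncio
import difflib
from itertools import combinations
from typing import Awaitable, Callable, Dict, Tuple

# Hypothetical async model adapter: prompt in, reply out.
AsyncModel = Callable[[str], Awaitable[str]]


async def fake_model_a(prompt: str) -> str:
    await asyncio.sleep(0.2)  # simulate network latency
    return f"Paris is the capital of France. ({prompt})"


async def fake_model_b(prompt: str) -> str:
    await asyncio.sleep(0.1)
    return f"The capital of France is Paris. ({prompt})"


async def fan_out(prompt: str, models: Dict[str, AsyncModel]) -> Dict[str, str]:
    """Send the same prompt to every model concurrently and collect the replies.

    return_exceptions=True keeps one failing model from discarding the others.
    """
    names = list(models)
    results = await asyncio.gather(
        *(models[n](prompt) for n in names), return_exceptions=True
    )
    return {
        n: (f"error: {r!r}" if isinstance(r, BaseException) else r)
        for n, r in zip(names, results)
    }


def compare(replies: Dict[str, str]) -> Dict[Tuple[str, str], float]:
    """Pairwise text similarity (0.0 to 1.0) between model replies."""
    return {
        (a, b): round(difflib.SequenceMatcher(None, replies[a], replies[b]).ratio(), 3)
        for a, b in combinations(sorted(replies), 2)
    }


if __name__ == "__main__":
    models = {"model_a": fake_model_a, "model_b": fake_model_b}
    replies = asyncio.run(fan_out("capital of France?", models))
    for name, text in replies.items():
        print(f"[{name}] {text}")
    print(compare(replies))
```

Because the requests run concurrently, the total wait is roughly that of the slowest model rather than the sum of all of them. Character-level similarity is only a crude signal; a serious evaluation would use task-specific metrics.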

© Copyright notice

Article copyright © AI Sharing Circle. All rights reserved; please do not reproduce without permission.
