OpenAI Introduces Prompt Caching for GPT Models: Cached Input Tokens on GPT-4o-Series Models Cost Half as Much, and Latency Drops by Up to 80%

In large model applications, complex requests often come with high latency and cost, especially when requests contain a lot of repeated content. This "slow request" problem is most pronounced in scenarios with long prompts and high-frequency interactions. To address it, OpenAI recently introduced Prompt Caching. The feature avoids recomputation by caching the identical prefix of prompts the model has already processed, which substantially reduces response time and cost. For long prompts that contain static content in particular, prompt caching can noticeably improve efficiency and lower operating costs. This article explains how the feature works, which models support it, and how to structure prompts to raise the cache hit rate, helping developers get more out of large models.

What Is Prompt Caching?

Prompt Caching is a mechanism that reduces the latency and computational cost of processing long prompts with repetitive content. A "prompt" here is the input you send to the model. Instead of recomputing the first N input tokens of a prompt on every request, the system caches the results of earlier computation and reuses them for later requests that share the same prompt prefix. This speeds up processing, reduces latency, and saves cost.

In simple terms, prompt caching works as follows:

- Cache the first 1,024 tokens: The system checks whether the first 1,024 tokens of the prompt are identical to those of a previous request. If they are, the computation for those tokens is served from the cache instead of being repeated.

- Cache hit: When a new request matches a cached prompt prefix, this is called a "cache hit". The response then reports how many tokens were served from the cache, and reusing that computation reduces processing time and cost.

- Lower cost: In Azure OpenAI's Standard deployment type, cached tokens are billed at a discounted price; in Provisioned deployment types, cached tokens can receive a discount of up to 100%.

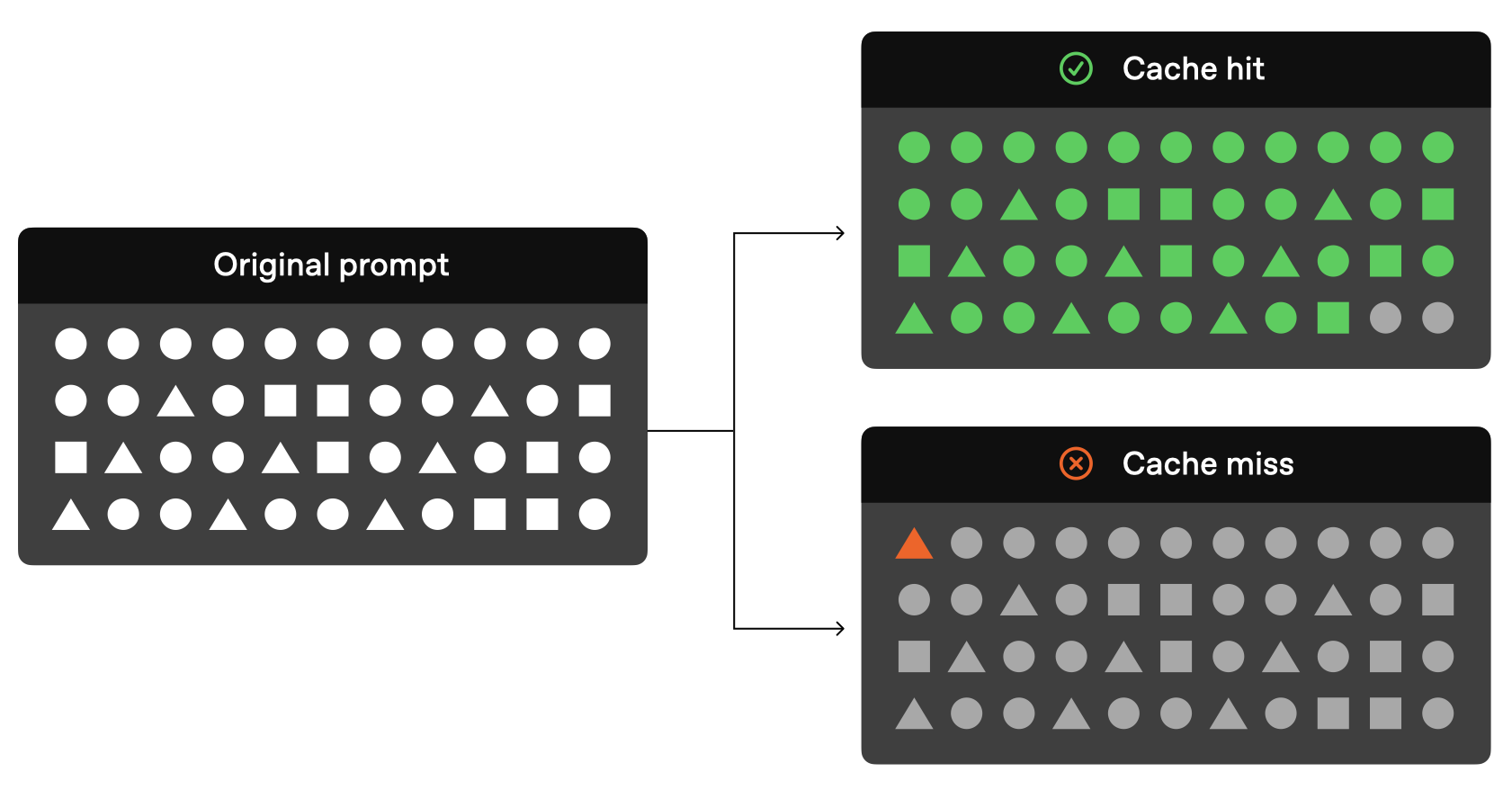

A very visual schematic of the hint cache is officially given:

As you can see from the above figure, if the previous tokens in your original request are the same as the previous request, that part of the request can be resolved not through the model, but just read directly through the cached results.

OpenAI currently supports prompt caching on the following models:

| Model | Text input cost (cached tokens) | Audio input cost (cached tokens) |
|---|---|---|
| gpt-4o (excluding gpt-4o-2024-05-13 and chatgpt-4o-latest) | 50% discount | N/A |
| gpt-4o-mini | 50% discount | N/A |
| gpt-4o-realtime-preview | 50% discount | 80% discount |
| o1-preview | 50% discount | N/A |
| o1-mini | 50% discount | N/A |

All models that currently support prompt caching (gpt-4o, gpt-4o-mini, gpt-4o-realtime-preview, and so on) bill cached text input tokens at a 50% discount, and gpt-4o-realtime-preview goes further with an 80% discount on cached audio input. Besides improving response times, this gives developers a cheaper, faster option, particularly for workloads with frequent repeated requests or large amounts of static content.
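To make the discount concrete, the sketch below estimates the input cost of one request from its prompt token count, its cached token count, and a per-million-token price. It assumes cached tokens are billed at the full rate minus the discount shown in the table (0.5 for text, 0.8 for realtime audio); the function name `input_cost` and the price used in the example are hypothetical placeholders, not official pricing.

```python
def input_cost(prompt_tokens: int, cached_tokens: int,
               price_per_million: float, cache_discount: float = 0.5) -> float:
    """Estimate the input-token cost of a single request (illustrative only).

    Uncached prompt tokens are billed at the full rate; cached tokens are
    billed at the full rate reduced by `cache_discount` (0.5 = 50% off).
    """
    if not 0 <= cached_tokens <= prompt_tokens:
        raise ValueError("cached_tokens must be between 0 and prompt_tokens")
    full_rate = price_per_million / 1_000_000  # price of a single token
    uncached_tokens = prompt_tokens - cached_tokens
    return (uncached_tokens * full_rate
            + cached_tokens * full_rate * (1 - cache_discount))


# Hypothetical price: 2.50 currency units per million input tokens.
# A 1,566-token prompt, first with no cache hit, then with 1,408 cached tokens.
no_cache = input_cost(1566, 0, price_per_million=2.50)
with_cache = input_cost(1566, 1408, price_per_million=2.50)
print(f"without cache: {no_cache:.6f}, with cache: {with_cache:.6f}")
```

Only the cached share of the prompt is discounted, so the overall saving per request is always smaller than the headline 50% unless the entire prompt is cached.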

How Prompt Caching Works

According to OpenAI's current documentation, a request can use the cache only if both of the following conditions are met:

- The prompt is at least 1,024 tokens long.

- The first 1,024 tokens of the prompt are identical to those of an earlier request.

Note also that 1,024 tokens is only the minimum cacheable length. Beyond that, cache hits grow in increments of 128 tokens, so the cached length takes values of 1,024, 1,152, 1,280, 1,408, and so on: a 1,024-token minimum plus 128-token steps.
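The 1,024-token minimum and 128-token increments can be written as a small rounding rule. The sketch below is our reading of that rule, not OpenAI code: it assumes the input is the number of leading tokens a request shares with an earlier cached prompt, and that anything beyond the last full 128-token step is not counted as cached. The constant and function names are hypothetical.

```python
MIN_CACHE_TOKENS = 1024  # minimum cacheable prefix length
CACHE_INCREMENT = 128    # granularity of cache hits beyond the minimum


def expected_cached_tokens(shared_prefix_tokens: int) -> int:
    """Model of the documented rule: below 1,024 shared tokens nothing is
    cached; above that, the cached length is rounded down to a 128-token step."""
    if shared_prefix_tokens < MIN_CACHE_TOKENS:
        return 0
    extra = shared_prefix_tokens - MIN_CACHE_TOKENS
    return MIN_CACHE_TOKENS + (extra // CACHE_INCREMENT) * CACHE_INCREMENT


for n in (1000, 1024, 1151, 1152, 1450):
    print(n, "->", expected_cached_tokens(n))
```

Under this model, a request that shares 1,450 leading tokens with an earlier prompt would report 1,408 cached tokens (1,024 + 3 × 128).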

When a cache hit occurs, the API response includes a cached_tokens field indicating how many tokens came from the cache. For example, a request to the o1-preview-2024-09-12 model might return the following response:

{"created":1729227448,"model":"o1-preview-2024-09-12","object":"chat.completion","usage":{"completion_tokens":1518,"prompt_tokens":1566,"total_tokens":3084,"completion_tokens_details":{"reasoning_tokens":576},"prompt_tokens_details":{"cached_tokens":1408}}}

In this example, 1,408 of the 1,566 prompt tokens (1,024 + 3 × 128) were read from the cache, significantly reducing processing time and cost.
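Reading the cache statistics out of a response takes only a few lines. The sketch below parses the example response shown above with Python's standard `json` module and splits the prompt into cached and uncached tokens. The helper name `cache_stats` is hypothetical, and treating a missing or null `prompt_tokens_details` block as "nothing cached" is an assumption for responses that do not report it.

```python
import json

# The example o1-preview response shown above, as a JSON string.
RAW_RESPONSE = '{"created":1729227448,"model":"o1-preview-2024-09-12","object":"chat.completion","usage":{"completion_tokens":1518,"prompt_tokens":1566,"total_tokens":3084,"completion_tokens_details":{"reasoning_tokens":576},"prompt_tokens_details":{"cached_tokens":1408}}}'


def cache_stats(response: dict) -> tuple[int, int, int]:
    """Return (prompt_tokens, cached_tokens, uncached_tokens) from a response."""
    usage = response["usage"]
    prompt_tokens = usage["prompt_tokens"]
    # A missing or null details block is treated as "nothing cached".
    details = usage.get("prompt_tokens_details") or {}
    cached = details.get("cached_tokens") or 0
    return prompt_tokens, cached, prompt_tokens - cached


prompt, cached, uncached = cache_stats(json.loads(RAW_RESPONSE))
print(f"{cached}/{prompt} prompt tokens from cache ({cached / prompt:.1%}); "
      f"{uncached} processed from scratch")
```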

Cache Retention Time

According to OpenAI, cached prompts are not kept indefinitely. The current caching rules for the GPT series models are as follows:

- Cache duration: A cached prefix is typically cleared after 5 to 10 minutes of inactivity, and it is always removed within one hour of its last use.

- Cache miss: If even one of the first 1,024 tokens of the prompt differs, the request is a cache miss and cached_tokens will be 0.

- Cache is not shared: Prompt caches are not shared across different subscriptions; each subscription has its own cache.
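The retention and isolation rules above can be summarized in a toy simulation. This is purely illustrative and not how OpenAI implements caching: the class `ToyPrefixCache` is hypothetical, it models only hit or miss on the first 1,024 tokens (not the 128-token increments), token IDs are plain integers, and the 600-second idle window is an assumed stand-in for the documented 5 to 10 minutes.

```python
import hashlib
from dataclasses import dataclass, field

PREFIX_TOKENS = 1024  # only the first 1,024 tokens decide hit or miss here


@dataclass
class ToyPrefixCache:
    """Toy model of the documented retention rules, NOT OpenAI's implementation.

    idle_ttl stands in for the 5-10 minute inactivity window (assumed 600 s).
    """
    idle_ttl: float = 600.0
    # (subscription, prefix hash) -> time the entry was last used
    entries: dict = field(default_factory=dict)

    @staticmethod
    def _prefix_key(tokens: list[int]) -> str:
        prefix = ",".join(map(str, tokens[:PREFIX_TOKENS]))
        return hashlib.sha256(prefix.encode()).hexdigest()

    def lookup(self, subscription: str, tokens: list[int], now: float) -> bool:
        """Return True on a cache hit; store or refresh the entry either way."""
        if len(tokens) < PREFIX_TOKENS:
            return False  # too short to be cached at all
        # Keying on the subscription keeps each subscription's cache separate.
        key = (subscription, self._prefix_key(tokens))
        last_used = self.entries.get(key)
        hit = last_used is not None and now - last_used <= self.idle_ttl
        self.entries[key] = now
        return hit


cache = ToyPrefixCache()
prompt = list(range(1100))                # fake token IDs
print(cache.lookup("sub-a", prompt, 0))   # first request: populates the cache
print(cache.lookup("sub-a", prompt, 60))  # same prefix, same subscription
print(cache.lookup("sub-b", prompt, 60))  # different subscription
```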

Supported Cached Content Types

Which content can be cached depends on the model. The o1 series models, for example, support only text: they do not support system messages, images, tool calls, or structured outputs, so their caching applies mainly to user messages. The gpt-4o and gpt-4o-mini models support caching for more content types, including:

- Messages: The complete messages array, including system, user, and assistant messages.

- Images: Images included in user messages, either as links or as base64-encoded data, as long as the detail parameter is the same across requests.

- Tool use: Both the messages array and the list of available tool definitions.

- Structured outputs: The structured output schema, which is attached as a prefix to the system message.

To improve the chance of cache hits, place repeated content at the beginning of the messages array.
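The ordering advice can be illustrated by building the messages array two ways and counting how many leading messages two consecutive requests share. This is a sketch under stated assumptions: the system prompt and few-shot examples are hypothetical and far shorter than the 1,024 tokens a real cacheable prefix would need, and comparing whole messages is only a rough proxy for comparing tokens.

```python
# Hypothetical static content that is identical on every request.
SYSTEM_PROMPT = "You are a support assistant for a fictional company, ExampleCo."
FEW_SHOT = [
    {"role": "user", "content": "How do I reset my password?"},
    {"role": "assistant", "content": "Go to Settings, then Account, then Reset password."},
]


def build_messages(question: str) -> list[dict]:
    """Cache-friendly order: static system prompt and examples first,
    the per-request question last."""
    return [{"role": "system", "content": SYSTEM_PROMPT}, *FEW_SHOT,
            {"role": "user", "content": question}]


def build_messages_question_first(question: str) -> list[dict]:
    """Cache-unfriendly order: the part that changes comes first."""
    return [{"role": "user", "content": question},
            {"role": "system", "content": SYSTEM_PROMPT}, *FEW_SHOT]


def shared_leading_messages(a: list[dict], b: list[dict]) -> int:
    """Count how many messages at the start of two arrays are identical."""
    count = 0
    for x, y in zip(a, b):
        if x != y:
            break
        count += 1
    return count


q1, q2 = "Where is my invoice?", "Can I change my plan?"
print(shared_leading_messages(build_messages(q1), build_messages(q2)))
print(shared_leading_messages(build_messages_question_first(q1),
                              build_messages_question_first(q2)))
```

With the static content first, the two requests share their first three messages; with the question first, they diverge immediately, so no prefix could be reused.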

API Versions That Support Prompt Caching

Official support for prompt caching was first added in API version 2024-10-01-preview. For the o1 model series, the API response also includes the cached_tokens parameter, which shows how many tokens were served from the cache.

Checklist for using prompt caching:

- Minimum length: Make sure the prompt is at least 1,024 tokens long.

- Consistent prefix: Make sure the first 1,024 tokens of the prompt are identical across requests.

- API response: On a cache hit, the API response reports cached_tokens, indicating how many tokens were served from the cache.
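The first two checklist items can be checked locally before sending a request, given the token IDs of an earlier prompt and the new one (obtained, for example, with a tokenizer library such as tiktoken; this sketch simply takes lists of integers). The helper names are hypothetical, and passing the check does not guarantee a hit, since the earlier prefix may already have been evicted.

```python
from typing import Sequence

MIN_CACHEABLE = 1024  # documented minimum prompt length and prefix length


def common_prefix_len(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of leading token IDs the two prompts share."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def meets_cache_conditions(previous: Sequence[int], current: Sequence[int]) -> bool:
    """Check the two documented conditions against one earlier prompt.

    Passing does not guarantee a hit: the earlier prefix may have been evicted.
    """
    long_enough = len(current) >= MIN_CACHEABLE
    same_prefix = common_prefix_len(previous, current) >= MIN_CACHEABLE
    return long_enough and same_prefix


earlier = list(range(2000))                      # fake token IDs
later = list(range(1024)) + [-1] * 500           # same first 1,024 tokens
print(meets_cache_conditions(earlier, later))
```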

Can Prompt Caching Be Disabled?

Prompt caching is enabled by default for all supported models, and there is currently no option to disable it. If you use a supported model, caching applies automatically to every eligible request.

Why Does Prompt Caching Matter?

Prompt caching brings two major benefits:

- Lower latency: Skipping repeated processing of identical content significantly speeds up responses.

- Lower cost: Caching reduces the number of tokens that must be processed from scratch, cutting overall computational cost, especially for long prompts with a lot of repeated content.

Prompt caching is especially useful in applications that repeatedly process the same data or prompts, such as conversational AI systems, data extraction, and repetitive queries.

Best Practices for OpenAI Prompt Caching

OpenAI also offers some official advice for improving the cache hit rate:

- Structure your prompts: Put static or repeated content at the beginning of the prompt and dynamic content at the end.

- Monitor cache metrics: Track the cache hit rate, latency, and the percentage of tokens served from the cache, and use them to refine your prompts and caching strategy.

- Use long prompts and off-peak hours: Longer prompts and requests sent during off-peak hours are more likely to hit the cache, because the cache is cleared more often during peak hours.

- Stay consistent: Send requests with the same prompt prefix regularly to reduce the likelihood of cache eviction.
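For the monitoring advice, a small accumulator over response usage blocks is enough to track the three suggested metrics. This is a hypothetical helper, not part of any OpenAI SDK: it assumes each response's usage has been converted to a plain dict, and that latency is measured on the client side, for example with `time.perf_counter()` around the API call. The latency figures in the example are made up.

```python
from dataclasses import dataclass


@dataclass
class CacheMetrics:
    """Running totals for cache hit rate, cached-token share, and latency."""
    requests: int = 0
    requests_with_hit: int = 0
    prompt_tokens: int = 0
    cached_tokens: int = 0
    total_latency_s: float = 0.0

    def record(self, usage: dict, latency_s: float) -> None:
        """Add one response's usage block and its measured wall-clock latency."""
        details = usage.get("prompt_tokens_details") or {}
        cached = details.get("cached_tokens") or 0
        self.requests += 1
        self.requests_with_hit += int(cached > 0)
        self.prompt_tokens += usage["prompt_tokens"]
        self.cached_tokens += cached
        self.total_latency_s += latency_s

    @property
    def hit_rate(self) -> float:
        """Fraction of requests with at least one cached token."""
        return self.requests_with_hit / self.requests if self.requests else 0.0

    @property
    def cached_token_share(self) -> float:
        """Fraction of all prompt tokens that were served from the cache."""
        return self.cached_tokens / self.prompt_tokens if self.prompt_tokens else 0.0

    @property
    def mean_latency_s(self) -> float:
        return self.total_latency_s / self.requests if self.requests else 0.0


metrics = CacheMetrics()
metrics.record({"prompt_tokens": 1566,
                "prompt_tokens_details": {"cached_tokens": 1408}}, latency_s=1.2)
metrics.record({"prompt_tokens": 1200}, latency_s=2.5)
print(f"hit rate {metrics.hit_rate:.0%}, "
      f"cached share {metrics.cached_token_share:.1%}, "
      f"mean latency {metrics.mean_latency_s:.2f}s")
```

Tracking hit rate and cached-token share separately helps distinguish "few requests hit the cache" from "requests hit, but only a small part of each prompt is reused".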

Frequently Asked Questions About OpenAI Prompt Caching

- How does caching protect data privacy?

Prompt caches are not shared between organizations. Only members of the same organization can access the same cached prompts.

- Does prompt caching affect output tokens or the final response?

No. Prompt caching does not affect the model's output: the generated output is the same whether or not the cache is used, because only the prompt itself is cached and the response is computed anew each time from the cached prompt.

- Is it possible to clear the cache manually?

Manually clearing the cache is not currently supported. Prompts that go unused are removed from the cache automatically. Typically, the cache is cleared after 5 to 10 minutes of inactivity, but during periods of low traffic it may persist for up to an hour.

- Do I have to pay extra to use prompt caching?

No. Caching is enabled automatically, with no extra action required and no additional cost.

- Do cached prompts count against TPM limits?

Yes. Caching does not affect rate limits, so cached tokens still count toward your limits.

- Can I get prompt caching discounts on Scale Tier and the Batch API?

Prompt caching discounts are not available on the Batch API, but they are available on Scale Tier. With Scale Tier, tokens that spill over to the shared API are also eligible for caching discounts.

- Does prompt caching apply to Zero Data Retention requests?

Yes, prompt caching complies with the existing zero data retention policy.

Summary

Azure OpenAI's prompt caching feature offers a valuable optimization for long prompts and repeated requests, significantly improving efficiency by reducing computational latency and cost.

As more models gain support and the feature continues to improve, Azure OpenAI users can expect an even more efficient and cost-effective service.

© Copyright notice

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
