OpenAI's Next-Generation Models Hit Big Bottleneck, Former Chief Scientist Reveals New Route

OpenAI's next-generation large language model, Orion, may be hitting an unprecedented bottleneck. According to The Information, OpenAI employees say that the Orion model's performance gains have fallen short of expectations, and that the quality gains are "much smaller" than the GPT-3 to GPT-4 upgrade.

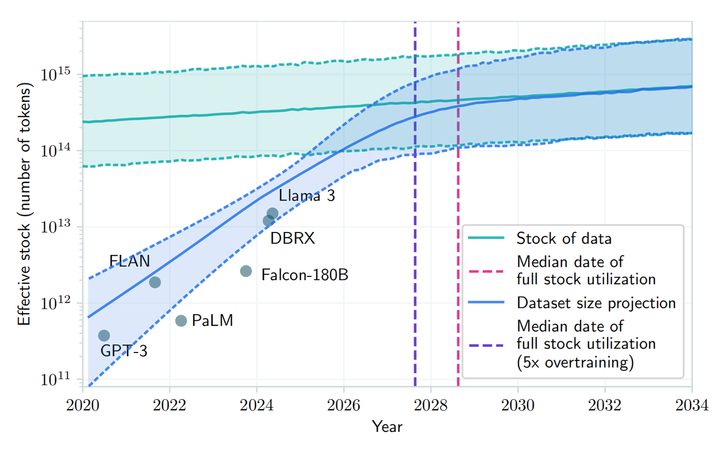

In addition, Orion is not as stable as its GPT-4 predecessor on certain tasks. Although Orion has stronger linguistic capabilities, its programming performance may not surpass that of GPT-4. The dwindling supply of high-quality text and other training data has made such data harder to find, slowing the development of large language models (LLMs).

Not only that, but future training will demand more compute, more capital, and even more electricity, meaning that the cost of developing Orion and subsequent large language models will increase significantly. OpenAI researcher Noam Brown recently told the TED AI conference that more advanced models may be 'economically unfeasible':

Are we really going to spend hundreds of billions or trillions of dollars training models? At some point, the law of scaling will break down.

OpenAI has set up a foundations team, led by Nick Ryder, to work out how to deal with the increasing scarcity of training data and how long the scaling laws for large models will continue to hold.

Scaling laws are one of the core assumptions in AI: as long as there is more data to learn from and more computational power to drive training, the performance of a large language model can continue to improve at the same rate. In short, a scaling law describes the relationship between resource inputs (amount of data, computational power, model size) and model performance, that is, how much performance improves when more resources are invested in a large language model.

For example, training a large language model can be likened to producing an automobile: initially, the factory is small, with only a few machines and a few workers, and at this point, each additional machine or worker results in a significant increase in output because the additional resources are directly translated into productivity. However, as the factory grows in size, the increase in output per additional machine or worker diminishes, possibly due to increased management complexity or less efficient worker collaboration.

When a factory reaches a certain size, adding more machines or workers may yield only a limited increase in production. At this point, the factory may be approaching the limits of its resources, such as land, power supply, and logistics, and further investment can no longer bring a matching increase in production capacity. This is exactly the dilemma the Orion model faces: as the model size increases (similar to adding more machines and workers), the performance improvement is very significant in the early and middle stages, but in the later stages, even if the model size or the amount of training data is increased, the performance improvement becomes marginal. This is known as "hitting the wall".
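The diminishing returns described in the factory analogy can be sketched numerically. The snippet below is a minimal illustration, assuming a toy power-law relationship between training compute and loss. The function names and the constants `a`, `b`, and `floor` are hypothetical and are not fitted to Orion, GPT-4, or any real model.

```python
# Toy illustration of diminishing returns under a power-law scaling curve.
# All constants are made up for illustration; they are not measured values.


def power_law_loss(compute: float, a: float = 10.0, b: float = 0.1,
                   floor: float = 1.5) -> float:
    """Loss predicted by a toy power law: floor + a * compute ** (-b)."""
    return floor + a * compute ** (-b)


def gains_per_tenfold(start: float, steps: int) -> list[float]:
    """Loss reduction obtained from each successive 10x increase in compute."""
    gains = []
    compute = start
    for _ in range(steps):
        before = power_law_loss(compute)
        after = power_law_loss(compute * 10)
        gains.append(before - after)
        compute *= 10
    return gains


if __name__ == "__main__":
    for step, gain in enumerate(gains_per_tenfold(1e3, 6), start=1):
        print(f"10x step {step}: loss drops by {gain:.4f}")
```

Under these assumptions every tenfold increase in compute still lowers the loss, but each step buys a smaller reduction than the one before it, while the cost of the step grows tenfold.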

According to a paper on arXiv, the growing demand for public human-written text, combined with its limited supply, could lead large language model development to exhaust that supply between 2026 and 2032. Although he pointed to the 'economic problems' of future model training, Noam Brown rejects the pessimistic conclusion, arguing that 'the development of AI is not going to slow down anytime soon'.

Researchers at OpenAI generally share this view: although the scaling laws may be delivering slower gains, they expect optimization of inference-time computation and post-training improvements to keep overall AI development from slowing significantly. Meta CEO Mark Zuckerberg, OpenAI CEO Sam Altman, and the CEOs of other AI developers have also publicly stated that the traditional limits of the scaling laws have not yet been reached, and they are still building expensive data centers to improve the performance of pre-trained models.

Peter Welinder, VP of Product at OpenAI, also took to social media to say that 'people underestimate the power of test-time computation'. Test-time computation (TTC) is a machine learning concept that refers to the computation that occurs when a model is deployed to make inferences or predictions about new input data, separate from the computation that occurs during training. The training phase is the process by which the model learns data patterns, while the testing phase is the process by which the model is applied to real-world tasks.

Traditional machine learning models typically predict new instances of data without additional computation once they have been trained and deployed. However, some more complex models, such as certain types of deep learning models, may require additional computation at test time (inference time). The 'o1' model developed by OpenAI employs this type of inference pattern. The AI industry as a whole is shifting its focus to optimizing models after initial training is complete.
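One simple way to see why extra computation at inference time can help is majority voting over several sampled answers. The sketch below is a generic illustration only and does not describe how o1 works internally. `noisy_solver` is a hypothetical stand-in for a single sampled model answer, and the 40% per-sample accuracy is an assumed figure, not a measurement.

```python
import random
from collections import Counter


def noisy_solver(correct_answer: int, accuracy: float,
                 rng: random.Random) -> int:
    """Hypothetical stand-in for one sampled answer from a model.

    Returns the correct answer with probability `accuracy`; otherwise
    returns one of six nearby wrong answers chosen uniformly.
    """
    if rng.random() < accuracy:
        return correct_answer
    return correct_answer + rng.choice([-3, -2, -1, 1, 2, 3])


def majority_vote(correct_answer: int, accuracy: float, n_samples: int,
                  rng: random.Random) -> int:
    """Spend more test-time compute: sample n answers, keep the most common."""
    votes = Counter(
        noisy_solver(correct_answer, accuracy, rng) for _ in range(n_samples)
    )
    return votes.most_common(1)[0][0]


def estimate_accuracy(n_samples: int, trials: int = 2000,
                      accuracy: float = 0.4, seed: int = 0) -> float:
    """Fraction of trials in which the voted answer is correct."""
    rng = random.Random(seed)
    hits = sum(
        majority_vote(42, accuracy, n_samples, rng) == 42
        for _ in range(trials)
    )
    return hits / trials


if __name__ == "__main__":
    for n in (1, 5, 25):
        print(f"{n:>2} samples per question: accuracy {estimate_accuracy(n):.2f}")
```

The underlying solver never changes in this toy setup. Only the number of samples drawn per question grows, which is the sense in which computation is being spent at test time rather than during training.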

Ilya Sutskever, a co-founder and former chief scientist of OpenAI, acknowledged in a recent interview with Reuters that the gains from scaling up pre-training, the phase in which a model learns linguistic patterns and structures from large amounts of unlabeled data, have plateaued. "The 2010s were the age of scaling, now we're back in the age of wonder and discovery once again," he said. He also noted, "Scaling the right thing matters more now than ever."

Orion is scheduled to launch in 2025, and OpenAI has named it 'Orion' rather than 'GPT-5', perhaps hinting at a fresh start. Although the limits on scaling currently make it difficult to deliver, the newly named model is still expected to open up transformative opportunities for large AI models.

Copyright notice: this article is the property of AI Sharing Circle. Please do not reproduce it without permission.
