OpenAI Releases New Generation of Audio Modeling APIs, Major Upgrade in Voice Interaction Technology

OpenAI recently announced the launch of its new generation of audio modeling APIs, aimed at helping developers build more capable voice assistants. The release is seen as a major advance in voice interaction technology, one that should make spoken human-computer interaction more natural and efficient.

The release includes two key updates: more advanced speech-to-text models and a more expressive text-to-speech model. OpenAI claims the speech-to-text models set a new benchmark for accuracy and reliability, especially in challenging scenarios such as complex accents, noisy environments, and varying speech speeds. As a result, the new models should noticeably improve transcription quality and efficiency in applications such as customer call centers and meeting-note transcription.

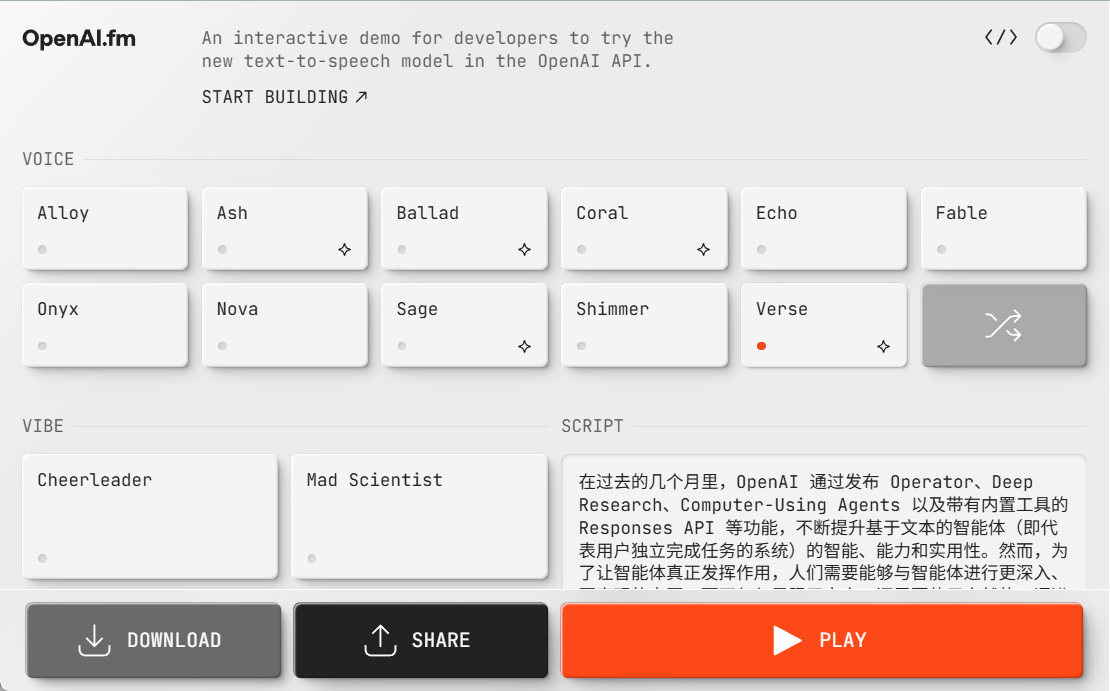

Notably, for the first time, OpenAI allows developers to instruct a text-to-speech model to speak in a particular way. For example, developers can tell the model to "speak like an empathetic customer service agent," which gives voice assistants far more room for personalization. This feature opens the door to a wide range of scenarios, from more empathetic customer service to more expressive creative storytelling.

OpenAI launched its first audio model, Whisper, in 2022 and has continued to invest in improving its models' intelligence, accuracy, and reliability ever since. This release builds on that longstanding effort. Developers can now use the API to build more accurate speech-to-text systems, as well as more distinctive and expressive text-to-speech voices.

A new generation of speech-to-text models: gpt-4o-transcribe and gpt-4o-mini-transcribe

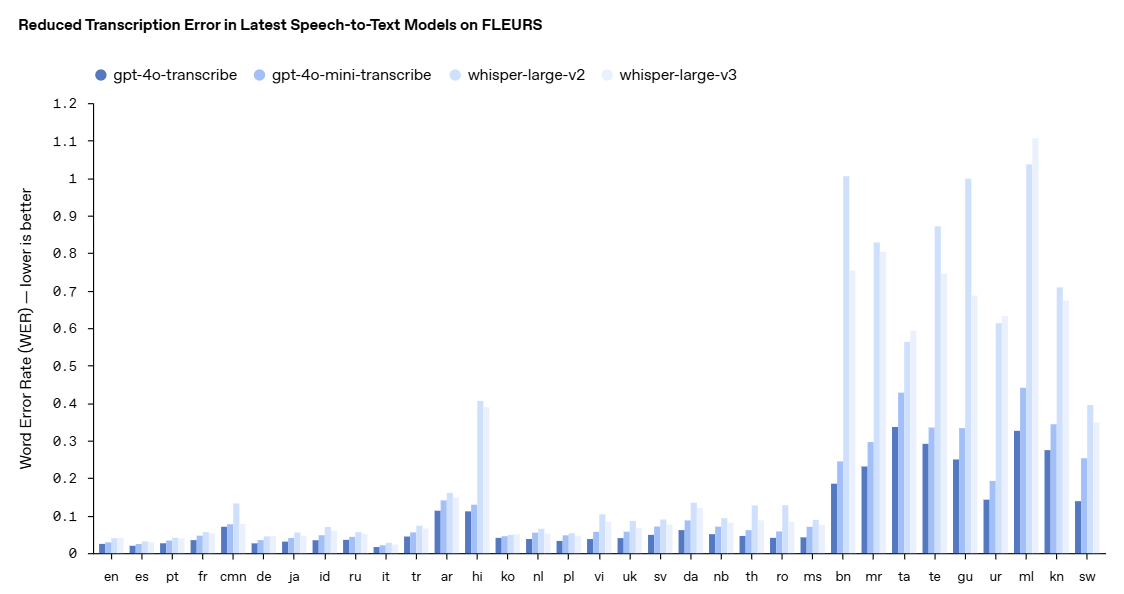

OpenAI has released two new speech-to-text models, gpt-4o-transcribe and gpt-4o-mini-transcribe. Compared with the original Whisper models, they show significant improvements in word error rate (WER), language recognition, and accuracy.

- Word Error Rate (WER) measures the accuracy of a speech recognition model by calculating the percentage of incorrectly transcribed words compared to a reference transcript; the lower the WER, the fewer the errors. OpenAI's latest speech-to-text models achieve lower WER across various benchmarks, including FLEURS (Few-shot Learning Evaluation of Universal Representations of Speech), a multilingual speech benchmark that covers over 100 languages using manually transcribed audio samples. These results demonstrate higher transcription accuracy and stronger language coverage. According to OpenAI's published results, the models consistently outperform Whisper v2 and Whisper v3 across the language evaluations.
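
To make the metric concrete, here is a minimal sketch of the standard WER calculation: the word-level edit distance (substitutions, deletions, insertions) divided by the number of words in the reference. The function name and the simple lowercase-and-split normalization are assumptions made for illustration; benchmark suites such as FLEURS typically apply more careful text normalization, and this is not OpenAI's evaluation code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (substitutions + deletions + insertions) / reference length."""
    # Assumed normalization: lowercase and split on whitespace.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix processed
    # so far and the first j words of the hypothesis.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting i reference words matches an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substituted word out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.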

gpt-4o-transcribe achieves a lower word error rate than the Whisper models on several established benchmarks. OpenAI says these improvements result from targeted innovations in reinforcement learning and extensive mid-training on diverse, high-quality audio datasets.

As a result, the new speech-to-text models are better able to capture the nuances of speech, reduce misrecognition, and improve transcription reliability, especially in difficult conditions such as accents, noisy environments, and varying speech speeds. These models are now available in the speech-to-text API.

To illustrate the performance improvement, OpenAI cites the FLEURS (Few-shot Learning Evaluation of Universal Representations of Speech) benchmark, a multilingual speech benchmark covering more than 100 languages using manually transcribed audio samples. The results show that OpenAI's new models have lower word error rates than both Whisper v2 and Whisper v3 in cross-language evaluations, demonstrating greater transcription accuracy and broader language coverage.
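
As a rough illustration of calling the speech-to-text API, the sketch below uses the official `openai` Python package and its `audio.transcriptions.create` method. It assumes the package is installed and that an `OPENAI_API_KEY` environment variable is set; the `choose_model` helper and its `low_cost` flag are hypothetical names introduced here, and the current API reference should be checked before relying on any parameter.

```python
def choose_model(low_cost: bool = False) -> str:
    """Pick one of the two model names mentioned in the article.

    The low_cost flag is a hypothetical convenience, not part of the API.
    """
    return "gpt-4o-mini-transcribe" if low_cost else "gpt-4o-transcribe"


def transcribe(audio_path: str, low_cost: bool = False) -> str:
    """Send an audio file to the speech-to-text endpoint and return the text."""
    # Imported lazily so the helper above works without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(audio_path, "rb") as audio_file:
        result = client.audio.transcriptions.create(
            model=choose_model(low_cost),
            file=audio_file,
        )
    return result.text


if __name__ == "__main__":
    # "meeting.mp3" is a placeholder path for illustration.
    print(transcribe("meeting.mp3"))
```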

A New Generation of Text-to-Speech Models: gpt-4o-mini-tts

In addition to the speech-to-text models, OpenAI has also introduced the new gpt-4o-mini-tts text-to-speech model, whose main highlight is greater steerability. Developers can control not only what the model says, but also how it says it. This capability leaves more room to customize the user experience, with applications ranging from customer service to creative content. The model is available in the text-to-speech API. Note that these text-to-speech models are currently limited to artificial, preset voices, which OpenAI monitors to ensure they consistently match the synthetic presets.
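
The "what to say" versus "how to say it" split can be sketched with the official `openai` Python package, where the text goes in `input` and the speaking style goes in `instructions`. This is a hedged example rather than a definitive integration: it assumes the package is installed, that `OPENAI_API_KEY` is set, and that the preset voice name `coral` is available; the helper names and output path are hypothetical.

```python
from pathlib import Path


def build_speech_request(text: str, style: str, voice: str = "coral") -> dict:
    """Assemble the keyword arguments for a text-to-speech request."""
    return {
        "model": "gpt-4o-mini-tts",
        "voice": voice,         # one of the preset synthetic voices (assumed name)
        "input": text,          # what the model should say
        "instructions": style,  # how the model should say it
    }


def synthesize(text: str, style: str, out_path: str = "reply.mp3") -> Path:
    """Generate speech and stream the audio to a local file."""
    # Imported lazily so build_speech_request works without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    request = build_speech_request(text, style)
    with client.audio.speech.with_streaming_response.create(**request) as response:
        response.stream_to_file(out_path)
    return Path(out_path)


if __name__ == "__main__":
    synthesize(
        "I'm sorry about the delay. Let me fix that for you right away.",
        "Speak like an empathetic customer service agent.",
    )
```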

Get a quick taste of gpt-4o-mini-tts here

https://www.openai.fm/

The technological innovation behind the model

OpenAI reveals that the new audio models are built on top of the GPT-4o and GPT-4o-mini architectures and were pre-trained at scale using datasets specifically geared towards audio, which is critical for optimizing model performance. This targeted approach allows the models to understand the subtleties of speech more deeply and excel in a variety of audio-related tasks.

In addition, OpenAI has improved its knowledge distillation techniques to enable knowledge transfer from large audio models to small, efficient ones. By using advanced self-play methods, its distillation datasets capture real conversational dynamics and replicate genuine user-assistant interactions. This helps the smaller models deliver excellent conversational quality and responsiveness.

For the speech-to-text models, OpenAI has adopted a reinforcement learning (RL)-heavy paradigm to push transcription accuracy further. According to OpenAI, this approach significantly improves precision and reduces hallucination, making its speech-to-text models more competitive in complex speech recognition scenarios.

These technological advances represent the latest progress in the field of audio modeling, where OpenAI combines innovative approaches with practical improvements aimed at enhancing the performance of speech applications.

API Openness and Future Outlook

These new audio models are now available to all developers. For developers who are already using text models to build conversational experiences, integrating speech-to-text and text-to-speech models is the easiest way to build voice assistants, and OpenAI has also released integration with the Agents SDK to simplify the development process. For developers looking to build low-latency speech-to-speech experiences, OpenAI recommends using the speech-to-speech models in the Realtime API.
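
The chained approach described above (speech-to-text, then a text model, then text-to-speech) can be sketched as a simple three-stage function. The example below is an illustrative outline with hypothetical names and stand-in stages, not the Agents SDK or any OpenAI interface; in a real application each callable would wrap the corresponding API call.

```python
from typing import Callable


def run_voice_turn(
    audio_in: bytes,
    transcribe: Callable[[bytes], str],
    respond: Callable[[str], str],
    synthesize: Callable[[str], bytes],
) -> bytes:
    """Handle one conversational turn: audio in, audio out."""
    user_text = transcribe(audio_in)   # stage 1: speech-to-text
    reply_text = respond(user_text)    # stage 2: existing text-based agent
    return synthesize(reply_text)      # stage 3: text-to-speech


if __name__ == "__main__":
    # Stand-in stages so the sketch runs without any network access.
    fake_audio = "what time do you open".encode("utf-8")
    reply_audio = run_voice_turn(
        fake_audio,
        transcribe=lambda audio: audio.decode("utf-8"),
        respond=lambda text: f"You asked: {text}",
        synthesize=lambda text: text.encode("utf-8"),
    )
    print(reply_audio)
```

Because each stage finishes before the next begins, this design is easy to bolt onto an existing text agent but adds latency, which is why the article points to the Realtime API for low-latency speech-to-speech use cases.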

Going forward, OpenAI plans to keep investing in the intelligence and accuracy of its audio models and to explore ways for developers to bring their own custom voices to build more personalized experiences, while remaining aligned with its safety standards. In addition, OpenAI is actively engaging in conversations with policymakers, researchers, developers, and creatives about the challenges and opportunities that synthetic voices can present. OpenAI says it is excited to see developers build innovative applications with these enhanced audio capabilities, and that it will continue to invest in other modalities, including video, to support developers in building multimodal agentic experiences.

The new generation of audio model APIs released by OpenAI adds fresh momentum to the development of voice interaction technology. As the technology advances and application scenarios multiply, there is good reason to expect digital products that can increasingly listen and speak, and human-computer interaction that feels more natural and fluid.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
