How OpenAI's bots 'acted like a DDoS attack' and took down a seven-person company's website

On Saturday, Triplegangers CEO Oleksandr Tomchuk was notified that his company's e-commerce site was down. It looked like some kind of distributed denial-of-service attack.

He soon discovered that the culprit was one of OpenAI's bots, which was relentlessly trying to crawl his entire massive website.

"We have over 65,000 products, and each product has a page," Tomchuk told TechCrunch. "Each page has at least three photos."

OpenAI sent "tens of thousands" of server requests trying to download all of this content, including hundreds of thousands of photos and their detailed descriptions.

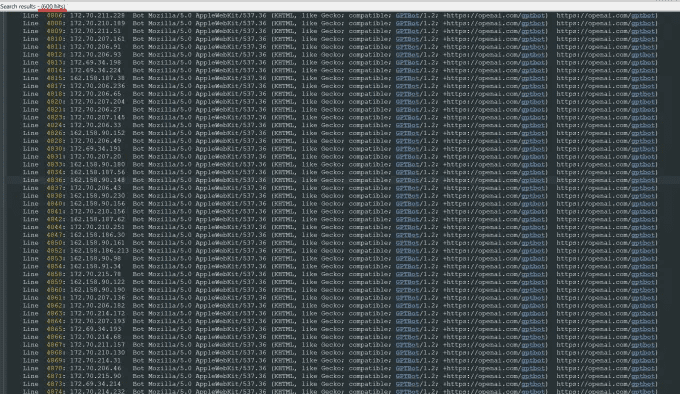

"OpenAI used 600 IPs to crawl the data, and we're still analyzing last week's logs, so maybe the number is even higher," he said of the IP addresses the bot used to try to access his site.

"Their crawlers were destroying our site," he said. "It was basically a DDoS attack."

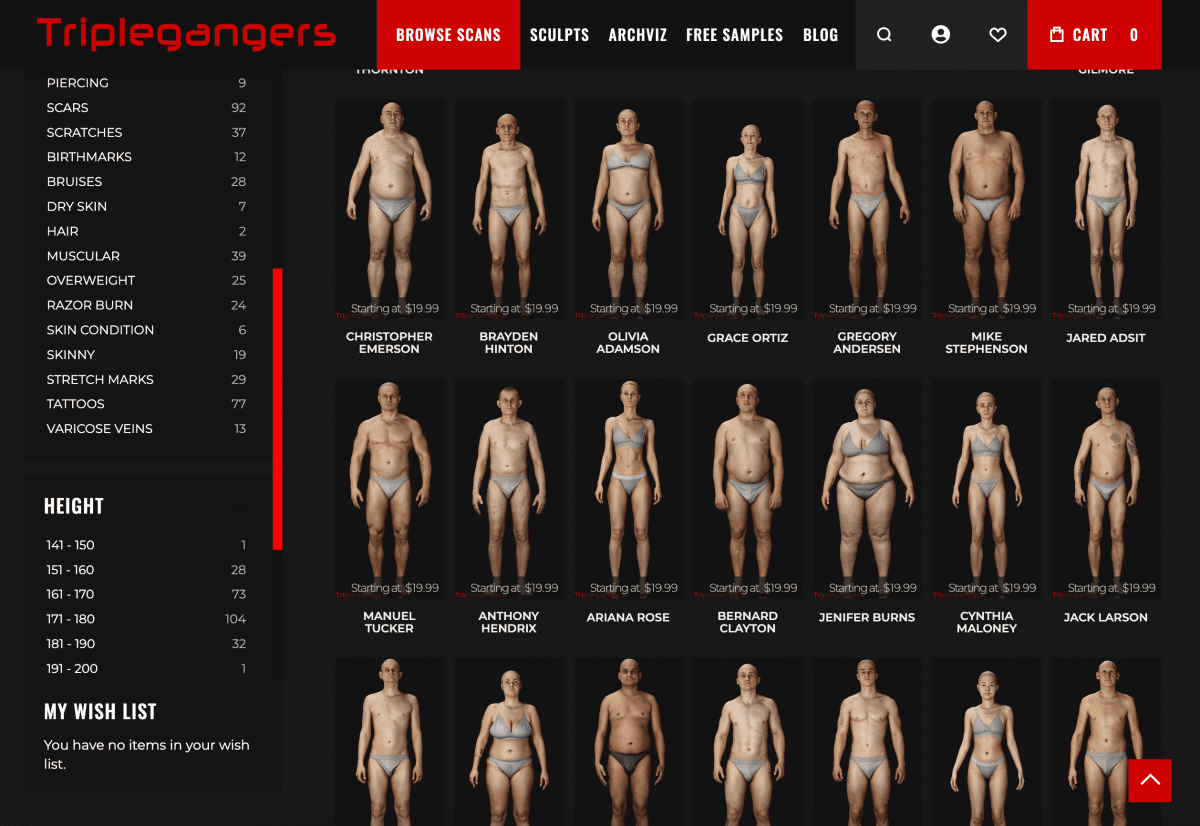

Triplegangers' website is its business. The seven-employee company has spent more than a decade assembling what it calls the largest database of "human digital doubles" on the web, meaning 3D image files scanned from actual human models.

It sells 3D object files as well as photographs to 3D artists, video game makers, and anyone who needs to digitally recreate authentic human features: everything from hands to hair, skin, and full bodies.

Tomchuk's team is based in Ukraine but is also licensed in the U.S., out of Tampa, Florida. Its website has a terms of service page that forbids bots from taking its images without permission. But that alone does nothing. A site must use a properly configured robots.txt file with tags that specifically tell OpenAI's bot, GPTBot, to leave the site alone. (OpenAI also has two other bots, ChatGPT-User and OAI-SearchBot, which have their own tags, according to its information page on its crawlers.)

Robots.txt, also known as the Robots Exclusion Protocol, was created to tell search engines what not to crawl as they index the web. OpenAI says on its information page that it honors such files when they are configured with its own do-not-crawl tags, though it also warns that its bots can take up to 24 hours to recognize an updated robots.txt file.

As Tomchuk experienced, if a site isn't using robots.txt properly, OpenAI and others take that to mean they can crawl its content at will. This is not an opt-in system.
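As a rough illustration of how such a file is read, the sketch below uses Python's standard `urllib.robotparser` module to evaluate a small robots.txt against a few crawler names. The bot names come from this article; the rules, the `is_allowed` helper, and the `example.com` URL are assumptions made for the example, not Triplegangers' actual configuration.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content (an assumption for this sketch).
# It blocks GPTBot everywhere and explicitly allows OAI-SearchBot.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: OAI-SearchBot
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/products/1"  # hypothetical product page
    for bot in ("GPTBot", "OAI-SearchBot", "SomeOtherBot"):
        print(bot, is_allowed(ROBOTS_TXT, bot, page))
```

Note that a crawler with no matching rule, such as the hypothetical `SomeOtherBot`, is treated as allowed, which is the opt-out behavior described above. The file only expresses a request; it does not enforce anything on a crawler that chooses to ignore it.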

To add insult to injury, not only was Triplegangers knocked offline by OpenAI's bot during U.S. business hours, but Tomchuk also expects a much higher AWS bill because of all the CPU and download activity the bot generated.

Robots.txt isn't a failsafe either. AI companies comply with it voluntarily. Another AI startup, Perplexity, was famously called out last summer by a Wired investigation when some evidence suggested that Perplexity was not complying with it.

Unable to determine what is being accessed

By Wednesday, after days of return visits from OpenAI's bot, Triplegangers had a properly configured robots.txt file in place and had set up a Cloudflare account to block GPTBot and several other bots Tomchuk discovered, such as Barkrowler (an SEO crawler) and Bytespider (TikTok's crawler). Tomchuk also hopes he has blocked crawlers from other AI model companies. On Thursday morning, the site did not crash, he said.

But Tomchuk still has no reasonable way to find out exactly what OpenAI managed to take, or to get that material removed. He has found no way to contact OpenAI and ask. OpenAI did not respond to TechCrunch's request for comment, and it has so far failed to deliver its long-promised opt-out tool, as TechCrunch recently reported.

This is a particularly thorny issue for Triplegangers. "Rights are a serious issue in the business we're in because we scan real people," he says. Under laws like Europe's GDPR, "they can't just take a picture of anyone on the web and use it."

Triplegangers' website is also a particularly tasty find for AI crawlers. Multibillion-dollar startups, such as Scale AI, have been built on humans painstakingly tagging images to train AI. Triplegangers' site contains photos tagged in detail: ethnicity, age, tattoos and scars, all body types, and so on.

Ironically, the OpenAI bot's greed is what alerted Triplegangers to how exposed it was. Had it scraped more gently, Tomchuk would never have known, he said.

"It's scary because these companies seem to be exploiting a loophole to crawl data, and they're saying 'If you update your robots.txt with our tags, you can opt out,'" Tomchuk said. But that puts the onus on business owners to understand how to block them.

He wants other small online businesses to know that the only way to find out if an AI bot is accessing a site's copyrighted content is to actively look for it. He's certainly not the only one being terrorized by them. Other website owners recently told Business Insider how OpenAI's bots were destroying their websites and increasing their AWS bills.

The problem grew sharply in 2024. New research from digital advertising company DoubleVerify found that AI crawlers and scrapers caused an 86% increase in "general invalid traffic" in 2024, that is, traffic that doesn't come from real users.

Nevertheless, "most sites still don't know they're being crawled by these bots," Tomchuk warns. "Now we have to monitor log activity on a daily basis to spot these bots."
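The kind of daily log check Tomchuk describes could look something like the sketch below. It is a minimal example, assuming the server writes the common "combined" access log format (client IP first, user agent as the last quoted field); the `summarize_bots` function and the sample log lines are made up for illustration, and the bot list contains only the crawler names mentioned in this article.

```python
import re
from collections import Counter, defaultdict

# Crawler names mentioned in this article; extend for your own site.
BOT_TOKENS = ("GPTBot", "ChatGPT-User", "OAI-SearchBot", "Bytespider", "Barkrowler")

# Assumption: "combined" log format, with the client IP as the first field
# and the user agent as the last double-quoted field on the line.
LINE_RE = re.compile(r'^(?P<ip>\S+) .*"(?P<agent>[^"]*)"\s*$')


def summarize_bots(lines):
    """Map each matched bot name to (request count, distinct IP count)."""
    hits = Counter()
    ips = defaultdict(set)
    for line in lines:
        match = LINE_RE.match(line)
        if not match:
            continue  # skip lines that do not fit the assumed format
        agent = match.group("agent").lower()
        for token in BOT_TOKENS:
            if token.lower() in agent:
                hits[token] += 1
                ips[token].add(match.group("ip"))
                break
    return {token: (hits[token], len(ips[token])) for token in hits}


# Made-up sample lines (documentation IP ranges, simplified user agents).
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Jan/2025:10:00:00 +0000] "GET /p/1 HTTP/1.1" 200 512 "-" "GPTBot/1.0 (sample)"',
    '203.0.113.9 - - [10/Jan/2025:10:00:01 +0000] "GET /p/2 HTTP/1.1" 200 512 "-" "GPTBot/1.0 (sample)"',
    '198.51.100.7 - - [10/Jan/2025:10:00:02 +0000] "GET /p/3 HTTP/1.1" 200 512 "-" "Bytespider (sample)"',
    '198.51.100.8 - - [10/Jan/2025:10:00:03 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (sample browser)"',
]

if __name__ == "__main__":
    for bot, (requests, distinct_ips) in summarize_bots(SAMPLE_LOG).items():
        print(f"{bot}: {requests} requests from {distinct_ips} IPs")
```

Counting distinct IP addresses alongside requests matters here because, as Tomchuk noted, the crawl was spread across hundreds of addresses. Matching on the user agent only catches bots that identify themselves honestly.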

When you think about it, the whole model works a bit like mafia extortion: unless you're protected, the AI bots will take what they want.

"They should be asking for permission, not just grabbing data," Tomchuk said.

Related reading:

1. OpenAI has launched a web crawler called GPTBot, amid privacy and intellectual property concerns raised by data collection on public websites. The crawler is meant to collect public web data transparently, under the OpenAI name, for use in training its AI models.

2. OpenAI uses web crawlers ("bots") and user agents to perform actions for its products, either automatically or when triggered by user requests. OpenAI provides robots.txt tags that let webmasters manage how their sites and content work with AI. Each setting is independent of the others. For example, a site administrator can allow OAI-SearchBot so that the site appears in search results while disallowing GPTBot to indicate that crawled content should not be used to train OpenAI's generative AI foundation models. For search results, note that it can take about 24 hours for a site's robots.txt update to be reflected in OpenAI's systems.

© Copyright notes

Article copyright AI Sharing Circle. All rights reserved; please do not reproduce without permission.
