OneFlow Year-End Gift: a free 900-page eBook on "Generative AI and Large Models".

It's hard to imagine what astonishing changes AI would have seen in 2024 had the Scaling Law not slowed down. Then again, you might be glad it did: the slowdown gives later entrants in the industry a chance to catch up, and gives more ordinary people a chance to ride this wave of the technological revolution.

The changes in the AI space are stirring. A year ago, the AI community generally believed that most models trailed OpenAI's models by six months to a year. Since then, large model pre-training has gradually become less secretive, and its barriers to entry are dropping by orders of magnitude. To the deep shock of the tech community at home and abroad, the gap between the open-source large models represented by DeepSeek and Qwen and the top closed-source model GPT-4o has narrowed drastically, and both of these open-source models come from Chinese AI teams. Meanwhile, as the pre-training performance of large models hits a ceiling, inference is seen as the "second growth curve" for the continued improvement of model capability. The popularization of AI technology is also accelerating further: people can efficiently develop a generative AI application by accessing standardized APIs, or simply enjoy the benefits of AI through the interesting products released by product explorers.

In 2024, OneFlow published 80 quality articles, continuing as always to document and explore the many changes in the field of generative AI and large models. At the end of the year, we selected more than 60 of them and compiled a 900-page "yearbook" for every reader, hoping to help you understand how large models are built, the current state of the industry, and where it is heading. The collection is divided into eight sections: Overview; Large Model Fundamentals; No Secrets to Large Model Training; Large Model "Second Growth Curve": Inference; AI Chip Changes; Generative AI Product Construction; Generative AI Industry Analysis; and Challenges and Future of AGI.

Thank you to each of the authors for openly sharing their AI knowledge and inspiring all of us; that is our motivation to keep compiling this in-depth content. We hope to pick out the pieces worth reading from the clutter of information and spread them to as many people as possible who wish to learn about and explore AI. If you want to truly understand AI, the shortest path is to open this gift and dive into reading it. In 2025, expect new surprises from AI.

I. Overview

- AI in 2024, a year in review: soaring investment, infrastructure reconfiguration, accelerating technology adoption /4

- The trends I see behind 900 open source AI tools /36

II. Large model fundamentals

- Understanding how LLM works using middle school math /49

- Understanding and Coding the LLM Self-Attention Mechanism from Scratch /78

- Revealing the LLM Sampling Process /112

- 50 Diagrams to Visualize and Understand Mixture-of-Experts (MoE) Large Models /125

- Building a Diffusion Model for Video Generation from Scratch /158

- 10 Hidden Ways of Using Large Models That Change Everyday Life /174

III. No secrets to large model training

- LLaMA 3: A New Prologue to the Battle of the Big Models /177

- Extrapolating GPU memory requirements for LLM training /190

- Revealing Batch Processing Strategies on the GPU /200

- The Performance Truth Behind GPU Utilization /205

- Floating Point Allocation for LLM /211

- Performance Optimization of Mixed-Input Matrix Multiplication /219

- 10x accelerated LLM computational efficiency: vanishing matrix multiplication /227

- The largest top-tier dataset is open-sourced: building 15 trillion tokens /240

- 70B Large Model Training Recipe ①: Dataset Creation and Evaluation /244

- From Bare Metal to 70B Large Models ②: Infrastructure Setup and Scripting /270

- 70B Large Model Training Recipe ③: Findings from 1000 Hyperparameter Optimization Experiments /289

- John Schulman, Head of ChatGPT: Upgrade Secrets for Big Models /303

IV. Large model "second growth curve": inference

- The New Battlefield for Generative AI: Logical Reasoning and Inference Compute /318

- Choosing an LLM logical reasoning strategy: inference-time compute vs. training-time compute /330

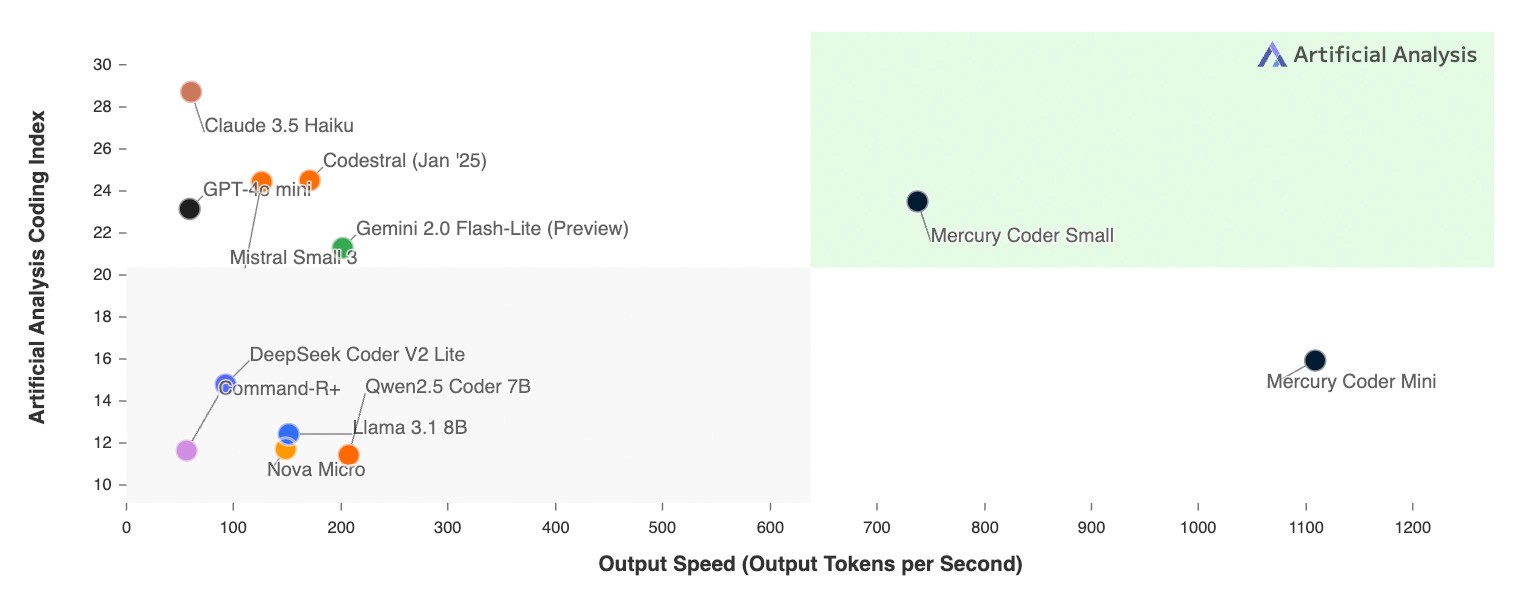

- Exploring the Throughput, Latency, and Cost Space of LLM Inference /345

- Achieving Extreme LLM Inference from Zero /363

- A Beginner's Guide to LLM Inference ①: Initialization and Decoding Phases of Text Generation /402

- A Beginner's Guide to LLM Inference ②: Analyzing the KV Cache in Depth /408

- A Beginner's Guide to LLM Inference ③: Profiling Model Performance /429

- LLM Inference Acceleration Guide /442

- LLM Serving: Maximizing Effective Throughput /480

- How to accurately and interpretably assess the effects of quantization on large models? /491

- Evaluating the effects of LLM quantization: Findings after 500,000 empirical tests /502

- The Ultimate Guide to Optimizing Stable Diffusion XL /510

V. AI Chip Changes

- Renting H100 for $2/hour: the night before the GPU bubble bursts /585

- The Ultimate GPU Interconnect Technology Exploration: The Disappearing Memory Wall /600

- Cerebras: Challenging Nvidia, the "magic" of the world's fastest AI inference chip /614

- 20x faster than GPUs? d-Matrix Inference Price/Performance Analysis /624

- AI Semiconductor Technology, Markets and the Future /630

- AI Data Center History, Technology and Key Players /642

VI. Generative AI product construction

- The first year of big model productization: tactics, operations, and strategy /658

- OpenAI cuts off service, domestic large models are free to use! Token freedom for developers is here /691

- LLM long context RAG ability measured: gpt-o1 vs. Gemini /699

- Large Model Cost-Effectiveness Comparison: DeepSeek 2.5 vs. Claude 3.5 Sonnet vs. GPT-4o /712

- Efficient Coding Tools for 10x Engineers: Cursor x SiliconCloud /718

- Beats Midjourney-v6: run Kuaishou's Kolors without a GPU /723

- How the AI search engine Perplexity approaches product building /728

- Behind the NotebookLM explosion: core insights and innovations in AI-native products /739

- OpenAI's Organizational Formation, Decision Mechanisms, and Product Construction /750

- Taking apart the generative AI platform: underlying components, functionality, and implementation /761

- From generalist to expert: the evolution of AI systems to composite AI /786

VII. Generative AI Industry Analysis

- The Business Code Behind Open Source AI /792

- Capital Mysteries and Flows in the AI Market /805

- Generative AI industry economics: value distribution and profit structure /819

- Latest Survey on Enterprise Generative AI: AI Spending Jumps 6X, Multi-Model Deployments Prevail /829

- Generative AI Inference Technologies, Markets, and the Future /842

- Market Opportunities, Competition and Future of the Generative AI Inference Business /855

- AI is not another "internet bubble" /864

- Sequoia Capital's Top Three AI Outlooks for 2025 /878

VIII. Challenges and future of AGI

- The Myth of AI Scaling /884

- Is the scale-up of large models sustainable? /891

- GenAI's "Critical Leap": Reasoning and Knowledge /904

- The Shackles of LLM Logical Reasoning and Strategies for Breaking Them /924

- Revisiting the Three Fallacies of LLM Logical Reasoning /930

- Richard Sutton, the father of reinforcement learning: the next paradigm in AGI research /937

- Richard Sutton, the father of reinforcement learning: another possible path to AGI /949

https://siliconflow.feishu.cn/file/OSZtbnfYQoa4nBxuQ7Kcwpjwnpf

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.