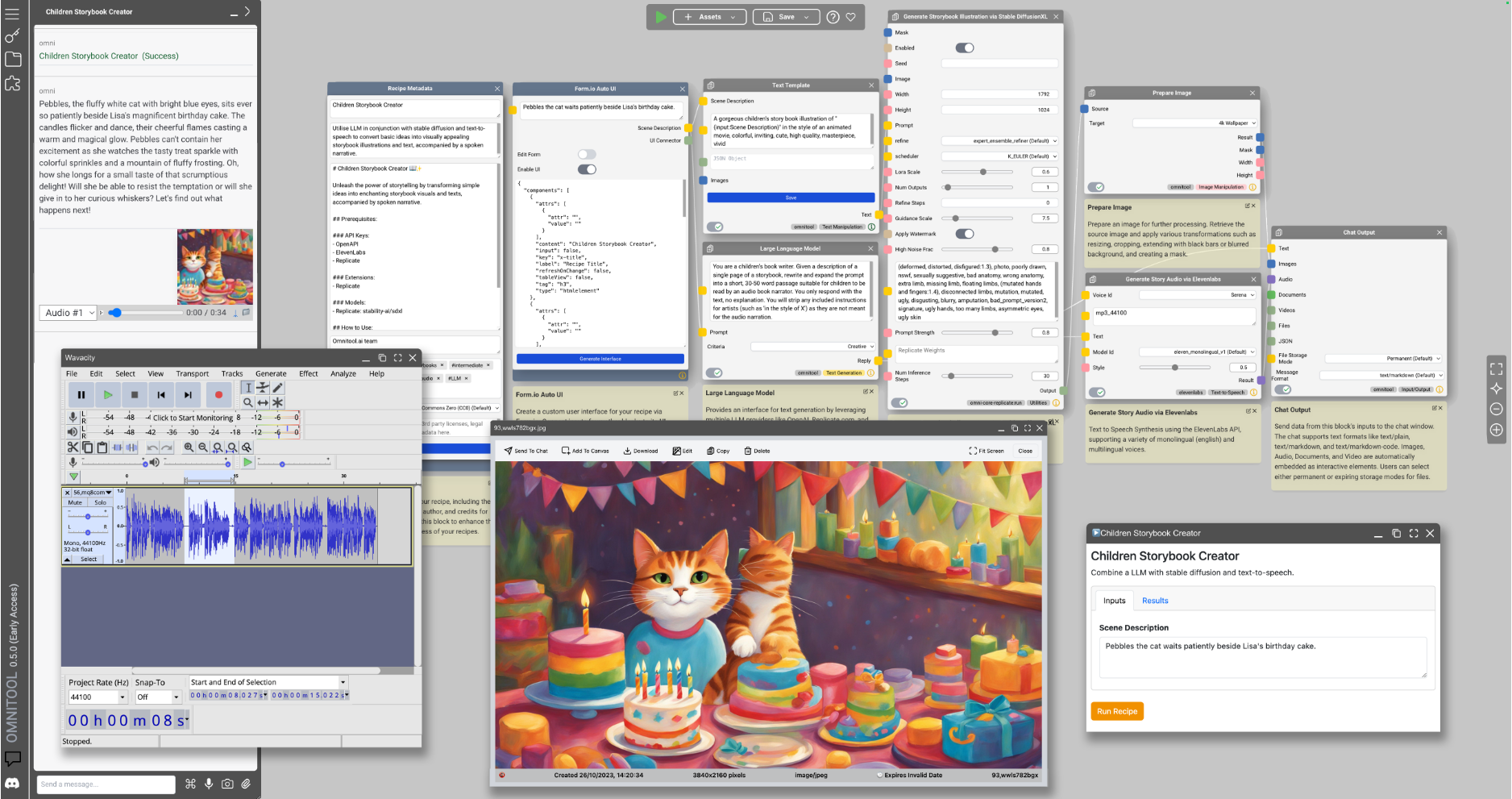

Omnitool: an AI enthusiast's toolbox for managing, connecting, and using AI models from one desktop

General Introduction

Omnitool.ai is an open-source "AI lab" that provides an extensible, browser-based desktop environment for learners, hobbyists, and anyone interested in current AI innovations. It lets users interact with the latest AI models from leading providers such as OpenAI, replicate.com, Stable Diffusion, Google, and others through a single unified interface. Omnitool.ai runs on Mac, Windows, or Linux as locally self-hosted software, and its data is stored locally. Data leaves the device only when the user chooses to call a third-party API.

Feature List

- Unified interface: Connects multiple AI models through a single interface, reducing the time spent learning and using each one.

- Local self-hosting: Runs on your own device and stores data locally, which helps with data security and privacy.

- High extensibility: Supports most OpenAPI3-based services, which can be connected and used without writing code.

- Multi-platform support: Compatible with Mac, Windows, and Linux operating systems.

- Rich extension modules: Includes a file manager, a view manager, a news feed, and many other functional modules.
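To make the OpenAPI3 point more concrete, here is a minimal sketch of what a tool can learn from an OpenAPI 3 description: every path and HTTP method in the document is a callable operation. The spec below (`EXAMPLE_SPEC`) and the helper `list_operations` are hypothetical illustrations, and this is not how Omnitool itself is implemented.

```python
from typing import Dict, List, Tuple

# HTTP methods that OpenAPI 3 allows as keys inside a path item.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}

# Hypothetical, minimal OpenAPI 3 document for an imaginary AI service.
EXAMPLE_SPEC: Dict = {
    "openapi": "3.0.3",
    "info": {"title": "Example AI service", "version": "1.0.0"},
    "paths": {
        "/models": {
            "get": {"operationId": "listModels", "summary": "List available models"}
        },
        "/generate": {
            "post": {"operationId": "generateText", "summary": "Generate text from a prompt"}
        },
    },
}


def list_operations(spec: Dict) -> List[Tuple[str, str, str]]:
    """Return (METHOD, path, operationId) for every operation in the spec."""
    operations = []
    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method.lower() in HTTP_METHODS:  # skip keys such as "parameters"
                operations.append(
                    (method.upper(), path, operation.get("operationId", ""))
                )
    return sorted(operations)


for method, path, op_id in list_operations(EXAMPLE_SPEC):
    print(f"{method:6} {path:12} {op_id}")
```

Because the description is machine-readable, a tool can present each operation as a ready-made building block, which is why such services can be connected without hand-written client code.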

Usage Guide

Installation process

- Download and installation:

  - Visit the Omnitool GitHub page.

  - Download the latest release.

  - Select the installation package that matches your operating system (Mac, Windows, or Linux).

  - Unzip the downloaded file and run the installation script (Windows users run `start.bat`; Mac and Linux users run `start.sh`).

- Configuration and startup:

  - Once the installation is complete, open a terminal or command prompt and navigate to the Omnitool installation directory.

  - Run the startup script to start the Omnitool service.

  - Open a browser and visit the local address (e.g. http://localhost:3000) to enter the Omnitool interface.
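The startup steps above can be sketched in Python: pick the script name for the current operating system, then poll the local address until the service answers. The script names come from this article; the helper names (`startup_script_name`, `service_is_up`, `wait_for_service`) are hypothetical, and the port in the usage note is only the example address given above, so adjust it to whatever your installation reports.

```python
import http.client
import platform
import time
import urllib.error
import urllib.request

# A local service should be reached directly, so ignore any configured proxy.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def startup_script_name(system: str = "") -> str:
    """Pick the startup script named in the steps above for the given OS."""
    system = system or platform.system()  # "Windows", "Darwin" (Mac) or "Linux"
    return "start.bat" if system == "Windows" else "start.sh"


def service_is_up(url: str, timeout: float = 2.0) -> bool:
    """Return True if something answers HTTP requests at `url`."""
    try:
        with _opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server answered, even if with an error status
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        return False  # refused, timed out, unreachable, or not speaking HTTP


def wait_for_service(url: str, attempts: int = 10, delay: float = 1.0) -> bool:
    """Poll `url` until it responds or the attempts run out."""
    for _ in range(attempts):
        if service_is_up(url):
            return True
        time.sleep(delay)
    return False
```

For example, after launching the script returned by `startup_script_name()`, calling `wait_for_service("http://localhost:3000")` reports whether the interface became reachable within roughly ten seconds.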

Using Omnitool

- Connecting AI models:

  - In the Omnitool interface, select "Add Model".

  - Enter the required API key and related configuration information.

  - Click "Connect"; Omnitool will connect to the model and display it.

- Using extension modules:

  - In the main screen, select "Extension Module".

  - Browse the available extension modules, such as File Manager and View Manager.

  - Click the "Install" button to install the extensions you need.

  - Once installed, the extension module appears in the main interface and can be used directly.

- Running and testing a model:

  - In the main screen, select the connected AI model.

  - Enter test data or select a predefined test case.

  - Click "Run" to view the model output.

  - Adjust the model parameters as needed and rerun to test and validate the results.
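The "Connecting AI models" steps depend on a working API key, so it can help to check the key against the provider before entering it. The sketch below assumes an OpenAI key and uses OpenAI's public model-listing endpoint with a bearer token; the helper names are hypothetical, other providers use different URLs and header schemes, and none of this reflects how Omnitool stores or sends keys.

```python
import json
import urllib.error
import urllib.request

# Assumption: an OpenAI key is being checked; other providers differ.
OPENAI_MODELS_URL = "https://api.openai.com/v1/models"


def build_models_request(api_key: str) -> urllib.request.Request:
    """Build a GET request for the provider's model list using a bearer token."""
    if not api_key.strip():
        raise ValueError("API key is empty")
    return urllib.request.Request(
        OPENAI_MODELS_URL,
        headers={"Authorization": f"Bearer {api_key.strip()}"},
        method="GET",
    )


def key_is_accepted(api_key: str, timeout: float = 10.0) -> bool:
    """Return True if the provider accepts the key, False if it answers HTTP 401."""
    request = build_models_request(api_key)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            json.load(response)  # an accepted key yields a JSON model list
            return True
    except urllib.error.HTTPError as err:
        if err.code == 401:
            return False  # the provider rejected the key
        raise  # rate limits or outages say nothing about the key itself
```

A typical call would read the key from an environment variable, for example `key_is_accepted(os.environ["OPENAI_API_KEY"])` after `import os`, so that the key never has to be typed into a script.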

Common Problems

- Unable to start the service: Check that all dependencies are installed correctly and that the port the service uses is not already occupied.

- Model connection fails: Verify that the API key and configuration information are correct, and check the network connection.

- Interface displays incorrectly: Try clearing your browser cache or opening the interface in a different browser.
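For the occupied-port case, a quick check is to try binding the port yourself. This is a minimal sketch with a hypothetical helper name; port 3000 in the usage note is only the example address used earlier in this article, not a confirmed Omnitool default.

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if this process can bind host:port, i.e. nothing else holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError:
            return False  # typically "address already in use"
        return True
```

Calling `port_is_free(3000)` before starting the service shows whether another program already holds the port. Note that ports below 1024 may also report `False` simply because binding them requires elevated privileges.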

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
