olmOCR: PDF document conversion to text, support for tables, formulas and handwritten content recognition

General Introduction

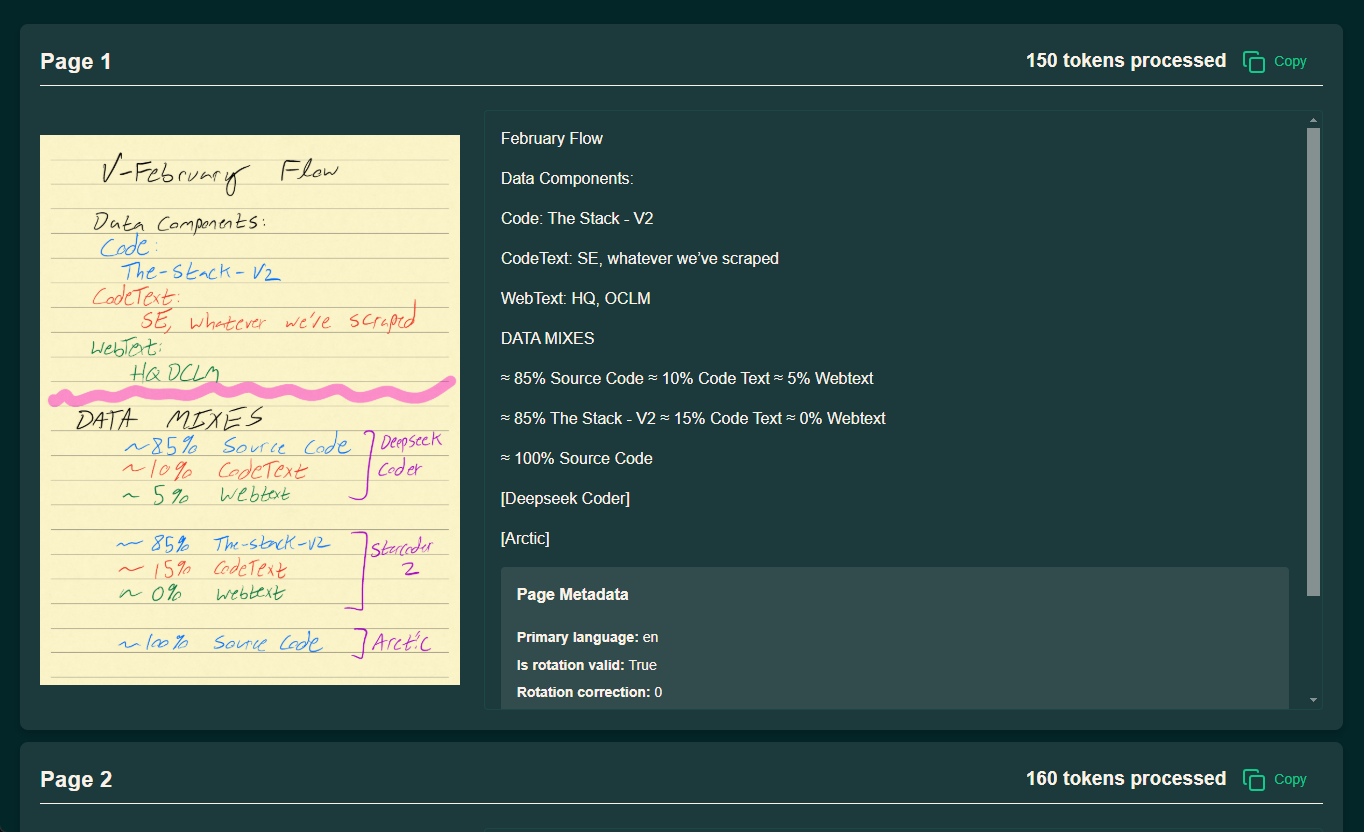

olmOCR is an open-source tool developed by the AllenNLP team at the Allen Institute for Artificial Intelligence (AI2). It converts PDF files to linearized text and is particularly suited to preparing datasets for training large language models (LLMs). It extracts text from complex PDF documents, maintains natural reading order, and can handle tables, formulas, and even handwritten content. The tool is designed to be efficient and can run on local GPUs or coordinate multi-node parallel processing through AWS S3, significantly reducing processing costs. According to official figures, it processes more than 3,000 tokens per second at about 1/32 the cost of GPT-4o, which makes it a good fit for researchers and developers who need to process large numbers of PDFs. olmOCR is released under the Apache 2.0 license; the code, model weights, and data are fully open source, and the community is encouraged to contribute improvements.

Demo address: https://olmocr.allenai.org/

Function List

- PDF Text Extraction and Linearization: Convert PDF files to Dolma-style JSONL-formatted text, preserving reading order.

- GPU-accelerated inference: Uses local GPUs and sglang for efficient document processing.

- Multi-node parallel processing: Support for coordinating multi-node tasks via AWS S3, suitable for processing millions of PDFs.

- Complex Content Recognition: Process tables, mathematical formulas and handwritten text to output structured results.

- Flexible workspace management: Support local or cloud workspace to store processing results and intermediate data.

- Open-source ecosystem: Provides complete code and documentation for easy extension and customization.

User Guide

Installation process

olmOCR requires a Python environment, and a GPU is recommended to improve processing efficiency. The detailed steps are below:

1. Environment preparation

- Installing Anaconda: If you don't have Anaconda, go to the official website to download and install it.

- Creating a Virtual Environment:

conda create -n olmocr python=3.11
conda activate olmocr

- Clone Code Repository:

git clone https://github.com/allenai/olmocr.git
cd olmocr

2. Installation of core dependencies

- Installation of basic dependencies:

pip install -e .

- Installing GPU support (optional): Install sglang and flashinfer if you need GPU acceleration:

pip install sgl-kernel==0.0.3.post1 --force-reinstall --no-deps
pip install "sglang[all]==0.4.2" --find-links https://flashinfer.ai/whl/cu124/torch2.4/flashinfer/

Note: Make sure your GPU driver and CUDA version are compatible with the above dependencies.

3. Verification of installation

- At the command line, run:

python -m olmocr.pipeline --help

If the help message is printed, the installation was successful.
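As a complement to the help check above, the following minimal sketch tests from Python whether the package can be located in the active environment. It relies only on the standard library; the helper name is_installed is hypothetical and not part of olmOCR, and a successful lookup does not guarantee that the GPU dependencies are working.

```python
import importlib.util


def is_installed(package: str = "olmocr") -> bool:
    """Return True if the top-level package can be located without importing it."""
    return importlib.util.find_spec(package) is not None


if __name__ == "__main__":
    # Run inside the activated conda environment.
    print("olmocr importable:", is_installed())
```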

Usage

olmOCR provides two main usage scenarios: local single-file processing and large-scale processing in the cloud. The following describes the operation process in detail.

Local processing of individual PDFs

- Preparing PDF files:

- Place the PDF to be processed in a local directory, e.g. ./tests/gnarly_pdfs/horribleocr.pdf

- Run processing command:

python -m olmocr.pipeline ./localworkspace --pdfs tests/gnarly_pdfs/horribleocr.pdf

- View Results:

- When processing is complete, the results are saved in JSON format in ./localworkspace

- The extracted text is stored in Dolma-style JSONL format in ./localworkspace/results

- Adjustment parameters (optional):

- --workers: Sets the number of concurrent worker threads, default 8.

- --target_longest_image_dim: Sets the maximum side length of the rendered image, default 1024 pixels.
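When the pipeline is launched from a script, the optional parameters can be assembled programmatically. The sketch below only builds the argument list for the command shown in this section; build_command is a hypothetical helper, and the default values are the ones quoted in this article rather than values read from the tool itself.

```python
import sys


def build_command(workspace: str, pdfs: str, workers: int = 8,
                  target_longest_image_dim: int = 1024) -> list[str]:
    """Assemble the argv list for `python -m olmocr.pipeline` with optional tuning flags."""
    return [
        sys.executable, "-m", "olmocr.pipeline", workspace,
        "--pdfs", pdfs,
        "--workers", str(workers),
        "--target_longest_image_dim", str(target_longest_image_dim),
    ]


if __name__ == "__main__":
    cmd = build_command("./localworkspace", "tests/gnarly_pdfs/horribleocr.pdf", workers=4)
    print(" ".join(cmd))
```

The resulting list can be passed to subprocess.run(cmd, check=True) to actually start processing.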

Sample output:

{"text": "Molmo and PixMo:\nOpen Weights and Open Data\nfor State-of-the...", "metadata": {"primary_language": "en", "is_table": false}}
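Records like the one above can be read back with a few lines of Python. This is a minimal sketch that assumes the results directory contains files with a .jsonl extension, one JSON object per line, each with a "text" field as in the sample; the exact file naming is an assumption, so adjust the glob pattern to match what you find in your workspace.

```python
import json
from pathlib import Path
from typing import Iterator


def iter_records(results_dir: str) -> Iterator[dict]:
    """Yield one dict per non-empty line of every *.jsonl file in results_dir."""
    for path in sorted(Path(results_dir).glob("*.jsonl")):
        with path.open(encoding="utf-8") as handle:
            for line in handle:
                line = line.strip()
                if line:
                    yield json.loads(line)


if __name__ == "__main__":
    for record in iter_records("./localworkspace/results"):
        # Print the first 80 characters of each extracted document.
        print(record["text"][:80])
```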

Large-scale processing in the cloud (AWS S3)

- Configuring an AWS Environment:

- Ensure that you have an AWS account and generate an access key.

- Create two S3 locations, for example s3://my_s3_bucket/pdfworkspaces/exampleworkspace (the workspace) and s3://my_s3_bucket/jakep/gnarly_pdfs/ (the input PDFs).

- Upload PDF files:

- Upload PDF files to s3://my_s3_bucket/jakep/gnarly_pdfs/

- Starting the main node task:

python -m olmocr.pipeline s3://my_s3_bucket/pdfworkspaces/exampleworkspace --pdfs s3://my_s3_bucket/jakep/gnarly_pdfs/*.pdf

- This command creates a work queue and starts processing.

- Adding worker nodes:

- Run the following on other machines:

python -m olmocr.pipeline s3://my_s3_bucket/pdfworkspaces/exampleworkspace

- Each worker node automatically pulls tasks from the queue and processes them.

- Viewing results:

- The results are stored in s3://my_s3_bucket/pdfworkspaces/exampleworkspace/results

Note: You need to configure the AWS CLI and ensure that you have sufficient permissions to access the S3 bucket.

Using Beaker (AI2 internal users)

- Add Beaker parameters:

python -m olmocr.pipeline s3://my_s3_bucket/pdfworkspaces/exampleworkspace --pdfs s3://my_s3_bucket/jakep/gnarly_pdfs/*.pdf --beaker --beaker_gpus 4

- Effect:

- After the workspace is prepared locally, 4 GPU worker nodes are automatically started in the cluster.

Featured Functions

1. Handling complex documents

- Procedure:

- Upload PDFs that contain tables or formulas.

- Run the processing command using the default model, allenai/olmOCR-7B-0225-preview.

- Check the output JSONL file to make sure that tables and formulas are parsed as text correctly.

- Tip: If recognition is poor, raise the --target_longest_image_dim parameter to increase the image resolution.
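To make the output check above less manual, the metadata attached to each record can be tallied. The sketch below assumes the fields shown in this article's sample output ("metadata" with "primary_language" and "is_table"); summarize is a hypothetical helper, and records missing those fields are counted as "unknown" and as non-tables.

```python
import json
from collections import Counter
from typing import Iterable


def summarize(lines: Iterable[str]) -> tuple[Counter, int]:
    """Count records per primary language and count records flagged as tables."""
    languages: Counter = Counter()
    tables = 0
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        meta = json.loads(line).get("metadata", {})
        languages[meta.get("primary_language", "unknown")] += 1
        tables += bool(meta.get("is_table", False))
    return languages, tables


if __name__ == "__main__":
    sample = [
        '{"text": "a", "metadata": {"primary_language": "en", "is_table": false}}',
        '{"text": "b", "metadata": {"primary_language": "en", "is_table": true}}',
    ]
    print(summarize(sample))
```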

2. High throughput processing

- Procedure:

- Configure a multi-node environment (such as AWS or Beaker).

- Increase the --workers parameter to improve single-machine parallelism.

- Monitor processing speed to confirm that throughput stays above 3,000 tokens per second.

- Advantage: Costs are as low as $190 per million pages, far less than commercial APIs.
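The per-page cost implied by the figure above is simple arithmetic. The sketch below uses the $190 per million pages quoted in this article as its default rate; actual costs depend on hardware and pricing, so treat the result as a rough estimate. The function name estimate_cost_usd is hypothetical.

```python
def estimate_cost_usd(pages: int, usd_per_million_pages: float = 190.0) -> float:
    """Scale the quoted per-million-pages rate linearly to a given page count."""
    return pages * usd_per_million_pages / 1_000_000


if __name__ == "__main__":
    # 50,000 pages at $190 per million pages
    print(estimate_cost_usd(50_000))  # 9.5
```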

3. Customized development

- Procedure:

- Modify olmocr/pipeline.py to adjust the processing logic.

- Replace the --model parameter with a custom model path.

- Submit code to GitHub and participate in community development.

Notes

- Hardware requirements: A GPU is required for local operation; an NVIDIA graphics card is recommended.

- Network requirements: Cloud processing requires a stable network connection to AWS.

- Debugging tips: Use the --stats parameter to view workspace statistics for troubleshooting.

With the steps above, you can quickly get started with olmOCR, whether you are working with single PDFs or large-scale datasets, and get the job done efficiently.

© Copyright notice

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
