Ollama Installation and Usage Tutorial

Ollama installation and deployment have come up in many earlier posts, but the information was scattered. This time we have put together a complete, one-stop guide to using Ollama on a local computer. It is aimed at beginners who want to avoid common pitfalls; if you are comfortable doing so, we also recommend reading the official Ollama documentation. What follows is a step-by-step guide to installing and using Ollama.

Why Choose Ollama for Local Installation of Large Models

Many newcomers, like me, don't realize that there are other tools for deploying large models locally, some of which perform better (see: Inventorying LLM frameworks similar to Ollama: multiple options for locally deploying large models). So why do I end up recommending Ollama?

First, of course, it is easy to install on a personal computer. More importantly, its default parameters are well optimized for running models on a single machine, and installation rarely goes wrong. For example, on the same computer, QwQ-32B may run smoothly under Ollama, while the supposedly "more powerful" llama.cpp may stall and even produce incorrect answers. The reasons are complex and beyond the scope of this guide; it is enough to know that Ollama uses llama.cpp under the hood, and thanks to its additional optimizations it can actually run more stably than llama.cpp on its own.

What kind of large model files can Ollama run?

Ollama supports model files in the following two formats, each handled by a different inference engine:

- GGUF format: inference via llama.cpp.
- safetensors format: inference via vllm.

That means:

- If a model in GGUF format is used, Ollama calls llama.cpp to perform efficient CPU/GPU inference.
- If a model in safetensors format is used, Ollama uses vllm, which usually relies on a GPU for high-performance inference.

Of course, you don't need to worry about this; just know that most of the model files you install are in GGUF format. Why emphasize GGUF?

GGUF supports quantization (e.g. Q4, Q6_K), which lets a model keep good inference performance with a very small GPU memory and RAM footprint. safetensors files, by contrast, usually hold full FP16/FP32 models, so they are much larger and consume more resources. You can learn more here: What is Model Quantization: FP32, FP16, INT8, INT4 Data Types Explained.
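To make the size difference concrete, the storage needed for the weights can be estimated as parameter count × bits per weight ÷ 8. The shell function below is a rough sketch under that assumption only: it ignores file metadata and the extra bits that K-quant formats such as Q4_K_M or Q6_K spend on scaling factors, so real GGUF files come out somewhat larger. The function name estimate_weights_gb is illustrative.

```shell
# Rough size estimate for model weights: parameters x bits-per-weight / 8.
# Ignores file metadata and the extra bits that mixed-precision quant
# formats (e.g. Q4_K_M, Q6_K) spend on scales, so real files are larger.
estimate_weights_gb() {
  params_billion=$1   # model size, e.g. 7 for a 7B model
  bits_per_weight=$2  # 32 (FP32), 16 (FP16), 8 (INT8), 4 (INT4)
  awk -v p="$params_billion" -v b="$bits_per_weight" \
    'BEGIN { printf "%.1f\n", p * b / 8 }'
}

for bits in 32 16 8 4; do
  echo "7B at ${bits}-bit: $(estimate_weights_gb 7 "$bits") GB"
done
```

For a 7B model this gives 28.0 GB at FP32, 14.0 GB at FP16, 7.0 GB at INT8 and 3.5 GB at INT4, which is why a quantized GGUF file fits on machines where the full-precision version does not.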

Ollama Minimum Configuration Requirements

Operating system: Linux (Ubuntu 18.04 or later) or macOS (macOS 11 Big Sur or later)

RAM: 8 GB to run a 3B model, 16 GB to run a 7B model, 32 GB to run a 13B model

Disk space: 12 GB for installing Ollama and a base model, plus additional space for model data depending on the models you use. On Windows, reserve at least 6 GB on the C drive.

CPU: any modern CPU with at least 4 cores is recommended; for 13B models, at least 8 cores is recommended.

GPU (optional): a GPU is not required to run Ollama, but it can improve performance, especially with larger models. If you have one, Ollama can use it to accelerate inference, including for custom models.
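The RAM guideline above can be written as a quick lookup. This is a hypothetical helper (largest_model_for_ram) that simply encodes the three thresholds listed here; on Linux it also reads total memory from /proc/meminfo. Actual requirements also depend on the quantization and context length, so treat the result as a starting point rather than a guarantee.

```shell
# Map installed RAM (in GB) to the largest model size suggested by the
# rule of thumb above: 8 GB -> 3B, 16 GB -> 7B, 32 GB -> 13B.
largest_model_for_ram() {
  ram_gb=$1
  if [ "$ram_gb" -ge 32 ]; then
    echo "13B"
  elif [ "$ram_gb" -ge 16 ]; then
    echo "7B"
  elif [ "$ram_gb" -ge 8 ]; then
    echo "3B"
  else
    echo "below the 8 GB minimum"
  fi
}

# On Linux, total RAM is listed in /proc/meminfo (value in kB).
if [ -r /proc/meminfo ]; then
  ram_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
  # Round up to whole GiB: the reported total sits slightly below the
  # nominal size of the installed memory.
  ram_gb=$(( (ram_kb + 1048575) / 1048576 ))
  echo "Detected ${ram_gb} GB RAM: up to $(largest_model_for_ram "$ram_gb") models"
fi
```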

Install Ollama

Go to: https://ollama.com/download

Choose the package for your operating system. Installation is very simple; the only thing to watch out for is that network problems can cause it to fail.

macOS installation: https://ollama.com/download/Ollama-darwin.zip

Windows installation: https://ollama.com/download/OllamaSetup.exe

Linux installation: curl -fsSL https://ollama.com/install.sh | sh

Docker image (see the official website for details):

CPU or Nvidia GPU: docker pull ollama/ollama

AMD GPU: docker pull ollama/ollama:rocm

After installation you will see the Ollama icon at the bottom right corner of the desktop. A green badge on the icon means an update is available.
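To confirm from a terminal that the command-line tool is installed, a small check like the following can be used. It is a sketch that relies only on the standard ollama --version flag; the function name check_ollama_installed is illustrative.

```shell
# Check that the ollama CLI is on PATH and print its version.
check_ollama_installed() {
  if command -v ollama >/dev/null 2>&1; then
    ollama --version
  else
    echo "ollama not found on PATH" >&2
    return 1
  fi
}

check_ollama_installed || echo "Install Ollama first: https://ollama.com/download"
```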

Ollama setup

Ollama is easy to install, but most of its settings are changed through environment variables, which is unfriendly to newcomers. All the variables are listed below for reference (no need to memorize them):

| Parameter | Description and configuration |
|---|---|
| OLLAMA_MODELS | Directory where model files are stored. The default is under the current user's directory, e.g. C:\Users\%username%\.ollama\models on Windows. On Windows it is not recommended to keep it on the C drive; put it on another drive instead (e.g. E:\ollama\models). |
| OLLAMA_HOST | Network address the Ollama service listens on; default 127.0.0.1. To let other computers (e.g. on a LAN) access Ollama, set it to 0.0.0.0. |
| OLLAMA_PORT | Port the Ollama service listens on; default 11434. If there is a port conflict, change it to another port (e.g. 8080). |
| OLLAMA_ORIGINS | Allowed origins for HTTP client requests, as a comma-separated list. For unrestricted local use it can be set to an asterisk (*). |
| OLLAMA_KEEP_ALIVE | How long a model stays loaded in memory; default 5m (5 minutes). A plain number such as 300 means 300 seconds, 0 unloads the model as soon as a request has been processed, and any negative number keeps it loaded indefinitely. Setting 24h keeps the model in memory for 24 hours and speeds up access. |
| OLLAMA_NUM_PARALLEL | Number of requests processed concurrently; default 1 (requests are processed one at a time). Adjust to your actual needs. |
| OLLAMA_MAX_QUEUE | Length of the request queue; default 512. Adjust to your actual needs; requests beyond the queue length are rejected. |
| OLLAMA_DEBUG | Enables debug logging; set it to 1 during application development to output detailed logs for troubleshooting. |
| OLLAMA_MAX_LOADED_MODELS | Maximum number of models loaded in memory at the same time; default 1 (only one model in memory). |
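On Linux or macOS you can print which values are in effect in the current shell. The sketch below falls back to the defaults quoted in the table above when a variable is unset; those defaults may differ between Ollama releases, and the OLLAMA_MODELS fallback assumes the per-user ~/.ollama/models directory (service installs may use another location). The function name show_ollama_env is hypothetical.

```shell
# Print each Ollama setting, falling back to the default quoted in the
# table when the variable is unset in this shell.
show_ollama_env() {
  echo "OLLAMA_MODELS=${OLLAMA_MODELS:-$HOME/.ollama/models}"
  echo "OLLAMA_HOST=${OLLAMA_HOST:-127.0.0.1:11434}"
  echo "OLLAMA_KEEP_ALIVE=${OLLAMA_KEEP_ALIVE:-5m}"
  echo "OLLAMA_NUM_PARALLEL=${OLLAMA_NUM_PARALLEL:-1}"
  echo "OLLAMA_MAX_QUEUE=${OLLAMA_MAX_QUEUE:-512}"
  echo "OLLAMA_MAX_LOADED_MODELS=${OLLAMA_MAX_LOADED_MODELS:-1}"
  echo "OLLAMA_DEBUG=${OLLAMA_DEBUG:-0}"
}

show_ollama_env
```

To try a setting for a single foreground run, variables can be set on the command line, for example: OLLAMA_KEEP_ALIVE=24h ollama serve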

1. Modify the download directory of large model files

On Windows, model files downloaded by Ollama are stored by default in a directory under the user's folder, usually C:\Users\<username>\.ollama\models, where <username> is the name of the currently logged-in Windows user.

For example, if the login user name is yangfan, the default storage path for the model files may be C:\Users\yangfan\.ollama\models\manifests\registry.ollama.ai, where you can find all the models downloaded through Ollama.

Note: on newer versions, the Ollama program itself is generally installed in C:\Users\<username>\AppData\Local\Programs\Ollama.

Model downloads can easily run to several gigabytes, so if your C drive is small, the first thing to do is change the download directory for model files.

1. Find the entry point for environment variables

The easiest way: press Win+R to open the Run dialog, type sysdm.cpl and press Enter to open System Properties, then select the Advanced tab and click Environment Variables.

Other methods:

1. Start -> Settings -> About -> Advanced system settings -> System Properties -> Environment Variables.

2. This PC -> right-click -> Properties -> Advanced system settings -> Environment Variables.

3. Start -> Control Panel -> System and Security -> System -> Advanced system settings -> System Properties -> Environment Variables.

4. Search box on the taskbar -> type "Environment Variables".

This opens the Environment Variables window.

2. Modify environment variables

Look for the variable OLLAMA_MODELS under System variables; if it does not exist, click New.

If OLLAMA_MODELS already exists, double-click it, or select it and click Edit.

Set the variable value to the new directory. Here I changed it from the C drive to the E drive, which has more free space.

After saving, it is best to restart the computer so that the change reliably takes effect.
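The steps above are for Windows. On Linux or macOS, a comparable effect for a server started from your own shell can be sketched as follows; the function name use_models_dir and the example path are hypothetical. A Linux install running as a systemd service does not read your shell variables; the official documentation sets them with systemctl edit ollama.service instead.

```shell
# Create a model directory and point OLLAMA_MODELS at it for the
# current shell session (affects an `ollama serve` started from here).
use_models_dir() {
  dir=$1
  mkdir -p "$dir" || return 1
  OLLAMA_MODELS=$dir
  export OLLAMA_MODELS
  echo "OLLAMA_MODELS=$OLLAMA_MODELS"
}
```

Usage, with an example path: use_models_dir /data/ollama/models && ollama serve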

2. Modify the default access address and port

Enter http://127.0.0.1:11434/ in your browser. If you see the message "Ollama is running", the service is up. There are some security risks to address here, again through environment variables.
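The same check can be scripted. This sketch assumes curl is installed and that the server answers its root URL with the text "Ollama is running", as described above; the function name ollama_is_running is illustrative.

```shell
# Succeed only if the URL (default: the local Ollama server) replies
# with "Ollama is running" within 3 seconds.
ollama_is_running() {
  url=${1:-http://127.0.0.1:11434/}
  curl -fsS --max-time 3 "$url" 2>/dev/null | grep -q "Ollama is running"
}

if ollama_is_running; then echo "Ollama is up"; else echo "Ollama is not reachable"; fi
```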

1. Modify OLLAMA_HOST

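If the variable does not exist, add it. If it is set to 0.0.0.0, which allows access from outside the machine, change it to 127.0.0.1.

2. Modify OLLAMA_PORT

If the variable does not exist, add it, and change 11434 to another port, such as 11331. Valid ports range from 1 to 65535; choosing a number above 1000 helps avoid conflicts. When entering addresses, use the ASCII colon ":" rather than a full-width one. Note: Ollama's official documentation configures the port as part of OLLAMA_HOST (for example 127.0.0.1:11331); if OLLAMA_PORT has no effect on your version, use that form instead.

Remember to restart your computer afterwards. Recommended reading on Ollama security: DeepSeek sets Ollama on fire, is your local deployment safe? Be wary of 'stolen' power!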
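When changing the address or port, it helps to derive the base URL that clients should use and to sanity-check the port number. The two functions below are hypothetical helpers: ollama_base_url assumes a value of the form host, host:port or http://host:port (IPv6 and https are not handled) and falls back to port 11434, and valid_port accepts only whole numbers from 1 to 65535.

```shell
# Turn an OLLAMA_HOST-style value into a base URL for clients.
ollama_base_url() {
  hostport=${1:-127.0.0.1:11434}
  hostport=${hostport#http://}   # drop an optional scheme
  hostport=${hostport%/}         # drop an optional trailing slash
  case $hostport in
    *:*) ;;                               # port already present
    *) hostport="$hostport:11434" ;;      # fall back to the default port
  esac
  echo "http://$hostport"
}

# Accept only a whole number in the TCP port range 1-65535.
valid_port() {
  case $1 in
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$1" -ge 1 ] && [ "$1" -le 65535 ]
}
```

For example, curl "$(ollama_base_url "$OLLAMA_HOST")/" checks whichever address the current shell is configured for.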

Installation of large models

Go to: https://ollama.com/search

Select a model and a model size, then copy the command.

Open a command-line terminal.

Paste the command and run it; the model is downloaded and installed automatically.

The model is now downloading. If it is slow, consider switching to a faster network connection.

You can also install models that Ollama's library does not offer. Most models are available as GGUF files on Hugging Face; here I use a quantized version of DeepSeek-R1 32B as an installation example.

1. install huggingface quantitative versioning model base command format

Remember the following installation command format

ollama run hf.co/{username}:{reponame}

2. Selecting the quantization version

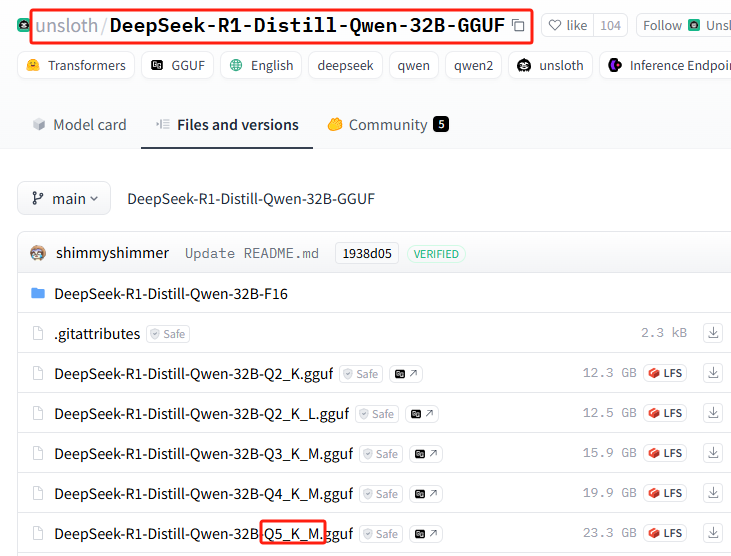

List of all quantized versions: https://huggingface.co/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF/tree/main

This installation uses: Q5_K_M

3. Splice Installation Command

{username}=unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF

{reponame}=Q5_K_M

Splice to get the full install command:ollama run hf.co/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF:Q5_K_M
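The assembly step can also be expressed as a tiny shell function, which avoids typos when switching between quantized versions. The function name hf_model_ref is illustrative; it only builds a string with the same layout as the full command above.

```shell
# Build an Ollama model reference for a GGUF repository on Hugging Face:
# hf.co/<user>/<repository>:<quantization tag>
hf_model_ref() {
  user=$1 repo=$2 quant=$3
  echo "hf.co/$user/$repo:$quant"
}

hf_model_ref unsloth DeepSeek-R1-Distill-Qwen-32B-GGUF Q5_K_M
```

Usage: ollama run "$(hf_model_ref unsloth DeepSeek-R1-Distill-Qwen-32B-GGUF Q5_K_M)"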

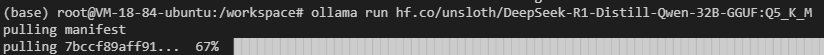

4. Execute the installation in Ollama

Run the installation command.

You may run into network failures (good luck); if so, repeat the command a few more times.
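Rather than retyping the command after each network failure, a simple retry loop can repeat it automatically. This is a generic sketch, not an Ollama feature; the helper name retry and the RETRY_DELAY variable are hypothetical. The usage example uses ollama pull, which downloads the model without opening an interactive chat, so the loop ends as soon as the download succeeds.

```shell
# Run a command up to N times, pausing RETRY_DELAY seconds (default 5)
# between attempts. Returns the last exit status if every attempt fails.
retry() {
  attempts=$1
  shift
  n=1
  while :; do
    "$@" && return 0
    status=$?
    if [ "$n" -ge "$attempts" ]; then
      return "$status"
    fi
    echo "Attempt $n failed (exit $status), retrying..." >&2
    n=$((n + 1))
    sleep "${RETRY_DELAY:-5}"
  done
}
```

Usage: retry 5 ollama pull hf.co/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF:Q5_K_M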

Still not working? Replace hf.co/ in the command with https://hf-mirror.com/ (a mirror hosted in mainland China). The complete installation command then becomes:

ollama run https://hf-mirror.com/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF:Q5_K_M

A full tutorial for this section is available: Private Deployment of DeepSeek-R1 32B without a Local GPU

Ollama Basic Commands

| Command | Description |
|---|---|
| ollama serve | Start Ollama |
| ollama create | Create a model from a Modelfile |
| ollama show | Show model information |
| ollama run | Run a model |
| ollama stop | Stop a running model |
| ollama pull | Pull a model from a registry |
| ollama push | Push a model to a registry |
| ollama list | List models |
| ollama ps | List running models |
| ollama cp | Copy a model |
| ollama rm | Remove a model |
| ollama help | Show help for any command |

| Flag | Description |
|---|---|
| -h, --help | Show help for Ollama |
| -v, --version | Show version information |
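The output of ollama list is a table with a header row (NAME, ID, SIZE, MODIFIED) followed by one row per model. When scripting the commands above, a helper like this hypothetical model_names extracts just the first column; it assumes the column layout stays as it is in current releases.

```shell
# Read `ollama list` output on stdin and print only the model names,
# skipping the header row and any blank lines.
model_names() {
  awk 'NR > 1 && NF > 0 { print $1 }'
}
```

For example, ollama list | model_names | grep '^deepseek' shows only the DeepSeek models, and each name can then be passed to ollama rm.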

In an interactive session, type """ to start a multi-line prompt, and type """ again to finish it.

To leave the interactive session, type /bye.

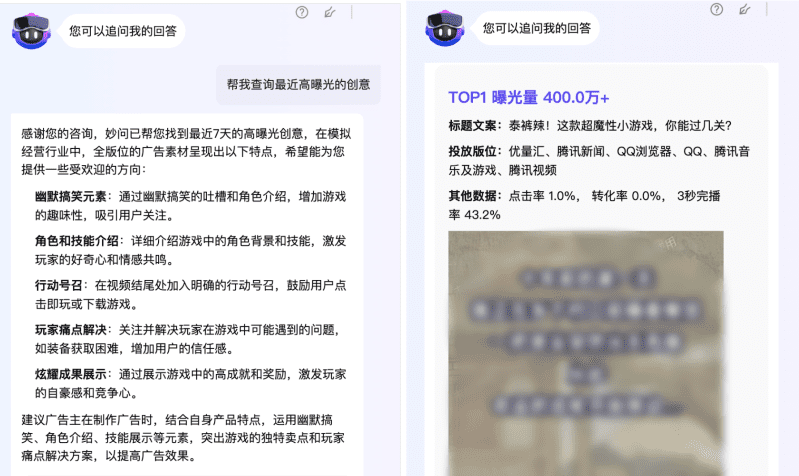

Using Ollama in Local AI Chat Tools

Most mainstream local AI chat tools already support Ollama out of the box and need no configuration, for example Page Assist and Open WebUI.

Some local AI chat tools, however, require you to enter the API address yourself: http://127.0.0.1:11434/ (adjust it if you changed the port).
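Under the hood, such tools send HTTP requests to that address. As an illustration, the sketch below builds a request body for Ollama's /api/generate endpoint, with "stream": false so the reply arrives as a single JSON object. The function name generate_payload and the model name in the example are assumptions; the escaping only covers backslashes and double quotes, so keep prompts on one line.

```shell
# Build a JSON request body for POST /api/generate.
# Escapes backslashes and double quotes only (single-line prompts).
generate_payload() {
  model=$1
  prompt=$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')
  printf '{"model":"%s","prompt":"%s","stream":false}\n' "$model" "$prompt"
}

generate_payload qwen2.5:7b "Why is the sky blue?"
```

To send it: generate_payload qwen2.5:7b "Why is the sky blue?" | curl -s http://127.0.0.1:11434/api/generate -d @- ; the generated text is in the "response" field of the reply.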

Web-based AI chat tools can also be configured, for example NextChat.

If you want to expose Ollama running on your local computer for external use, look into cpolar or ngrok on your own; that is beyond the scope of this beginner's guide.

The article looks long, but it really boils down to four simple points. Once you have learned them, you should be able to use Ollama without obstacles. Let's review:

1. Setting environment variables

2. Two ways to install a large model

3. Remembering the basic commands for running and deleting models

4. Using Ollama in different clients

© Copyright notes

Copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
