Ollama Installation and Configuration - Docker Edition

Summary

This section shows how to install and configure Ollama with Docker.

Docker is an image-based container technology that can start containers in seconds. Each container is a complete runtime environment, isolated from the other containers.

Ollama Download: https://ollama.com/download

Ollama official home page: https://ollama.com

Ollama official GitHub source code repository: https://github.com/ollama/ollama/

Official Docker installation tutorial: https://hub.docker.com/r/ollama/ollama

I. Pulling the Ollama Image

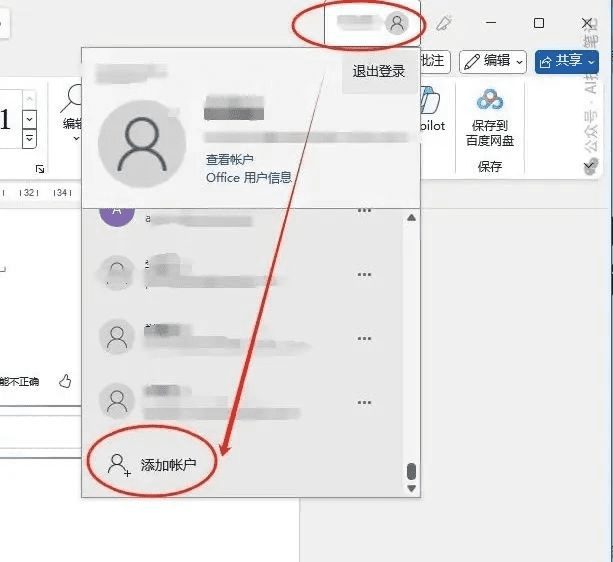

The author installed Docker on Windows beforehand 👉 see the official Docker website for download and installation.

Way 1: Search for the image and download it in the Docker graphical interface.

Way 2: The official site recommends pulling the image directly with the following command. The image does not require a GPU; it can run on the CPU alone.

# CPU or Nvidia GPU

docker pull ollama/ollama

# AMD GPU

docker pull ollama/ollama:rocm

Note: To pin a specific image version for a given runtime environment, use the following commands.

# CPU or Nvidia GPU: download Ollama 0.3.0

docker pull ollama/ollama:0.3.0

# AMD GPU: download Ollama 0.3.0

docker pull ollama/ollama:0.3.0-rocm
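
The tag pattern in the commands above (an optional version number, plus a rocm suffix for AMD GPUs) can be captured in a small shell helper. This is only a minimal sketch: the function name ollama_image_ref is hypothetical and not part of Docker or Ollama, and it assumes the tag you ask for actually exists on Docker Hub.

```shell
# Hypothetical helper: compose an Ollama image reference.
#   $1  version tag, e.g. 0.3.0 (empty = default image)
#   $2  variant: "rocm" for AMD GPUs, empty for CPU / Nvidia GPU
ollama_image_ref() {
  version="${1:-}"
  variant="${2:-}"
  if [ -z "$version" ] && [ -z "$variant" ]; then
    echo "ollama/ollama"
  elif [ -z "$version" ]; then
    echo "ollama/ollama:${variant}"
  elif [ -z "$variant" ]; then
    echo "ollama/ollama:${version}"
  else
    echo "ollama/ollama:${version}-${variant}"
  fi
}

# Usage (requires Docker to be installed and running):
#   docker pull "$(ollama_image_ref 0.3.0 rocm)"
```

Pinning a version this way keeps the image from changing underneath you when the default tag is updated.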

II. Running the Ollama Image

Way 1: Run the image from the Docker graphical interface

- Once the download is complete, find the Ollama image under Images and click the run icon to start it. In the optional settings pop-up that appears before the container starts, choose a port number (such as 8089).

- Under Containers, find the Ollama container and open it to access the Ollama interface.

- To verify that the installation is complete, enter the following in the Exec tab:

ollama -h

If the output looks like the following, the installation was successful 🎉

Large language model runner

Usage:
  ollama [flags]
  ollama [command]

Available Commands:
  serve       Start ollama
  create      Create a model from a Modelfile
  show        Show information for a model
  run         Run a model
  pull        Pull a model from a registry
  push        Push a model to a registry
  list        List models
  ps          List running models
  cp          Copy a model
  rm          Remove a model
  help        Help about any command

Flags:
  -h, --help      help for ollama
  -v, --version   Show version information

- Use Ollama from the terminal.

The following are common Ollama commands:

ollama serve    # start Ollama
ollama create   # create a model from a Modelfile
ollama show     # show information for a model
ollama run      # run a model
ollama pull     # pull a model from a registry
ollama push     # push a model to a registry
ollama list     # list models
ollama cp       # copy a model
ollama rm       # remove a model
ollama help     # get help for any command

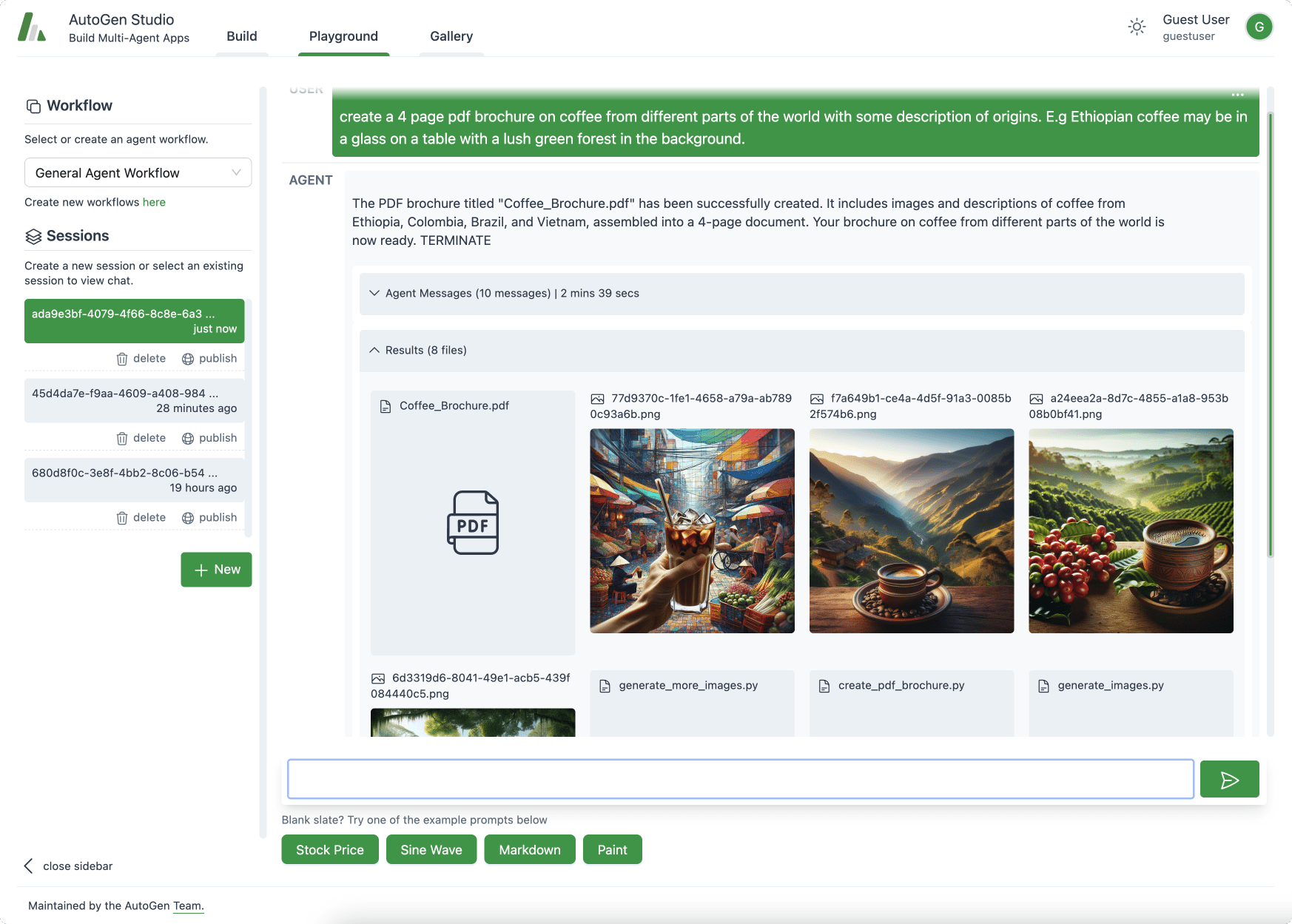

The library on ollama.com is Ollama's model library. Search for the model you want and launch it directly:

ollama run llama3

The download speed depends on your bandwidth; once the download finishes, the model is ready to use ✌ Remember to press Control + D to exit the chat.

Way 2: Start from the command line

- CPU version:

docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama
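
To choose a different host port (as in the graphical setup, where a port such as 8089 was selected), the same command can be wrapped in a few shell functions. This is a sketch under stated assumptions: the variable names HOST_PORT and CONTAINER_NAME and the function names ollama_url, start_ollama and chat_with are hypothetical and are not defined by Docker or Ollama. Only the docker run and docker exec invocations themselves are real commands.

```shell
# Assumed settings (hypothetical names): host port mapped to the
# container's port 11434, and the container name from the command above.
HOST_PORT="${HOST_PORT:-11434}"
CONTAINER_NAME="${CONTAINER_NAME:-ollama}"

# Base URL of the Ollama server as seen from the host.
ollama_url() {
  echo "http://localhost:${HOST_PORT}"
}

# Start the CPU-only container with the port and name parameterized.
start_ollama() {
  docker run -d -v ollama:/root/.ollama \
    -p "${HOST_PORT}:11434" \
    --name "${CONTAINER_NAME}" ollama/ollama
}

# Open an interactive chat with a model inside the running container.
#   $1  model name, e.g. llama3
chat_with() {
  docker exec -it "${CONTAINER_NAME}" ollama run "$1"
}

# Usage (requires Docker to be installed and running):
#   start_ollama
#   curl "$(ollama_url)"    # a running server replies with a short status message
#   chat_with llama3
```

The named volume ollama keeps downloaded models across container restarts, so removing and recreating the container does not force the models to be pulled again.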

© Copyright: this article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
