Ollama Installation and Configuration - Windows Systems

Summary

This section explains how to install and configure Ollama on a Windows system. It is divided into the following parts:

- Visit the official website to complete the download directly

- Environment variable configuration

- Run Ollama

- Verify that the installation was successful 🎉

I. Visit the official website to complete the download directly

- Visit the official homepage

Ollama Download: https://ollama.com/download

Ollama official home page: https://ollama.com

Ollama official GitHub source code repository: https://github.com/ollama/ollama/

- Wait for your browser to finish downloading OllamaSetup.exe, then double-click the file. In the window that appears, click Install and wait for the installation to complete.

- Once the installation is complete, Ollama is already running by default. Find the Ollama icon in the system tray at the bottom of the screen and right-click it to choose Quit Ollama (exit Ollama) or View logs.

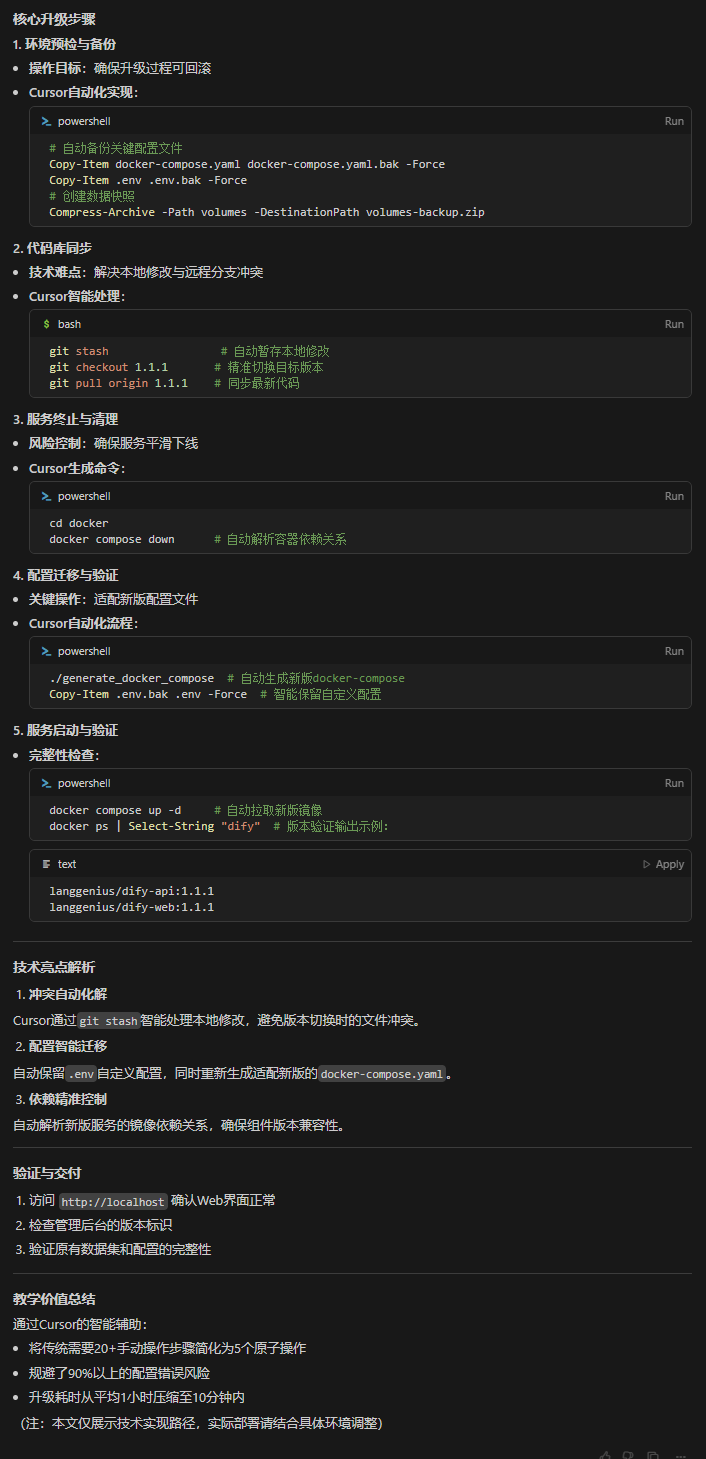

II. Configuration of environment variables

You can install Ollama with one click, like any other software. It is still recommended that you configure system environment variables to suit your needs. The environment variables that control Ollama are described below.

| Variable | Description and recommended setting |
|---|---|
| OLLAMA_MODELS | Directory where model files are stored. The default is under the current user's directory, i.e. `C:\Users\%username%\.ollama\models` on Windows. Keeping it on the C drive is not recommended; put it on another drive instead (e.g. `E:\ollama\models`). |
| OLLAMA_HOST | Network address the Ollama service listens on. The default is 127.0.0.1. To allow other computers (e.g. machines on your LAN) to access Ollama, set it to 0.0.0.0. |
| OLLAMA_PORT | Port the Ollama service listens on. The default is 11434. If there is a port conflict, change it to another port (e.g. 8080). Note that Ollama normally takes the port from OLLAMA_HOST (e.g. 0.0.0.0:8080); if OLLAMA_PORT has no effect in your version, set the port there instead. |
| OLLAMA_ORIGINS | Allowed origins for HTTP requests from clients, as a comma-separated list. For unrestricted local use, it can be set to an asterisk (*). |
| OLLAMA_KEEP_ALIVE | How long a model stays in memory after it is loaded. The default is 5m (5 minutes). A plain number is read as seconds (e.g. 300 means 300 seconds), 0 unloads the model as soon as the request has been processed, and any negative number keeps the model loaded indefinitely. 24h is recommended: the model stays in memory for 24 hours, which speeds up access. |
| OLLAMA_NUM_PARALLEL | Number of requests processed concurrently. The default is 1 (requests are processed serially, one at a time). Adjust to your actual needs. |
| OLLAMA_MAX_QUEUE | Length of the request queue. The default is 512. Adjust to your actual needs; requests beyond the queue length are rejected. |
| OLLAMA_DEBUG | Enables debug logging. During application development it can be set to 1 to output detailed logs for troubleshooting. |
| OLLAMA_MAX_LOADED_MODELS | Maximum number of models loaded into memory at the same time. The default is 1 (only one model can be in memory). |
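The OLLAMA_KEEP_ALIVE rules in the table can be made concrete with a small sketch. The Python below converts a value to seconds and describes its effect. It is an illustration only. It assumes the value is either a plain integer (seconds) or a duration built from h, m, and s units, such as 5m or 24h. Ollama itself may accept more formats than this. The helper names keep_alive_seconds and describe are hypothetical.

```python
import re

# Seconds per unit for the simplified duration format handled here (assumption).
_UNIT_SECONDS = {"h": 3600, "m": 60, "s": 1}
# One "number + unit" pair, e.g. "24h" or "1.5m".
_PAIR_RE = re.compile(r"(\d+(?:\.\d+)?)([hms])")


def keep_alive_seconds(value: str) -> float:
    """Convert an OLLAMA_KEEP_ALIVE value to seconds (simplified sketch)."""
    value = value.strip()
    # A plain integer, possibly negative, is read as seconds.
    if re.fullmatch(r"-?\d+", value):
        return float(value)
    sign = -1.0 if value.startswith("-") else 1.0
    body = value[1:] if value.startswith("-") else value
    pairs = _PAIR_RE.findall(body)
    # Every character must belong to a number+unit pair, e.g. "1h30m".
    if not pairs or "".join(n + u for n, u in pairs) != body:
        raise ValueError(f"unsupported OLLAMA_KEEP_ALIVE value: {value!r}")
    return sign * sum(float(n) * _UNIT_SECONDS[u] for n, u in pairs)


def describe(value: str) -> str:
    """Describe what a keep-alive value means for a loaded model."""
    seconds = keep_alive_seconds(value)
    if seconds == 0:
        return "unload immediately after each request"
    if seconds < 0:
        return "keep loaded indefinitely"
    return f"keep loaded for {seconds:g} seconds"
```

For example, describe("24h") returns "keep loaded for 86400 seconds", which matches the 24-hour setting recommended in the table.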

For beginners, we strongly recommend configuring OLLAMA_MODELS to change where models are stored. By default, Ollama stores models in the .ollama\models folder under your user directory on the C drive, which takes up space there. Moving the models to another partition makes your storage easier to manage.

Step 1: Locate the entry point for setting system environment variables.

Method 1: Start->Settings->System->About->Advanced system settings->System Properties->Environment Variables.

Method 2: This PC->Right-click->Properties->Advanced system settings->Environment Variables.

Method 3: Start->Control Panel->System and Security->System->Advanced system settings->System Properties->Environment Variables.

Method 4: Press Win+R to open the Run window, type sysdm.cpl and press Enter to open System Properties, select the Advanced tab, and click Environment Variables.

Step 2: Set the OLLAMA_MODELS environment variable (change the model storage location)

- In the Environment Variables window, under System variables (or User variables, depending on your needs), click New....

- In the Variable name box, type OLLAMA_MODELS (mind the case; all capitals is recommended).

- In the Variable value box, enter the path where you want to store models. For example, to store models in the ollama\models folder on drive E, enter E:\ollama\models (change the drive letter and folder path to suit your setup).

- Note: make sure the path you enter is an existing folder, or that the parent of the folder you want to create already exists. Ollama may create the models folder automatically on first run, but it is a good idea to create the ollama folder yourself first so that the path is correct.

- Click OK to close the New System Variable (or New User Variable) window.
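You can also prepare the folder from a script. The Python sketch below applies the rule from the note above: the parent folder must already exist, and only the final models folder is created. It is a minimal sketch. prepare_models_dir is a hypothetical helper, and E:\ollama\models is only the example path used in this section. It does not set the environment variable; you still do that in the Environment Variables window.

```python
from pathlib import Path


def prepare_models_dir(path: str) -> Path:
    """Create the OLLAMA_MODELS folder if needed and return it.

    The parent folder (e.g. E:\\ollama) must already exist; only the
    last path component (e.g. "models") is created here.
    """
    target = Path(path)
    if not target.parent.is_dir():
        raise FileNotFoundError(f"parent folder does not exist: {target.parent}")
    target.mkdir(exist_ok=True)  # no-op if the folder is already there
    return target
```

On Windows you would call it as prepare_models_dir(r"E:\ollama\models"). The raw string keeps the backslashes literal.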

Note: If you are not sure how to set the OLLAMA_MODELS environment variable, refer to the example below.

(Optional) Set the OLLAMA_HOST environment variable (change the listening address)

If you want other devices on your LAN to be able to access your Ollama service, configure the OLLAMA_HOST environment variable.

- In the same Environment Variables window, under System variables (or User variables), click New....

- In the Variable name box, type OLLAMA_HOST.

- In the Variable value box, type 0.0.0.0:11434 (or the port you want to use; the default port is 11434). 0.0.0.0 means listening on all network interfaces, which allows LAN access, and 11434 is Ollama's default port. Example: 0.0.0.0:11434

- Click OK to close the New System Variable (or New User Variable) window.
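An OLLAMA_HOST value combines an address with an optional port. The sketch below splits such a value into its two parts. If a part is missing, it falls back to 127.0.0.1 and 11434. It is a simplified illustration, not Ollama's own parser. parse_ollama_host is a hypothetical name, and IPv6 addresses are not handled.

```python
from typing import Optional, Tuple

DEFAULT_HOST = "127.0.0.1"
DEFAULT_PORT = 11434


def parse_ollama_host(value: Optional[str]) -> Tuple[str, int]:
    """Split a value such as "0.0.0.0:11434" into (host, port).

    Simplified sketch: IPv4 addresses or host names only, with an
    optional http:// or https:// prefix.
    """
    if not value:
        return DEFAULT_HOST, DEFAULT_PORT
    for scheme in ("http://", "https://"):
        if value.startswith(scheme):
            value = value[len(scheme):]
    host, sep, port = value.partition(":")
    # Fall back to the defaults for any part that was left out.
    return (host or DEFAULT_HOST), (int(port) if sep and port else DEFAULT_PORT)
```

parse_ollama_host("0.0.0.0:11434") gives ("0.0.0.0", 11434). A bare "0.0.0.0" keeps the default port.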

Step 3: Restart Ollama or PowerShell to make the environment variables take effect

After setting the environment variables, you need to restart the Ollama service or your Command Prompt (CMD) or PowerShell window for the new variables to take effect.

- Restart the Ollama service: if you started it with ollama serve, press Ctrl + C to stop it, then run ollama serve again.

- Restart Command Prompt/PowerShell: close all open windows and open new ones.

Step 4: Verify that the environment variables are in effect

- Reopen Command Prompt (CMD) or PowerShell.

- Verify OLLAMA_MODELS: in Command Prompt, type the following command and press Enter (in PowerShell, use echo $env:OLLAMA_MODELS instead):

echo %OLLAMA_MODELS%

Output (if you set E:\ollama\models):

E:\ollama\models
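A process reads environment variables when it starts. This is why you need a new window after changing them. The Python sketch below performs the same check as echo %OLLAMA_MODELS% and also reports whether the folder exists. Run it from a newly opened terminal. check_models_dir is a hypothetical helper name.

```python
import os
from typing import Mapping, Optional


def check_models_dir(env: Optional[Mapping[str, str]] = None) -> str:
    """Report whether OLLAMA_MODELS is set and points at an existing folder."""
    env = os.environ if env is None else env
    value = env.get("OLLAMA_MODELS")
    if not value:
        return "OLLAMA_MODELS is not set; Ollama will use its default location"
    if not os.path.isdir(value):
        return f"OLLAMA_MODELS={value} (folder does not exist yet)"
    return f"OLLAMA_MODELS={value} (folder exists)"


if __name__ == "__main__":
    # Uses the environment this process was started with.
    print(check_models_dir())
```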

III. Running Ollama

- Start it from the command line:

ollama serve

Starting Ollama this way may fail with the following error. This happens because the Windows installer sets Ollama to launch at startup by default, so an Ollama service is already listening on http://127.0.0.1:11434:

Error: listen tcp 127.0.0.1:11434: bind: Only one usage of each socket address (protocol/network address/port) is normally permitted.
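This error means another process has already bound port 11434. Before running ollama serve, you can check this by trying to open a TCP connection to the port. The sketch below assumes the default address 127.0.0.1:11434. port_in_use is a hypothetical helper. A busy port only suggests that the process holding it is Ollama; it does not prove it.

```python
import socket


def port_in_use(host: str = "127.0.0.1", port: int = 11434, timeout: float = 1.0) -> bool:
    """Return True if something is already accepting connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused or timed out: nothing is listening there.
        return False


if __name__ == "__main__":
    if port_in_use():
        print("Port 11434 is busy; an Ollama instance is probably already running.")
    else:
        print("Port 11434 is free; ollama serve should be able to bind it.")
```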

- Solution:

- Press Win+X and open Task Manager. On the Startup apps tab, disable Ollama; on the Processes tab, end the Ollama task.

- Run ollama serve again to start Ollama.

- Verify that it started successfully:

- Press Win+R, type cmd, and press Enter to open a command-line terminal.

- Enter the following command to see which process is using port 11434:

netstat -aon|findstr 11434

Output:

TCP 127.0.0.1:11434 0.0.0.0:0 LISTENING 17556

This shows that port 11434 is occupied by process 17556.
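To script this check, you can filter the netstat -aon output for sockets listening on the port. The sketch below assumes the five-column layout shown above (Proto, Local Address, Foreign Address, State, PID) and a state printed as LISTENING. listening_pids is a hypothetical name. On Windows, you could pass it the output of subprocess.run(["netstat", "-aon"], capture_output=True, text=True).stdout.

```python
from typing import Set


def listening_pids(netstat_output: str, port: int) -> Set[int]:
    """Return the PIDs that are LISTENING on `port` in `netstat -aon` output."""
    pids = set()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Expected columns: Proto, Local Address, Foreign Address, State, PID
        if len(fields) == 5 and fields[3] == "LISTENING":
            local_port = fields[1].rsplit(":", 1)[-1]  # works for [::1]:11434 too
            if local_port == str(port):
                pids.add(int(fields[4]))
    return pids
```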

- Look up that process to confirm that Ollama has started:

tasklist|findstr "17556"

The output is as follows:

ollama.exe 17556 Console 1 31,856 K
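You can also search the tasklist output for the PID found above. The sketch below assumes the default table format shown and an image name without spaces, which holds for ollama.exe. image_name_for_pid is a hypothetical helper.

```python
from typing import Optional


def image_name_for_pid(tasklist_output: str, pid: int) -> Optional[str]:
    """Find the image (executable) name for `pid` in plain `tasklist` output."""
    for line in tasklist_output.splitlines():
        fields = line.split()
        # Columns: Image Name, PID, Session Name, Session#, Mem Usage
        # (assumes the image name itself contains no spaces)
        if len(fields) >= 2 and fields[1] == str(pid):
            return fields[0]
    return None
```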

IV. Verify that the installation was successful 🎉

- In a terminal, enter:

ollama -h

If you see output like the following, the installation was successful 🎉

Large language model runner

Usage:

ollama [flags]

ollama [command]

Available Commands:

serve Start ollama

create Create a model from a Modelfile

show Show information for a model

run Run a model

pull Pull a model from a registry

push Push a model to a registry

list List models

ps List running models

cp Copy a model

rm Remove a model

help Help about any command

Flags:

-h, --help help for ollama

-v, --version Show version information
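You can run the same installation check from Python. The sketch looks up ollama on the PATH, runs ollama -h, and searches the output for the first line of the help text shown above. ollama_installed is a hypothetical name. The banner text it looks for may change between Ollama versions.

```python
import shutil
import subprocess


def ollama_installed(executable: str = "ollama") -> bool:
    """Return True if `executable -h` runs and prints Ollama's help banner."""
    path = shutil.which(executable)
    if path is None:
        return False  # not found on PATH
    result = subprocess.run([path, "-h"], capture_output=True, text=True)
    # Check both streams in case the help text is not printed to stdout.
    return "Large language model runner" in (result.stdout + result.stderr)


if __name__ == "__main__":
    print("Ollama installed:", ollama_installed())
```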

- Use Ollama from the terminal.

The model library at ollama.com/library lists the available models. Search for the model you want and launch it straight away, for example:

ollama run llama3

The first run downloads the model, so how long it takes depends on your bandwidth; after that the model is ready to use ✌ Press Ctrl + D to exit the chat.
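Besides the interactive chat, the running Ollama service accepts HTTP requests on port 11434. The sketch below sends a prompt to the /api/generate endpoint with streaming turned off. It uses only the Python standard library. It assumes the default address 127.0.0.1:11434 and that llama3 has already been pulled. build_generate_request and generate are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # assumed default listen address


def build_generate_request(prompt: str, model: str = "llama3",
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to a running Ollama service and return the reply text."""
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=300) as resp:
        # With streaming off, the reply arrives as one JSON object.
        return json.loads(resp.read())["response"]
```

With the service running, print(generate("Why is the sky blue?")) prints the model's full answer at once instead of streaming it token by token.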

V. How to stop Ollama from launching at startup

(As of 2024-08-19) Ollama launches automatically at startup on Windows by default. If you don't need this, you can disable it as follows.

Open Task Manager, click Startup apps, find ollama.exe, right-click it, and select Disable.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
