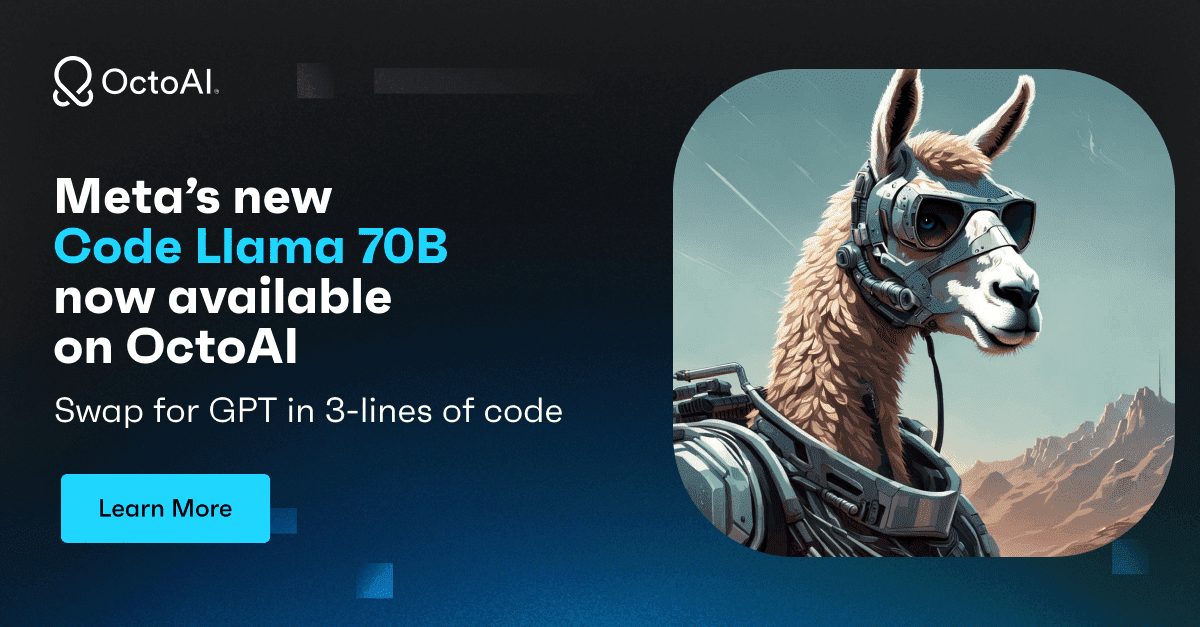

New Product: The Power of OctoAI: Seamless Integration of Different Open Source Language Models

Today we delve into the world of open-source large language models (LLMs) and look at an innovative new contender: Code Llama 70B, running on OctoAI.

Developers often find it difficult to effectively integrate advanced AI models like Meta's Code Llama 70B into their workflow.

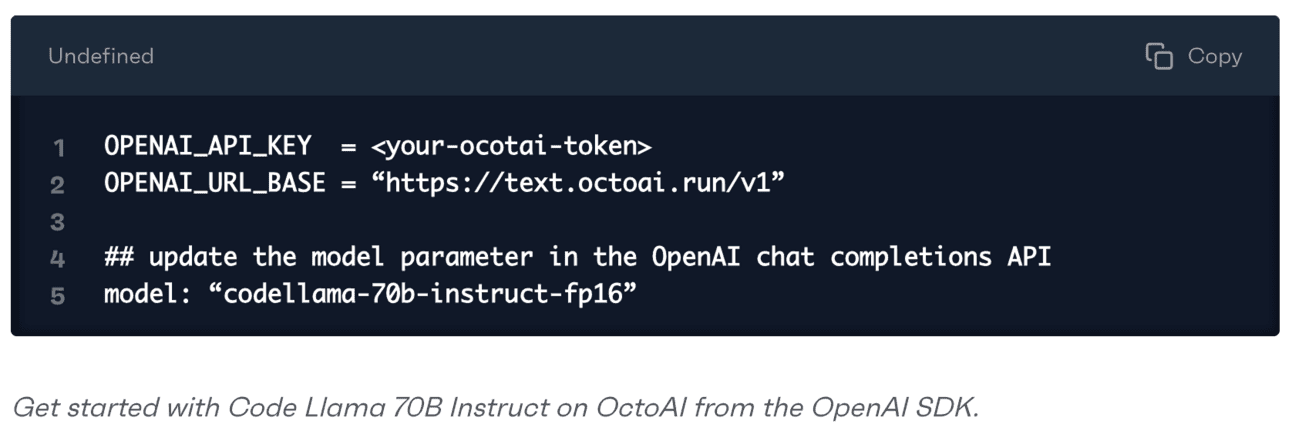

OctoAI simplifies this process by enabling seamless integration of Code Llama 70B with just three lines of code.

In this deep dive, we'll cover how you can utilize this powerful AI model on OctoAI. Let's get started: 👇

You may remember Mixtral 8x7B, the open-source language model that caused a sensation in the industry last month.

Now comes the debut of Code Llama 70B, which may provide faster and more cost-effective performance on OctoAI than popular closed-source models such as GPT-3.5-Turbo and GPT-4, while maintaining its open-source nature.

For users who currently rely on closed-source language models such as OpenAI's, moving to open-source alternatives may seem a bit tricky.

Fortunately, OctoAI provides a user-friendly solution that seamlessly integrates different language models including Mixtral and Code Llama with just a few simple lines of code.
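
To make "a few simple lines of code" concrete, here is a minimal sketch of what such an integration might look like, assuming the provider exposes an OpenAI-style chat completions endpoint. The base URL, the model identifier, and the helper names `build_chat_request` and `send` are placeholders chosen for illustration, not values confirmed by OctoAI; check the provider's documentation for the real ones.

```python
import json
import urllib.request

# Placeholder values for illustration only (assumptions, not confirmed endpoints).
BASE_URL = "https://llm-provider.example/v1"
MODEL = "codellama-70b-instruct"


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL, api_key="YOUR_API_KEY"):
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the first reply's text (needs network and a valid key)."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

Under these assumptions, moving from one provider or model to another comes down to changing `base_url`, `model`, and the API key, while the rest of the application code stays the same.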

With this integration, you can easily test and compare different LLMs to see which one fits your needs better.

This approach lets you make an informed choice about which AI tools to add to your workflow.

Want to try Mixtral 8x7B or the new Code Llama 70B on your particular use case?

On OctoAI, you can try out multiple models at the same time and evaluate their quality and fit in a matter of minutes. It takes care of all the complex behind-the-scenes machine learning work for you, so you can focus more on building.
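
Trying several models side by side can be sketched as follows. This is an illustrative harness, not OctoAI's own tooling: `compare_models` and `fake_ask` are hypothetical names, the model identifiers are placeholders, and `fake_ask` stands in for a real API call so the example runs offline.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def compare_models(prompt, models, ask):
    """Call ask(model, prompt) for every model in parallel.

    Returns {model: (answer, seconds)} in the same order as `models`.
    """
    def timed(model):
        start = time.perf_counter()
        answer = ask(model, prompt)
        return model, (answer, time.perf_counter() - start)

    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        return dict(pool.map(timed, models))


def fake_ask(model, prompt):
    """Stand-in for a real API call; replace with your provider's client."""
    return f"[{model}] reply to: {prompt}"


if __name__ == "__main__":
    results = compare_models(
        "Write a function that reverses a string.",
        ["mixtral-8x7b-instruct", "codellama-70b-instruct"],  # placeholder ids
        fake_ask,
    )
    for model, (answer, seconds) in results.items():
        print(f"{model}: {seconds:.4f}s  {answer}")
```

Replacing `fake_ask` with a function that calls a real endpoint would give you each model's answer and latency for the same prompt, which is a reasonable starting point for judging quality and fit.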

Thanks to the OctoAI team's expertise in machine learning optimization, any model you choose will run faster, more efficiently, and at a lower cost than if you hosted it yourself. For example:

5-10 times faster response time

Up to 3x cost savings through increased efficiency

Easy scaling from 1 to over 100k queries per day

OctoAI gives you more options. Instead of being tied to a single vendor, you have the freedom to mix and match components such as data processors, models, and fine-tuning techniques to create a solution that's just for you.

Staying compatible with rapid advances in open-source language models

The rapid evolution of open source language models means that the only constant now is change.

By limiting yourself to a closed, rigid solution, you may miss out on new features that are developed on a regular basis.

OctoAI's flexible machine learning architecture ensures that your applications are future-proof from the start. As better open source models emerge, you can easily switch to them, keeping you competitive.

It handles all the complex model integration, optimization, and infrastructure work and exposes a simple API, so you can focus on building great products.

For example, let's say your team is using Code Llama 70B for code search and code snippet generation. Six months later, when Meta or another model developer releases a more efficient model, you can swap it in by changing just a few lines of code.

Upgrade instantly and take advantage of quality improvements without spending months on reengineering.
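
One way to keep such an upgrade down to a small change is to hold the model choice in a single configuration object. The sketch below assumes an OpenAI-style request body; `LLMConfig`, `request_body`, the base URL, and both model identifiers are hypothetical and used only for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LLMConfig:
    base_url: str  # placeholder endpoint, not a confirmed URL
    model: str     # placeholder model identifier


def request_body(config, prompt):
    """Build an OpenAI-style chat request body from the active configuration."""
    return {
        "model": config.model,
        "messages": [{"role": "user", "content": prompt}],
    }


# Today's setup.
current = LLMConfig(
    base_url="https://llm-provider.example/v1",
    model="codellama-70b-instruct",
)

# Later: the upgrade is a one-field change; all calling code stays untouched.
upgraded = replace(current, model="hypothetical-newer-code-model")
```

Because every request is built from the configuration, the rest of the application does not need to know which model is active.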

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
