o3 shows in practice that general-purpose reasoning models outperform specialized programming models in competitive programming

Original paper: Competitive Programming with Large Reasoning Models. A brief summary follows for ease of reading.

1. Introduction

1.1 Background and Motivation

In recent years, large language models (LLMs) have made significant progress in code generation and complex reasoning. Programming competitions such as the International Olympiad in Informatics (IOI), and contests on platforms like CodeForces, are ideal testbeds for evaluating the reasoning capabilities of AI systems because they demand rigorous logical thinking and problem-solving skills.

1.2 Objectives of the study

This study aims to explore the following questions:

- Performance Comparison of General-Purpose vs. Domain-Specific Reasoning Models: compare general-purpose reasoning models (e.g., OpenAI's o1 and o3) with a domain-specific model designed specifically for the IOI (o1-ioi).

- The Role of Reinforcement Learning in Enhancing Reasoning: evaluate how large reasoning models trained with reinforcement learning (RL) perform on complex programming tasks.

- Emergence of Autonomous Reasoning Strategies: observe whether the model can develop effective reasoning strategies on its own, without human intervention.

2. Methodology

2.1 Introduction to the models

2.1.1 OpenAI o1

OpenAI o1 is a large language model trained with reinforcement learning, and it can generate and execute code. It works through problems step by step by producing an internal chain of thought, which RL training refines.

2.1.2 OpenAI o1-ioi

o1-ioi is an improved version of o1, fine-tuned specifically for the IOI. At test time it uses a strategy similar to that of the AlphaCode system: it generates a large number of candidate solutions for each subtask, then selects which ones to submit through clustering and reranking.

2.1.3 OpenAI o3

o3 is the successor of o1 and further improves the model's reasoning capability. Unlike o1-ioi, o3 does not rely on hand-designed test-time strategies; instead, it develops sophisticated reasoning strategies on its own through end-to-end RL training.

2.2 Assessment methodology

2.2.1 CodeForces simulation competitions

We simulated the CodeForces competition environment, using the full test suite and imposing appropriate time and memory constraints to evaluate the model's performance.

2.2.2 IOI 2024 Live Competition

o1-ioi participated in the 2024 IOI competition, competing under the same conditions as human competitors.

2.2.3 Software engineering task assessment

We also evaluated the performance of the model on the HackerRank Astra and SWE-bench Verified datasets to test its capabilities in real software development tasks.

3. Findings

3.1 General-purpose vs. domain-specific models

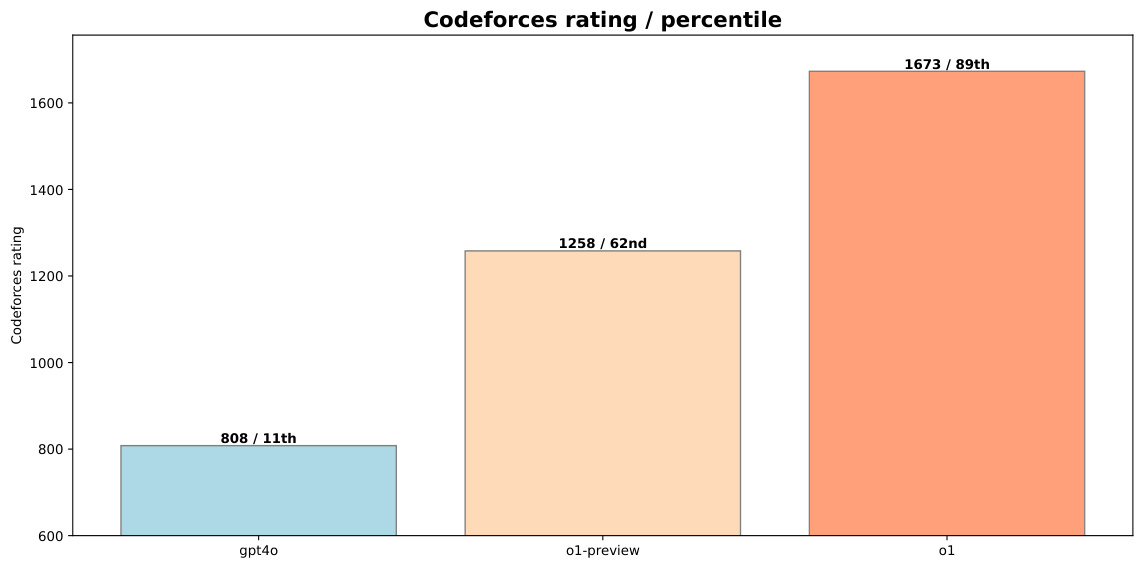

- o1-ioi at IOI 2024: in the live 2024 IOI competition, o1-ioi scored 213 points, placing in the 49th percentile. After the submission limit was relaxed, its score rose to 362.14 points, above the gold medal threshold.

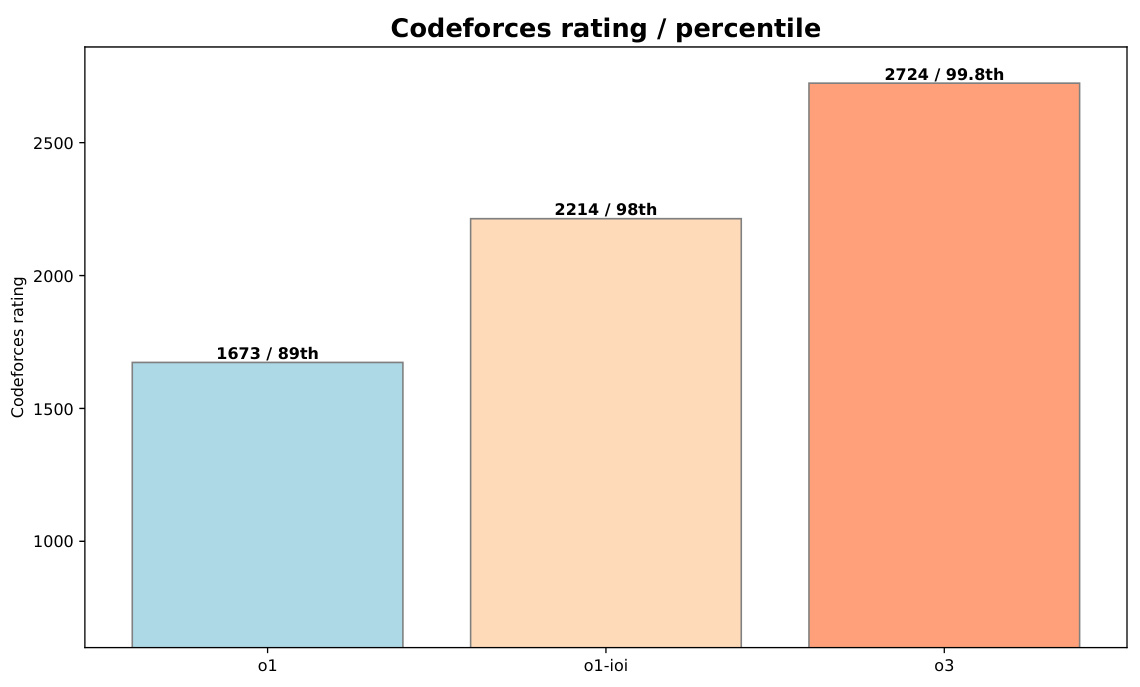

- Strong results for o3: on the CodeForces benchmark, o3 achieved a rating of 2724 (99.8th percentile), well above o1-ioi (2214, 98th percentile). On the IOI 2024 benchmark, o3 scored 395.64 points with only 50 submissions allowed, exceeding the gold medal threshold.

Figure 1: CodeForces performance of o1-preview and o1 compared with GPT-4o

3.2 The role of reinforcement learning

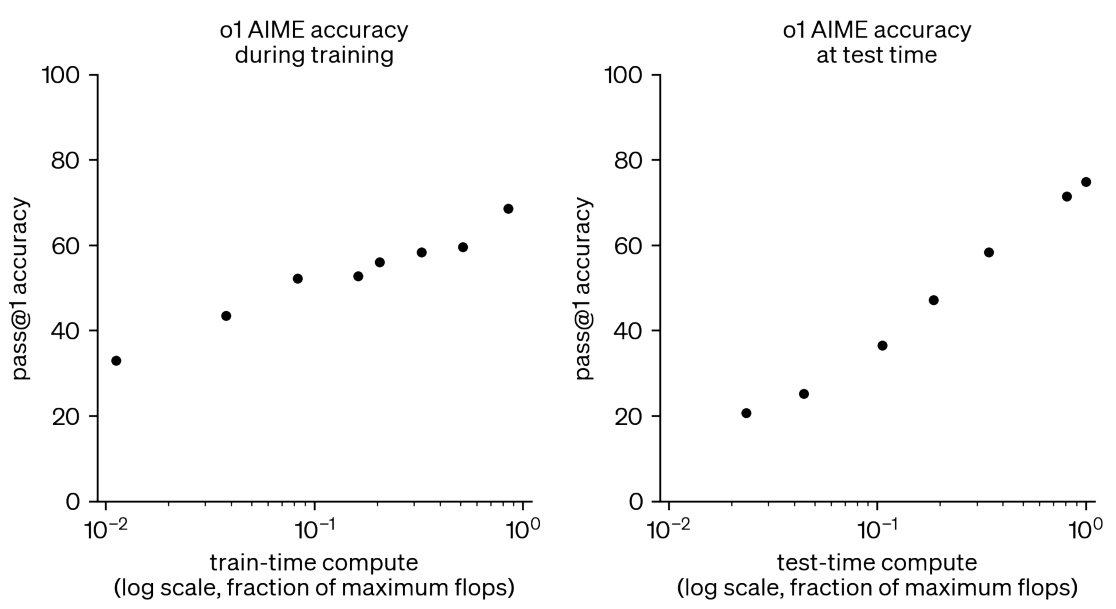

- More compute for RL training and at test time: as Figure 2 shows, increasing compute both during RL training and at test time substantially improves the model's performance on competitive math tasks.

Figure 2: Additional RL training and test-time computation improves performance on competitive math tasks

3.3 Emergence of autonomous reasoning strategies

- Autonomous reasoning strategies in o3: at test time, o3 produces longer and more deliberate chains of thought. For example, on problems where verification matters, it writes a simple brute-force solution and cross-checks the output of its optimized algorithm against it to catch potential errors.

Figure 3: o3 testing its own solution, which partially reproduces the hand-designed test-time strategy that o1-ioi used at IOI 2024

4. Conclusion

4.1 Main findings

- Superiority of general-purpose models: while domain-specific models such as o1-ioi perform well on their target tasks, general-purpose models such as o3, trained with large-scale RL, surpass those results without relying on hand-designed test-time heuristics.

- Effectiveness of RL training: scaling up RL training and test-time compute consistently improves the model's performance, bringing it close to that of the world's top human competitors.

- Autonomous development of complex strategies: o3 shows that a model can develop sophisticated reasoning strategies on its own, removing the need for hand-designed test-time strategies.

4.2 Future prospects

The results of this study show that large-scale RL training provides a robust path to state-of-the-art AI in reasoning domains such as competitive programming. Large reasoning models are expected to unlock many new applications in fields such as science, coding, and mathematics.

5. Examples

5.1 Examples of solutions in the IOI 2024 competition

5.1.1 Nile problem

o1-ioi scored full marks on the Nile problem. Its solution begins as follows:

```cpp
#include "nile.h"
#include <bits/stdc++.h>
using namespace std;
// ... (code omitted; see Appendix C.1)
```

5.1.2 Message problem

o1-ioi scored 79.64 points on the Message problem. Its solution begins as follows:

```cpp
#include "message.h"
#include <bits/stdc++.h>
using namespace std;
// ... (code omitted; see Appendix C.2)
```

5.2 Examples of solutions in software engineering tasks

5.2.1 HackerRank Astra dataset

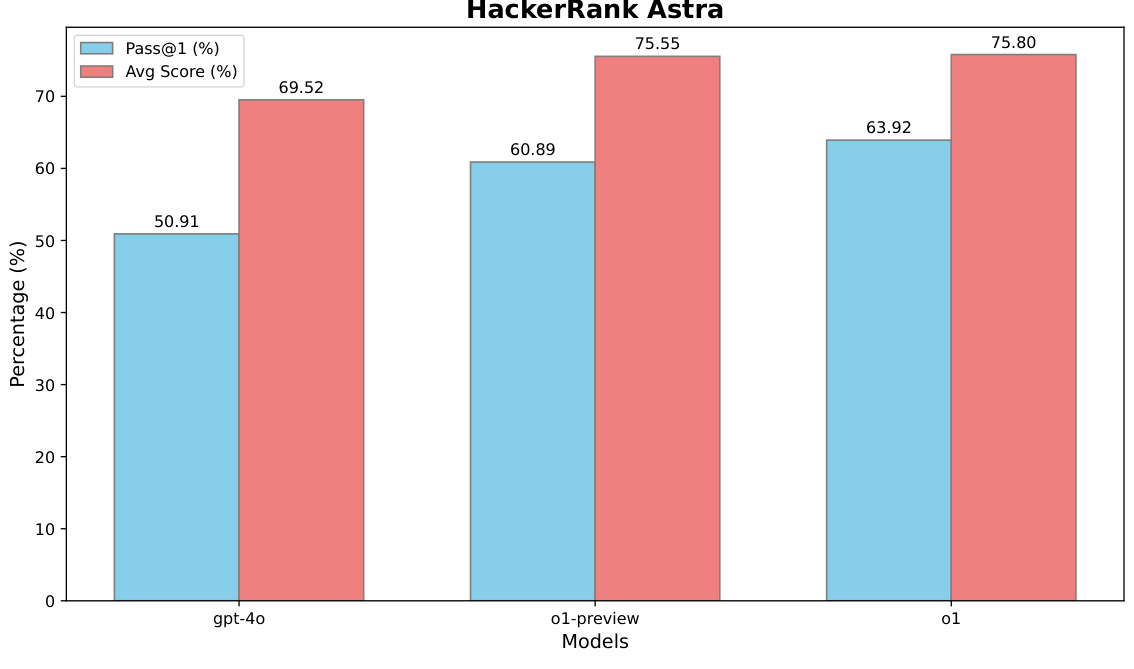

o1 on the HackerRank Astra dataset:

Figure 4: Performance of o1 on the HackerRank Astra dataset

5.2.2 SWE-bench Verified dataset

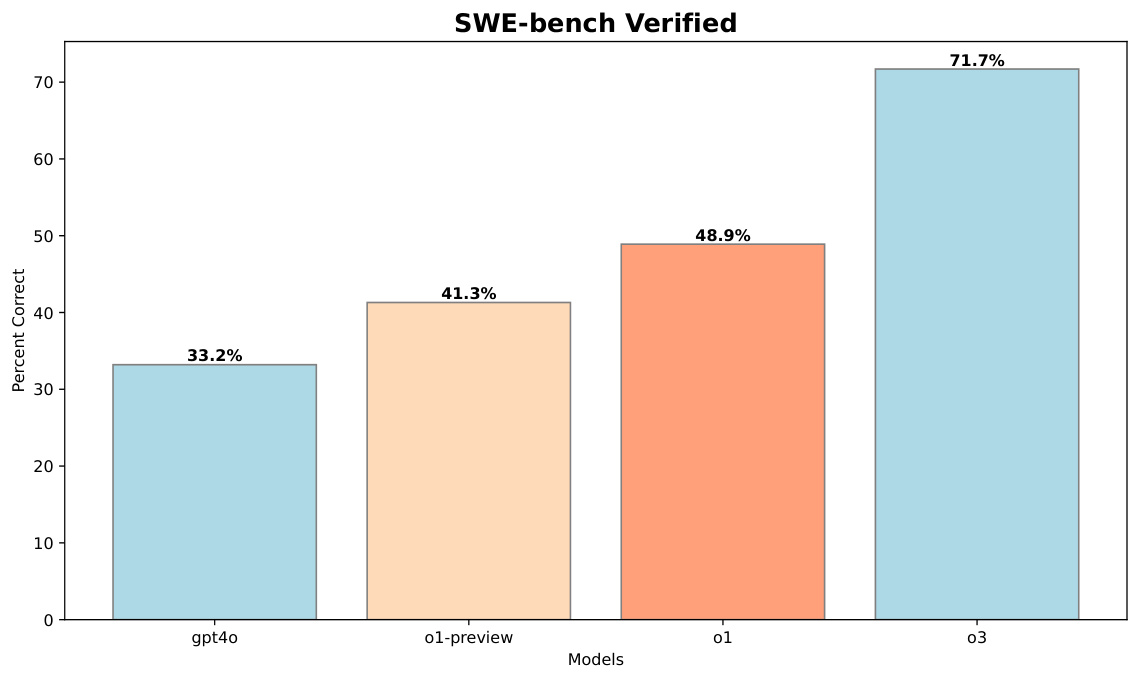

o3 on the SWE-bench Verified dataset:

Figure 5: Performance of o3 on SWE-bench Verified dataset

© Copyright notice

Article copyright © AI Sharing Circle. All rights reserved; please do not reproduce without permission.
