o3-mini Crushes DeepSeek R1: A Python Program That Generated Nearly 4 Million Views

After DeepSeek dominated the AI headlines for ten days, OpenAI finally could not sit still and launched a new reasoning model series, o3-mini, which not only opens up reasoning models to free users for the first time, but also cuts the cost by a factor of 15 compared with the previous o1 series.

OpenAI also claims that this is the newest and most cost-effective model in its family of reasoning models.

Almost as soon as it launched, some users could not wait to compare it with DeepSeek R1, the Chinese model that has swept the entire large-model community.

Some time ago, the AI community became hooked on pitting DeepSeek R1 against other (reasoning) models on this task: "Write a Python script that makes a ball bounce inside a certain shape. Make the shape rotate slowly and make sure the ball stays inside the shape."

This bouncing-ball simulation is a classic programming challenge. At its core it is a collision detection problem: the program has to recognize when two objects (e.g., the ball and a side of the shape) collide. A poorly written algorithm produces obvious physics errors.
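As a rough illustration of the collision check described above, here is a minimal Python sketch that tests a circular ball against one straight wall segment. The function names `closest_point_on_segment` and `ball_hits_wall` are hypothetical and are not taken from any of the generated programs; the approach (closest point on a segment, then a distance comparison) is one common way to do this, not necessarily what either model produced.

```python
import math

def closest_point_on_segment(p, a, b):
    """Return the point on segment a-b that is closest to point p (2D tuples)."""
    ax, ay = a
    abx, aby = b[0] - ax, b[1] - ay
    length_sq = abx * abx + aby * aby
    if length_sq == 0.0:
        return (float(ax), float(ay))  # degenerate segment: a and b coincide
    # Project p onto the infinite line, then clamp to the segment ends.
    t = ((p[0] - ax) * abx + (p[1] - ay) * aby) / length_sq
    t = max(0.0, min(1.0, t))
    return (ax + t * abx, ay + t * aby)

def ball_hits_wall(center, radius, a, b):
    """True when a ball of the given radius touches or overlaps segment a-b."""
    cx, cy = closest_point_on_segment(center, a, b)
    return math.hypot(center[0] - cx, center[1] - cy) <= radius
```

A simulation would run this check against every side of the shape on each frame. Skipping the clamp step, or comparing against the ball's center instead of its radius, is the kind of mistake that lets a ball visibly sink into or escape through a wall.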

While DeepSeek R1 topped trending searches in China and abroad, and American cloud computing platforms such as Microsoft, NVIDIA, and Amazon scrambled to offer R1, it also crushed OpenAI o1 pro on this task.

Compared with the results generated by Claude 3.5 Sonnet and Google's Gemini 1.5 Pro, DeepSeek's open-source model is indeed more than a notch above. However, after o3-mini went live, the tables seemed to turn overnight, with posts like this one claiming that OpenAI o3-mini crushed DeepSeek R1. The post has now drawn nearly 4 million views.

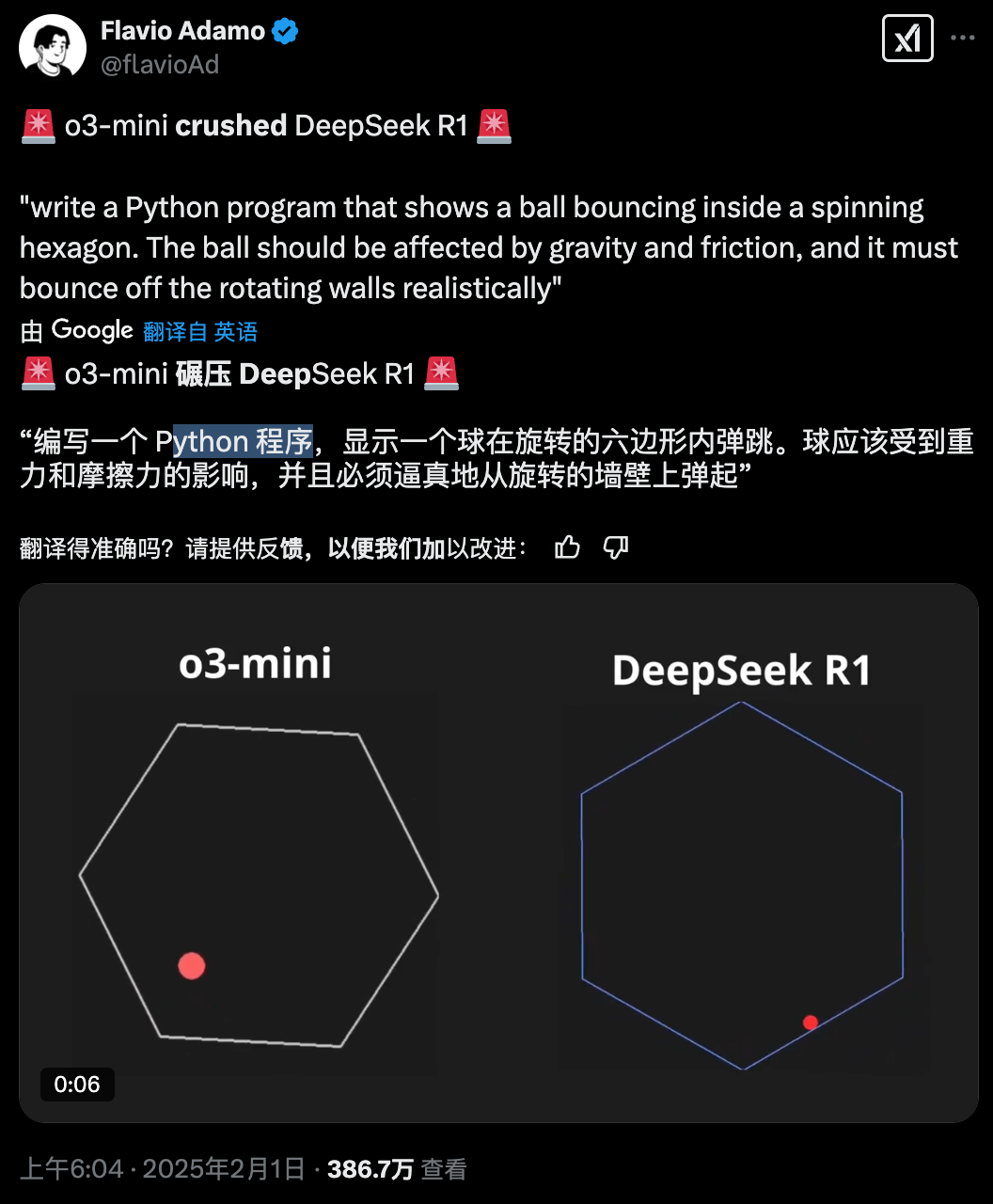

The developer used the prompt: "write a Python program that shows a ball bouncing inside a spinning hexagon. The ball should be affected by gravity and friction, and it must bounce off the rotating walls realistically".

In other words, o3-mini and DeepSeek R1 were each asked to write a Python program in which a ball bounces inside a rotating hexagon while obeying gravity and friction.

Judging by the resulting animations, o3-mini renders the collisions and bounces much more convincingly. As for gravity and friction, the ball in the DeepSeek R1 version seems to have Newton rolling in his grave: it does not appear to be governed by gravity at all.
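To make the three physical requirements in that prompt concrete (gravity, friction, and a realistic bounce off a rotating wall), here is a minimal Python sketch. It is an illustration under stated assumptions, not the code either model generated: the constants, the function names `integrate` and `bounce`, and the simple drag and friction models are all hypothetical choices, and the shape is assumed to rotate about the origin.

```python
# All constants are illustrative assumptions, not values from the original posts.
GRAVITY = 9.81        # downward acceleration, with the y axis pointing up
DRAG = 0.1            # linear air-drag coefficient per second
RESTITUTION = 0.9     # fraction of normal speed kept after a bounce
WALL_FRICTION = 0.2   # fraction of tangential speed lost on contact

def integrate(pos, vel, dt):
    """Semi-implicit Euler step: update velocity first, then position."""
    damping = 1.0 - DRAG * dt
    vx = vel[0] * damping
    vy = (vel[1] - GRAVITY * dt) * damping
    return (pos[0] + vx * dt, pos[1] + vy * dt), (vx, vy)

def bounce(vel, contact, normal, omega):
    """Reflect vel off a wall that rotates about the origin at omega rad/s.

    contact is the contact point; normal is a unit vector pointing into
    the shape. The reflection is done in the wall's moving frame.
    """
    # Velocity of the wall at the contact point (omega cross r in 2D).
    wall_v = (-omega * contact[1], omega * contact[0])
    rx, ry = vel[0] - wall_v[0], vel[1] - wall_v[1]
    vn = rx * normal[0] + ry * normal[1]
    if vn >= 0.0:
        return vel  # ball is already moving away from the wall
    # Split the relative velocity into normal and tangential parts.
    tx, ty = rx - vn * normal[0], ry - vn * normal[1]
    keep = 1.0 - WALL_FRICTION
    rx = -RESTITUTION * vn * normal[0] + keep * tx
    ry = -RESTITUTION * vn * normal[1] + keep * ty
    return (rx + wall_v[0], ry + wall_v[1])
```

Working in the wall's frame is what lets a spinning wall push the ball sideways. A program that leaves out the `GRAVITY` term in the integration step, or reflects against a stationary wall, would produce the floaty motion described above.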

This is not an isolated case. @hyperbolic_labs co-founder Yuchen Jin had noticed the same thing earlier, after giving DeepSeek R1 and o3-mini the same prompt: "write a Python script of a ball bouncing inside a tesseract".

Each vertex of a four-dimensional hypercube (a tesseract) meets four edges, and each edge is shared by three of its eight cubic cells. Geometry in four dimensions is beyond human intuition, so from descriptions like these alone it may be hard to picture what a tesseract looks like.
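One way to get a handle on this structure is to build it in code. The Python sketch below generates the vertices and edges of a tesseract and projects a vertex into 3D for drawing. The function names and the `viewer_w` parameter are hypothetical, and the simple perspective projection along the fourth axis is an assumption about how such a script might visualize the shape, not what either model actually wrote.

```python
from itertools import combinations, product

def tesseract():
    """Return the 16 vertices and 32 edges of a tesseract centered at the origin."""
    vertices = list(product((-1, 1), repeat=4))
    # Two vertices share an edge when they differ in exactly one coordinate.
    edges = [
        (a, b)
        for a, b in combinations(vertices, 2)
        if sum(x != y for x, y in zip(a, b)) == 1
    ]
    return vertices, edges

def project_to_3d(vertex, viewer_w=3.0):
    """Perspective-project a 4D point into 3D along the w axis.

    viewer_w is an assumed viewer distance on the w axis; it must be
    larger than any w coordinate being projected.
    """
    x, y, z, w = vertex
    scale = viewer_w / (viewer_w - w)
    return (x * scale, y * scale, z * scale)

if __name__ == "__main__":
    verts, edges = tesseract()
    print(len(verts), "vertices,", len(edges), "edges")
```

Projected this way, the tesseract appears as the familiar small cube nested inside a larger cube, with the eight corners joined by connecting edges.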

Not only does o3-mini render a stable geometry, but its ball also bounces through four dimensions along a livelier trajectory, with a real sense of impact as it hits the faces of the cube.

DeepSeek R1, by contrast, does not seem to grasp the shape of the tesseract well enough. The trajectory of its ball also looks a bit odd, with an "erratic" feel.

According to Yuchen Jin, he tried many times, and every DeepSeek R1 attempt was worse than o3-mini's one-shot result, including one run in which only the ball was left.

Jiqizhixin (Heart of the Machine) also ran its own test under the same pass@1 setup. This time DeepSeek R1 produced both the ball and the geometric frame, and the ball even changes color. Unfortunately, it reduced the tesseract to a set of three-dimensional coordinate axes.

The o3-mini result, meanwhile, looked more like a "buyer's show" (the disappointing real-life version of an advertised product). The prompt was exactly the same as Yuchen Jin's, so why couldn't o3-mini reproduce the "seller's show" described above?

It seems that DeepSeek R1 is not completely outclassed by o3-mini when it comes to generating a program that bounces a ball inside a geometric frame.

AIGC practitioner @myapdx tested o3-mini and DeepSeek R1 with a more complex prompt of the same kind: "write a p5.js script that simulates 100 colored balls bouncing inside a sphere. Each ball should leave a fading trail showing its recent path. The container sphere should rotate slowly. Make sure to implement proper collision detection to keep the balls inside the sphere."

The prompt packs in many requirements (bouncing inside the sphere, leaving a fading trail, rotating the container slowly, and so on), and o3-mini's output meets all of them perfectly.
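The "keep the balls inside the sphere" requirement from that prompt reduces to a distance check against the sphere's center followed by a reflection. Here is a minimal sketch of that step, written in Python rather than p5.js to stay consistent with the other examples in this article. The function name `keep_inside_sphere` is hypothetical, the sphere is assumed to be centered at the origin, and the bounce is assumed to be perfectly elastic.

```python
import math

def keep_inside_sphere(pos, vel, ball_radius, sphere_radius):
    """Return (pos, vel) after bouncing a ball off the inside of a sphere.

    The container sphere is assumed to be centered at the origin.
    """
    dist = math.sqrt(sum(c * c for c in pos))
    limit = sphere_radius - ball_radius  # farthest the ball center may go
    if dist <= limit or dist == 0.0:
        return pos, vel  # still inside: nothing to do
    normal = tuple(c / dist for c in pos)  # unit vector pointing outward
    vn = sum(v * n for v, n in zip(vel, normal))
    if vn > 0.0:
        # Moving outward: mirror the velocity about the wall's tangent plane.
        vel = tuple(v - 2.0 * vn * n for v, n in zip(vel, normal))
    # Push the ball back onto the allowed boundary.
    pos = tuple(n * limit for n in normal)
    return pos, vel
```

Because the container is a perfect sphere, rotating it slowly does not change where its wall is, so the rotation matters only for rendering unless wall friction is modeled as well.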

DeepSeek R1's output does not look any worse.

As for why the results differ so much from test to test, both Yuchen Jin and @myapdx mentioned in their posts that the task reflects how well a model understands the laws of real-world physics. A model has to combine its understanding of language, geometry, physics, and programming to produce the final simulation. Judging by the first two rounds, o3-mini has the potential to be the best large model at physics.

Meanwhile, OpenAI also highlighted in yesterday's release blog that o3-mini-low outperforms o1-mini on PhD-level science problems. o3-mini-high performs comparably to o1, with significant improvements on PhD-level biology, chemistry, and physics problems.

Understanding gravity and friction when a small ball bounces is not difficult for humans, but for large language models, this ability to grasp a "world model" of an object's physical state had not seen a real breakthrough until recently.

As for why DeepSeek R1's programs sometimes contain only a ball, some speculate that the model may be overthinking things. Have any of our readers experienced this for themselves? Feel free to discuss.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
