Nvidia's latest AI chatbot runs locally on your PC and is completely free.

Nvidia's GeForce RTX 40 series GPUs.

If you own a GeForce RTX 30-series or newer GPU, a demo app called "Chat with RTX" lets you run a personalized chatbot that doesn't need an Internet connection.

Nvidia has introduced Chat with RTX, an AI chatbot capable of running on any PC equipped with an RTX 30 or 40 series graphics card with at least 8GB of VRAM.

It can also search and summarize local documents and YouTube videos.


Powered by Nvidia's TensorRT-LLM software, the app doesn't just generate text; it also draws on material you supply, supporting .txt, .pdf, .doc/.docx, and .xml files as well as links to YouTube videos.
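Before pointing the app at a folder, it can help to check which files it will actually pick up. The sketch below is a hypothetical helper, not part of Chat with RTX: it simply walks a folder and keeps files whose extensions match the formats listed above. The function name and the extension set are illustrative assumptions based on that list.

```python
from pathlib import Path

# Extensions the article lists as supported (assumption: matched case-insensitively).
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def find_supported_files(folder: str) -> list[Path]:
    """Return files under `folder` (searched recursively) with a supported extension.

    Hypothetical helper for illustration only; Chat with RTX does not expose this.
    """
    root = Path(folder)
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in SUPPORTED_EXTENSIONS
    )
```

For example, calling `find_supported_files` on a folder of case documents would list the PDFs and Word files in it while skipping images and other unsupported formats.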

After selecting the content for the bot to work from, users can ask it questions about that content. For example, the bot could lay out step-by-step instructions from a YouTube how-to video, or tell the user which type of batteries is on their shopping list.

Because the bot answers from the user's own content, the experience feels more personal and customized. Processing the data locally also keeps it private: Chat with RTX doesn't rely on cloud services, so user data stays on the PC, and it can respond quickly even without a network connection.


To run this chatbot, you'll need an Nvidia GeForce RTX 30-series or newer GPU with at least 8GB of video memory. Chat with RTX also requires Windows 10 or 11 and the latest Nvidia GPU drivers.

According to Nvidia, Chat with RTX combines retrieval-augmented generation (RAG), its TensorRT-LLM software, and RTX acceleration so that it can work without a network connection: it uses local files as a knowledge base and pairs them with open-source large language models (LLMs) such as Mistral or Llama 2 to produce precise answers.
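To make the RAG idea concrete, here is a toy sketch of the two steps: retrieve the local text chunks most relevant to a question, then assemble them into a prompt for a local model. It is not Nvidia's implementation. Word-count cosine similarity stands in for the embedding model a real system would use, and the actual call to Mistral or Llama 2 is left out; all function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k chunks most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        chunks,
        key=lambda c: cosine_similarity(q, Counter(tokenize(c))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Augmentation step: put the retrieved context in front of the question.

    A real app would pass this prompt to a local LLM; that call is omitted here.
    """
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical local "documents" split into chunks.
docs = [
    "Xbox Game Pass is a subscription service with a library of games for a monthly fee.",
    "The shopping list includes AA batteries, milk, and bread.",
    "Project xCloud streams games to phones and tablets.",
]
# Retrieves the shopping-list chunk as context for the question.
print(build_prompt("What batteries are on the shopping list?", docs, k=1))
```

A production pipeline would compare learned embeddings rather than raw word counts, which lets it match paraphrases that this toy version would miss.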

Chat with RTX Review

Nvidia today announced an early version of Chat with RTX, a demo application that lets you run your own AI chatbot on your PC. You can feed it YouTube videos or your own documents to generate summaries or get relevant answers based on your own data. Everything runs locally on your computer, and all you need is an RTX 30- or 40-series graphics card with at least 8GB of VRAM.

I've spent a little time with Chat with RTX over the past day, and while the app is still rough, I can already see its potential as a valuable research tool for journalists and anyone else who needs to analyze lots of documents.

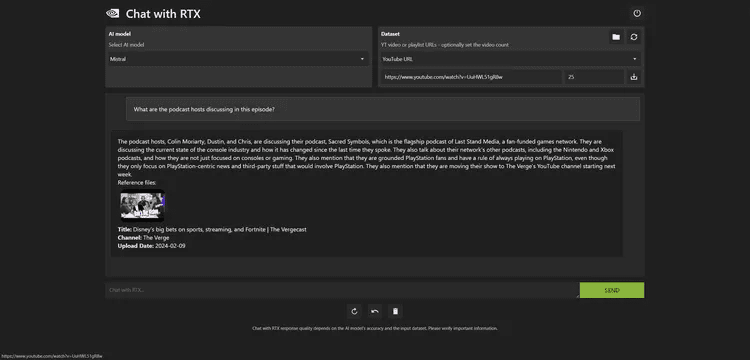

Chat with RTX can handle YouTube videos: you simply enter a video URL, and it lets you search the transcript for specific mentions or summarize the entire video. I found this especially useful for searching video podcasts, particularly when hunting for specific discussions during the past week of rumors about Microsoft's shift in Xbox strategy.

It isn't perfect with YouTube videos, though. When I tried to search the transcript of a _Verge_ YouTube video, Chat with RTX downloaded the transcript for a completely different video instead, which appears to be a bug in this early version.

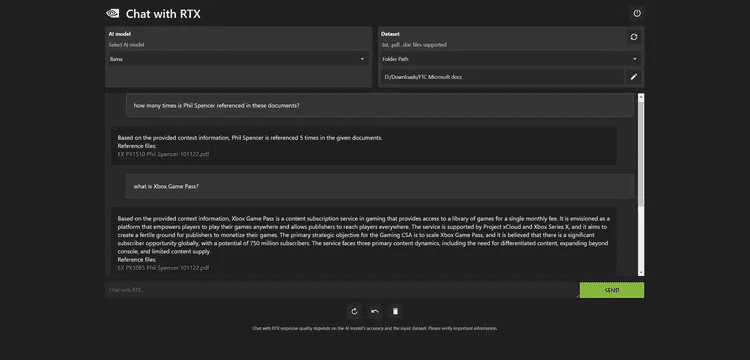

Figure 1: Chat with RTX excels at searching local documents.

When it worked, I was able to find the relevant part of a video within seconds. I also used Chat with RTX to analyze the document set from the _FTC v. Microsoft_ case. When I was reporting on the case last year, quickly skimming through so many documents was a big challenge, but Chat with RTX let me run queries almost instantly.

For example, the chatbot accurately summarized Microsoft's Xbox Game Pass strategy from internal documents made public during the trial:

> According to the background information provided, Xbox Game Pass is a game content subscription service that provides access to a library of games by paying a single monthly fee. It is intended to be a platform that enables gamers to play their games anywhere, while allowing publishers to reach gamers worldwide. The service is supported by Project xCloud and Xbox Series X and aims to provide publishers with a great environment where their games can realize real estate. The core strategic goal of the Gaming CSA is to scale Xbox Game Pass, with a global market of up to 750 million potential subscribers. The service faces three main content challenges: demand for differentiated content, expansion to platforms beyond consoles, and limited content availability.

I also found the tool helpful for quickly scanning PDFs and checking data. Microsoft's own Copilot doesn't handle PDFs in Word very well, but Chat with RTX pulled out all the key information with ease, and it responded with almost no perceptible latency, a very different experience from using cloud-based chatbots like ChatGPT or Copilot.

The biggest drawback of Chat with RTX is that it really does feel like an early developer demo. Under the hood, it installs a web server and a Python instance on your PC, runs your data through a Mistral or Llama 2 model, and then uses the Tensor cores on your RTX GPU to speed up queries.

Figure 2: Chat with RTX's answers aren't always accurate.

On my PC, with an Intel Core i9-14900K processor and an RTX 4090 GPU, installing Chat with RTX took about 30 minutes. The app takes up nearly 40GB, and its Python instance uses about 3GB of the 64GB of RAM in my system. Once it's running, you access Chat with RTX through a browser, while a command prompt in the background shows what's happening and any error codes.

Nvidia isn't pitching this as a polished app that every RTX owner should download and install right away. It has some known issues and limitations, including source attribution that isn't always accurate. I initially tried to get it to index 25,000 documents, but the app crashed, and I had to clear its preferences before it would start again.

Chat with RTX also doesn't remember context, so follow-up questions can't build on earlier ones. It also creates JSON files inside the folders you ask it to index, so I wouldn't recommend pointing it at your entire Documents folder in Windows.

Still, I'm very interested in tech demos like this, and Nvidia has shown real promise here. It bodes well for the future of AI chatbots that run locally on PCs, especially for people who don't want to pay for services like Copilot Pro or ChatGPT Plus just to analyze their own files.


© Copyright notes

Article copyright AI Sharing Circle. All rights reserved; please do not reproduce without permission.
