Your own digital human? A head-to-head showdown of six open-source digital human projects, compared at a glance!

Recently, digital human technology has been all the rage in the AI world, and a seemingly endless stream of "strongest open-source" digital human projects has appeared, each more dazzling than the last. Although I have already shared a number of digital human integration packages, with so many options it is easy to feel lost and unsure which one suits you best.

I previously introduced 12 free, locally deployable digital humans to readers. Since "choice paralysis" is a common affliction these days, this time I am rounding up six digital human projects in one go to spare everyone the trouble.

In this article I take a comprehensive inventory of the digital human resources I have shared before, comparing their output quality, hardware requirements, generation time, and other key details, so that you can understand the current state of open-source digital human technology at a glance and choose the "digital human" that suits you best.

Digital humans: the hot commodity of the AI space

When it comes to the hottest technologies in AI right now, digital humans are definitely on the list.

Unlike AI image-generation company Stability AI, which has been dogged by rumors of shutting down, and the large-model vendors at home and abroad locked in a cutthroat ("involuted") price war, digital humans have demonstrated real commercial value and profit potential in the AI field.

For example, in mid-April this year, "Caixiao Dongge," a digital human of JD.com founder Liu Qiangdong, debuted on a JD.com livestream. Its realism was astonishing: not only did its speaking pace and accent closely match the real person's, but even its habitual gestures were nearly identical.

During his talk, "Dongge" rubs his fingers together from time to time, and when emphasizing a point he makes broader gestures and nods naturally. Many viewers said they could hardly tell he was a digital human!

Less than an hour into this digital human's debut livestream, viewership exceeded 20 million, and total sales over the entire broadcast topped 50 million RMB.

The debut's huge success led JD.com to launch a "CEO digital human livestream" campaign during this year's 618 shopping festival. Executives from Gree, Hisense, LG, Mingchuangyoupin, Jellyfish, Corvus, vivo, Samsung, and many other well-known companies were turned into digital humans and personally took to livestreams to sell products.

According to official JD.com data, JD's Yanxi digital humans have so far served more than 5,000 brands, driving gross merchandise volume (GMV) of over 10 billion RMB.

The huge commercial potential of digital humans has drawn more and more attention. Although building a hyper-realistic digital human like "Caixiao Dongge" is still far from cheap, the rapid development of AI has brought a growing number of versatile and powerful open-source digital human projects, which greatly lower the technical barrier to entry.

Next, let me walk you through these excellent open-source digital human projects in detail.

What is the best open-source digital human technology? A hardcore evaluation of six projects

Digital human technology, once the stuff of science fiction films, is gradually entering everyday life. With the rapid advance of artificial intelligence, the open-source digital human field has become fiercely competitive, with major research institutions and technology companies releasing their own open-source solutions.

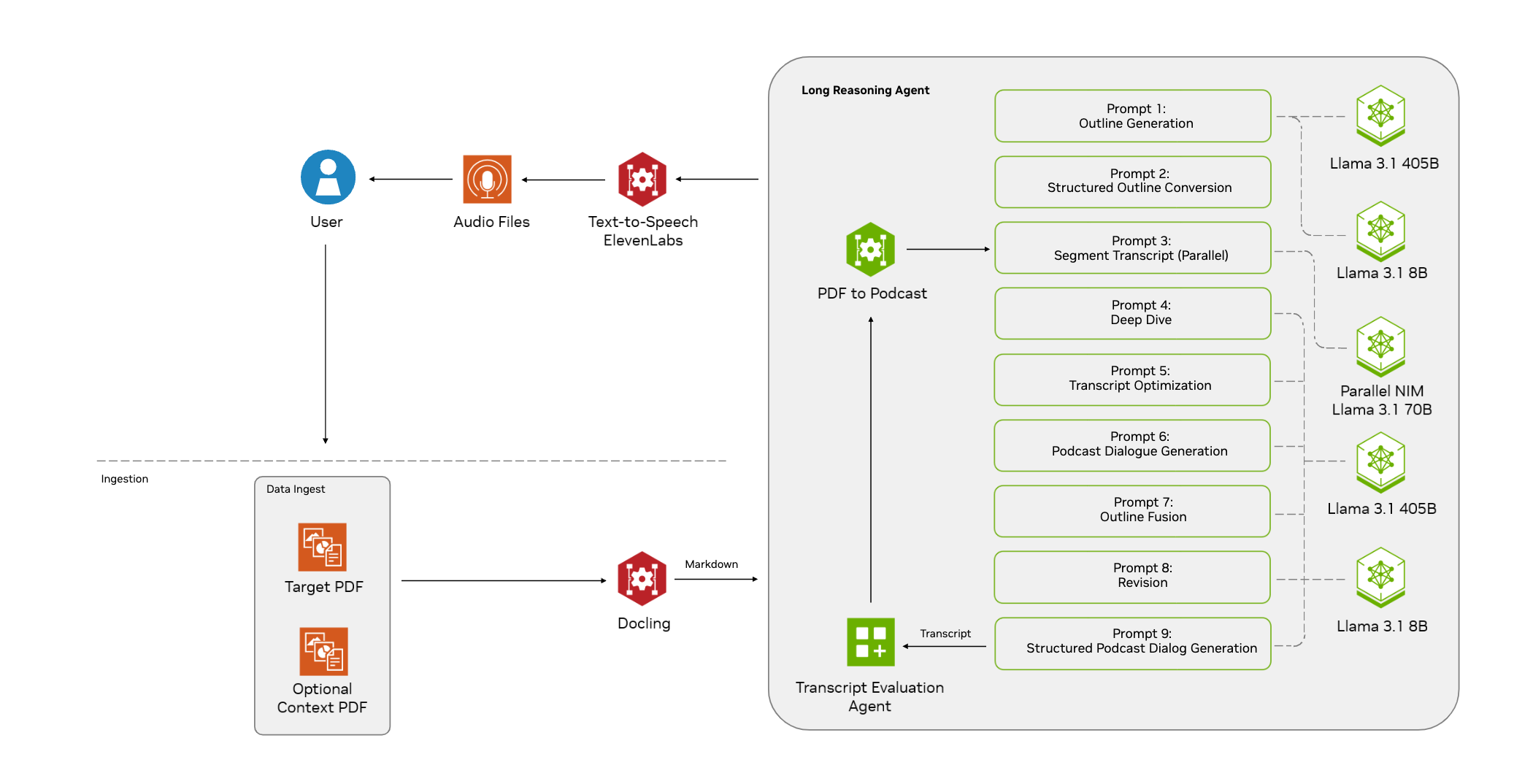

Below, I review six representative open-source digital human projects in detail, introducing them in order of technological development so you can clearly see how digital human technology has evolved.

1. Wav2Lip: a representative of first-generation digital human technology

Wav2Lip is a deep-learning-based, speech-driven lip-sync algorithm and was one of the most widely used approaches in early digital human technology. Its core idea is to use the information in the speech signal to drive the mouth region of the face, generating lip movements synchronized with the speech.
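To make the "speech drives the mouth" idea more concrete, here is a minimal Python sketch of how audio can be sliced into one short window per video frame before being fed to a lip-sync model. It assumes the defaults of the public Wav2Lip inference script as I understand them (a mel spectrogram at 80 frames per second of audio, a 16-mel-frame window per video frame, 25 fps video); `mel_windows` is a hypothetical helper, and the sketch only computes index ranges rather than running any model.

```python
# Sketch: align mel-spectrogram windows to video frames, in the style of
# Wav2Lip's public inference script. All constants are assumptions.

MEL_FRAMES_PER_SEC = 80  # assumed mel frame rate (e.g. 16 kHz audio, hop 200)
MEL_STEP_SIZE = 16       # assumed number of mel frames per model input window


def mel_windows(num_mel_frames: int, fps: float = 25.0) -> list[tuple[int, int]]:
    """Return (start, end) mel-frame indices, one window per video frame.

    Hypothetical helper. Video frames beyond the last full audio window
    are not covered; a caller would trim those frames.
    """
    if num_mel_frames < MEL_STEP_SIZE:
        raise ValueError("audio too short for a single window")
    # How many mel frames the audio advances per video frame.
    multiplier = MEL_FRAMES_PER_SEC / fps
    windows = []
    i = 0
    while True:
        start = int(i * multiplier)
        if start + MEL_STEP_SIZE > num_mel_frames:
            # Clamp the final window to the end of the spectrogram and stop.
            windows.append((num_mel_frames - MEL_STEP_SIZE, num_mel_frames))
            break
        windows.append((start, start + MEL_STEP_SIZE))
        i += 1
    return windows


if __name__ == "__main__":
    # One second of audio at the assumed 80 mel frames per second.
    w = mel_windows(80)
    print(len(w), w[:3], w[-1])
```

Each window overlaps its neighbors, so the model sees a little audio context before and after the current frame, which is what lets the mouth shape follow the sound rather than lag behind it.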

- Generated example: The figure below shows a digital human generated by Wav2Lip. The character's facial movements are stiff, limited mainly to mechanical lip motion, and overall the result is fairly crude.

- Hardware requirements: Wav2Lip has modest hardware requirements and needs only a GPU with 4 GB of VRAM. Generating a 1-minute digital human video takes about 5-15 minutes of processing.

2. SadTalker: an improved approach with more natural facial movements

SadTalker is an open-source project from Xi'an Jiaotong University. It learns 3D motion coefficients from audio and combines them with a novel 3D face renderer to generate head movements, producing high-quality digital human videos from just a single photo and an audio clip.

- Generated example: The figure below shows a digital human generated by SadTalker. Compared with Wav2Lip, SadTalker's facial movements are more natural: the head is no longer completely static but shows some slight motion. A closer look, however, reveals some misalignment around the edges of the figure. That is why there is also an enhanced version of SadTalker that generates digital humans from portrait videos.

- Hardware requirements: Because SadTalker produces better results, it needs more capable hardware. A GPU with 6 GB of VRAM is recommended for smooth operation; with less than 6 GB of VRAM, or on a CPU, generation will be slower. Generating a roughly 1-minute video takes about 10-20 minutes of processing.

3. MuseTalk: from Tencent, with more accurate lip sync

MuseTalk is a digital human project from Tencent that focuses on real-time, audio-driven, lip-synchronized digital human generation. Its core technique is automatically adjusting the digital character's face image according to the audio signal so that the mouth shapes closely match the audio content, yielding more natural lip sync.

- Generated example: The figure below shows a digital human generated by MuseTalk. MuseTalk improves on SadTalker: head and face movements are more natural, and the edge misalignment is reduced. However, the detail of the lip animation still leaves room for improvement.

- Hardware requirements: MuseTalk's hardware requirements are similar to SadTalker's; a GPU with 6 GB of VRAM is recommended for a better experience. Generating a 1-minute digital human video takes about 10-20 minutes.

4. Hallo: a joint effort by Baidu, Fudan University, ETH Zurich, and Nanjing University, with stunning results!

Hallo is a digital human project developed by Baidu together with Fudan University, ETH Zurich, and Nanjing University, and it represents significant progress in audio-driven portrait animation. Hallo generates realistic, dynamic portrait videos from voice input: it analyzes the audio in depth to synchronize facial movements, including lips, expressions, and head pose, producing impressive results.

- Generated example: The figure below shows a digital human generated by Hallo. In clarity, richness of head movement, and subtlety of facial expression, Hallo's output is a major leap over the previous solutions.

- Hardware requirements: Hallo's results are outstanding, but it also demands more powerful hardware. In my testing, a GPU with more than 10 GB of VRAM is recommended for smooth operation, and generating a 1-minute digital human video takes 30-40 minutes of processing.

5. LivePortrait: open-sourced by Kuaishou, seamless multi-character stitching

LivePortrait is an eye-catching digital human project open-sourced by Kuaishou. What sets it apart is that it can precisely control the character's gaze direction and the opening and closing of the lips, and it can also seamlessly stitch multiple portraits together.

- Generated example: The figure below shows a digital human generated by LivePortrait. LivePortrait handles multi-person scenes very smoothly, with natural transitions between characters and no abrupt borders or stitching seams.

- Hardware requirements: Compared with Hallo, LivePortrait needs less hardware while still producing excellent results. In my testing, a GPU with 8 GB of VRAM runs it smoothly, while 6 GB can barely run it. Generating a 1-minute digital human video takes about 10-20 minutes of processing.

6. EchoMimic: driven by both audio and facial keypoints, more realistic and natural

Traditional digital human technology is driven either by audio or by facial keypoints, and each approach has its own strengths and weaknesses. EchoMimic, by contrast, cleverly combines the two, training on both audio and facial keypoints to generate more realistic and natural dynamic portraits.

- Generated example: The figure below shows a digital human generated by EchoMimic. Its facial expressions and body movements are so natural and smooth that it is almost impossible to tell the real from the fake.

- Hardware requirements: EchoMimic's output is much improved without a significant increase in hardware requirements; a GPU with 8 GB of VRAM runs it smoothly. Generation time does grow somewhat, however: a 1-minute digital human video takes about 15-30 minutes of processing.
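To compare the six projects side by side, the following Python sketch collects the VRAM and processing-time figures reported in the sections above and filters projects by the GPU memory you have. The numbers are this article's test results, not official requirements; `PROJECTS`, `fits`, and `estimate_minutes` are hypothetical names, and `estimate_minutes` assumes processing time scales linearly with video length, which the article does not claim.

```python
# Figures reported in this article's tests (not official requirements).
# vram_gb: recommended GPU memory in GB.
# minutes: processing-time range for a 1-minute output video.
PROJECTS = {
    "Wav2Lip": {"vram_gb": 4, "minutes": (5, 15)},
    "SadTalker": {"vram_gb": 6, "minutes": (10, 20)},
    "MuseTalk": {"vram_gb": 6, "minutes": (10, 20)},
    # The article says "more than 10 GB"; treated here as a 10 GB lower bound.
    "Hallo": {"vram_gb": 10, "minutes": (30, 40)},
    # Runs smoothly on 8 GB; 6 GB only barely works.
    "LivePortrait": {"vram_gb": 8, "minutes": (10, 20)},
    "EchoMimic": {"vram_gb": 8, "minutes": (15, 30)},
}


def fits(vram_gb: float) -> list[str]:
    """Projects whose recommended VRAM is within budget, in article order."""
    return [name for name, spec in PROJECTS.items() if spec["vram_gb"] <= vram_gb]


def estimate_minutes(name: str, video_minutes: float) -> tuple[float, float]:
    """Linear (assumed) estimate of processing time for a video of given length."""
    low, high = PROJECTS[name]["minutes"]
    return (low * video_minutes, high * video_minutes)


if __name__ == "__main__":
    for gpu in (4, 6, 8, 12):
        print(f"{gpu} GB VRAM -> {', '.join(fits(gpu)) or 'none'}")
    print("Hallo, 3-minute video:", estimate_minutes("Hallo", 3), "minutes")
```

For example, on a 6 GB card `fits(6)` returns Wav2Lip, SadTalker, and MuseTalk, matching the recommendations above; LivePortrait may still start on 6 GB, but only barely.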

Summary and outlook

Digital human technology is developing at an astonishing pace, constantly pushing the boundaries of what people imagined possible. To show more intuitively how much each open-source digital human technology has improved, I have put together a comparison chart of their technological progress.

As AI technology continues to advance, there is every reason to believe that more and more powerful open-source digital human projects will emerge. If you are curious about digital human technology and eager to experience its stunning results, there is no better time than now. Let's witness the rapid growth and endless possibilities of digital human technology together!

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
