Napkins.dev: Upload wireframes to generate front-end code with Llama 4

General Introduction

Napkins.dev is a free, open source project whose core function is to let users upload interface screenshots or wireframes and automatically generate runnable front-end code. Users only need to provide a design image; the tool analyzes it with the Llama 4 model (served by Together AI) and generates application code based on the Next.js framework. Napkins.dev is well suited to rapid prototyping and handles simple interface designs such as login pages or navigation bars particularly well. The generated code can be previewed and edited in real time, and users can run it directly or refine it further, which shortens the path from design to development.

Function List

- Screenshot upload: Supports uploading interface screenshots or hand-drawn wireframes in PNG and JPG formats.

- AI code generation: Uses the Llama 4 model to analyze screenshots and generate HTML, CSS, and JavaScript code for the Next.js framework.

- Real-time preview: After the code is generated, an online sandbox (based on Sandpack) shows the resulting application.

- Code editing: The generated code can be adjusted through prompts, for example to change colors or layout.

- Theme selection: Users can select different interface themes to personalize the code output.

- Open source collaboration: Users can clone the repository, commit code, or improve features through GitHub.

- Mobile support: Generated code targets desktop; mobile display is still being optimized.

Usage Help

Installation process

napkins.dev can be used in two ways: through the official deployment (planned to go live at https://napkins.dev) or by running the project locally. Below are detailed local deployment steps for developers or users who need to customize its functionality:

- Clone Code Repository

Open a terminal and enter the following command to clone the GitHub repository for napkins.dev:

    git clone https://github.com/Nutlope/napkins

This downloads the project files to your machine.

- Configuring Environment Variables

In the project root directory, copy the .env.example file, rename the copy to .env, and fill in the following values:

    TOGETHER_API_KEY=your_together_ai_key
    AWS_ACCESS_KEY_ID=your_aws_s3_access_key_id
    AWS_SECRET_ACCESS_KEY=your_aws_s3_secret_access_key
    AWS_S3_BUCKET=your_s3_bucket_name
    AWS_S3_REGION=your_s3_region

- Together AI key: Visit the Together AI website, sign up for an account, and get a free or paid API key for calling Llama 4 models. The free credit is usually enough for testing.

- AWS S3 configuration: Log in to the AWS console and create an S3 bucket for storing the uploaded screenshots. Refer to the Next.js S3 Upload guide to configure the bucket permissions so that public reads are allowed. The bucket name and region must match the values in the .env file.
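Because a missing or blank key is a common cause of startup errors, a small check like the one below can help before the dev server is started. This is a minimal sketch, not code from the napkins repository: the helper name `findMissingEnvVars` is hypothetical, and only the five variable names come from the step above.

```typescript
// Variable names listed in the .env step above.
const REQUIRED_ENV_VARS = [
  "TOGETHER_API_KEY",
  "AWS_ACCESS_KEY_ID",
  "AWS_SECRET_ACCESS_KEY",
  "AWS_S3_BUCKET",
  "AWS_S3_REGION",
] as const;

// Hypothetical helper: returns the names of required variables that are
// unset or contain only whitespace in the given environment object.
function findMissingEnvVars(
  env: Record<string, string | undefined>,
): string[] {
  return REQUIRED_ENV_VARS.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === "";
  });
}

// Example with an in-memory object; in a Node.js script you would pass
// process.env instead.
const exampleMissing = findMissingEnvVars({
  TOGETHER_API_KEY: "example-key",
  AWS_S3_BUCKET: "example-bucket",
});
console.log("Missing variables:", exampleMissing);
```

Taking the environment as a parameter rather than reading it directly keeps the check easy to try without touching real credentials.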

- Installation of dependencies

Make sure Node.js is installed locally (version 18 or above is recommended). Run the following in the project directory:

    npm install

This installs all the dependencies the project needs, including Next.js, Tailwind CSS, Sandpack, and Together AI's SDK.

- Starting Local Services

Run the following command to start the development server:

    npm run dev

Open your browser and visit http://localhost:3000 to access the local interface of napkins.dev.

- Verify Installation

Upload a simple screenshot of an interface (e.g., a design containing buttons and input boxes), click the Generate button, and check whether code is produced. If you encounter an error, check that the keys in the .env file are correct, and verify that your network can reach Together AI and the AWS S3 service.

Usage

The core function of napkins.dev is to convert screenshots of the interface into runnable code. Here are the detailed steps to use it:

- Access the tool

If the official deployment is live, visit https://napkins.dev (at the time of writing it has not been officially released, so running it locally first is suggested). To run locally, open http://localhost:3000.

- Upload screenshot

Click the "Upload" button on the homepage and select a screenshot of the interface in PNG or JPG format. A clear design with simple elements (e.g. buttons, input boxes, a navigation bar) is recommended.

- File requirements: Images should preferably be under 5MB; avoid blurry images and overly complex dynamic effects (e.g. animation).

- Hand-drawn support: Hand-drawn wireframes can be uploaded, but make sure the lines are clear and the elements are easy to tell apart.

- Tip: For a first try, use a simple screenshot of a login page to test the generation quality.
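The file recommendations above (PNG or JPG, under 5MB) can be checked before uploading. The sketch below is illustrative only: `checkScreenshot` and `UploadCandidate` are hypothetical names, and the article does not say whether the tool itself enforces these limits.

```typescript
// Limits taken from the recommendations above: PNG or JPG, under 5 MB.
const MAX_UPLOAD_BYTES = 5 * 1024 * 1024;
const ALLOWED_MIME_TYPES = ["image/png", "image/jpeg"];

// Minimal shape of an upload; a browser File object has these same fields.
interface UploadCandidate {
  name: string;
  type: string; // MIME type, e.g. "image/png"
  size: number; // size in bytes
}

// Hypothetical helper: returns a list of problems, which is empty when the
// file meets the recommendations.
function checkScreenshot(file: UploadCandidate): string[] {
  const problems: string[] = [];
  if (ALLOWED_MIME_TYPES.indexOf(file.type) === -1) {
    problems.push(`${file.name}: expected PNG or JPG, got "${file.type}"`);
  }
  if (file.size >= MAX_UPLOAD_BYTES) {
    problems.push(`${file.name}: ${file.size} bytes is not under 5 MB`);
  }
  return problems;
}

// Example: an oversized GIF triggers both checks.
console.log(
  checkScreenshot({ name: "demo.gif", type: "image/gif", size: 6 * 1024 * 1024 }),
);
```

Returning a list of problems instead of a boolean makes it easy to show the user every issue at once.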

- Generate Code

After uploading the screenshot, click the "Generate" button. The system analyzes the image with the Llama 4 model and generates front-end code based on Next.js. Generation usually takes 5-30 seconds, depending on the complexity of the screenshot and the network speed.

- Output content: The code consists of HTML (React components), CSS (Tailwind styles), and JavaScript, and is clearly structured for rapid development.

- Error handling: If generation fails, check whether the screenshot is too complex, or upload a simpler design.
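To make the generation step more concrete, the sketch below builds the kind of request body a vision-capable chat model typically receives: a text instruction plus the URL of the uploaded image. It assumes Together AI's OpenAI-compatible chat completions format; the model identifier, the prompt wording, and the function name `buildScreenshotRequest` are assumptions for illustration, not the project's actual code.

```typescript
// Message parts for an OpenAI-style chat request that includes an image.
type ContentPart =
  | { type: "text"; text: string }
  | { type: "image_url"; image_url: { url: string } };

interface ChatRequest {
  model: string;
  messages: { role: "system" | "user"; content: string | ContentPart[] }[];
}

// Assumed model identifier; check Together AI's model list for the exact name.
const ASSUMED_MODEL = "meta-llama/Llama-4-Maverick-17B-128E-Instruct-FP8";

// Hypothetical request builder; the real project's prompt may differ.
function buildScreenshotRequest(
  imageUrl: string,
  model: string = ASSUMED_MODEL,
): ChatRequest {
  return {
    model,
    messages: [
      {
        role: "system",
        content:
          "You turn UI screenshots into a single React component styled " +
          "with Tailwind CSS. Reply with code only.",
      },
      {
        role: "user",
        content: [
          { type: "text", text: "Recreate this interface as a React component." },
          { type: "image_url", image_url: { url: imageUrl } },
        ],
      },
    ],
  };
}

// Example: the URL stands in for a screenshot stored in the S3 bucket.
const exampleRequest = buildScreenshotRequest(
  "https://example-bucket.s3.amazonaws.com/login.png",
);
console.log(JSON.stringify(exampleRequest, null, 2));
```

A body like this would be sent as JSON in a POST request to Together AI's chat completions endpoint, with the API key from .env in the Authorization header. The image is passed by URL, which is consistent with the requirement that the S3 bucket allow public reads.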

- Previewing and editing

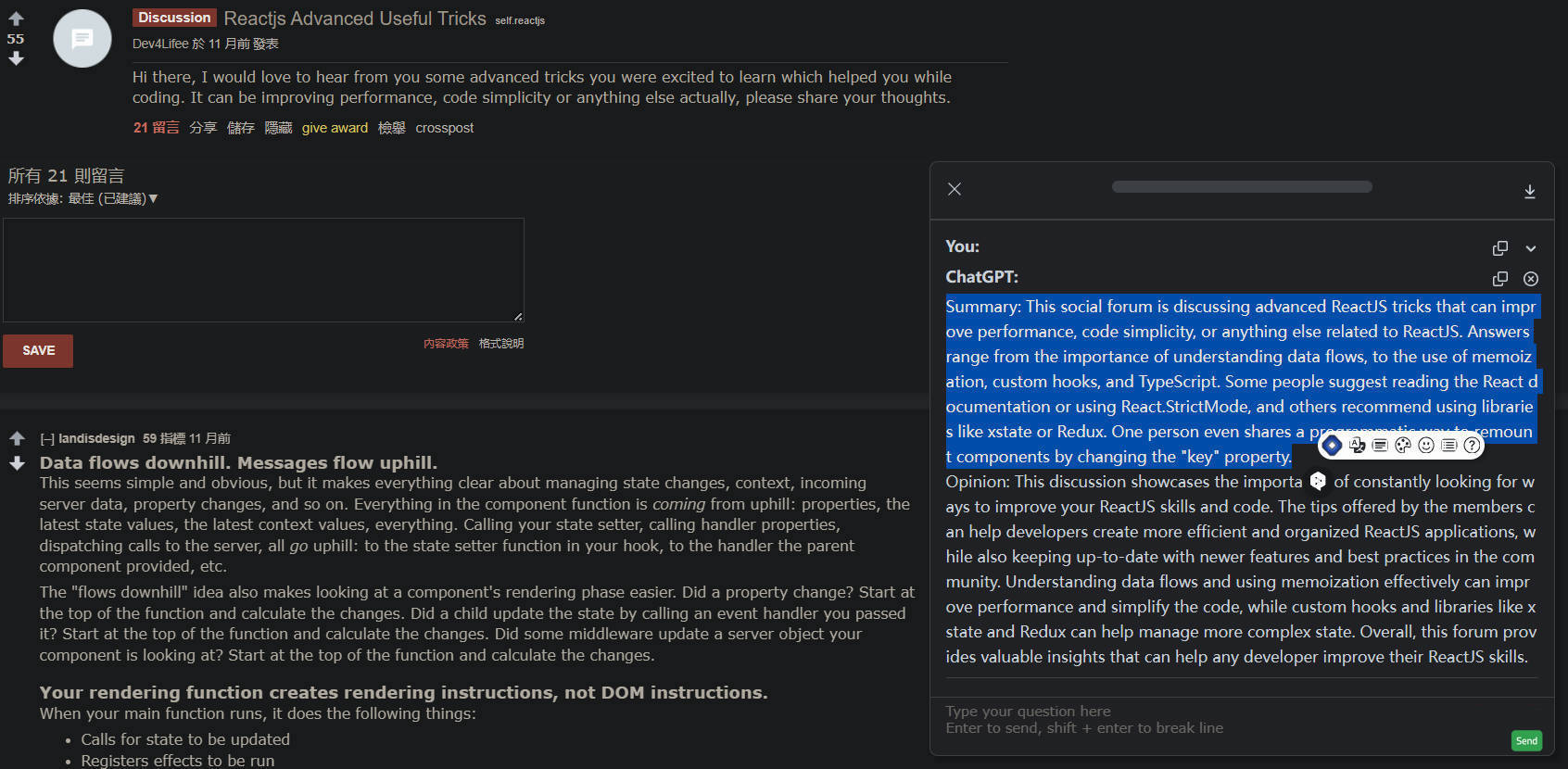

Once the code has been generated, the page displays a live preview in the Sandpack sandbox, showing the appearance and basic interactions of the application. The code files are listed on the right side and can be viewed, copied, or downloaded.

- Edit code: Click the "Edit" button and enter a prompt (e.g., "Make the background darker" or "Resize the buttons"); the AI modifies the code accordingly and updates the preview.

- Theme selection: Selecting a different theme in the settings (e.g. light or dark mode) adapts the generated CSS automatically.

- Save code: Click the "Download" button to package the code into a ZIP file containing the complete Next.js project structure.
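Prompt-based editing can be pictured as sending the current code together with the requested change back to the model. The sketch below shows one plausible way to assemble such a message list; the function name `buildEditMessages` and the prompt wording are hypothetical, and the article does not describe how the tool actually does this.

```typescript
// Plain-text chat message in the common system/user/assistant format.
interface EditChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical helper: combines the existing code and the user's edit
// instruction into a message list for a chat model.
function buildEditMessages(
  currentCode: string,
  instruction: string,
): EditChatMessage[] {
  return [
    {
      role: "system",
      content:
        "You edit React components styled with Tailwind CSS. " +
        "Return the full updated file and nothing else.",
    },
    {
      role: "user",
      content:
        "Current code:\n\n" +
        currentCode +
        "\n\nRequested change: " +
        instruction.trim(),
    },
  ];
}

// Example: ask for a darker background on a tiny component.
const exampleEdit = buildEditMessages(
  'export default function App() { return <div className="bg-white" />; }',
  "  Make the background darker  ",
);
console.log(exampleEdit[1].content);
```

Asking for the full updated file, rather than a diff, keeps the result directly usable as the new preview source.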

- Running and deployment

The downloaded code can be run locally. Go to the code directory, run npm install and then npm run dev, and visit http://localhost:3000 to view the app.

- Deployment method: Vercel or Netlify is recommended for deploying Next.js apps. Vercel provides one-click deployment and generates a public link after the code is uploaded.

- Production environments: The generated code is suitable for prototyping; for production, manually review the logic, add back-end interfaces, and optimize performance.

Featured Functions

- AI-driven screenshot analysis

napkins.dev uses the Llama 4 model (via Together AI inference) to recognize elements in a screenshot, such as buttons, text boxes, or navigation bars, and generates the corresponding React components. For example, if you upload a design with a "Sign Up" button and an input box, the tool generates code with the form structure and Tailwind styles.

- Operating tips: Make sure the elements in the screenshot are clear; complex designs may be misrecognized. Start with a simple layout and work up to more complex interfaces.

- Limitations: The current version supports static interfaces better; dynamic effects (such as image carousels) may require manual code adjustments.

- Real-time code sandboxing

The tool integrates Sandpack (powered by CodeSandbox), so the generated code runs directly in the browser and shows real results. Users can click buttons or type into forms in the preview to verify that the code works.

- Example: After generating a login page, click the button in the preview to check whether the interaction is triggered. If it needs adjusting, enter a prompt (e.g. "Add a button click event") to modify the code.
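For readers curious how generated code might reach the sandbox, the sketch below wraps a code string in the path-to-file map that Sandpack's `files` prop accepts. The helper `toSandpackFiles` and the choice of "/App.tsx" as the entry file are assumptions for illustration; the project's real wiring may differ.

```typescript
// Subset of the file object shape accepted by Sandpack's `files` prop.
interface SandpackFileSketch {
  code: string;
  active?: boolean; // open this file in the editor by default
  hidden?: boolean; // hide the file tab
}

// Hypothetical helper: places the generated component at /App.tsx, which is
// assumed here to be the entry component of the chosen Sandpack template.
function toSandpackFiles(
  generatedCode: string,
): Record<string, SandpackFileSketch> {
  return {
    "/App.tsx": { code: generatedCode, active: true },
  };
}

// Example with a tiny stand-in for model output.
const exampleFiles = toSandpackFiles(
  "export default function App() { return <button>Sign Up</button>; }",
);
console.log(Object.keys(exampleFiles));
```

In a React page, the result could be passed to the Sandpack component from @sandpack/react, for example `<Sandpack template="react-ts" files={toSandpackFiles(code)} />`. Tailwind styles would additionally need to be loaded inside the sandbox.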

- Open source and extensions

napkins.dev is fully open source, and developers can take part in development via GitHub. After cloning the repository, run npm run dev to test changes, then submit pull requests to contribute features. Common improvements include optimizing the mobile display, adding a new theme, or enhancing the prompt feature.

- Example: In the src/components directory, find Editor.tsx, add a log that shows the results of Llama 4's analysis, run npm run dev to test, and then submit the code.


- Mobile optimization (in development)

Currently, the generated code is suited to desktop, and the mobile display may look crowded. The project plans to improve the responsive layout; in the meantime, users can ask for mobile-friendly code through a prompt.

- Example: After uploading the screenshot, enter the prompt "Optimize for mobile screen layout" and the AI will adjust the CSS (e.g., by using a flex or grid layout).


Technical details

The napkins.dev tech stack includes:

- Llama 4: A vision-language model from Meta, responsible for analyzing screenshots and generating the code logic.

- Together AI: Provides the inference service for Llama 4, handling the processing of images and text.

- Sandpack: Used for real-time code preview to simulate a real runtime environment.

- AWS S3: Store uploaded screenshots to ensure quick access.

- Next.js and Tailwind CSS: Build the front-end interface and styles; the generated code is also based on this stack.

- Helicone: Monitor model performance and API calls.

- Plausible: Analyze website access data to optimize the user experience.

Application Scenarios

- Rapid Prototyping

Product managers upload interface sketches and generate demo-ready apps to show to teams or customers, saving development time.

- Front-end Learning Tool

Beginners upload simple designs, generate code, and then study the React components and Tailwind styles to learn modern front-end development quickly.

- Hackathon Development

Hackathon teams use the tool to turn designs into code, so they can focus on back-end logic or feature extensions.

- Personal Project Building

Developers upload a blog or portfolio design, generate code, and deploy it after a few tweaks, which suits getting a personal website online quickly.

- Design Verification

UI designers upload screenshots, generate interactive prototypes, test the interface layout and user experience, and refine their designs.

Q&A

- What requirements must a screenshot meet?

Screenshots should be in PNG or JPG format, preferably under 5MB, sharp, and made up of clearly distinguishable elements (e.g. buttons, input boxes). Complex designs may produce inaccurate results, so it is best to start with a simple interface.

- Is the generated code suitable for a production environment?

The generated code is suitable for prototyping and contains basic React components and Tailwind styles. For production, review the code's logic and security, and add back-end functionality.

- Do I have to pay to use napkins.dev?

The project is completely free and open source. Running it locally requires a Together AI key (free credits are available) and the AWS S3 service (which may incur small storage charges).

- How can I improve the quality of the generated code?

Use clear, simple screenshots and avoid dynamic effects. Once the code is generated, you can tweak it with prompts, or take part in development on GitHub to improve the tool.

- What frameworks are supported?

The tool currently generates code based on Next.js and Tailwind CSS. Other frameworks such as Vue or Svelte may be supported in the future, depending on community contributions.

© Copyright notice

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
