MuseGAN: an open source model for generating multi-track music/soundtracks, easily creating music clips for multiple instruments

General Introduction

MuseGAN is a music generation project based on Generative Adversarial Networks (GANs), designed to generate multi-track (multi-instrument) music. It can generate music from scratch or produce accompaniment for a user-supplied track. MuseGAN is trained on the Lakh Pianoroll Dataset to generate snippets of popular songs containing bass, drums, guitar, piano, and strings. The latest implementation uses a network architecture built from 3D convolutional layers, which gives a smaller network at the cost of reduced controllability. MuseGAN also provides scripts for managing experiments, training new models, and running inference and interpolation with pre-trained models.

Function List

- Generate multi-track music: Generate music clips containing multiple instruments from scratch.

- Accompaniment Generation: Generate accompaniments based on user-supplied tracks.

- Training new models: Scripts and configuration files are provided so that users can train their own music generation models.

- Using pre-trained models: Download and use pre-trained models for music generation.

- Data processing: Download and process training data, with support for the Lakh Pianoroll Dataset.

- Experiment management: Scripts are provided to help users set up and manage experiments.

- Output format: Generated music can be saved as NumPy arrays, image files, and multi-track pianoroll files.

Usage Guide

Installation process

- Make sure pipenv (recommended) or pip is installed.

- Use pipenv to install the dependencies:

bash

pipenv install

pipenv shell

Or install the dependencies with pip:

bash

pip install -r requirements.txt

### Data preparation

1. Download the training data:

bash

./scripts/download_data.sh

2. Process the training data:

bash

./scripts/process_data.sh

### Training a new model

1. Set up a new experiment:

bash

./scripts/setup_exp.sh "./exp/my_experiment/" "Remarks on the experiment"

2. Modify the configuration file and the model parameter file to set the experiment parameters.

3. Train the model:

bash

./scripts/run_train.sh "./exp/my_experiment/" "0"

Or run a full experiment (training + inference + interpolation):

bash

./scripts/run_exp.sh "./exp/my_experiment/" "0"

### Using pre-trained models

1. Download the pre-trained models:

bash

./scripts/download_models.sh

2. Run inference with a pre-trained model:

bash

./scripts/run_inference.sh "./exp/default/" "0"

Or run interpolation:

bash

./scripts/run_interpolation.sh "./exp/default/" "0"
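The script invocations above all share one shape: a script path, an experiment directory, and a second string argument (`"0"` in the examples). When driving them from Python, a thin wrapper around `subprocess` may be convenient. The following is only a sketch: `build_command` and `run_script` are hypothetical helper names that are not part of MuseGAN, and since the meaning of the second argument is not stated here, it is passed through unchanged.

```python
import subprocess
from pathlib import Path


def build_command(script, exp_dir, arg="0"):
    """Return the argument list for one of the repository's shell scripts."""
    # The scripts are called as: <script> "<experiment dir>" "<second argument>"
    return [str(script), str(exp_dir), str(arg)]


def run_script(script, exp_dir, arg="0"):
    """Run a script from the repository root and raise if it fails."""
    if not Path(script).is_file():
        raise FileNotFoundError(f"{script} not found; run this from the repository root")
    # check=True raises CalledProcessError on a non-zero exit status.
    subprocess.run(build_command(script, exp_dir, arg), check=True)


# Argument list equivalent to: ./scripts/run_inference.sh "./exp/default/" "0"
cmd = build_command("./scripts/run_inference.sh", "./exp/default/", "0")
print(cmd)
```

Calling `run_script("./scripts/run_inference.sh", "./exp/default/", "0")` from the repository root would then execute the same command as the shell line above.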

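### Output management

By default, music samples are generated during training. This can be disabled by setting `save_samples_steps` to 0 in the configuration file. The generated samples are saved in the following three formats:

- `.npy`: raw NumPy arrays

- `.png`: image files

- `.npz`: multi-track pianoroll files, which can be loaded with the Pypianoroll package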
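As a quick check of the `.npy` output, a saved array can be loaded back with NumPy and inspected. The sketch below uses a small synthetic array and a hypothetical file name, because the actual file names and array shapes depend on the experiment configuration and are not specified here.

```python
import os
import tempfile

import numpy as np

# Stand-in for a generated sample. The real shape depends on the model and is
# not specified here; this dummy array only makes the sketch runnable.
dummy = np.zeros((4, 48, 84, 5), dtype=bool)

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample.npy")  # hypothetical file name
    np.save(path, dummy)

    # np.load reads the whole array back into memory with its shape and dtype.
    loaded = np.load(path)

print(loaded.shape, loaded.dtype)
```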

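Saving in a particular format can be disabled by setting `save_array_samples`, `save_image_samples`, or `save_pianoroll_samples` to False in the configuration file. The generated pianoroll files are saved in `.npz` format to save space and processing time. They can be written to MIDI files with the following code: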

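python

# Load a multi-track pianoroll saved as .npz and write it out as a MIDI file.
# Loading by passing a path to the constructor is kept as given in the original
# instructions; other Pypianoroll versions may need a different loading call.
from pypianoroll import Multitrack

m = Multitrack('./test.npz')

m.write('./test.mid')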
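To convert a whole folder of generated `.npz` files, the same idea can be wrapped in a loop. This is a sketch under assumptions: `midi_path_for` and `convert_all` are hypothetical helpers, and the loading call `pypianoroll.load` is assumed to be available in the installed Pypianoroll version (on some versions the constructor form shown above may be needed instead).

```python
from pathlib import Path


def midi_path_for(npz_path):
    """Return the output path: same location and name, with a .mid suffix."""
    return Path(npz_path).with_suffix(".mid")


def convert_all(folder):
    """Write a MIDI file next to every .npz pianoroll found in `folder`."""
    # Imported lazily so the path helper works without Pypianoroll installed.
    import pypianoroll

    written = []
    for npz_path in sorted(Path(folder).glob("*.npz")):
        # Assumed loader; see the note on Pypianoroll versions above.
        multitrack = pypianoroll.load(str(npz_path))
        out_path = midi_path_for(npz_path)
        multitrack.write(str(out_path))
        written.append(out_path)
    return written
```

Keeping the path logic in its own function makes it easy to check where files will land before any conversion is run.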

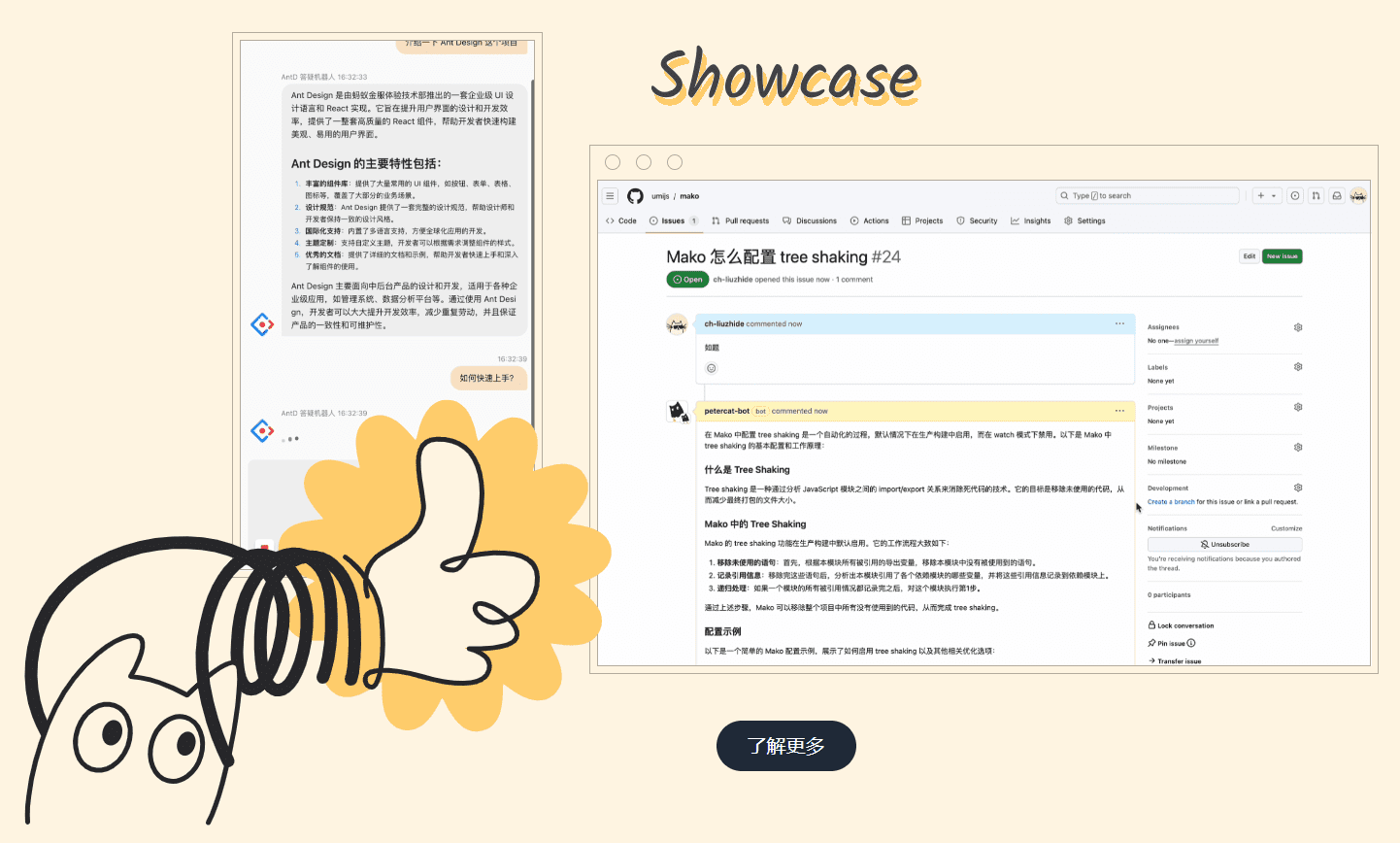

Example results

Some sample results can be found in the `./exp/` directory. More examples can be downloaded from the following links:

- sample_results.tar.gz (54.7 MB): Examples of inference and interpolation results

- training_samples.tar.gz (18.7 MB): Examples of samples generated at different training steps

