MTEB: Benchmarking for Evaluating the Performance of Text Embedding Models

General Introduction

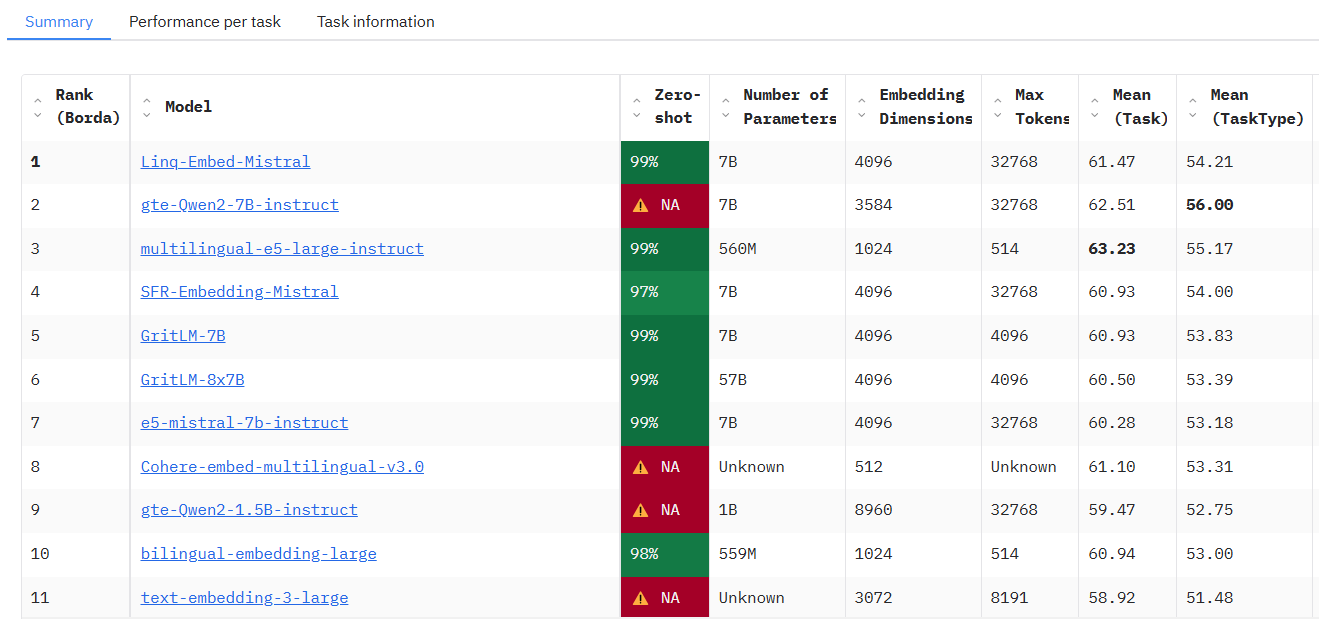

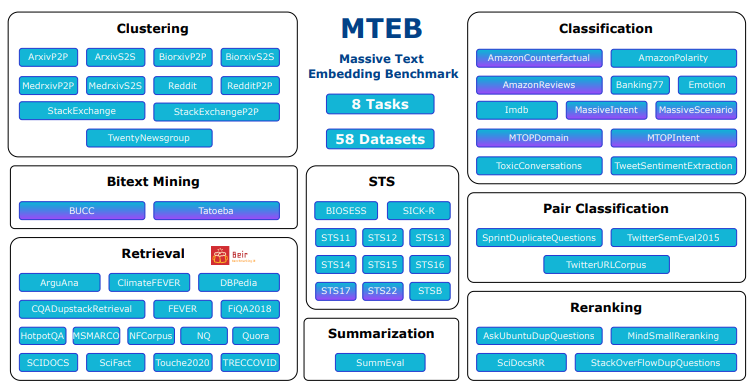

MTEB (Massive Text Embedding Benchmark) is an open source project developed by the embeddings-benchmark team and hosted on GitHub, aiming to provide comprehensive performance evaluation for text embedding models. It covers 8 major task types, including classification, clustering, retrieval, etc., integrates 58 datasets, supports 112 languages, and is currently one of the most comprehensive text embedding benchmarking tool.MTEB through the testing of 33 types of models, reveals the differences in the performance of different models in various types of tasks, to help developers choose the embedding model suitable for specific applications. The project provides open source code so that users can freely run the tests or submit new models to the public leaderboard, which is widely applicable to NLP research, model development and industry application scenarios.

Accessed at: https://huggingface.co/spaces/mteb/leaderboard

Function List

- Multi-task evaluation support: Contains 8 embedding tasks, such as semantic text similarity (STS), retrieval, clustering, etc., covering a wide range of application scenarios.

- Multilingual data sets: Support for 112 languages, providing the ability to test multi-language models, suitable for globalization application development.

- Model Performance Ranking: Built-in public leaderboards showing test results for 33 models for easy comparison and selection.

- Custom Model Testing: Allow users to import custom embedded models and run the evaluation with only a small amount of code.

- Cache Embedding Functionality: Supports embedded result caching to optimize repeat test efficiency for large-scale experiments.

- Flexible parameter adjustment: Provide coding parameter configuration, such as batch size adjustment, to enhance testing flexibility.

- Open Source Support: The complete source code is open for users to modify or extend the functionality according to their needs.

- Community Extensibility: Support users in submitting new tasks, datasets, or models to continuously enrich the test.

Using Help

Installation process

MTEB is a Python based tool that requires some programming environment to deploy and run. The following are the detailed installation steps:

1. Environmental preparation

- operating system: Windows, MacOS or Linux are supported.

- Python version: requires Python 3.10 or above, which can be accessed via the

python --versionCheck. - Git Tools: Used to get the source code from GitHub, it is recommended to install it in advance.

2. Cloning the code base

Open a terminal and run the following command to get the MTEB source code:

git clone https://github.com/embeddings-benchmark/mteb.git

cd mteb

This will download the project locally and enter the project directory.

3. Installation of dependencies

MTEB requires some Python library support, and it is recommended to create a virtual environment before installing dependencies to avoid conflicts:

python -m venv venv

source venv/bin/activate # Linux/MacOS

venv\Scripts\activate # Windows

Then install the core dependencies:

pip install -r requirements.txt

To run the leaderboard interface, you also need to install Gradio:

pip install mteb[gradio]

4. Verification of installation

Run the following command to check for available tasks and ensure a successful installation:

mteb --available_tasks

If the task list is returned, the environment configuration is complete.

Usage

The core function of MTEB is to evaluate text embedding models, and the following is the main operation flow:

Function 1: Run pre-built tasks to evaluate existing models

MTEB supports direct testing of existing models (e.g., the SentenceTransformer model). For example, evaluate the performance of the "average_word_embeddings_komninos" model on the Banking77Classification task:

mteb -m average_word_embeddings_komninos -t Banking77Classification --output_folder results/average_word_embeddings_komninos --verbosity 3

-mSpecifies the model name.-tSpecify the task name.--output_folderSpecifies the path where the results are saved.--verbosity 3Displays the detailed log.

The results are saved to a specified folder containing the scores for each task.

Function 2: Test Customized Models

If you want to test your own model, just implement a simple interface. Take the SentenceTransformer as an example:

from mteb import MTEB

from sentence_transformers import SentenceTransformer

# 加载模型

model = SentenceTransformer("average_word_embeddings_komninos")

# 定义评估任务

evaluation = MTEB(tasks=["Banking77Classification"])

# 运行评估

evaluation.run(model, output_folder="results")

After running, the results are saved to the "results" folder.

Feature 3: Cache embedding to optimize efficiency

For repeated tests, caching can be enabled to avoid double-counting of embeddings:

from mteb.models.cache_wrapper import CachedEmbeddingWrapper

# 包装模型以启用缓存

model_with_cache = CachedEmbeddingWrapper(model, cache_path="cache_embeddings")

evaluation.run(model_with_cache)

The cache file is stored in the specified path by task name.

Function 4: View Leaderboard

To see the current model rankings, visit the official leaderboards, or deploy locally:

git clone https://github.com/embeddings-benchmark/leaderboard.git

cd leaderboard

pip install -r requirements.txt

python app.py

Access in browser http://localhost:7860You can view the real-time leaderboards.

Function 5: Adding new tasks

Users can extend the MTEB by inheriting from a task class, for example, by adding a reranking task:

from mteb.abstasks.AbsTaskReranking import AbsTaskReranking

class CustomReranking(AbsTaskReranking):

@property

def description(self):

return {

"name": "CustomReranking",

"description": "自定义重排序任务",

"type": "Reranking",

"eval_splits": ["test"],

"eval_langs": ["en"],

"main_score": "map"

}

evaluation = MTEB(tasks=[CustomReranking()])

evaluation.run(model)

operating skill

- Multi-GPU Support: For retrieval tasks, multi-GPU acceleration can be used:

pip install git+https://github.com/NouamaneTazi/beir@nouamane/better-multi-gpu

torchrun --nproc_per_node=2 scripts/retrieval_multigpu.py

- selected subset: Only a subset of specific tasks are evaluated:

evaluation.run(model, eval_subsets=["Banking77Classification"])

- Adjusting batch size: Optimize encoding speed:

evaluation.run(model, encode_kwargs={"batch_size": 32})

With the above steps, users can easily get started with MTEB and complete model evaluation or functional extension.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...