Mochi 1 Video Generation Model: State of the Art in Open-Source Video Generation

Genmo AI is a cutting-edge artificial intelligence lab dedicated to developing state-of-the-art open-source video generation models. Its flagship product, Mochi 1, is an open-source video generation model that generates high-quality videos from text prompts. Genmo's goal is to drive innovation in artificial intelligence through video generation technology, opening up new possibilities for virtual exploration and creation.

Models is an open-source repository of video generation models. Its main release is the latest Mochi 1 model, which is built on the Asymmetric Diffusion Transformer (AsymmDiT) architecture. With 10 billion parameters, it is the largest publicly released video generation model. The model generates high-quality videos with smooth motion and follows text prompts closely.

Mochi 1 Preview is an open, state-of-the-art video generation model with high-fidelity motion and strong prompt adherence. Our new model significantly narrows the gap between closed and open video generation systems. We are releasing the model under the permissive Apache 2.0 license.

Mochi 1 Preview Demo Video

https://www.bilibili.com/video/BV1FRy6YeEui/

Features

- Video Generation: Generate high-quality video content by entering text prompts.

- Open-source model: Mochi 1 is released as an open-source model, so users can customize it and build their own projects on top of it.

- High-fidelity motion: Generates videos with smooth motion and realistic physics.

- Strong prompt adherence: Generates videos that closely match what the user describes in the text prompt.

- Community Support: Provides a community platform where users can share and discuss their generated videos.

- Multi-platform support: Supports use on multiple platforms, including the web and mobile devices.

Mochi 1 Model Architecture

Mochi 1 represents a significant advancement in open source video generation with a 10 billion parameter diffusion model based on our novel Asymmetric Diffusion Transformer (AsymmDiT) architecture. Trained entirely from scratch, it is the largest video generation model ever publicly released. Most importantly, it is a simple and hackable architecture.

Efficiency is critical to ensuring that the community can run our models. Alongside Mochi, we have also open-sourced our video VAE, which compresses videos to a 128x smaller size, using 8x8 spatial and 6x temporal compression into a 12-channel latent space.
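As a worked example of the compression factors above, the sketch below computes the latent tensor shape for a clip. Beyond what this article states, it assumes a 163-frame clip at 480x848 and a causal temporal layout in which the first frame is encoded on its own and each following group of 6 frames becomes one latent frame; treat both as illustrative assumptions, not Genmo's documented behavior.

```python
# Latent-shape arithmetic for a VAE with 8x8 spatial and 6x temporal compression
# into 12 latent channels (factors taken from the text above).
# ASSUMPTION: causal temporal layout, latent_frames = (frames - 1) // 6 + 1.

SPATIAL_FACTOR = 8
TEMPORAL_FACTOR = 6
LATENT_CHANNELS = 12


def latent_shape(frames: int, height: int, width: int) -> tuple[int, int, int, int]:
    """Return (channels, latent_frames, latent_height, latent_width)."""
    if (frames - 1) % TEMPORAL_FACTOR != 0:
        raise ValueError("frames - 1 must be divisible by the temporal factor")
    if height % SPATIAL_FACTOR or width % SPATIAL_FACTOR:
        raise ValueError("height and width must be divisible by the spatial factor")
    latent_frames = (frames - 1) // TEMPORAL_FACTOR + 1
    return (
        LATENT_CHANNELS,
        latent_frames,
        height // SPATIAL_FACTOR,
        width // SPATIAL_FACTOR,
    )


if __name__ == "__main__":
    # Hypothetical 163-frame, 480x848 clip.
    print(latent_shape(163, 480, 848))  # (12, 28, 60, 106)
```

The diffusion transformer then works on this much smaller latent grid instead of raw pixels, which is where the efficiency gain comes from.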

AsymmDiT efficiently processes user prompts alongside compressed video tokens by streamlining text processing and focusing neural network capacity on visual reasoning. It jointly attends to text and visual tokens with multimodal self-attention and learns separate MLP layers for each modality, similar to Stable Diffusion 3. However, because of its larger hidden dimension, our visual stream has nearly four times as many parameters as the text stream. To unify the modalities in self-attention, we use asymmetric (non-square) QKV and output projection layers. This asymmetric design reduces inference memory requirements.
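To make the asymmetric QKV and output projections concrete, here is a toy, single-head sketch in plain Python. It is not Genmo's code: it assumes both streams project into a shared attention width equal to the visual width, uses made-up dimensions, and omits biases, multiple heads, masking and the per-modality MLPs. Each modality keeps its own non-square weights, the projected tokens are concatenated for one joint attention, and the result is split and projected back to each stream's own width.

```python
import math
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (n x k) and a (k x m) matrix."""
    return [
        [sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: list[float]) -> list[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class StreamProjections:
    """One modality's weights: QKV maps d_stream -> d_attn, output maps d_attn -> d_stream."""

    def __init__(self, d_stream: int, d_attn: int, rng: random.Random):
        self.w_q = rand_matrix(d_stream, d_attn, rng)
        self.w_k = rand_matrix(d_stream, d_attn, rng)
        self.w_v = rand_matrix(d_stream, d_attn, rng)
        self.w_o = rand_matrix(d_attn, d_stream, rng)

    def num_params(self) -> int:
        return sum(len(w) * len(w[0]) for w in (self.w_q, self.w_k, self.w_v, self.w_o))


def joint_attention(visual: Matrix, text: Matrix,
                    vis: StreamProjections, txt: StreamProjections) -> tuple[Matrix, Matrix]:
    """Single-head self-attention over the concatenated visual + text token sequence."""
    # Each modality uses its own projections; `+` concatenates rows (visual first, then text).
    q = matmul(visual, vis.w_q) + matmul(text, txt.w_q)
    k = matmul(visual, vis.w_k) + matmul(text, txt.w_k)
    v = matmul(visual, vis.w_v) + matmul(text, txt.w_v)
    scale = 1.0 / math.sqrt(len(q[0]))
    weights = [softmax([s * scale for s in row]) for row in matmul(q, transpose(k))]
    mixed = matmul(weights, v)
    n_vis = len(visual)
    # Split the sequence and project each part back to its own stream width.
    return matmul(mixed[:n_vis], vis.w_o), matmul(mixed[n_vis:], txt.w_o)


if __name__ == "__main__":
    rng = random.Random(0)
    D_VISUAL, D_TEXT, D_ATTN = 8, 4, 8  # hypothetical toy widths
    vis = StreamProjections(D_VISUAL, D_ATTN, rng)
    txt = StreamProjections(D_TEXT, D_ATTN, rng)
    vis_out, txt_out = joint_attention(rand_matrix(6, D_VISUAL, rng), rand_matrix(3, D_TEXT, rng), vis, txt)
    print(len(vis_out), len(vis_out[0]), len(txt_out), len(txt_out[0]))  # 6 8 3 4
    print(vis.num_params(), txt.num_params())  # 256 128
```

Because one side of every projection matrix equals its stream's width, the wider visual stream carries more weights; per-modality MLPs, whose size grows with the square of the width, widen that gap further.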

Many modern diffusion models use multiple pretrained language models to represent user prompts. In contrast, Mochi 1 encodes prompts with a single T5-XXL language model.

Mochi 1 uses full 3D attention to jointly reason over a context window of 44,520 video tokens. To localize each token, we extend learnable rotary position embeddings (RoPE) to 3 dimensions. The network learns a mixture of spatial and temporal axis frequencies end-to-end.
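The sketch below illustrates both numbers and mechanism under stated assumptions. For the token count it assumes a 163-frame, 480x848 clip (a 28x60x106 latent grid under the VAE factors above) and a hypothetical 2x2 spatial patchification, which gives 28 x 30 x 53 = 44,520 tokens. For the 3D RoPE it rotates each pair of a query or key vector by an angle that mixes the token's (t, y, x) position through per-axis frequencies; the frequency values here are made up and stand in for the learned parameters. This is a generic 3D-RoPE illustration, not Genmo's implementation.

```python
import math

Position = tuple[int, int, int]  # (t, y, x) on the token grid


def token_grid(latent_frames: int, latent_h: int, latent_w: int, patch: int = 2) -> list[Position]:
    """Position of every token after a hypothetical patch x patch spatial patchification."""
    grid_h, grid_w = latent_h // patch, latent_w // patch
    return [(t, y, x) for t in range(latent_frames) for y in range(grid_h) for x in range(grid_w)]


def rope_3d(vec: list[float], pos: Position, freqs: list[list[float]]) -> list[float]:
    """Rotate each pair (vec[2i], vec[2i+1]) by angle_i = sum over axes of pos[axis] * freqs[axis][i].

    Every pair mixes all three axes with its own frequencies, so training can shape
    pairs that track time, space, or a blend of both.
    """
    if len(vec) != 2 * len(freqs[0]):
        raise ValueError("vec must have two entries per frequency")
    out = list(vec)
    for i in range(len(freqs[0])):
        angle = sum(p * freqs[axis][i] for axis, p in enumerate(pos))
        c, s = math.cos(angle), math.sin(angle)
        a, b = vec[2 * i], vec[2 * i + 1]
        out[2 * i] = a * c - b * s
        out[2 * i + 1] = a * s + b * c
    return out


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


# Hypothetical stand-ins for learned frequencies: rows are axes (t, y, x),
# columns are the 4 rotated pairs of an 8-dimensional head.
FREQS = [
    [0.50, 0.00, 0.10, 0.02],
    [0.00, 0.70, 0.10, 0.03],
    [0.00, 0.70, 0.20, 0.01],
]

if __name__ == "__main__":
    print(len(token_grid(28, 60, 106)))  # 44520 = 28 * 30 * 53
    q = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
    k = [0.5, -1.0, 2.0, 0.0, 1.5, 1.0, -0.5, 3.0]
    # Same relative offset (1, 2, 3) at two absolute positions gives the same score.
    print(dot(rope_3d(q, (1, 2, 3), FREQS), rope_3d(k, (0, 0, 0), FREQS)))
    print(dot(rope_3d(q, (4, 6, 9), FREQS), rope_3d(k, (3, 4, 6), FREQS)))
```

Because the angle is linear in position, the attention score between two tokens depends only on their relative offset in time and space, which is what lets a single embedding scheme localize tokens along all three axes.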

Mochi benefits from some of the latest improvements in language model scaling, including SwiGLU feedforward layers, query-key normalization for enhanced stability, and sandwich normalization to control internal activations.
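For readers unfamiliar with these components, here are minimal plain-Python sketches of their common textbook forms: SwiGLU as W_down(SiLU(W_gate x) * W_up x), query-key normalization as RMS-normalizing q and k before the scaled dot product, and sandwich normalization as a norm both before and after a sublayer inside the residual. Learnable gains, biases and Mochi's actual layer wiring are omitted; this is an illustration of the general techniques, not Genmo's code.

```python
import math

Vector = list[float]
Matrix = list[list[float]]  # rows = output features, columns = input features


def linear(x: Vector, weight: Matrix) -> Vector:
    """y = W x for a weight matrix stored as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight]


def silu(x: float) -> float:
    return x / (1.0 + math.exp(-x))


def rms_norm(x: Vector, eps: float = 1e-6) -> Vector:
    """RMSNorm without the learnable gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v / rms for v in x]


def swiglu_ffn(x: Vector, w_gate: Matrix, w_up: Matrix, w_down: Matrix) -> Vector:
    """SwiGLU feedforward: SiLU-gated branch times linear branch, then down-projection."""
    gated = [silu(g) * u for g, u in zip(linear(x, w_gate), linear(x, w_up))]
    return linear(gated, w_down)


def qk_norm_score(q: Vector, k: Vector) -> float:
    """Attention logit with query-key normalization.

    Each RMS-normalized vector has L2 norm at most sqrt(d), so the logit stays within
    sqrt(d) however large the raw activations grow, which keeps the softmax stable.
    """
    qn, kn = rms_norm(q), rms_norm(k)
    return sum(a * b for a, b in zip(qn, kn)) / math.sqrt(len(q))


def sandwich_block(x: Vector, sublayer) -> Vector:
    """Sandwich normalization: normalize the sublayer's input and output, then add the residual."""
    y = rms_norm(sublayer(rms_norm(x)))
    return [a + b for a, b in zip(x, y)]


if __name__ == "__main__":
    # Hypothetical toy weights: 2 inputs, 3 hidden units, 2 outputs.
    w_gate = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.2]]
    w_up = [[0.3, 0.1], [-0.2, 0.4], [0.2, 0.2]]
    w_down = [[0.1, 0.2, 0.3], [-0.3, 0.1, 0.2]]
    print(sandwich_block([1.0, -2.0], lambda h: swiglu_ffn(h, w_gate, w_up, w_down)))
    print(qk_norm_score([1.0, 2.0, 3.0], [1000.0, -5.0, 2.0]))
```

The common thread is bounding activations: the post-sublayer norm caps what each block adds to the residual stream, and QK normalization caps attention logits.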

A technical paper will follow that will provide more details to facilitate advances in video generation.

Mochi 1 Installation Process

- Clone the repository:

git clone https://github.com/genmoai/models

cd models

- Install the dependencies:

pip install uv

uv venv .venv

source .venv/bin/activate

uv pip install -e .

- Download the model weights: Download the weights from Hugging Face or via the magnet link, and save them to a local folder.

Usage Process

- Launch the user interface:

python3 -m mochi_preview.gradio_ui --model_dir "<path_to_downloaded_directory>"

Replace <path_to_downloaded_directory> with the directory where the model weights are located.

- Generate a video from the command line:

python3 -m mochi_preview.infer --prompt "A hand with delicate fingers picks up a bright yellow lemon from a wooden bowl filled with lemons and sprigs of mint against a peach-colored background. The hand gently tosses the lemon up and catches it, showcasing its smooth texture. A beige string bag sits beside the bowl, adding a rustic touch to the scene. Additional lemons, one halved, are scattered around the base of the bowl. The even lighting enhances the vibrant colors and creates a fresh, inviting atmosphere." --seed 1710977262 --cfg_scale 4.5 --model_dir "<path_to_downloaded_directory>"

Replace <path_to_downloaded_directory> with the directory where the model weights are located.
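If you want to script several generations, a small helper can assemble the command-line call shown above. It uses only the flags that appear in that command and assumes nothing else about the mochi_preview.infer CLI; the prompt and path in the usage line are hypothetical. The --cfg_scale flag is most likely the classifier-free guidance scale; assuming the textbook formulation, guidance moves the prediction from the unconditional output toward the prompt-conditioned one by that factor, sketched in classifier_free_guidance below. Whether Mochi's sampler applies it exactly this way is an assumption.

```python
import shlex
import subprocess


def build_infer_command(prompt: str, model_dir: str, seed: int = 1710977262,
                        cfg_scale: float = 4.5, python: str = "python3") -> list[str]:
    """Return the argv list for the command-line generation call shown above."""
    return [
        python, "-m", "mochi_preview.infer",
        "--prompt", prompt,
        "--seed", str(seed),
        "--cfg_scale", str(cfg_scale),
        "--model_dir", model_dir,
    ]


def run_infer(prompt: str, model_dir: str, **kwargs) -> None:
    """Launch one generation; raises CalledProcessError if the CLI exits non-zero."""
    subprocess.run(build_infer_command(prompt, model_dir, **kwargs), check=True)


def classifier_free_guidance(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Textbook CFG, element-wise: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    cmd = build_infer_command("A hand tosses a lemon", "/path/to/weights")
    # Print a shell-ready version; calling run_infer(...) would actually start generation.
    print(shlex.join(cmd))
```

Passing an argument list (rather than one shell string) avoids quoting problems with long prompts that contain commas and quotes.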

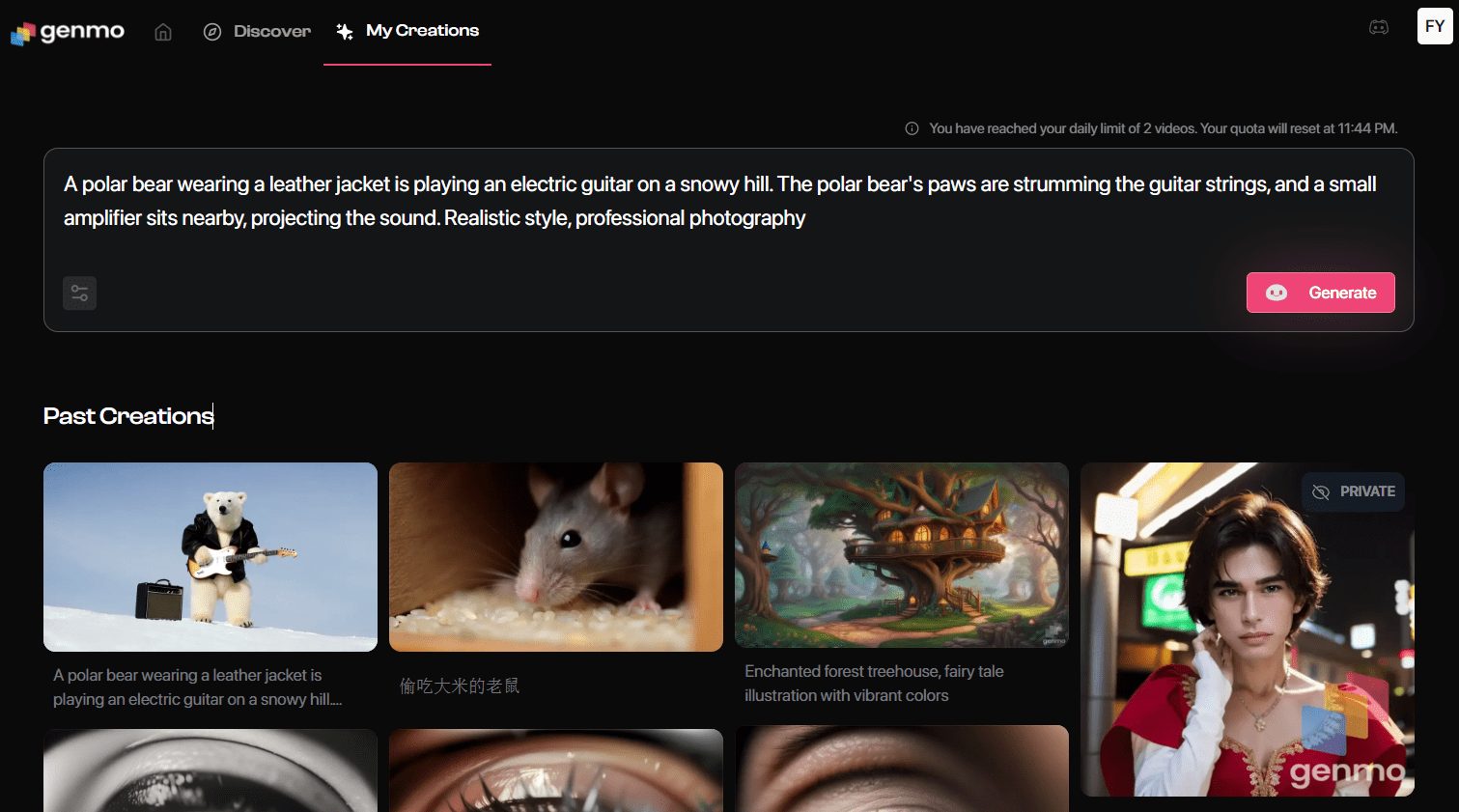

Experience Mochi 1 online

- Go to the generation page: After logging in, click "Playground" to enter the video generation page.

- Enter a prompt: Describe the video you want to generate in the prompt box. For example, "Movie trailer for the adventures of a 30 year old astronaut in a red wool motorcycle helmet".

- Choose settings: Select the video style, resolution, and other settings as needed.

- Generate Video: Click the "Generate" button and the system will generate the video according to your prompts.

- Download & Share: Once generated, the video can be previewed and downloaded locally, or shared directly to social media platforms.

Advanced Features

- Custom Models: Users can download the Mochi 1 model weights and train or fine-tune them locally.

- Community Interaction: Join Genmo's Discord community to exchange experiences and share generated videos with other users.

- API Interface: Developers can use the API interface provided by Genmo to integrate the video generation functionality into their applications.

Common Problems

- Video generation failures: Make sure the prompt is clear and specific, and avoid vague or overly complex descriptions.

- Login Issues: If you are unable to log in, please check your internet connection or try a different browser.

- Model Download: Visit Genmo's GitHub page to download the latest Mochi 1 model weights.

© Copyright Notice

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
