MoBA: Kimi's Block Attention Mechanism for Long-Context Processing in Large Language Models

General Introduction

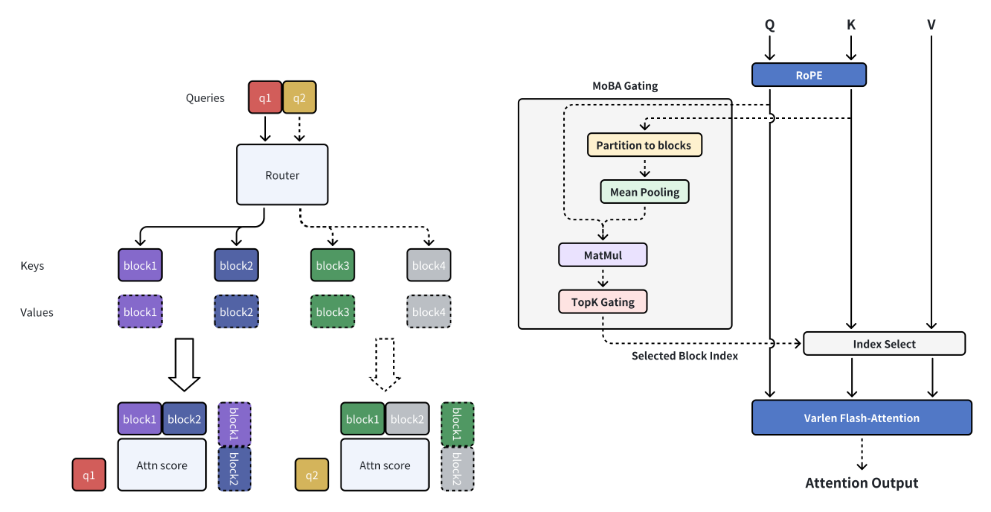

MoBA (Mixture of Block Attention) is an attention mechanism developed by MoonshotAI for large language models (LLMs) that process long contexts. It divides the full context into blocks, and each query token learns to attend to the most relevant key-value (KV) blocks. A parameter-free top-k gating mechanism selects those blocks, so attention is computed only over the most informative parts of the context, which reduces the cost of attention on long sequences. MoBA can also switch between full-attention and sparse-attention modes, which lets a model balance output quality against efficiency. The technique has been deployed to serve Kimi's long-context requests.

Feature List

- Block sparse attention: Divides the full context into blocks; each query token learns to attend to the most relevant KV blocks.

- Parameter-free gating mechanism: Uses a parameter-free top-k gate to select the most relevant blocks for each query token.

- Full/sparse attention switching: Switches seamlessly between full and sparse attention modes.

- Efficient computation: Improves computational efficiency on long-context tasks by restricting attention to the selected blocks.

- Open source: The full source code is available for direct use and further development.
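As a rough illustration of why restricting each query to a few blocks saves work, the sketch below counts the query-key pairs scored under full causal attention and under a block-sparse scheme. The function names, the block size, and the top-k value are hypothetical and chosen only for illustration. The sparse count is an upper bound that assumes each query attends to at most top_k whole blocks, and it ignores the small extra cost of the gate itself.

```python
def full_causal_pairs(seq_len: int) -> int:
    """Query-key pairs scored by full causal attention: 1 + 2 + ... + seq_len."""
    return seq_len * (seq_len + 1) // 2


def block_sparse_pairs(seq_len: int, block_size: int, top_k: int) -> int:
    """Upper bound on query-key pairs when each query attends to at most top_k blocks."""
    total = 0
    for pos in range(seq_len):
        visible = pos + 1  # keys at or before this position
        total += min(visible, top_k * block_size)
    return total


if __name__ == "__main__":
    # Illustrative values only; they are not taken from MoBA's configuration.
    n, block, k = 32_768, 512, 4
    full = full_causal_pairs(n)
    sparse = block_sparse_pairs(n, block, k)
    print(f"full: {full:,}  sparse (upper bound): {sparse:,}  ratio: {full / sparse:.1f}x")
```

The full count grows quadratically with sequence length, while the sparse bound grows linearly once the sequence is longer than top_k blocks, so the gap widens as the context gets longer.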

Usage Guide

Installation

- Create a virtual environment:

conda create -n moba python=3.10

conda activate moba

- Install MoBA and its dependencies from the root of the cloned repository:

pip install .

Quick Start

MoBA ships with an implementation that is compatible with transformers. Use the --attn parameter to select the attention backend, either moba or moba_naive.

python3 examples/llama.py --model meta-llama/Llama-3.1-8B --attn moba
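For readers who want to wire the same two flags into their own script, here is a minimal sketch of a command-line parser that accepts them. It is a hypothetical illustration, not the contents of examples/llama.py: only the --model and --attn flags and the moba and moba_naive values come from the command above, and the build_parser name, defaults, and help strings are assumptions.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser that mirrors the two flags used in the quick-start command."""
    parser = argparse.ArgumentParser(description="Run a model with a chosen attention backend")
    parser.add_argument("--model", type=str, default="meta-llama/Llama-3.1-8B",
                        help="Hugging Face model identifier")
    parser.add_argument("--attn", type=str, default="moba",
                        choices=["moba", "moba_naive"],
                        help="attention backend to use")
    return parser


if __name__ == "__main__":
    # Parse an explicit argument list so the demo does not depend on sys.argv.
    args = build_parser().parse_args(["--attn", "moba_naive"])
    print(f"model={args.model} attn={args.attn}")
```

Restricting --attn with choices makes a mistyped backend name fail at startup rather than after the model has been downloaded.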

How each feature works

- Block sparse attention: For long contexts, MoBA divides the full context into blocks, and each query token learns to attend to the most relevant KV blocks instead of every token, which keeps long-sequence processing efficient.

- Parameter-free gating mechanism: MoBA's top-k gate adds no trainable parameters. It selects the most relevant blocks for each query token, so the model attends only to the most informative blocks.

- Full/sparse attention switching: MoBA is designed as a flexible alternative to full attention, so a model can switch between full and sparse attention modes and balance output quality against efficiency.

- Efficient computation: Together, these mechanisms reduce the computational cost of long-context tasks, including complex reasoning tasks.
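The mechanisms listed above can be sketched for a single attention head in NumPy. This is a slow reference sketch under stated assumptions, not MoBA's actual kernel: the article does not say how blocks are scored, so the code assumes each block is summarised by the mean of its keys, that a query always attends to its own block, and that future blocks are never selected. The function names are hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def full_causal_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Standard causal attention, used as the baseline for comparison."""
    n, d = q.shape
    causal = np.tril(np.ones((n, n), dtype=bool))
    scores = np.where(causal, q @ k.T / np.sqrt(d), -np.inf)
    return softmax(scores) @ v


def block_topk_attention(q, k, v, block_size: int, top_k: int) -> np.ndarray:
    """Causal attention restricted to the top_k blocks chosen per query."""
    n, d = q.shape
    n_blocks = (n + block_size - 1) // block_size
    block_of = np.arange(n) // block_size  # block index of each position

    # Parameter-free gate (assumption): score = query . mean of the block's keys.
    block_keys = np.stack([k[b * block_size:(b + 1) * block_size].mean(axis=0)
                           for b in range(n_blocks)])
    gate = q @ block_keys.T  # shape (n, n_blocks)

    # Block-level causality: a query cannot select blocks that start after it.
    future = np.arange(n_blocks)[None, :] > block_of[:, None]
    gate = np.where(future, -np.inf, gate)
    # Each query always keeps its own block.
    gate[np.arange(n), block_of] = np.inf

    # Pick the top_k highest-scoring blocks for every query.
    k_eff = min(top_k, n_blocks)
    chosen = np.argsort(-gate, axis=-1)[:, :k_eff]
    selected = np.zeros((n, n_blocks), dtype=bool)
    np.put_along_axis(selected, chosen, True, axis=-1)
    selected &= ~future  # drop future blocks picked only to fill the quota

    # Expand the block choice to tokens and apply the token-level causal mask.
    token_mask = selected[:, block_of] & np.tril(np.ones((n, n), dtype=bool))
    scores = np.where(token_mask, q @ k.T / np.sqrt(d), -np.inf)
    return softmax(scores) @ v


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q, k, v = (rng.standard_normal((16, 8)) for _ in range(3))
    sparse = block_topk_attention(q, k, v, block_size=4, top_k=2)
    dense = block_topk_attention(q, k, v, block_size=4, top_k=4)
    print(sparse.shape, np.allclose(dense, full_causal_attention(q, k, v)))
```

In this sketch, setting top_k to the number of blocks selects every visible block, so the output matches full causal attention. That is one simple way to picture the switch between sparse and full modes.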

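Sample code

The following sample loads a model with MoBA through transformers. It follows the pattern of examples/llama.py; the register_moba and MoBAConfig names and the chunk-size and top-k values are assumptions that should be checked against the repository.

from transformers import AutoModelForCausalLM, AutoTokenizer

from moba import register_moba, MoBAConfig  # assumed exports of the moba package

register_moba(MoBAConfig(4096, 12))  # assumed arguments: chunk size and top-k, illustrative values

tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-3.1-8B")

model = AutoModelForCausalLM.from_pretrained("meta-llama/Llama-3.1-8B", attn_implementation="moba")

inputs = tokenizer("Sample long-context text", return_tensors="pt")

outputs = model(**inputs)

print(outputs.logits.shape)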


© Copyright: This article is the property of AI Sharing Circle. Please do not reproduce it without permission.
