MMAudio: A Video-to-Audio Tool That Generates Synchronized Sound Effects and Soundtracks Through Multimodal Joint Training

General Introduction

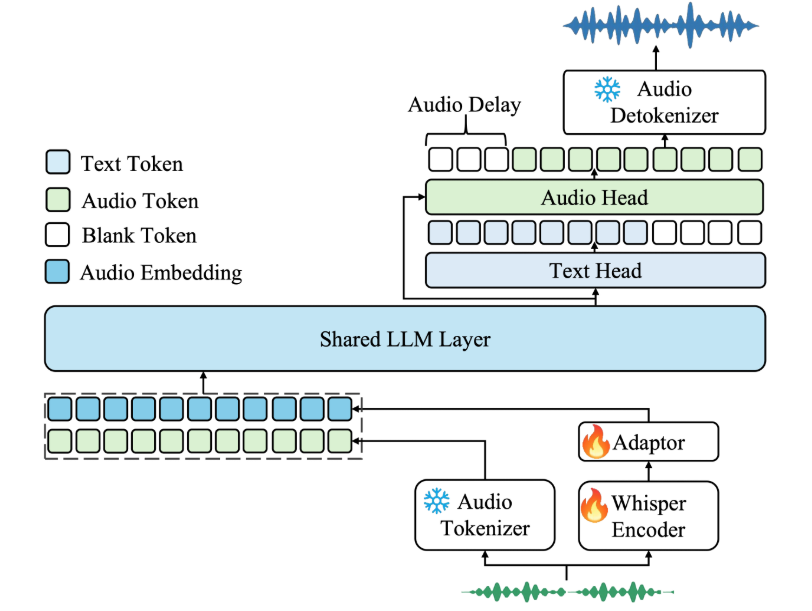

MMAudio is an open-source project that generates high-quality, synchronized audio through multimodal joint training. Developed by Ho Kei Cheng and collaborators at the University of Illinois Urbana-Champaign and Sony, it generates audio that is synchronized with a video input, a text input, or both. The core innovation of MMAudio is its multimodal joint training approach, which lets a single model be trained on a wide range of audio-video and audio-text datasets. In addition, a synchronization module aligns the generated audio with the video frames. The project is still under construction: single-case inference already works, and the training code is to be added later. Related workflows can be found by searching the OpenArt site.

Function List

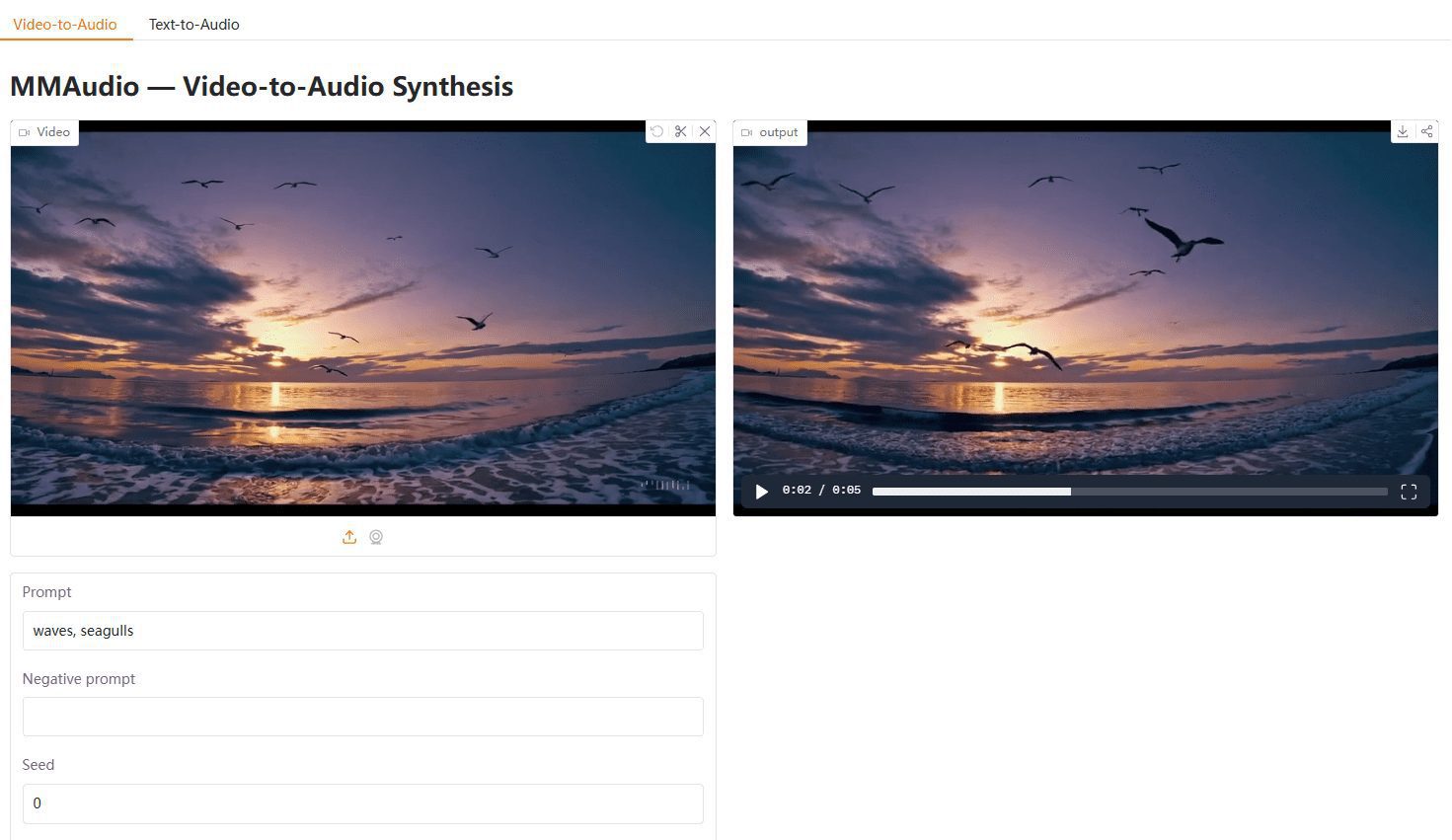

- Video-to-audio generation: Generates audio synchronized with the input video.

- Text-to-audio generation: Generates audio from the input text.

- Joint multimodal training: Trains jointly on audio-video and audio-text datasets.

- Synchronization module: Aligns the generated audio with the video frames.

- Open source: The full source code is available, which makes it easier for users to build on the project.

- Pre-trained models: Provides several pre-trained models that can be used directly.

- Demo scripts: Provides several demo scripts to help users get started quickly.

Usage Guide

Installation process

- Environment setup: A miniforge environment is recommended. Install Python 3.9+ and PyTorch 2.5.1+, along with the matching torchvision and torchaudio builds.

- Install PyTorch: Run the following command to install or upgrade PyTorch, torchvision, and torchaudio. This example uses the CUDA 11.8 wheel index; choose the index that matches your CUDA version:

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118 --upgrade

- Clone the repository: Clone the MMAudio repository using the following command:

git clone https://github.com/hkchengrex/MMAudio.git

- Installing MMAudio: Go to the MMAudio directory and run the install command:

cd MMAudio

pip install -e .
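After installation, it can be useful to confirm that the environment meets the version requirements listed above (Python 3.9+ and PyTorch 2.5.1+). The following is a minimal sketch, not part of MMAudio; the helper names `parse_version` and `meets_minimum` are hypothetical and introduced here only for illustration.

```python
import re
import sys


def parse_version(version: str) -> tuple:
    """Return the leading numeric components of a version string as ints.

    Local build tags such as "+cu118" are dropped, so "2.5.1+cu118" -> (2, 5, 1).
    """
    public = version.split("+", 1)[0]
    parts = []
    for piece in public.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break  # stop at non-numeric components such as "dev2024"
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(version: str, minimum: str) -> bool:
    """True if `version` is at least `minimum`, comparing numeric components."""
    return parse_version(version) >= parse_version(minimum)


if __name__ == "__main__":
    # Python requirement taken from the installation notes above.
    print("Python 3.9+:", sys.version_info[:2] >= (3, 9))
    try:
        import torch  # only available once PyTorch has been installed
        print("PyTorch 2.5.1+:", meets_minimum(torch.__version__, "2.5.1"))
    except ImportError:
        print("PyTorch is not installed in this environment.")
```

If either check reports `False`, reinstall the corresponding component before running the demo.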

Usage

- Run the demo script: MMAudio provides several demo scripts. To run the default large_44k model, execute the following command from the MMAudio directory:

python demo.py

- Input video or text: Supply a video file, a text prompt, or both, and MMAudio generates the corresponding synchronized audio.

- View results: The generated audio is synchronized with the input video frames and can be played back or used directly.
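The steps above can be scripted. The sketch below only assembles a command line for the demo script; it does not run anything. The flag names `--video`, `--prompt`, and `--duration` are assumptions not stated in this article, so verify them against the repository's README before relying on them. The function `build_demo_command` is a hypothetical helper.

```python
import shlex
from typing import List, Optional


def build_demo_command(
    video: Optional[str] = None,
    prompt: Optional[str] = None,
    duration: Optional[float] = None,
    script: str = "demo.py",
    python: str = "python",
) -> List[str]:
    """Assemble an argument list for the demo script.

    NOTE: the flag names below are assumed, not confirmed by this article.
    """
    command = [python, script]
    if video is not None:
        command += ["--video", video]            # assumed flag: input video path
    if prompt is not None:
        command += ["--prompt", prompt]          # assumed flag: text description
    if duration is not None:
        command += ["--duration", str(duration)]  # assumed flag: length in seconds
    return command


if __name__ == "__main__":
    cmd = build_demo_command(video="clip.mp4", prompt="rain on a tin roof", duration=8)
    # shlex.join quotes arguments so the line can be pasted into a shell.
    print(shlex.join(cmd))
```

Passing the resulting list to `subprocess.run(command, check=True)` from inside the MMAudio directory would launch the script; keeping the arguments as a list avoids shell-quoting problems with prompts that contain spaces.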

Detailed workflow

- Video-to-audio generation: Provide a video file as input and run the demo script; MMAudio generates audio synchronized with the video.

- Text-to-audio generation: Provide text as input and run the corresponding script; MMAudio generates the matching audio.

- Joint multimodal training: Once the training code is released, users will be able to run multimodal joint training on their own datasets to improve generation quality.

- Synchronization module: The module automatically aligns the generated audio with the video frames so that audio and video stay in sync.
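To make "aligned with the video frames" concrete, the sketch below maps a video frame index to the range of audio samples that plays while that frame is on screen. This is only illustrative arithmetic, not MMAudio's synchronization module; the function name is hypothetical, and the 44,100 Hz default sample rate is an assumption inferred from the "44k" in the model name.

```python
import math
from typing import Tuple


def frame_to_sample_range(
    frame_index: int, fps: float, sample_rate: int = 44100
) -> Tuple[int, int]:
    """Return the half-open [start, end) audio sample range covered by one video frame.

    sample_rate defaults to 44100 Hz, an assumed value (see the note above).
    """
    if frame_index < 0 or fps <= 0 or sample_rate <= 0:
        raise ValueError("frame_index must be >= 0; fps and sample_rate must be > 0")
    # A frame starts at frame_index / fps seconds; convert that time to samples.
    start = math.floor(frame_index * sample_rate / fps)
    end = math.floor((frame_index + 1) * sample_rate / fps)
    return start, end


if __name__ == "__main__":
    # At 25 fps and 44.1 kHz, each frame spans 44100 / 25 = 1764 samples.
    print(frame_to_sample_range(10, fps=25))
```

A sound event that belongs to frame 10 of a 25 fps clip should therefore begin near sample 17640 in the generated waveform under these assumptions.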

Notes

- Environment requirements: MMAudio has so far been tested only on Ubuntu; other operating systems may require additional configuration.

- Dependency versions: Make sure the installed dependency versions match the project's requirements to avoid compatibility issues.

- Pre-trained models: Pre-trained models are downloaded automatically when the demo script runs. Alternatively, users can download them manually and place them in the specified directory.

With these steps, users can quickly install and use MMAudio to generate high-quality synchronized audio. Detailed usage help and demo scripts will help users better understand and operate the tool.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
