Apple unveils new MM1 AI model

Apple researchers have just published a new paper describing MM1, a family of multimodal AI models that combine image and language understanding in a single model.

Details

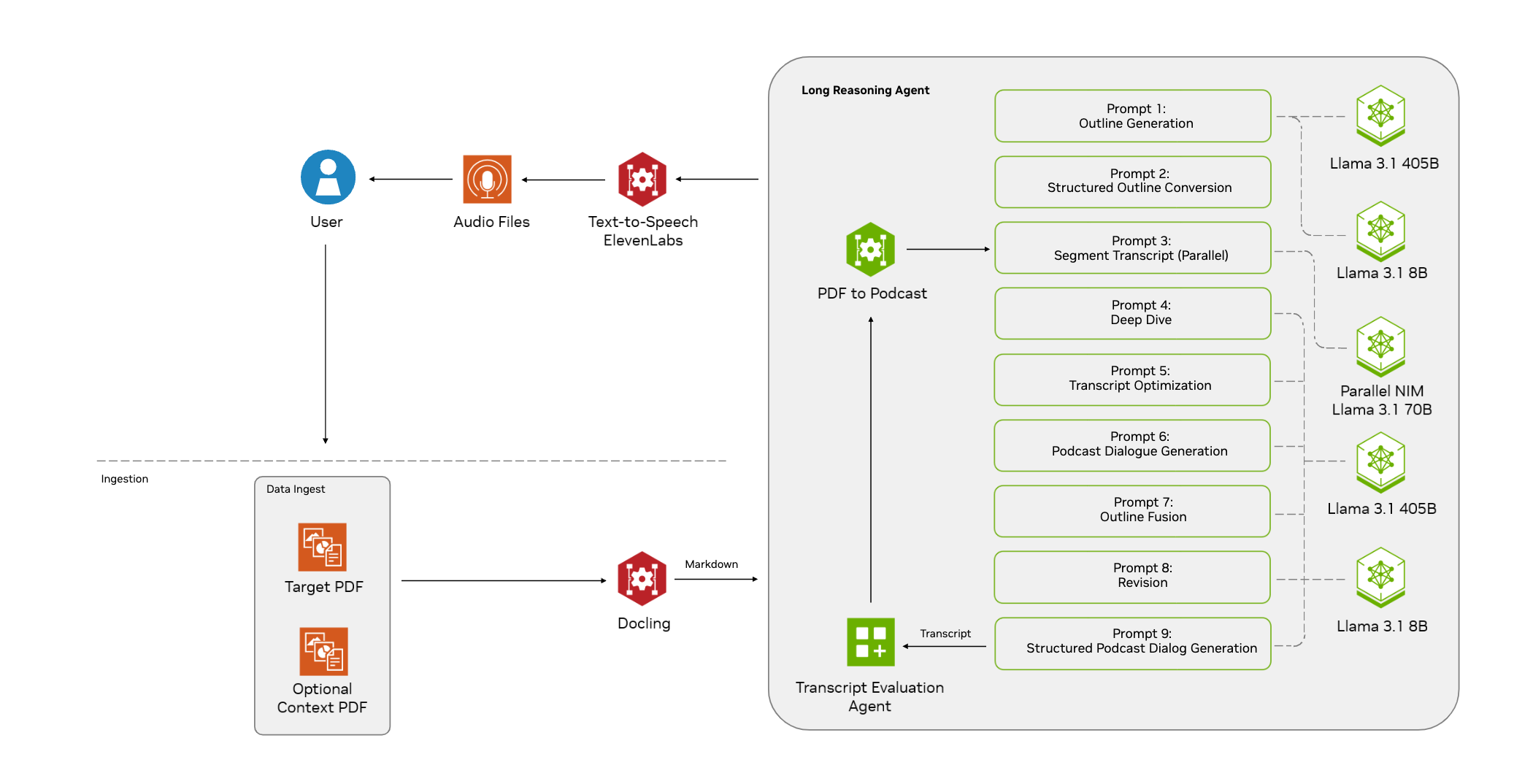

MM1 is trained on a carefully curated mixture of image-caption pairs, interleaved image-text documents, and text-only data.

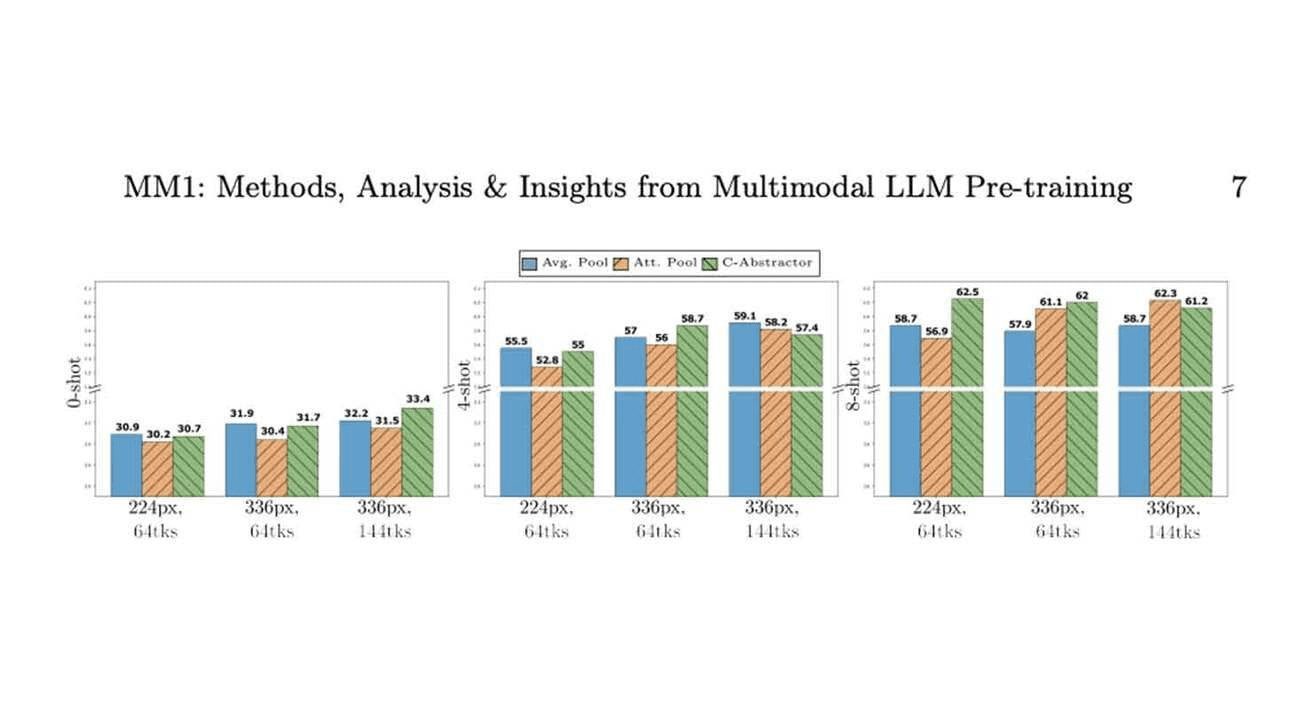

The largest model, with 30B parameters, shows strong few-shot (in-context) learning and can reason across multiple images.

Study findings

Scaling up image processing (the image encoder and the input image resolution) has the greatest impact on performance.

On benchmarks, MM1 is competitive with state-of-the-art multimodal models such as GPT-4V and Gemini Pro.

Why it matters: Apple's detailed, low-key publication of a new model is a departure from its usual secrecy, and a big win for open source. Now that a powerful new model is official, is Siri finally ready for an upgrade?

© Copyright notice

Article copyright AI Sharing Circle. All rights reserved; please do not reproduce without permission.