How to set up Mixtral-8x22B | Getting Started with Base Model Prompting [Translation]

Mixtral 8x22B is now available. It is the first open-source GPT-4-class model that can be used commercially.

However, it is not an instruction-tuned model, but a base model.

This means we need to prompt it in a whole new way.

While this is more challenging, it is not impossible to achieve.

A concise guide to base model prompting:

Prompting a base model is very different from prompting an instruction-tuned model like ChatGPT. Think of base models as super auto-completion tools. They are not designed to hold conversations; rather, they are trained to complete any text you provide.

This distinction makes prompting more difficult - and opens up more possibilities!

For example, a base model is far more expressive than the models you're familiar with from ChatGPT. You may have noticed that answers generated by ChatGPT are often easy to recognize because of the heavy fine-tuning it has undergone. Basically, its style and behavior are fixed. It's really hard to get it to break out of the patterns it was tuned on. A base model, by contrast, holds a huge range of possibilities - just waiting for you to discover them.

How to think about prompting a base model:

When you prompt a base model, you should think less about how to describe what you want the model to do, and more about showing it what you want it to do. You need to really get into the mind of the model and think about how it thinks.

A base model is essentially a reflection of its training data. If you can understand this, you can work wonders.

For example, if you want the model to write a news article called "The Impact of Artificial Intelligence in Healthcare," you should consider where it might have encountered similar news-style articles in its training data. Probably on news sites, right?

With this in mind, you can build a prompt around this idea by including elements that a real article page might contain. For example:

Home | Headlines | Opinion

Artificial Intelligence Times

--

The Impact of Artificial Intelligence in Healthcare

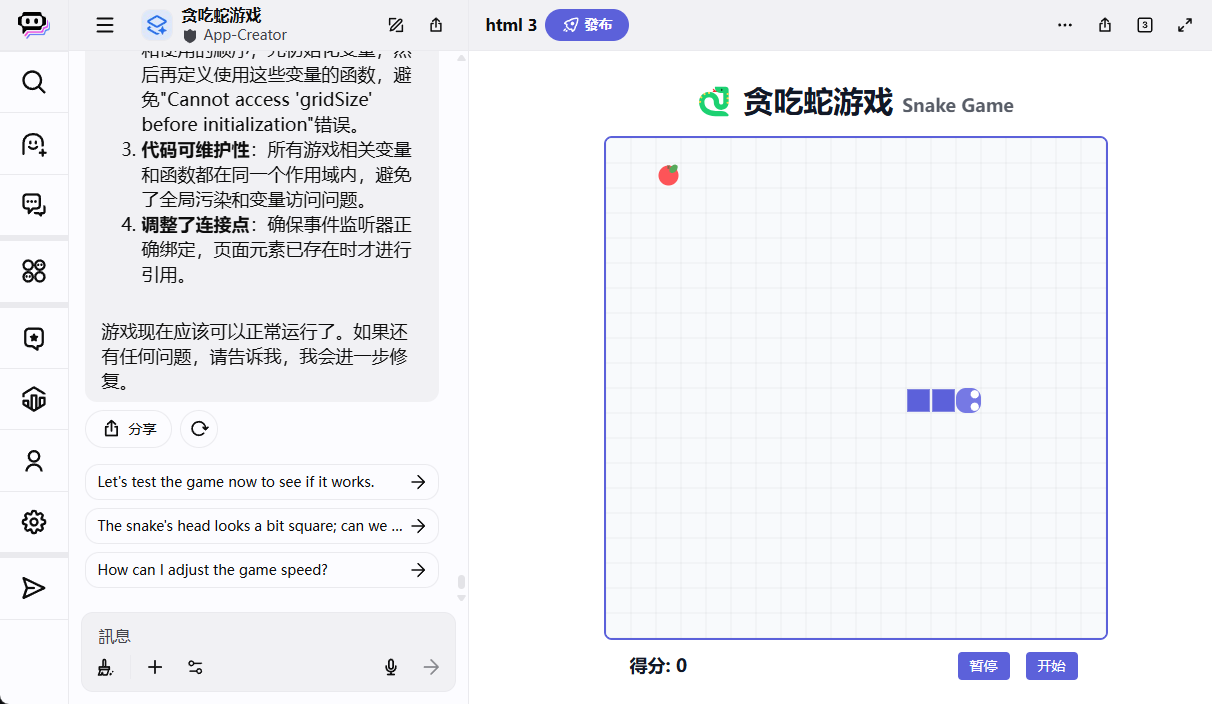

You can see in the screenshot below that by placing the model in a context similar to what it might have seen in its training data, it ended up writing an article!

![How to set up Mixtral-8x22B | Getting Started with Base Model Prompting [Translation]-1](https://aisharenet.com/wp-content/uploads/2024/04/fde4404668566a4.png)
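The page-like prompt shown earlier can also be assembled in code. The following is only a minimal sketch: the function name `build_article_prompt` and its parameters are assumptions made for illustration, and the layout simply mirrors the example prompt rather than any required format.

```python
def build_article_prompt(site_name: str, nav_items: list[str], title: str) -> str:
    """Lay out a prompt that resembles the top of a news article page.

    Hypothetical helper: the names and layout are illustrative only.
    """
    nav_bar = " | ".join(nav_items)
    # Blank lines between elements mimic the example layout; the trailing
    # blank line leaves the model positioned to begin the article body.
    return f"{nav_bar}\n\n{site_name}\n\n--\n\n{title}\n\n"


prompt = build_article_prompt(
    site_name="Artificial Intelligence Times",
    nav_items=["Home", "Headlines", "Opinion"],
    title="The Impact of Artificial Intelligence in Healthcare",
)
print(prompt)
```

The resulting string is what you would send to the base model as the text to complete; there is no instruction anywhere in it.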

But this method is not perfect. The article does not flow well enough, and there is still no guarantee that the model will generate an article at all.

So how do we improve reliability?

By adding examples.

Base models respond very well to few-shot prompts. Let's add a few examples to the prompt. To make this quick, I'll take a couple of articles from the Internet and add them to the top of the prompt (don't blame me - this is just a demonstration and won't go into production!).

![How to set up Mixtral-8x22B | Getting Started with Base Model Prompting [Translation]-1](https://aisharenet.com/wp-content/uploads/2024/04/035c0c2554e0ca4-1.png)

As you can see, with these few examples, the article is significantly improved.
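A few-shot prompt of this kind can be sketched as complete example pages stacked above an unfinished one. The exact layout used in the screenshot is not reproduced here; the helper name `build_few_shot_prompt`, the page separator, and the placeholder example texts below are all assumptions for illustration.

```python
def build_few_shot_prompt(
    examples: list[tuple[str, str]],
    title: str,
    nav: str = "Home | Headlines | Opinion",
    site: str = "Artificial Intelligence Times",
) -> str:
    """Stack finished example pages above an unfinished page for `title`.

    Hypothetical helper: `examples` holds (title, body) pairs.
    """

    def page(page_title: str, body: str = "") -> str:
        # Every page repeats the same header so the model sees a clear pattern.
        return f"{nav}\n\n{site}\n\n--\n\n{page_title}\n\n{body}"

    pages = [page(t, b.strip()) for t, b in examples]
    # The final page has a title but no body: that is what the model completes.
    pages.append(page(title))
    # Three newlines between pages is an arbitrary separator chosen for this sketch.
    return "\n\n\n".join(pages)


few_shot = build_few_shot_prompt(
    examples=[
        ("Placeholder Title One", "Placeholder body of the first example article."),
        ("Placeholder Title Two", "Placeholder body of the second example article."),
    ],
    title="The Impact of Artificial Intelligence in Healthcare",
)
print(few_shot)
```

In practice the placeholder pairs would be replaced with real articles whose style you want the model to imitate.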

Let's talk about parsing:

One of the main challenges when working with base models is parsing their output. With instruction-tuned models, you can simply tell them to output a specific format. For example, you can ask them to "answer in JSON", which is very easy to parse. With base models, this is not so easy.

Here's a technique I use often called 'model guidance'.

Suppose you need to generate a list of article titles. You can almost force the model to respond in list format by describing what you need and then ending the prompt with the first two characters of an array. Here is an example:

![How to set up Mixtral-8x22B | Getting Started with Base Model Prompting [Translation]-3](https://aisharenet.com/wp-content/uploads/2024/04/f070eddc9fc6b0d.png)

See how I ended the prompt with '["'. This simple trick lets you generate parsable data with a base model.
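On the parsing side, the completion comes back without the '["' that ended the prompt, so the prefix has to be re-attached before parsing. Here is a minimal sketch using Python's standard `json` module; the helper names and the sample completion string are assumptions for illustration, not output from a real run.

```python
import json

LIST_PREFIX = '["'  # the two characters appended to the end of the prompt


def add_list_prefix(prompt: str) -> str:
    """End the prompt with the opening of a JSON array of strings."""
    return prompt.rstrip() + "\n\n" + LIST_PREFIX


def parse_list_completion(completion: str) -> list[str]:
    """Re-attach the prefix and parse the first JSON array in the output.

    Raises json.JSONDecodeError if the model did not produce a valid array.
    """
    text = LIST_PREFIX + completion
    # raw_decode parses the first JSON value and ignores anything after it,
    # which matters because a base model may keep writing past the "]".
    value, _end = json.JSONDecoder().raw_decode(text)
    return value


# Hypothetical completion text, written by hand for this example.
sample = 'AI in Hospitals", "Robots and Nurses"]\n\nMore text the model kept writing'
print(parse_list_completion(sample))
```

Because a base model gives no guarantee about format, it is worth catching `json.JSONDecodeError` and retrying the generation when parsing fails.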

More advanced methods:

What we have covered so far is only a few simple ways of using a base model. There are many more effective techniques that can help us achieve better results.

For example, one way to do this is to make the model think it's a Python interpreter.

This may not sound intuitive, but it works very well in practice.

For example, suppose you want a prompt that shortens a piece of text. The prompt in the screenshot below is a practical application of this method.

![How to set up Mixtral-8x22B | Getting Started with Base Model Prompting [Translation]-4](https://aisharenet.com/wp-content/uploads/2024/04/36cb1ab19c57b95.png)

As you can see, we actually create a prompt that simulates the Python interpreter and have the model simulate the output of the interpreter. Since the function we're calling is to shorten text, the model gives a short version of the text!
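One way such an interpreter-style prompt might be put together is sketched below. This is not the exact prompt from the screenshot: the function `shorten_text`, the module `text_utils`, and both helper names are hypothetical, and nothing is actually executed by Python on the model side. The prompt only looks like an interactive session, and the model fills in what the "interpreter" would print.

```python
def build_interpreter_prompt(text: str, func_name: str = "shorten_text") -> str:
    """Build a prompt that looks like a Python interactive session.

    `func_name` and the `text_utils` module are made-up names; they only
    need to look plausible to the model.
    """
    lines = [
        f">>> from text_utils import {func_name}",
        # repr() quotes and escapes the text the way the interpreter would show it.
        f">>> {func_name}({text!r})",
    ]
    # End with an opening quote so the model continues with a string result.
    return "\n".join(lines) + "\n'"


def extract_result(completion: str) -> str:
    """Take the first output line and drop the closing quote, if present."""
    first_line = completion.split("\n", 1)[0].rstrip()
    return first_line[:-1] if first_line.endswith("'") else first_line


prompt = build_interpreter_prompt("A long paragraph that should be shortened.")
print(prompt)
```

The extractor is deliberately simple and assumes the result fits on one line and ends with a single quote; results containing apostrophes or newlines would need more careful handling.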

Clearly, prompting a base model is very different from prompting a chat or instruct model. I hope this is helpful to everyone using Mixtral 8x22B!

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
