Mistral Releases Open-Source Mistral Small 3: Performance Rivals GPT-4o mini, Outpaces Llama 3.3 70B

Mistral Small 3: Apache 2.0 license, 81% MMLU, 150 tokens/sec.

Today, Mistral AI launched Mistral Small 3, a latency-optimized 24-billion-parameter model, and released it under the Apache 2.0 license.

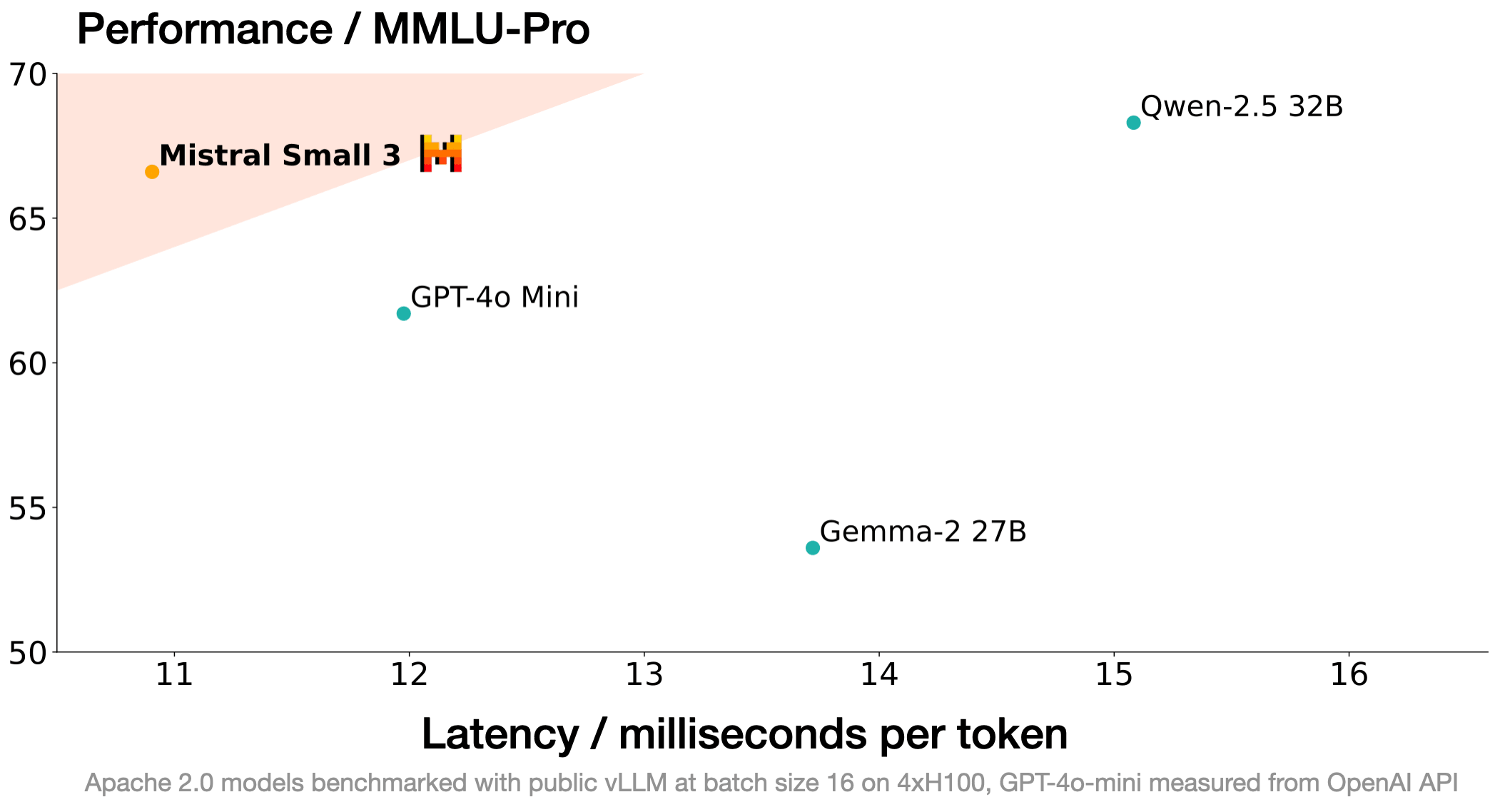

Mistral Small 3 is competitive with larger models such as Llama 3.3 70B and Qwen 32B, and is an excellent open-source alternative to opaque proprietary models such as GPT-4o mini. It matches the performance of Llama 3.3 70B Instruct while running more than 3 times faster on the same hardware.

Mistral Small 3 is released as both a pre-trained and an instruction-tuned model, aimed at the "80%" of generative AI tasks: those that require strong language and instruction-following capabilities with very low latency.

Mistral AI designed this new model to saturate performance at a size suitable for local deployment. In particular, Mistral Small 3 has far fewer layers than competing models, which substantially reduces the time per forward pass. With over 81% accuracy on MMLU and a generation speed of 150 tokens per second, Mistral Small 3 is, according to Mistral AI, the most efficient model in its class to date.
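The 150 tokens/second figure is a decoding throughput. As a rough illustration of what it means for responsiveness, the Python sketch below converts it into an average per-token latency and a total generation time. It assumes a constant decoding rate and ignores prompt processing, batching, and network overhead; the function names are hypothetical. The same arithmetic shows why a model that runs 3 times faster on the same hardware returns a reply in a third of the time.

```python
# Sketch: turn a decoding speed (tokens/s) into per-token latency and
# end-to-end generation time. Assumes a constant decoding rate and ignores
# prompt processing, batching, and network overhead.

def per_token_latency_ms(tokens_per_second: float) -> float:
    """Average time between generated tokens, in milliseconds."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return 1000.0 / tokens_per_second


def generation_time_s(num_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to generate num_tokens at a constant rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    speed = 150.0  # tokens/s, the figure quoted for Mistral Small 3
    print(f"{per_token_latency_ms(speed):.2f} ms per token")           # 6.67 ms per token
    print(f"{generation_time_s(300, speed):.1f} s for 300 tokens")      # 2.0 s for 300 tokens
    # A model running 3x slower on the same hardware takes 3x as long.
    print(f"{generation_time_s(300, speed / 3):.1f} s for 300 tokens")  # 6.0 s for 300 tokens
```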

Mistral AI is releasing both the pre-trained and the instruction-tuned checkpoints under the Apache 2.0 license. These checkpoints can serve as a powerful foundation for accelerating progress. Note that Mistral Small 3 was trained with neither reinforcement learning (RL) nor synthetic data, so it sits earlier in the model production pipeline than models such as DeepSeek R1 (a great and complementary piece of open-source technology!). It can serve as a strong base model for building up reasoning capabilities. Mistral AI looks forward to seeing how the open-source community adopts and customizes it.

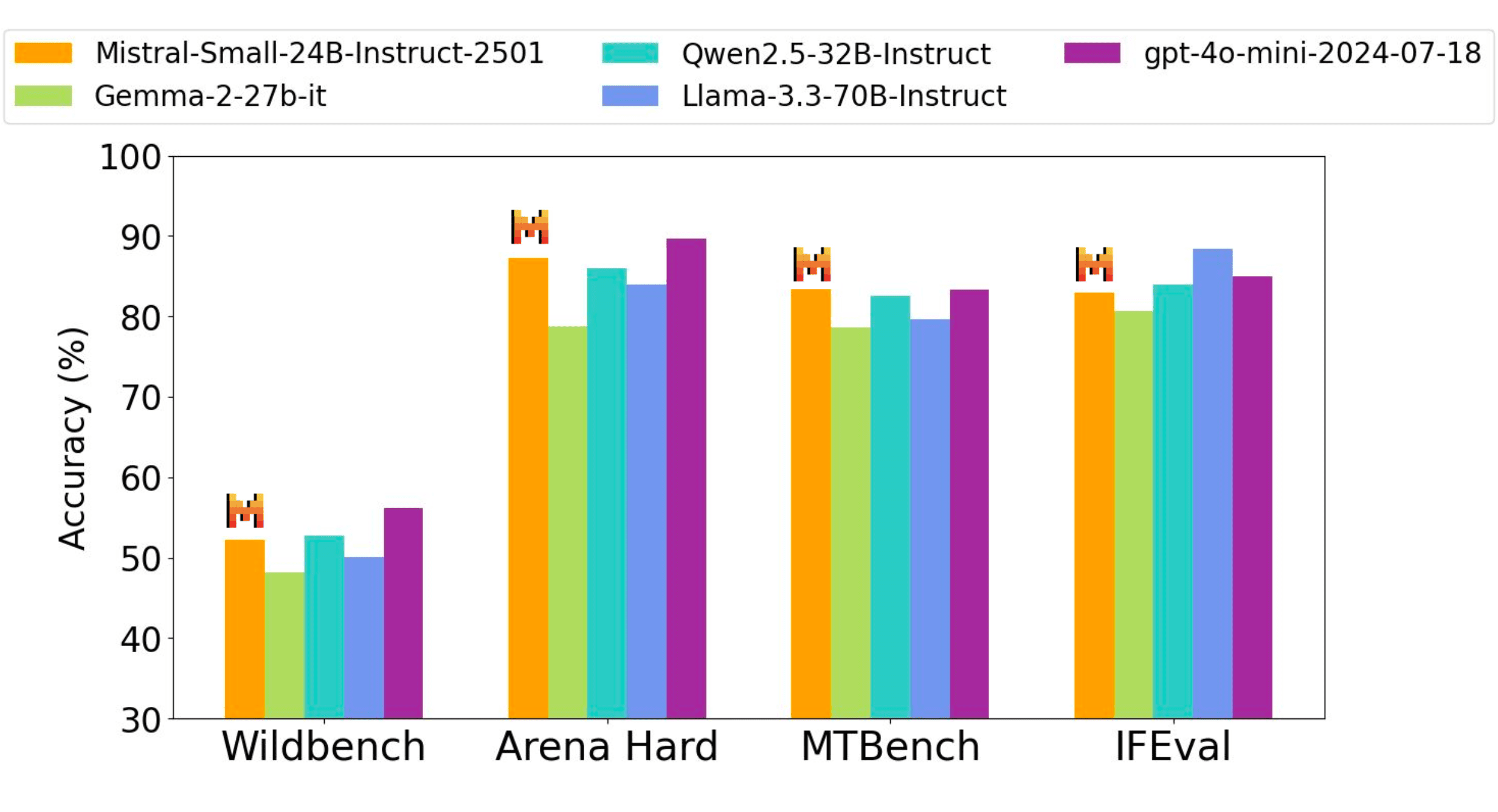

Performance

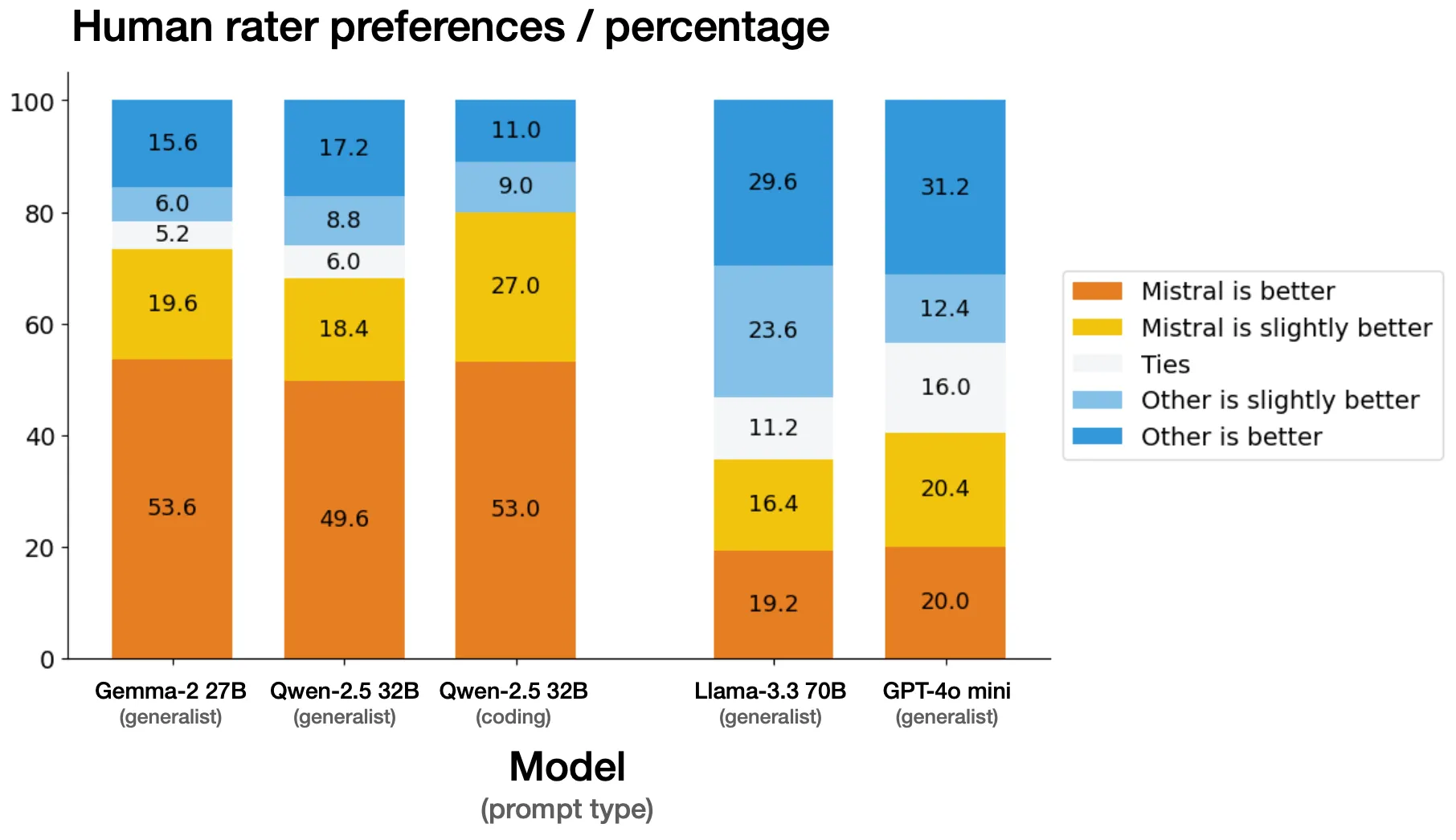

Human evaluations

Mistral AI conducted side-by-side evaluations with an external third-party vendor on a set of over 1,000 proprietary coding and general-purpose prompts. Evaluators were asked to pick their preferred response from anonymized outputs generated by Mistral Small 3 and another model. Mistral AI acknowledges that in some cases human judgments differ sharply from publicly available benchmarks, but it has taken extra care to verify that the evaluation was fair and is confident that the above benchmarks are valid.
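The evaluation protocol is described only at the level above. As a hedged sketch of how a blinded side-by-side preference study is commonly tallied, the Python below randomizes which response appears on the left, maps each evaluator's pick back to the model that produced it, and computes win, loss, and tie rates for model A. The function names, the "left"/"right"/"tie" labels, and the allowance for ties are assumptions for illustration; nothing here reproduces Mistral AI's or the vendor's actual tooling or data.

```python
import random
from collections import Counter
from typing import Dict, Iterable, Tuple


def anonymize_pair(resp_a: str, resp_b: str, rng: random.Random) -> Tuple[Tuple[str, str], bool]:
    """Show two responses in random order so the evaluator cannot tell which
    model produced which. Returns the (left, right) pair and whether it was swapped."""
    if rng.random() < 0.5:
        return (resp_a, resp_b), False
    return (resp_b, resp_a), True


def unblind(choice: str, swapped: bool) -> str:
    """Map an evaluator's 'left' / 'right' / 'tie' choice back to model 'a' or 'b'."""
    if choice == "tie":
        return "tie"
    if choice not in ("left", "right"):
        raise ValueError(f"unexpected choice: {choice!r}")
    picked_left = choice == "left"
    # Without a swap model A is on the left; with a swap it is on the right.
    return "a" if picked_left != swapped else "b"


def preference_rates(judgments: Iterable[str]) -> Dict[str, float]:
    """Fraction of comparisons won, lost, or tied by model A."""
    counts = Counter(judgments)
    unexpected = set(counts) - {"a", "b", "tie"}
    if unexpected:
        raise ValueError(f"unexpected labels: {sorted(unexpected)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments given")
    return {
        "win": counts["a"] / total,
        "loss": counts["b"] / total,
        "tie": counts["tie"] / total,
    }


if __name__ == "__main__":
    rng = random.Random(0)
    (left, right), swapped = anonymize_pair("reply from A", "reply from B", rng)
    print(left, "|", right)
    # Toy judgments, purely illustrative: (evaluator choice, was the pair swapped?)
    raw = [("left", False), ("right", True), ("right", False), ("tie", True)]
    labels = [unblind(choice, was_swapped) for choice, was_swapped in raw]
    print(preference_rates(labels))  # {'win': 0.5, 'loss': 0.25, 'tie': 0.25}
```

Randomizing the display order per prompt keeps position bias (a tendency to favor the left or first answer) from systematically helping either model.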

Instruct performance

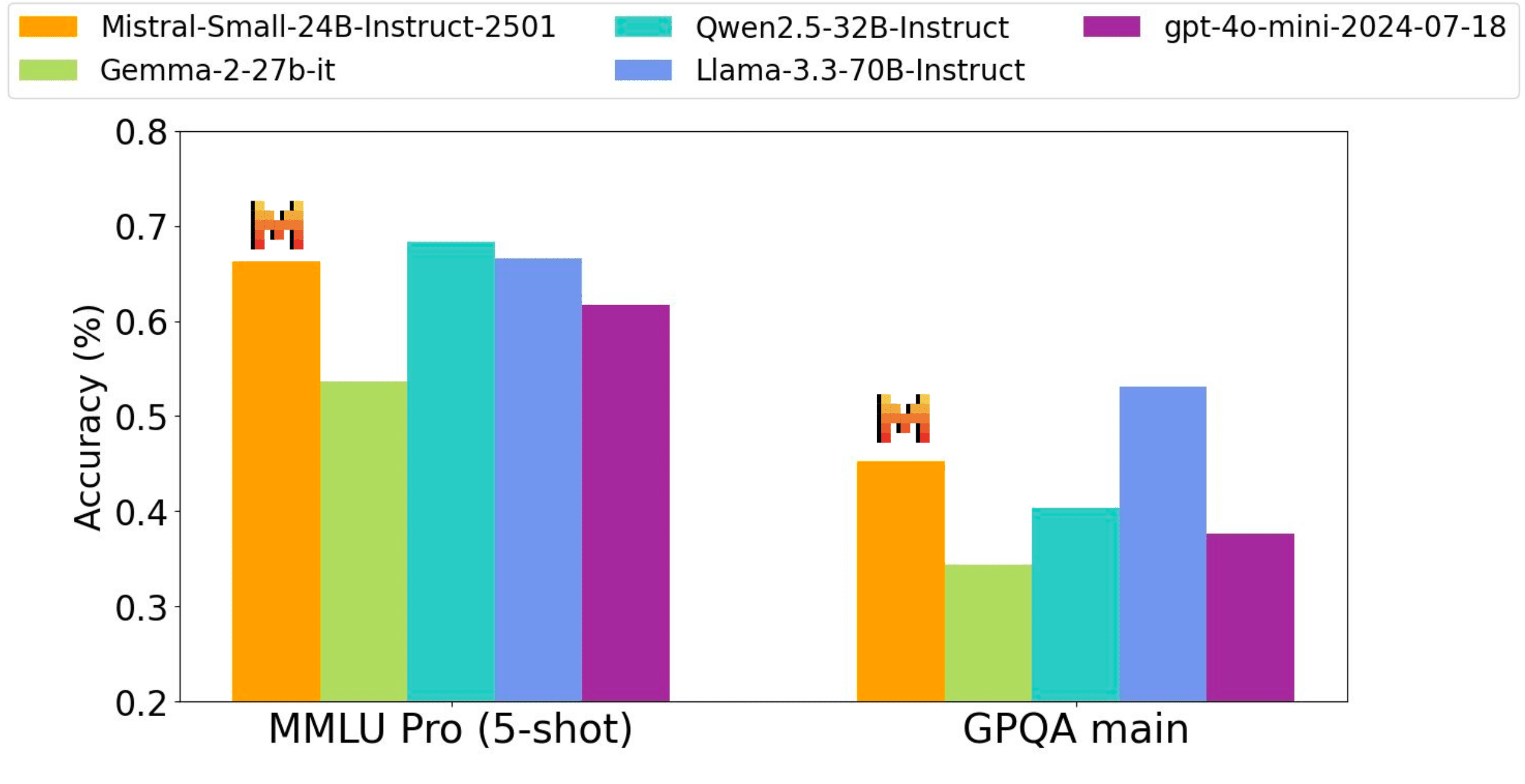

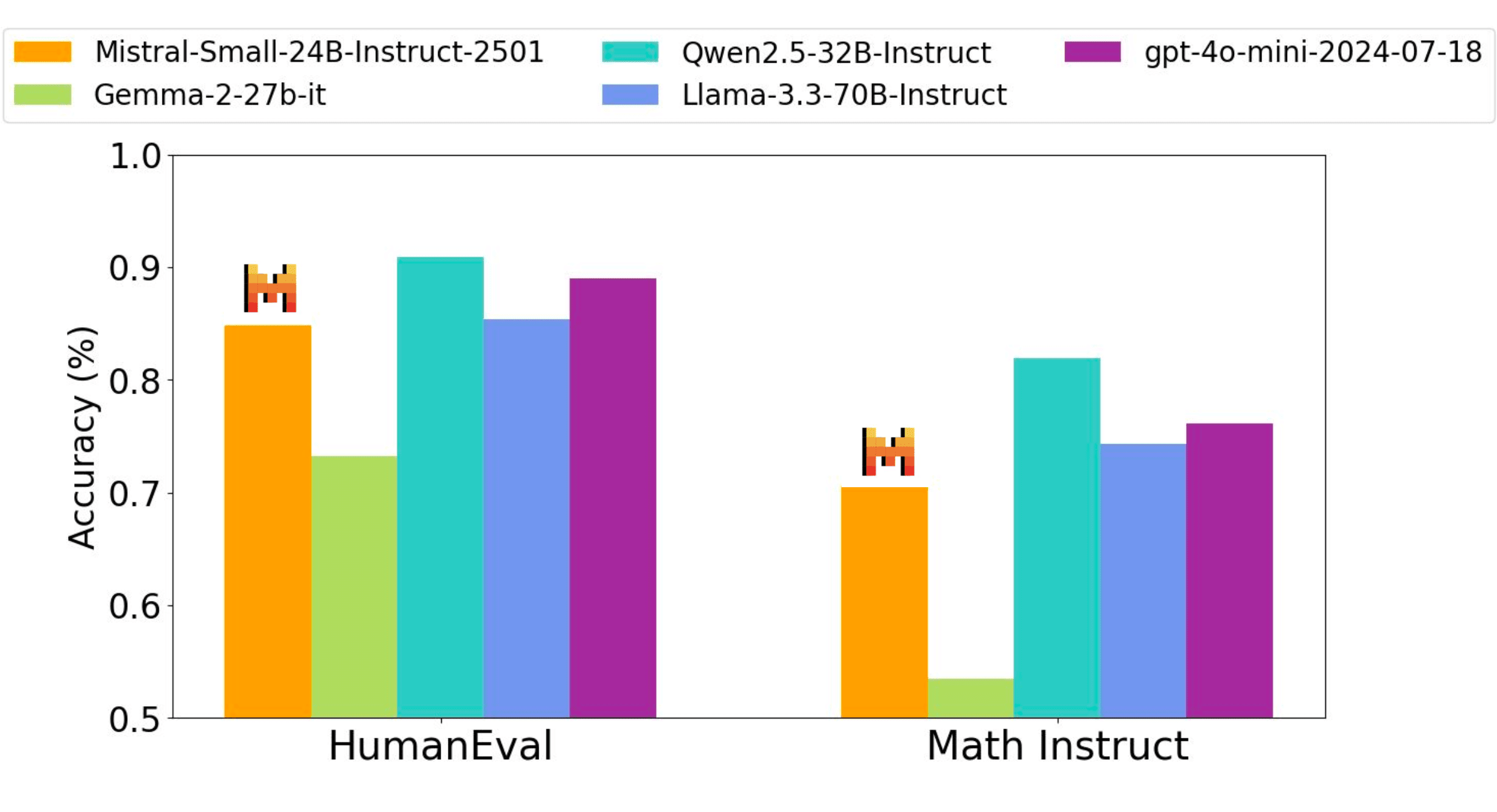

Mistral AI's instruction-tuned model performs competitively with open-weight models three times its size and with the proprietary GPT-4o mini model across code, math, general knowledge, and instruction-following benchmarks.

Performance accuracy on all benchmarks was obtained through the same internal evaluation pipeline, so numbers may differ slightly from previously reported results (Qwen2.5-32B-Instruct, Llama-3.3-70B-Instruct, Gemma-2-27B-IT). Judge-based evaluations (WildBench, Arena Hard, and MT-Bench) used gpt-4o-2024-05-13 as the judge.

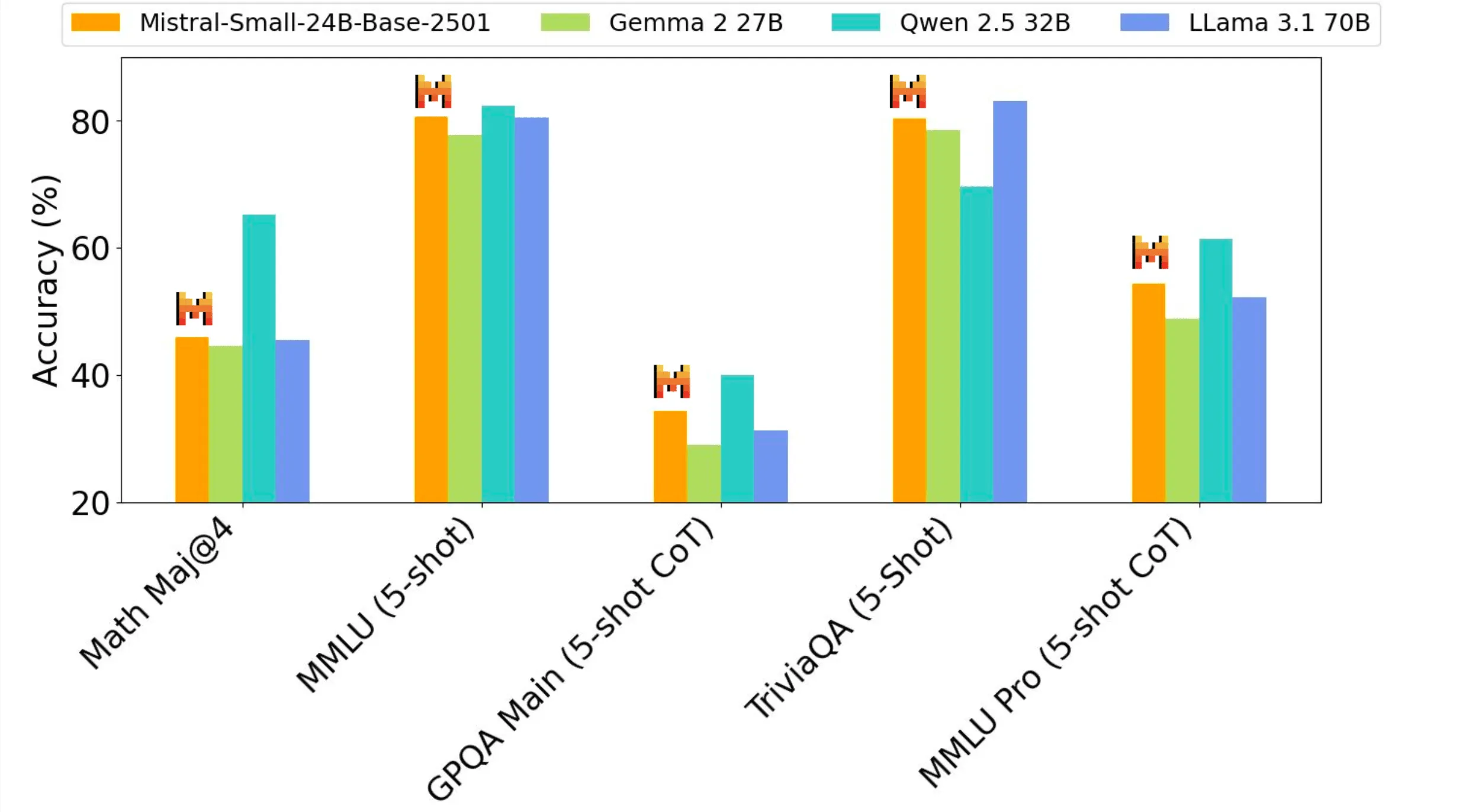

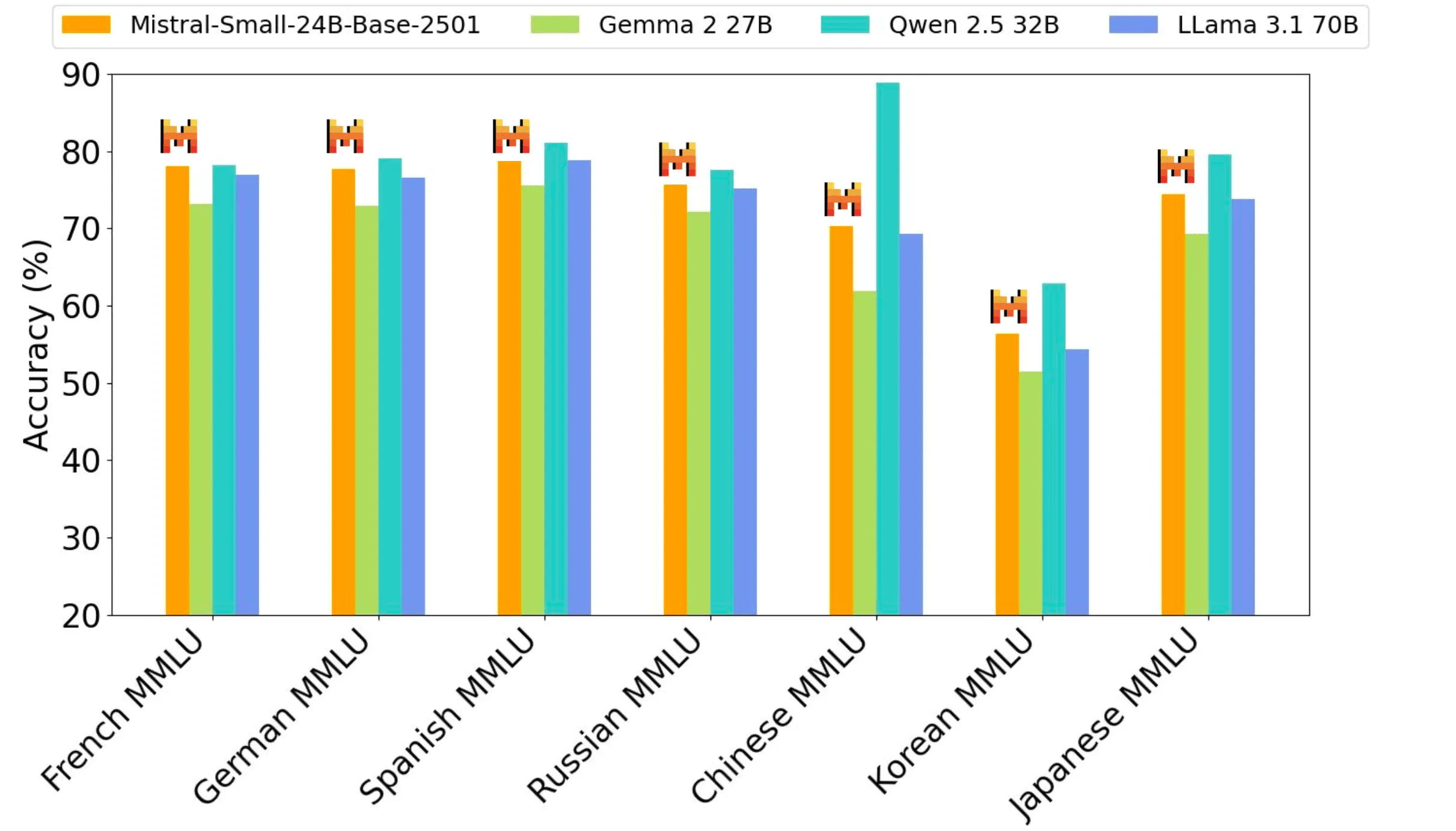

Pre-training performance

As a 24B model, Mistral Small 3 offers the best performance in its size class and rivals models three times larger, such as Llama 3.3 70B.

When to use Mistral Small 3

Among Mistral AI's customers and community, several distinct use cases have emerged for pre-trained models of this size:

- Fast-response conversational assistance: Mistral Small 3 excels in scenarios where quick, accurate responses are critical. This includes many virtual assistant scenarios where users expect immediate feedback and near real-time interaction.

- Low-latency function calling: Mistral Small 3 can handle rapid function execution when used as part of automated or agentic workflows.

- Fine-tuning to create subject matter experts: Mistral Small 3 can be fine-tuned to focus on specific areas to create highly accurate subject matter experts. This is particularly useful in areas such as legal counseling, medical diagnostics and technical support, where domain-specific knowledge is critical.

- Local inference: especially beneficial for hobbyists and organizations handling sensitive or proprietary information. When quantized, Mistral Small 3 can run privately on a single RTX 4090 or a MacBook with 32GB of RAM.
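To see why quantization is what makes single-GPU deployment practical, the back-of-the-envelope Python below estimates the memory needed just to hold the weights at different precisions. It assumes 24 billion parameters (the rounded size quoted above) and decimal gigabytes, and it deliberately ignores the KV cache, activations, and runtime overhead, all of which add to the real footprint. The helper name is hypothetical.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model
# weights at different precisions. Assumptions: 24 billion parameters
# (the rounded size quoted above), decimal gigabytes (1 GB = 1e9 bytes),
# and no allowance for the KV cache, activations, or runtime overhead.

def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate size of the weights alone, in decimal gigabytes."""
    if num_params <= 0 or bits_per_param <= 0:
        raise ValueError("num_params and bits_per_param must be positive")
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    params = 24_000_000_000
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_memory_gb(params, bits):.0f} GB")
```

An RTX 4090 has 24 GB of VRAM, so 16-bit weights (about 48 GB) do not fit, and 8-bit weights (about 24 GB) would leave no room for the KV cache. At 4 bits (about 12 GB) there is headroom, which is consistent with the claim above. Real quantized files tend to be somewhat larger than this estimate, since quantization formats store scale factors and often keep some tensors at higher precision.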

Mistral AI's customers are evaluating Mistral Small 3 across multiple industries, including:

- Financial services customers, for fraud detection

- Healthcare providers, for customer triage

- Robotics, automotive, and manufacturing companies, for on-device command and control

- Horizontal use cases across customers, including virtual customer service and sentiment and feedback analysis

Using Mistral Small 3 on your favorite technology stacks

Mistral Small 3 is now available on la Plateforme as mistral-small-latest or mistral-small-2501. See Mistral AI's documentation on how to use its models for text generation.
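For readers who want to try the hosted model, the sketch below uses only Python's standard library to build a request against la Plateforme's chat completions API. It assumes the endpoint `https://api.mistral.ai/v1/chat/completions`, bearer-token authentication with a key stored in a `MISTRAL_API_KEY` environment variable, and an OpenAI-style response with a `choices[0].message` object; check Mistral AI's documentation before relying on any of these. The optional `tools` argument shows where JSON-schema function definitions would go for the low-latency function-calling use case. The helper names are hypothetical.

```python
import json
import os
import urllib.request
from typing import Any, Dict, List, Optional

# Assumed endpoint for la Plateforme's chat completions API.
API_URL = "https://api.mistral.ai/v1/chat/completions"


def build_chat_request(
    prompt: str,
    model: str = "mistral-small-latest",  # or the pinned "mistral-small-2501"
    api_key: Optional[str] = None,
    tools: Optional[List[Dict[str, Any]]] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single-turn chat."""
    key = api_key if api_key is not None else os.environ.get("MISTRAL_API_KEY", "")
    payload: Dict[str, Any] = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    if tools:
        # Function definitions the model may choose to call.
        payload["tools"] = tools
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Bearer {key}",
        },
        method="POST",
    )


def send_chat_request(req: urllib.request.Request, timeout: float = 30.0) -> Optional[str]:
    """Send the request and return the first choice's text content.

    The content may be empty when the model decides to call a tool instead."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # Assumes an OpenAI-style response layout.
    return body["choices"][0]["message"].get("content")


if __name__ == "__main__":
    if os.environ.get("MISTRAL_API_KEY"):
        req = build_chat_request("Summarize the Apache 2.0 license in one sentence.")
        print(send_chat_request(req))
    else:
        print("Set MISTRAL_API_KEY to send a real request.")
```

Separating request construction from sending keeps the payload easy to inspect and test offline; Mistral AI's official client libraries are an alternative to hand-built HTTP requests.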

Mistral AI is also excited to partner with Hugging Face, Ollama, Kaggle, Together AI and Fireworks AI to make the model available on their platforms starting today:

- Hugging Face (base model)

- Ollama

- Kaggle

- Together AI

- Fireworks AI

- Coming soon to NVIDIA NIM, Amazon SageMaker, Groq, Databricks, and Snowflake!

The way forward

These are exciting days for the open-source community! Mistral Small 3 complements large open-source reasoning models such as the recent DeepSeek releases, and can serve as a strong base model for bringing out reasoning capabilities.

Among many other things, expect small and medium-sized Mistral models with enhanced reasoning capabilities to be released in the coming weeks. Join Mistral AI on the journey (Mistral AI is hiring), or beat it to the punch by hacking on Mistral Small 3 and making it better today!

Mistral's open-source models

Mistral AI is reaffirming its commitment to using the Apache 2.0 license for its general-purpose models as it progressively moves away from MRL-licensed models. As with Mistral Small 3, model weights will be available to download and deploy locally, and free to modify and use in any setting. The models will also be available through a serverless API on la Plateforme, through Mistral AI's on-premises and VPC deployments, customization and orchestration platform, and through its inference and cloud partners. Enterprises and developers that need specialized capabilities (increased speed and context, domain-specific knowledge, or task-specific models such as code completion) can rely on additional commercial models that complement what Mistral AI contributes to the community.

© Copyright notes

Article copyright AI Sharing Circle. Please do not reproduce without permission.
