MiniMax Advances Multimodal Generation Again: Subject Reference Turns a Single Portrait Image into Stylistically Consistent Videos

Everyone carries a movie dream at heart: stepping into different roles to live other lives on screen, orchestrating every shot as a director, or writing out the infinite possibilities of parallel universes as a screenwriter.

Conch AI is a dream-making machine that gives all kinds of people a way into filmmaking. At the beginning of the new year, Conch AI brings a new creative helper, Subject Reference, to users around the world.

MiniMax's latest self-developed video model, S2V-01, is built on a single-image subject reference architecture. At about 1% of the input and computation cost of traditional approaches, a single input picture is enough to reproduce visual details accurately while keeping a high degree of freedom and composability. User waiting time drops significantly, making the feature highly usable.

The Subject Reference feature is now fully live worldwide; visit the Conch Video creation platform to try it right away.

Input a picture, output a high-definition blockbuster

In AI video generation, two problems have long plagued the industry: keeping a character's face realistic and stable from multiple angles in a dynamic video, and keeping the character highly consistent when a piece is assembled from consecutive clips. Our self-developed S2V-01 video model offers users a strong solution to both.

After selecting the "Subject Reference" function in Conch AI, users only need to upload one picture for the model to recognize and lock the subject character. Enter a prompt in the text box and, without a long wait, a creative, high-quality video with a consistent subject is generated.

The S2V-01 model accurately recognizes facial characteristics in the photo, such as gender, age, skin color, and the structure of the facial features. The generated character is stable and coherent and stays consistent in every frame. Control over the subject's facial expression and the texture of non-subject scenery remain Conch AI specialties.

Subject Reference + Prompt: A close-up of a young boy in a dimly lit room, his eyes fixed on the glowing screen of a gaming console. The camera is positioned slightly above eye level, focusing on his concentrated expression as his fingers nimbly manipulate the controller. A game character appears, breaking free from the screen's confines.

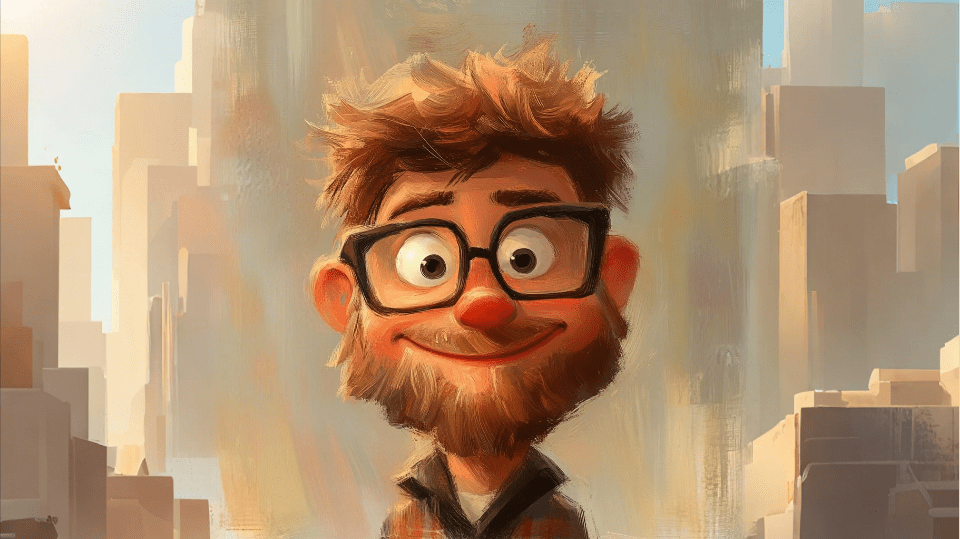

Creator @OlivioSarikas uploaded an oil-on-canvas anime portrait as the subject of an animated piece that transports the viewer to a fairytale land.

Currently, Conch AI supports referencing a single character, which requires uploading an image with clearly recognizable facial information to serve as the facial reference for the video subject. In the future, Conch AI will continue to open up richer reference capabilities, including multiple people, objects, and scenes.

Input and computation costs are dramatically reduced, reshaping the video creation experience

MiniMax has been exploring image reference capabilities, including characters and styles, since its early days. After extensive technical exploration, we believe that for subject reference problems the image reference approach has a high enough quality ceiling and, weighing both quality and scalability, is even better than fine-tuning with LoRA (Low-Rank Adaptation). We believe that a good technology should serve as wide a range of users as possible while also working well enough to solve real problems.

Since MiniMax's subject reference scheme requires only one image as input, there is no additional training cost or waiting time, and the generation cost is close to that of conventional text-to-video and image-to-video generation. Compared to the current LoRA scheme, subject reference reduces both the user's input cost and the computation cost to less than one percent. The user's waiting time drops dramatically, and the user experience improves markedly.

Subject Reference + Prompt: A woman in an elaborate gown and a pair of white gloves walks through a corridor in a medieval castle. She runs with her back to the camera, then looks back to the camera, her expression changing from calm to horror. The end of the corridor is dimly lit. The camera follows the woman as she pushes closer and the view changes from medium to close-up, focusing on the woman's face.

To keep only the necessary visual information about the subject itself (e.g., human facial features) in the video, without interference from other information in the reference image such as posture, expression, and lighting, MiniMax has continued to optimize data construction, model architecture, and training strategy. The S2V-01 model, which is already live, achieves two key effects at the same time:

- Accurate reproduction of visual details: The facial features of the characters in the generated videos are highly similar to the reference image;

- High freedom and composability: Except for the facial features that represent identity, all other dimensions have a high degree of freedom. For example, the character can be steered by text to show any pose and expression, and can be placed in any environment with natural, harmonious lighting.

With subject reference technology, users no longer have to solve consistency problems by regenerating over and over until a lucky result appears. They can focus on what they want to express, which dramatically increases the efficiency of creating long-form video content. Your character stays consistent, naturally.

Visual modality opens up an era of AI co-innovation

AI technology has brought convenience to content production industries such as microfilm, advertising, variety shows, animation, and CG effects. However, the biggest problem is that the subject of a video easily falls apart during generation, and the resulting content tends to be rigid and one-size-fits-all.

The launch of the Subject Reference feature gives professional creators a highly consistent visual presentation together with creative flexibility. It will bring disruptive innovation to several video production industries, including short videos and advertisements, so that consistency and coherence are no longer a problem. At present, MiniMax offers the Subject Reference feature on its open platform as an API service, and will continue to explore multi-subject reference to provide more complete solutions for enterprises and professional creators.
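As a rough illustration of what calling such an API service might involve, the sketch below assembles a request body from the two inputs the article describes: one reference image and a text prompt. Only the model name `S2V-01` comes from the article. The class name, the field names (`subject_reference`, `type`, `image`), and the example URL are hypothetical assumptions, not the documented schema, and no network call is made; check the open platform documentation for the actual request format.

```python
import json
from dataclasses import dataclass


@dataclass
class SubjectReferenceRequest:
    """Hypothetical request body for a subject-reference video job."""

    prompt: str
    reference_image_url: str
    model: str = "S2V-01"  # model name taken from the article

    def to_payload(self) -> dict:
        # Field names below are illustrative assumptions,
        # not the documented API schema.
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if not self.reference_image_url:
            raise ValueError("one reference image is required")
        return {
            "model": self.model,
            "prompt": self.prompt,
            # The article says a single character image is supported today,
            # so exactly one image is sent.
            "subject_reference": [
                {"type": "character", "image": [self.reference_image_url]}
            ],
        }


if __name__ == "__main__":
    request = SubjectReferenceRequest(
        prompt="A woman walks through a corridor in a medieval castle.",
        reference_image_url="https://example.com/portrait.png",  # placeholder
    )
    print(json.dumps(request.to_payload(), indent=2))
```

Keeping the payload construction separate from the HTTP call makes it easy to validate the single-image constraint before a job is submitted.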

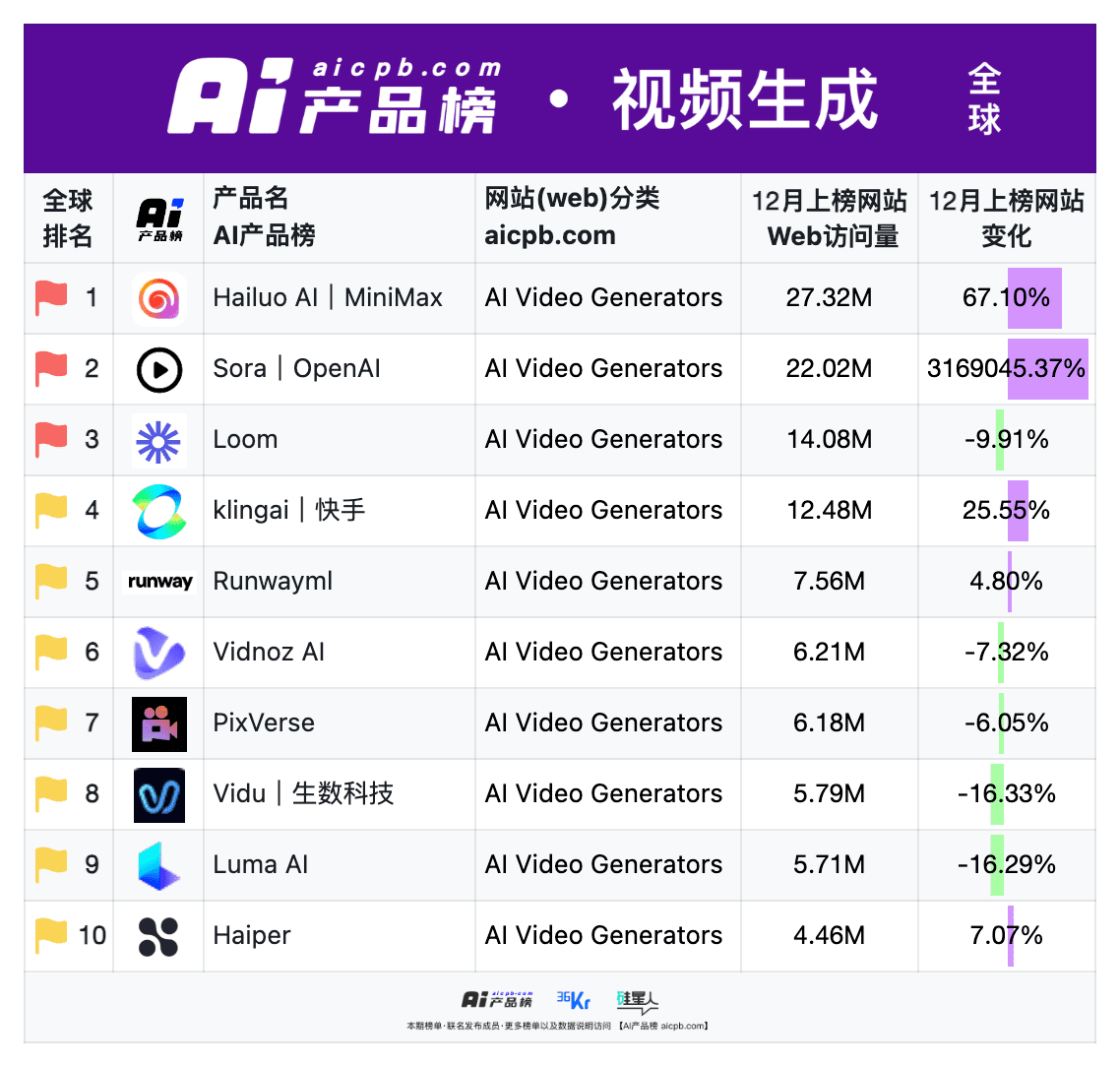

Since MiniMax launched its video model, Conch AI has remained a focus of the industry. In December 2024, I2V-01-Live, an image-to-video model launched by MiniMax, was widely acclaimed, and overseas visits to Conch AI exceeded 27 million, an all-time high that put it at the top of the global AI video product list for December.

- Global AI Video Products List December 2024

The way people interact with the world is inherently multimodal, so multimodal understanding and generation is a key step on the path to AGI and toward an era of AI co-creation. We look forward to more users co-creating intelligence with MiniMax and finding the joy of creation in Conch AI. We have prepared a tutorial on how to use the Subject Reference feature; it is linked from the original article. Thank you to everyone who supports and loves MiniMax and Conch AI.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.