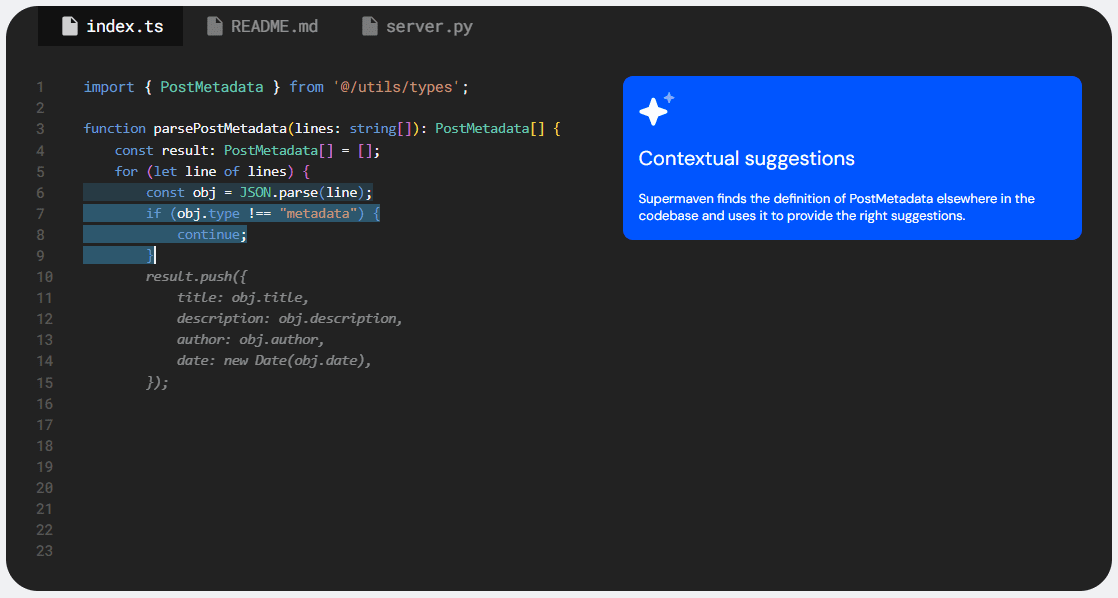

MindSearch: an open-source AI search engine framework for deploying your own Perplexity-style search engine!

General Introduction

MindSearch is an open-source AI search engine framework released by the Shanghai Artificial Intelligence Laboratory (Shanghai AI Lab). It aims to simulate the human thought process to gather and integrate complex information. It combines large language models (LLMs) with search engines and uses a multi-agent framework to autonomously collect and integrate information from hundreds of web pages, delivering comprehensive answers in a short time. Users can deploy their own search engine with closed-source LLMs (e.g., GPT, Claude) or open-source LLMs (e.g., the InternLM2.5 series).

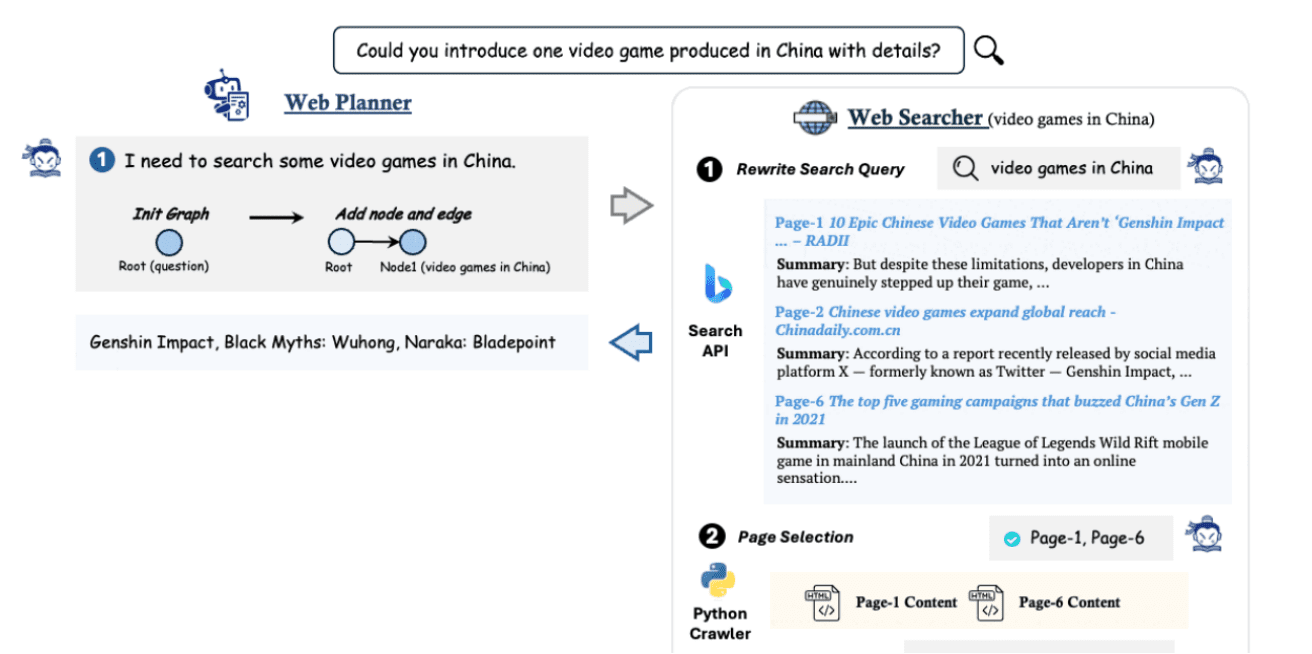

At its core, MindSearch uses a multi-agent framework to model the human thought process. It has two key components: WebPlanner (the planner) and WebSearcher (the executor).

- WebPlanner breaks down a user's question and builds a directed acyclic graph (DAG) to guide the search;

- WebSearcher retrieves and filters valuable information from the Internet and returns it to WebPlanner;

- WebPlanner eventually gives its conclusion.
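To make the division of labor concrete, here is a toy Python sketch of the three steps. The functions `plan`, `search`, and `conclude` are hypothetical stand-ins: in MindSearch these steps are driven by an LLM and live web search, whereas here they are hard-coded so the control flow is easy to follow.

```python
from __future__ import annotations


def plan(question: str) -> list[str]:
    """WebPlanner role (hypothetical stand-in): split the question into sub-questions."""
    return [f"{question} (background)", f"{question} (recent developments)"]


def search(sub_question: str) -> str:
    """WebSearcher role (hypothetical stand-in): return a filtered finding."""
    return f"finding for: {sub_question}"


def conclude(question: str, findings: dict[str, str]) -> str:
    """WebPlanner role again: merge the sub-answers into one final answer."""
    lines = [f"Answer to: {question}"]
    lines += [f"- {sq}: {finding}" for sq, finding in findings.items()]
    return "\n".join(lines)


def answer(question: str) -> str:
    sub_questions = plan(question)                       # 1. decompose
    findings = {sq: search(sq) for sq in sub_questions}  # 2. retrieve and filter
    return conclude(question, findings)                  # 3. conclude


print(answer("AI in medical image diagnosis"))
```

The value of the split is that `search` only ever sees one narrow sub-question, while `plan` and `conclude` keep the overall picture.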

Function List

- Multi-agent framework: multiple agents work in tandem to gather and integrate complex information.

- Support for multiple LLMs: compatible with both closed-source and open-source large language models, so users can choose the model that fits their needs.

- Multiple front-end interfaces: provides React, Gradio, Streamlit, and other front ends for user convenience.

- Deep Knowledge Exploration: Provides extensive and in-depth answers by navigating through hundreds of web pages.

- Transparent solution path: exposes the full thinking path and search terms, making responses more credible and usable.

Technical Principles

1. WebPlanner: the intelligent planning hub

WebPlanner is the brain of MindSearch: it models the search task as a directed acyclic graph (DAG). After receiving the user's question, WebPlanner leverages the language model's code-generation ability, calling predefined atomic code functions to decompose the question into sub-question nodes and outline a solution framework. During the search, it extends and refines the graph based on feedback from WebSearcher and dynamically adjusts its strategy to steer the system toward the relevant information. For example, given the question "the current state and challenges of AI in medical image diagnosis", it breaks the question down into medical imaging types, examples of AI algorithm applications, data privacy, interpretability and accuracy, and so on, laying the groundwork for a comprehensive answer.
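The DAG idea can be sketched in Python. The `SearchGraph` class and its methods `add_root_node`, `add_node`, and `add_edge` are hypothetical, loosely modeled on the "atomic code functions" described above rather than copied from MindSearch. The point is that once the planner's generated code has built the graph, a topological sort shows which sub-questions can be searched in parallel. The sketch uses `graphlib` from the standard library (Python 3.9+).

```python
from graphlib import TopologicalSorter


class SearchGraph:
    """Hypothetical stand-in for the graph that the planner's generated code builds.

    The method names are illustrative assumptions, not a documented MindSearch API.
    """

    def __init__(self) -> None:
        self.nodes: dict[str, str] = {}         # node name -> question text
        self.parents: dict[str, set[str]] = {}  # node name -> prerequisite nodes

    def add_root_node(self, question: str, name: str = "root") -> None:
        self.nodes[name] = question
        self.parents[name] = set()

    def add_node(self, name: str, sub_question: str) -> None:
        self.nodes[name] = sub_question
        self.parents.setdefault(name, set())

    def add_edge(self, start: str, end: str) -> None:
        # `end` may only be searched once `start` has been handled.
        self.parents.setdefault(end, set()).add(start)

    def batches(self) -> list[list[str]]:
        """Group nodes into rounds; nodes in the same round do not depend on each other."""
        sorter = TopologicalSorter(self.parents)
        sorter.prepare()
        rounds = []
        while sorter.is_active():
            ready = sorted(sorter.get_ready())
            rounds.append(ready)
            sorter.done(*ready)
        return rounds


g = SearchGraph()
g.add_root_node("Current state and challenges of AI in medical image diagnosis")
g.add_node("types", "Which medical imaging types use AI?")
g.add_node("algorithms", "Examples of AI algorithms applied to medical images")
g.add_node("privacy", "Data privacy concerns")
g.add_node("accuracy", "Interpretability and diagnostic accuracy")
g.add_edge("root", "types")
g.add_edge("root", "privacy")
g.add_edge("types", "algorithms")
g.add_edge("types", "accuracy")

for round_no, names in enumerate(g.batches(), start=1):
    print(round_no, names)
# 1 ['root']
# 2 ['privacy', 'types']
# 3 ['accuracy', 'algorithms']
```

Each printed round could be handed to several searcher instances at once, which is one reason a DAG is a natural fit for this kind of planning.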

2. WebSearcher: the information-mining pioneer

WebSearcher is the pioneer of information mining. It follows a coarse-to-fine strategy: it first refines the search keywords to improve precision, aggregates the search results to eliminate redundancy, selects the key pages, and then summarizes and distills their content in depth. With the help of the language model, it understands and integrates fragmented information into logically coherent knowledge modules. Taking "breakthroughs in new energy vehicle battery technology" as an example, it can quickly filter out key information, such as improved battery energy density and faster charging, from reports by research institutes, industry news, and corporate websites, and then organize and present it.
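A toy version of the coarse-to-fine pipeline might look like this. Everything here is an assumption for illustration: `FAKE_INDEX` and `web_search` stand in for a real search API, the keyword queries are assumed to have already been rewritten (by the LLM, in MindSearch's case), word-overlap ranking replaces whatever page-selection logic MindSearch actually uses, and the "summary" is a simple join where a real system would call the language model.

```python
from __future__ import annotations

# Hypothetical search index standing in for a real web search API: (url, snippet).
FAKE_INDEX = [
    ("https://example.org/a", "solid-state battery energy density improved"),
    ("https://example.org/b", "fast charging battery cuts charging time"),
    ("https://example.org/c", "car sales figures for last quarter"),
]


def web_search(query: str) -> list[tuple[str, str]]:
    """Stub backend: return every page sharing at least one word with the query."""
    terms = set(query.lower().split())
    return [(url, text) for url, text in FAKE_INDEX if terms & set(text.split())]


def coarse_to_fine(queries: list[str], top_k: int = 2) -> str:
    # Coarse: aggregate results from all keyword queries, dropping duplicate URLs.
    pages: dict[str, str] = {}
    for query in queries:
        for url, text in web_search(query):
            pages.setdefault(url, text)

    # Fine: rank unique pages by how many query terms they contain, keep the top_k.
    terms = {t for q in queries for t in q.lower().split()}
    ranked = sorted(pages.items(),
                    key=lambda item: len(terms & set(item[1].split())),
                    reverse=True)
    selected = ranked[:top_k]

    # Summarize: a real system would ask the LLM to condense these pages.
    return "; ".join(text for _, text in selected)


queries = ["battery energy density", "battery charging speed"]  # assumed rewritten keywords
print(coarse_to_fine(queries))
```

Deduplicating before ranking matters because several rewritten queries often return the same page; without it, the top-k slots would fill up with copies.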

Functional Features

1. Deep knowledge extraction

MindSearch digs deep into many web pages to present users with in-depth knowledge. Whether you are exploring the mysteries of ancient civilizations or tracking cutting-edge technology, it can sift through massive web resources. For example, a query on "research progress on cosmic dark matter" returns not only the basic concepts but also a summary of the latest observational data, theoretical models, and interim results from research teams worldwide, helping users build a systematic body of knowledge.

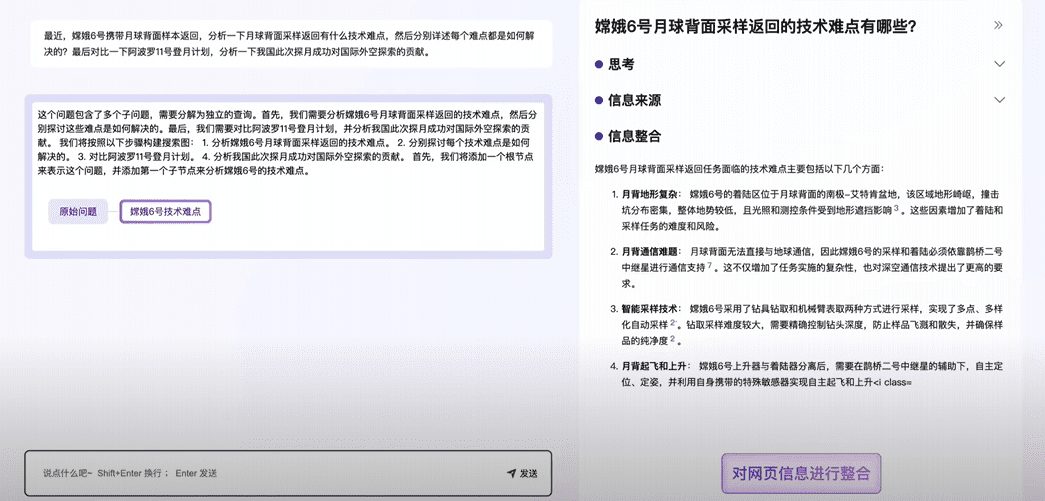

2. Search path transparency

Unlike traditional search engines, MindSearch shows users its thinking path, search keywords, and information integration process. When a user asks for an interpretation of legal provisions, for instance, they see not only the answer but also how information was screened and integrated from legal databases, professional forums, and case studies. This builds trust and makes it easier to study the topic in depth.

3. Multi-interface adaptation

MindSearch offers React, Gradio, and Streamlit interfaces as well as local debugging, to suit different users. Developers can use the React interface to integrate it into web applications, while ordinary users can query it through the Gradio or Streamlit interface without complex programming or environment configuration, which lowers the barrier to entry and improves the user experience.

4. Dynamic graph construction

Dynamic graph construction generates sub-question nodes from the user's query and expands the graph in real time based on search results. For trending topics such as "the impact of social media on adolescent mental health", the search graph can be updated promptly to account for new research and events, adjusting its direction so that the most relevant and up-to-date information is provided.
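The real-time expansion can be sketched as a breadth-first worklist in which each answered node may add new child nodes. `FAKE_RESULTS` and `fake_searcher` are hypothetical stand-ins for WebSearcher output, and the `max_nodes` budget is an assumption added here to keep the graph from growing without bound; it is not a documented MindSearch parameter.

```python
from __future__ import annotations

from collections import deque
from typing import Callable

# Hypothetical WebSearcher output: sub-question -> (finding, follow-up questions it raises).
FAKE_RESULTS = {
    "social media and adolescent mental health": ("finding 1", ["recent studies", "platform policy changes"]),
    "recent studies": ("finding 2", []),
    "platform policy changes": ("finding 3", ["regulatory responses"]),
    "regulatory responses": ("finding 4", []),
}


def fake_searcher(question: str) -> tuple[str, list[str]]:
    return FAKE_RESULTS.get(question, ("no result", []))


def expand(root: str,
           searcher: Callable[[str], tuple[str, list[str]]],
           max_nodes: int = 10):
    """Grow the search graph breadth-first as new sub-questions are discovered."""
    findings: dict[str, str] = {}
    edges: list[tuple[str, str]] = []  # (parent, child) pairs added during the search
    queue = deque([root])
    while queue and len(findings) < max_nodes:
        node = queue.popleft()
        if node in findings:           # already answered via another path
            continue
        finding, follow_ups = searcher(node)
        findings[node] = finding
        for child in follow_ups:       # extend the graph based on what was found
            edges.append((node, child))
            queue.append(child)
    return findings, edges


findings, edges = expand("social media and adolescent mental health", fake_searcher)
print(edges)
```

A budget like `max_nodes` is one simple way to trade depth of exploration against latency; lowering it stops the expansion after fewer sub-questions.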

Application Scenarios

1. A good helper for academic research

In academia, MindSearch significantly shortens the time researchers spend collecting information. For example, when historians study cultural exchange in a specific historical period, it can integrate ancient documents, archaeological reports, academic papers, and other sources to trace the lines of exchange and key events, helping scholars locate key information quickly, clarify their research direction, and work more efficiently.

2. A source of creative inspiration

For creators, MindSearch is a source of inspiration. Copywriters working on travel copy can search for a destination's local specialties, lesser-known attractions, and folk customs, then weave them into engaging copy. Film and TV screenwriters writing science fiction can gather novel sci-fi concepts, future-world settings, and other inspirational material to enrich their work.

3. Business decision-making compass

In business, companies can use MindSearch to monitor market trends, analyze competing products, and gain insight into consumer demand. For example, a restaurant company developing a new product strategy can search for popular ingredients, competitors' best-selling dishes, and consumers' taste preferences, then, after a comprehensive analysis, launch new products that meet market demand and strengthen its competitive position.

Usage Guide

1. Installing Dependencies

First, make sure Python is installed on your system (Python 3.8 or later is recommended). Then go to the root directory of the MindSearch project on the command line and run the following command to install the required dependencies:

```bash
pip install -r requirements.txt
```

This step automatically downloads and installs the Python libraries and modules MindSearch needs, preparing it for launch and use.
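Before running `pip`, a small preflight script can catch two common setup mistakes. This is a hypothetical helper, not part of MindSearch: it checks the interpreter against the Python 3.8 minimum recommended above and confirms that `requirements.txt` is present in the directory you are running from.

```python
from __future__ import annotations

import sys
from pathlib import Path

MIN_PYTHON = (3, 8)  # minimum version recommended in this guide


def preflight(project_root: str = ".", version: tuple[int, int] | None = None) -> list[str]:
    """Return a list of problems to fix before `pip install -r requirements.txt`."""
    if version is None:
        version = (sys.version_info.major, sys.version_info.minor)
    problems = []
    if tuple(version) < MIN_PYTHON:
        problems.append(f"Python {version[0]}.{version[1]} is older than 3.8")
    if not (Path(project_root) / "requirements.txt").is_file():
        problems.append("requirements.txt not found; run this from the MindSearch root directory")
    return problems


if __name__ == "__main__":
    issues = preflight()
    for issue in issues:
        print("warning:", issue)
    if not issues:
        print("OK: ready to run pip install -r requirements.txt")
```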

2. Launching the MindSearch API

After the dependency installation is complete, you can start MindSearch API. Use the following command to start the FastAPI server:

```bash
python -m mindsearch.app --lang en --model_format internlm_server --search_engine DuckDuckGoSearch
```

You can adjust the parameters to suit your needs:

- `--lang`: the language of the model, e.g., `en` for English or `cn` for Chinese. Choose it according to the language you expect for your input and search results.

- `--model_format`: the model format. For example, `internlm_server` uses a locally served InternLM2.5-7B-Chat model; to use another model such as GPT-4, change it to `gpt4`, and make sure you have correctly configured access to that model.

- `--search_engine`: the search engine to use. MindSearch supports several, including `DuckDuckGoSearch` (DuckDuckGo), `BingSearch` (Bing), `BraveSearch` (Brave), `GoogleSearch` (Google Serper), and `TencentSearch` (Tencent). If you choose a web search engine other than DuckDuckGo or Tencent, set its API key in the `WEB_SEARCH_API_KEY` environment variable; if you use the Tencent search engine, you need to set `TENCENT_SEARCH_SECRET_ID` and `TENCENT_SEARCH_SECRET_KEY`.
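The credential rules for search engines are easy to get wrong, so a launcher script could check them before starting the server. This is a hypothetical sketch, not part of MindSearch: `required_env` simply encodes the rules stated above (no key for DuckDuckGo, the two Tencent secrets for Tencent, `WEB_SEARCH_API_KEY` for any other engine), and `build_command` reproduces the launch command with its three flags.

```python
from __future__ import annotations

import os
import shlex
from collections.abc import Mapping


def required_env(engine: str) -> list[str]:
    """Environment variables a given --search_engine value needs, per the rules above."""
    if engine == "DuckDuckGoSearch":
        return []
    if engine == "TencentSearch":
        return ["TENCENT_SEARCH_SECRET_ID", "TENCENT_SEARCH_SECRET_KEY"]
    return ["WEB_SEARCH_API_KEY"]  # BingSearch, BraveSearch, GoogleSearch, ...


def missing_env(engine: str, env: Mapping[str, str] | None = None) -> list[str]:
    """List required variables that are unset or empty in `env` (default: os.environ)."""
    env = os.environ if env is None else env
    return [name for name in required_env(engine) if not env.get(name)]


def build_command(lang: str = "en", model_format: str = "internlm_server",
                  engine: str = "DuckDuckGoSearch") -> list[str]:
    return ["python", "-m", "mindsearch.app", "--lang", lang,
            "--model_format", model_format, "--search_engine", engine]


if __name__ == "__main__":
    engine = "DuckDuckGoSearch"  # change to the engine you plan to use
    missing = missing_env(engine)
    if missing:
        raise SystemExit(f"Set these environment variables first: {', '.join(missing)}")
    # Only prints the command; subprocess.run(cmd, check=True) would start the server.
    print(shlex.join(build_command(engine=engine)))
```

Failing early with a clear message is friendlier than letting the server start and then fail on the first search request.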

3. Launching the MindSearch Front End

MindSearch provides several front-end interfaces to choose from. Here is how to launch each one:

3.1 React

1. First, configure Vite's API proxy with the actual backend URL. Assuming the backend server is running locally at `127.0.0.1` on port `8002` (adjust to your setup), run the following command:

```bash
HOST="127.0.0.1"
PORT=8002
sed -i -r "s/target:\s*\"\"/target: \"${HOST}:${PORT}\"/" frontend/React/vite.config.ts
```

2. Make sure Node.js and npm are installed on your system. On Ubuntu, you can install them with the following command:

```bash
sudo apt install nodejs npm
```

On Windows, download and install the appropriate version of Node.js for your system from the [official Node.js website](https://nodejs.org/zh-cn/download/prebuilt-installer).

3. Go to the `frontend/React` directory, then install the project dependencies and start the React front end:

```bash
cd frontend/React
npm install
npm start
```
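The `sed` command in step 1 assumes a Unix-like shell with GNU sed, which Windows users typically lack. A Python equivalent can perform the same substitution, filling an empty `target: ""` entry in `frontend/React/vite.config.ts` with `HOST:PORT`. It is a hypothetical helper, not shipped with MindSearch, and like the original command it only touches an empty `target` value.

```python
import re
from pathlib import Path

# Same pattern as the sed command: `target:` followed by optional spaces/tabs and "".
# Like sed, it never matches across a line break.
EMPTY_TARGET = re.compile(r'target:[ \t]*""')


def set_vite_proxy_target(config_text: str, host: str = "127.0.0.1", port: int = 8002) -> str:
    """Return config_text with every empty proxy target replaced by "host:port"."""
    # A function replacement stops re.sub from interpreting backslashes in the new value.
    return EMPTY_TARGET.sub(lambda _match: f'target: "{host}:{port}"', config_text)


def patch_vite_config(path: str = "frontend/React/vite.config.ts",
                      host: str = "127.0.0.1", port: int = 8002) -> None:
    """Rewrite the Vite config in place, like `sed -i`."""
    config = Path(path)
    text = config.read_text(encoding="utf-8")
    config.write_text(set_vite_proxy_target(text, host, port), encoding="utf-8")
```

Call `patch_vite_config()` from the MindSearch root directory, passing `host` and `port` if your backend runs elsewhere. A target that is already filled in is left unchanged, so running it twice is harmless.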

3.2 Gradio

Start the Gradio front-end by executing the following command from the command line:

```bash
python frontend/mindsearch_gradio.py
```

3.3 Streamlit

Use the following command to start the Streamlit front end:

```bash
streamlit run frontend/mindsearch_streamlit.py
```

4. Local Debugging

If you wish to debug locally, you can use the following command:

```bash
python mindsearch/terminal.py
```

Local debugging makes it easier to inspect and tune how MindSearch runs in your local environment, and to view detailed logs so you can find and fix problems promptly.

Concluding Remarks

MindSearch has created a wave of innovation in the field of information retrieval with its unique technology, rich functionality and multiple application scenarios. It improves the efficiency and quality of users' information access, builds an innovative platform for developers, and promotes the development of AI search engine technology. It has great potential and value in academic, creative and commercial fields. We believe that MindSearch will continue to evolve in the future, helping us explore the knowledge universe more efficiently and enjoy a new experience of intelligent information retrieval.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
