A hands-on course on evaluating Large Language Models (LLMs) for product managers

Designed for AI product teams and AI leaders, this course introduces how to evaluate LLM-based products. No programming knowledge is required, so it is an easy place to start. The course starts on December 9, 2024.

What you will learn

The basics of LLM evaluation: from evaluation methods and benchmarks to LLM guardrails, and how to build custom LLM evaluators. The course is aimed at AI product managers and AI leaders who want to master the core concepts of AI quality and observability.

How to evaluate LLM applications at each phase of their life cycle, from experimentation to production monitoring.

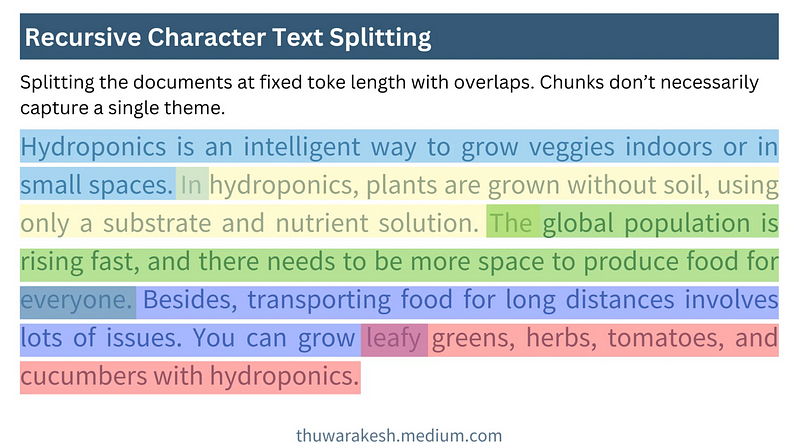

How to design evaluation datasets and generate diverse test cases with synthetic data.

Common failure modes of LLM applications: hallucinations, prompt injections, jailbreaks, and more.

How to build LLM observability in production: tracing, evaluations, and guardrails.

LLM evaluation methods: LLM-as-a-judge, regular expressions, and predictive metrics.

Practical examples: how to evaluate RAG (Retrieval-Augmented Generation) systems, question-answering (QA) systems, and AI agents.

Awaiting release...

© Copyright: this article belongs to AI Sharing Circle. Please do not reproduce it without permission.