Meta Releases Llama 3.2: Introducing the Next Generation of 1B and 3B Small Language Models

Meta recently launched Llama 3.2, the latest version of its language model family, further extending the reach of its AI technology. This release introduces smaller models with 1B (1 billion) and 3B (3 billion) parameters, aimed at a wider range of users, especially those working in resource-constrained environments. L...

Beyond its smaller parameter counts, Llama 3.2 is also commonly run with Q4_K_M quantization (a llama.cpp/GGUF quantization type), which significantly reduces the model's storage requirements and strikes a better balance between performance and efficiency. This lets developers deploy Llama 3.2 flexibly across different scenarios, from mobile devices to cloud computing platforms.
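To make the storage saving concrete, here is a rough back-of-the-envelope estimate. It is a sketch under stated assumptions, not figures from Meta: roughly 1.24 billion and 3.21 billion parameters for the 1B and 3B models, and an average of about 4.85 bits per weight for Q4_K_M (which mixes 4-bit and 6-bit quantized tensors). Metadata and other file overhead are ignored.

```python
# Rough weight-storage estimate: FP16 vs. Q4_K_M.
# Assumptions (not from the article): ~1.24e9 params (1B), ~3.21e9 params (3B),
# and ~4.85 average bits per weight for Q4_K_M; file overhead is ignored.

FP16_BITS = 16.0
Q4_K_M_BITS = 4.85  # assumed average; the exact value varies by model


def weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Return approximate weight storage in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


for name, params in [("1B", 1.24e9), ("3B", 3.21e9)]:
    fp16 = weight_size_gb(params, FP16_BITS)
    q4 = weight_size_gb(params, Q4_K_M_BITS)
    print(f"{name}: FP16 ~{fp16:.2f} GB, Q4_K_M ~{q4:.2f} GB ({fp16 / q4:.1f}x smaller)")
```

Under these assumptions the 3B model shrinks from roughly 6.4 GB at FP16 to roughly 2 GB, about a 3.3x reduction; real files differ somewhat because of per-tensor quantization choices and metadata.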

Officially supported languages: English, German, French, Italian, Portuguese, Hindi, Spanish and Thai.

In contrast, the two larger Llama 3.2 versions, the small (11B) and medium (90B) models, support multimodal input and beat the closed-source Claude 3 Haiku on image understanding tasks.

For a quick hands-on test, update Ollama and try the 3B model, which performs reliably on tasks such as instruction following, summarization, prompt rewriting, and tool use. The 90B model is also available to try online on Groq.
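Once the model has been pulled, a local Ollama server can also be queried programmatically. The sketch below uses only the Python standard library and Ollama's `/api/generate` endpoint on its default port 11434 with streaming disabled; the model tag `llama3.2:3b` and the helper names `build_payload`, `parse_response` and `generate` are illustrative choices, not part of the article.

```python
import json
import urllib.request

# Ollama's default local endpoint for single-prompt generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "llama3.2:3b") -> bytes:
    """Encode a non-streaming /api/generate request body as JSON bytes."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def parse_response(raw: bytes) -> str:
    """Extract the generated text from a non-streaming /api/generate reply."""
    return json.loads(raw.decode("utf-8"))["response"]


def generate(prompt: str, model: str = "llama3.2:3b") -> str:
    """Send one prompt to a running local Ollama server and return its reply."""
    req = urllib.request.Request(
        OLLAMA_URL,
        data=build_payload(prompt, model),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return parse_response(resp.read())
```

With the server running and the model pulled (for example via `ollama pull llama3.2:3b`), calling `generate("Summarize in one sentence: ...")` returns the model's text. Disabling streaming keeps the reply to a single JSON object, which makes parsing simpler at the cost of waiting for the full answer.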

This release marks Meta's continued innovation in the AI space, and Llama 3.2 is expected to give developers and organizations more options for building applications of all kinds.

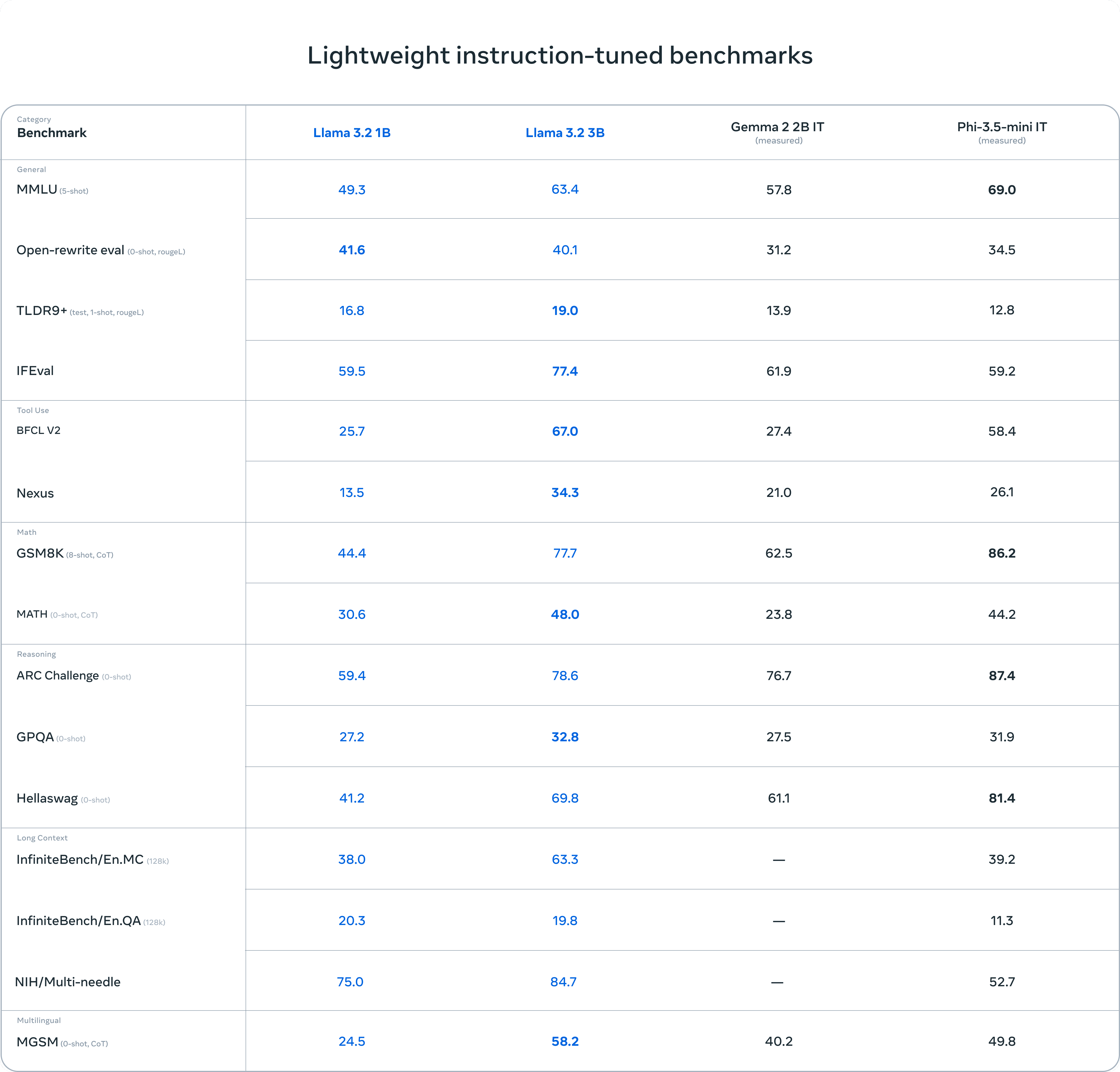

Benchmark results

© Copyright notes

Article copyright AI Sharing Circle. All rights reserved; please do not reproduce without permission.
