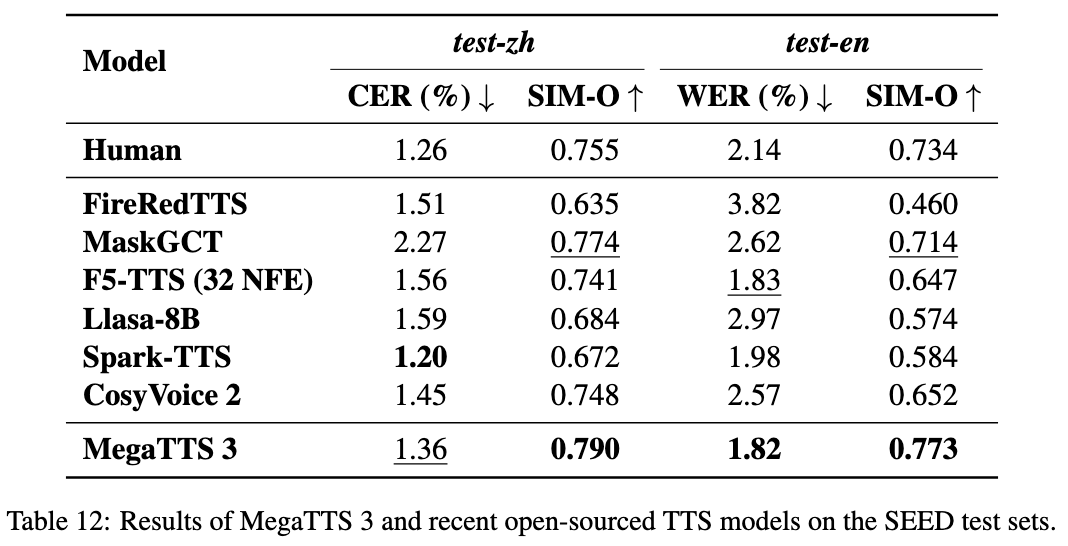

MegaTTS3: A Lightweight Model for Synthesizing Chinese and English Speech

General Introduction

MegaTTS3 is an open-source speech synthesis tool developed by ByteDance in cooperation with Zhejiang University, focused on generating high-quality Chinese and English speech. Its core model has only 0.45B parameters, making it lightweight and efficient, and it supports mixed Chinese-English speech generation and voice cloning. The project is hosted on GitHub, with code and pre-trained models available for free download. MegaTTS3 can mimic a target voice from a few seconds of sample audio and also supports adjusting accent strength. It is suited to academic research, content creation, and speech application development; pronunciation and duration control features are planned.

Function List

- Generate Chinese, English and mixed speech with natural and smooth output.

- Clone a voice with high quality from a small amount of audio, reproducing the target timbre.

- Supports accent strength adjustment to generate speech with accent or standard pronunciation.

- Use acoustic latents to improve model training efficiency.

- Built-in high-quality WaveVAE vocoder for enhanced speech intelligibility and realism.

- Aligner and Grapheme-to-Phoneme submodules are provided to support speech analysis.

- Open source code and pre-trained models for customized development.

Usage Guide

MegaTTS3 requires some basic programming experience, especially with Python and deep learning environments. The following are detailed installation and usage instructions.

Installation process

- Build the environment

MegaTTS3 recommends Python 3.9. You can create a virtual environment with Conda:

`conda create -n megatts3-env python=3.9`

`conda activate megatts3-env`

After activation, run all subsequent commands inside this environment.

- Download the code

Run the following commands in a terminal to clone the GitHub repository:

`git clone https://github.com/bytedance/MegaTTS3.git`

`cd MegaTTS3`

- Install dependencies

The project provides `requirements.txt`. Run the following command to install the required libraries:

`pip install -r requirements.txt`

Installation time depends on your network and device, and usually takes a few minutes.

- Getting the model

Pre-trained models can be downloaded from Google Drive or Hugging Face (see the official README for links). Download them and unzip them into the `./checkpoints/` folder. For example, put `model.pth` at `./checkpoints/model.pth`. The pre-extracted `latents` files need to be downloaded from the specified link into the same directory.
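Before running inference, it can help to confirm that the downloaded files ended up where the commands expect them. The following is a minimal sketch, not part of the MegaTTS3 repository: the helper name `missing_checkpoint_files` is hypothetical, and the file list contains only the `model.pth` example mentioned above, so the real list should be taken from the official README.

```python
from pathlib import Path


def missing_checkpoint_files(root, expected):
    """Return the expected relative paths that do not exist under root."""
    root = Path(root)
    return [name for name in expected if not (root / name).is_file()]


if __name__ == "__main__":
    # "model.pth" follows the example in this article; the actual set of
    # files is defined by the official README and may differ (assumption).
    missing = missing_checkpoint_files("./checkpoints", ["model.pth"])
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All expected checkpoint files are present.")
```

Run it from the repository root so that `./checkpoints` resolves to the folder created in this step.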

- Test the installation

Run a simple test command to verify the environment:

`python tts/infer_cli.py --input_wav 'assets/Chinese_prompt.wav' --input_text "测试" --output_dir ./gen`

If no errors are reported, the installation was successful.

Main Functions

Speech Synthesis

Generating speech is the core function of MegaTTS3. It requires the input of text and reference audio:

- Prepare the files

Place the reference audio (e.g. `Chinese_prompt.wav`) and the `latents` file (e.g. `Chinese_prompt.npy`) in the `assets/` folder. If you do not have a `latents` file, you need the official pre-extracted files.

- Run the command

Enter:

`CUDA_VISIBLE_DEVICES=0 python tts/infer_cli.py --input_wav 'assets/Chinese_prompt.wav' --input_text "你好,这是一段测试语音" --output_dir ./gen`

`--input_wav` is the reference audio path, `--input_text` is the text to be synthesized, and `--output_dir` is the output folder.

- View Results

The generated speech is saved as `./gen/output.wav` and can be played directly.
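When synthesis is driven from a script rather than typed by hand, the same command can be assembled as an argument list. The sketch below uses only the three flags described above; the helper names `latents_path_for` and `build_infer_command` are hypothetical, and the idea that the `latents` file shares the WAV file's name with a `.npy` extension is an assumption based on the `Chinese_prompt.wav` / `Chinese_prompt.npy` example.

```python
import shlex
from pathlib import Path


def latents_path_for(wav_path):
    """Return the .npy path assumed to sit next to a reference WAV file."""
    return Path(wav_path).with_suffix(".npy")


def build_infer_command(input_wav, input_text, output_dir):
    """Build the argument list for tts/infer_cli.py with the flags above."""
    return [
        "python", "tts/infer_cli.py",
        "--input_wav", str(input_wav),
        "--input_text", input_text,
        "--output_dir", str(output_dir),
    ]


wav = "assets/Chinese_prompt.wav"
if not latents_path_for(wav).is_file():
    print("Warning: no latents file found at", latents_path_for(wav))

cmd = build_infer_command(wav, "你好,这是一段测试语音", "./gen")
# Print a shell-quoted version; to execute it, pass cmd to
# subprocess.run(cmd, check=True) from the repository root.
print(shlex.join(cmd))
```

Passing the arguments as a list avoids shell quoting problems with text that contains spaces or punctuation.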

Voice Cloning

Only a few seconds of sample audio are needed to mimic a specific voice:

- Prepare clear reference audio (5-10 seconds recommended).

- Use the synthesis command above, passing the reference audio via `--input_wav`.

- The output voice will match the reference timbre as closely as possible.
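The 5-10 second recommendation can be checked before a clip is used as a reference. This is a small sketch using Python's standard `wave` module, so it assumes an uncompressed PCM WAV file; the function names are hypothetical and not part of MegaTTS3.

```python
import wave


def wav_duration_seconds(path):
    """Return the duration of a PCM WAV file in seconds."""
    with wave.open(str(path), "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def is_recommended_length(path, low=5.0, high=10.0):
    """True if the clip falls within the 5-10 second range suggested above."""
    return low <= wav_duration_seconds(path) <= high
```

For example, `is_recommended_length("assets/Chinese_prompt.wav")` reports whether the bundled prompt falls inside the suggested range.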

Accent Control

Adjust accent strength with the parameters `p_w` and `t_w`:

- Input English audio with an accent:

`CUDA_VISIBLE_DEVICES=0 python tts/infer_cli.py --input_wav 'assets/English_prompt.wav' --input_text "这是一条有口音的音频" --output_dir ./gen --p_w 1.0 --t_w 3.0`

When `p_w` is close to `1.0`, the original accent is retained; increasing it moves the output toward standard pronunciation. `t_w` controls timbre similarity and is usually set `0-3` higher than `p_w`.

- Generate standard pronunciation:

`CUDA_VISIBLE_DEVICES=0 python tts/infer_cli.py --input_wav 'assets/English_prompt.wav' --input_text "这条音频发音标准一些" --output_dir ./gen --p_w 2.5 --t_w 2.5`
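To hear how the accent changes, one option is to generate the same sentence at several `p_w` values. The sketch below only builds the commands; the helper names, the fixed `t_offset` of 1.0, and the per-setting output folders are assumptions made for illustration, while the `--p_w` and `--t_w` flags are the ones shown above.

```python
import shlex


def build_accent_command(input_wav, input_text, output_dir, p_w, t_w):
    """Build an infer_cli.py argument list that includes the accent weights."""
    return [
        "python", "tts/infer_cli.py",
        "--input_wav", str(input_wav),
        "--input_text", input_text,
        "--output_dir", str(output_dir),
        "--p_w", str(p_w),
        "--t_w", str(t_w),
    ]


def sweep_accent(input_wav, input_text, p_values, t_offset=1.0):
    """Return one command per p_w value, with t_w = p_w + t_offset."""
    commands = []
    for p_w in p_values:
        # One folder per setting (hypothetical naming) so that results
        # from different settings do not overwrite each other.
        out_dir = f"./gen/p_w_{p_w}"
        commands.append(
            build_accent_command(input_wav, input_text, out_dir, p_w, p_w + t_offset)
        )
    return commands


for cmd in sweep_accent("assets/English_prompt.wav", "This is a test.", [1.0, 1.5, 2.0, 2.5]):
    print(shlex.join(cmd))
```

Listening to the outputs in order of increasing `p_w` should make the shift from the original accent toward standard pronunciation easier to judge.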

Web UI Operations

Supports operation through a web interface:

- Run:

`CUDA_VISIBLE_DEVICES=0 python tts/gradio_api.py`

- Open your browser at the address (default `localhost:7860`), then upload audio and enter text to generate speech. In a CPU environment, generation takes about 30 seconds.
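If the page does not load, a quick way to tell whether the server has started is to test the port. This is a generic sketch using the standard `socket` module; it assumes the default `localhost:7860` address mentioned above and does not depend on any MegaTTS3 code.

```python
import socket


def is_port_open(host="localhost", port=7860, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolved hosts.
        return False


if __name__ == "__main__":
    if is_port_open():
        print("Web UI is reachable at http://localhost:7860")
    else:
        print("Nothing is listening on port 7860 yet.")
```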

Submodule usage

Aligner

- Function: aligns speech and text.

- Usage: run the example code in `tts/frontend_function.py` for speech segmentation or phoneme recognition.

Grapheme-to-Phoneme

- Function: converts text to phonemes.

- Usage: see `tts/infer_cli.py`; it can be used for pronunciation analysis.

WaveVAE

- Function: compresses audio into `latents` and reconstructs it.

- Limitation: the encoder parameters are not disclosed, so it can only be used with pre-extracted `latents`.

Caveats

- The WaveVAE encoder parameters are not released for security reasons, so only the official `latents` files can be used.

- The project was released on March 22, 2025 and is still under development; pronunciation and duration adjustment features are planned.

- GPU acceleration is recommended. It runs on CPU, but slowly.

Application Scenarios

- Academic research

Researchers can use MegaTTS3 to test speech synthesis techniques and analyze the effect of `latents`.

- Educational aids

Convert textbooks to speech and generate audiobooks to enhance the learning experience.

- Content creation

Generate narration for videos or podcasts and save on manual recording costs.

- Voice interaction

Developers can integrate it into their devices to enable voice conversations in English and Chinese.

QA

- What languages are supported?

Chinese, English, and mixed speech are supported, with possible expansion to other languages in the future.

- Is a GPU required?

No. It can run on CPU, but slowly, so a GPU is recommended.

- How do I handle installation failures?

Update `pip` (`pip install --upgrade pip`), check your network connection, or submit an issue on GitHub.

- Why is the WaveVAE encoder missing?

It is not released for security reasons; the official pre-extracted `latents` are required.

