Maxun: an open source, no-code platform that automatically crawls web data and converts it into APIs or spreadsheets

General Introduction

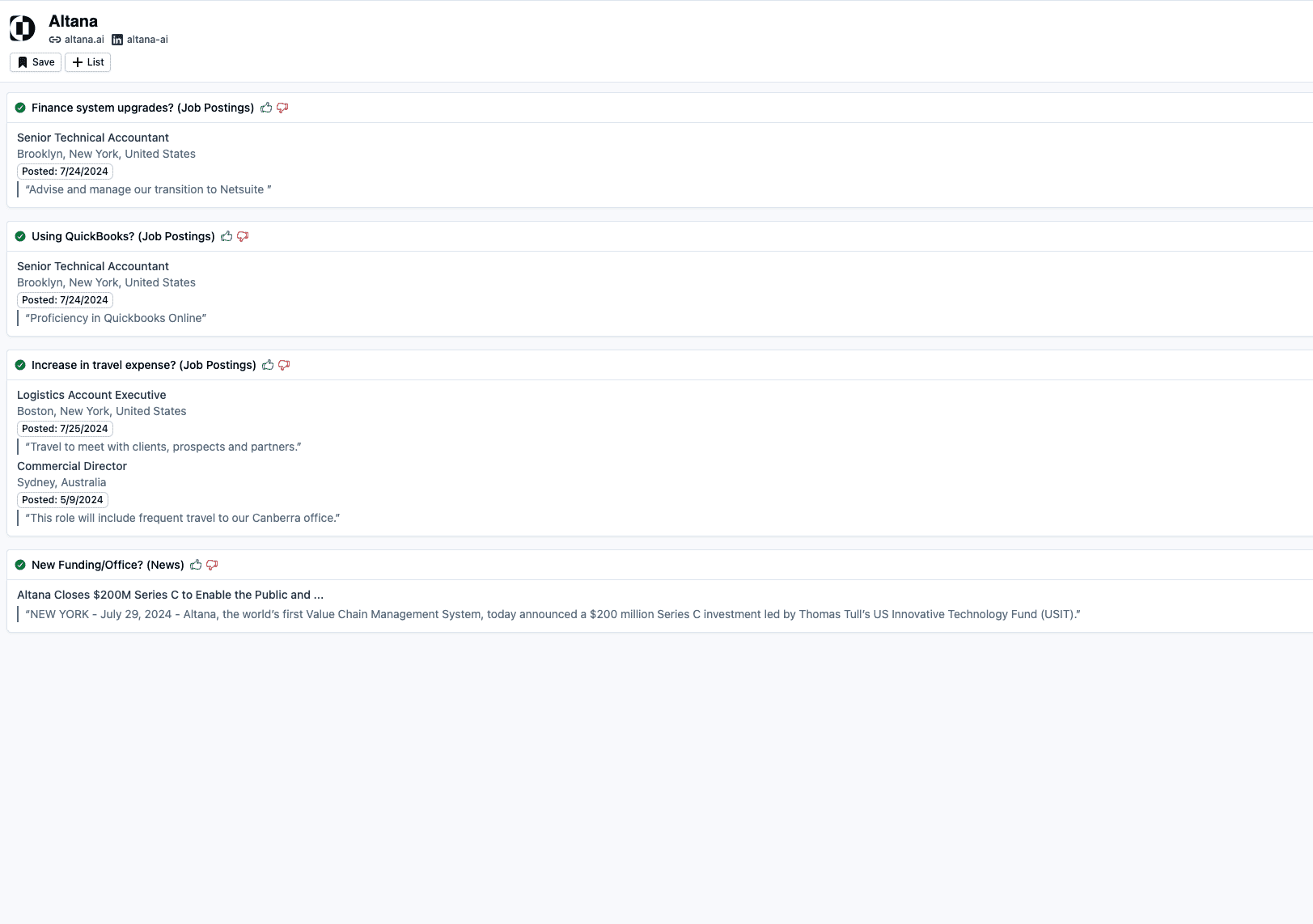

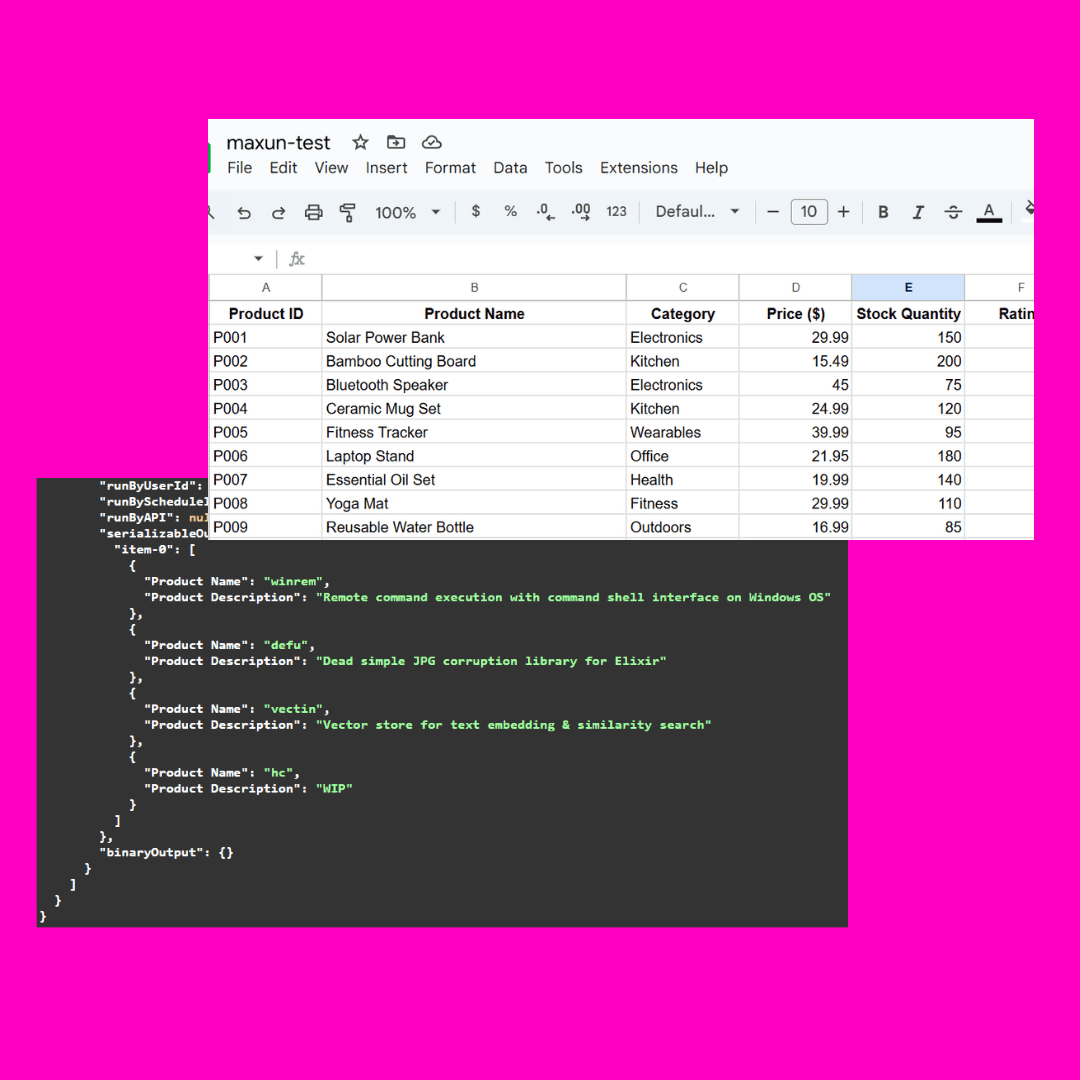

Maxun is an open source, no-code web data extraction platform. Users can train robots in minutes to crawl web data automatically and convert it into APIs or spreadsheets. The platform supports paging and scrolling and can adapt to changes in website layout, which makes it suitable for a variety of data extraction needs.

Feature List

- No-code data extraction: crawl web page data without writing code

- Automated data crawling: robots run crawling tasks automatically

- API generation: converts crawled data into an API

- Spreadsheet conversion: exports captured data to a spreadsheet

- Paging and scrolling support: handles multi-page data and long pages

- Adaptation to website layout changes: adjusts automatically when a page's layout changes

- Login and two-factor authentication support: crawls data from sites that require login (coming soon)

- Integration with Google Sheets: imports data directly into Google Sheets

- Proxy support: uses external proxies to bypass anti-bot protection

Usage Guide

Installation process

Installation with Docker Compose

- Clone the project repository:

git clone https://github.com/getmaxun/maxun

- Go to the project directory:

cd maxun

- Build and start the service using Docker Compose:

docker-compose up -d --build

Manual installation

- Ensure that Node.js, PostgreSQL, MinIO and Redis are installed on your system.

- Clone the project repository:

git clone https://github.com/getmaxun/maxun

- Go to the project directory and install the dependencies:

cd maxun

npm install

cd maxun-core

npm install

- Return to the project root and start the front-end and back-end services:

cd ..

npm run start

- The front-end service will run on http://localhost:5173/ and the back-end service will run on http://localhost:8080/.
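Once the services have been started, it can be handy to confirm that both ports actually answer. The following is a minimal Python sketch, not part of Maxun itself: it only uses the two URLs listed above, and the helper names `is_reachable` and `check_services` are made up for this illustration. Any HTTP response, even an error status, is treated as "the service is running".

```python
import urllib.error
import urllib.request

# URLs taken from the installation notes above.
SERVICES = {
    "front-end": "http://localhost:5173/",
    "back-end": "http://localhost:8080/",
}


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if anything answers HTTP at `url`, whatever the status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied with an error status (e.g. 404), so it is running.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure and similar problems.
        return False


def check_services(services: dict[str, str]) -> dict[str, bool]:
    """Map each service name to whether its URL is reachable."""
    return {name: is_reachable(url) for name, url in services.items()}


if __name__ == "__main__":
    for name, ok in check_services(SERVICES).items():
        print(f"{name}: {'up' if ok else 'not reachable'}")
```

If either service is reported as not reachable, checking the output of `npm run start` (or the container logs for a Docker Compose setup) is a reasonable next step.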

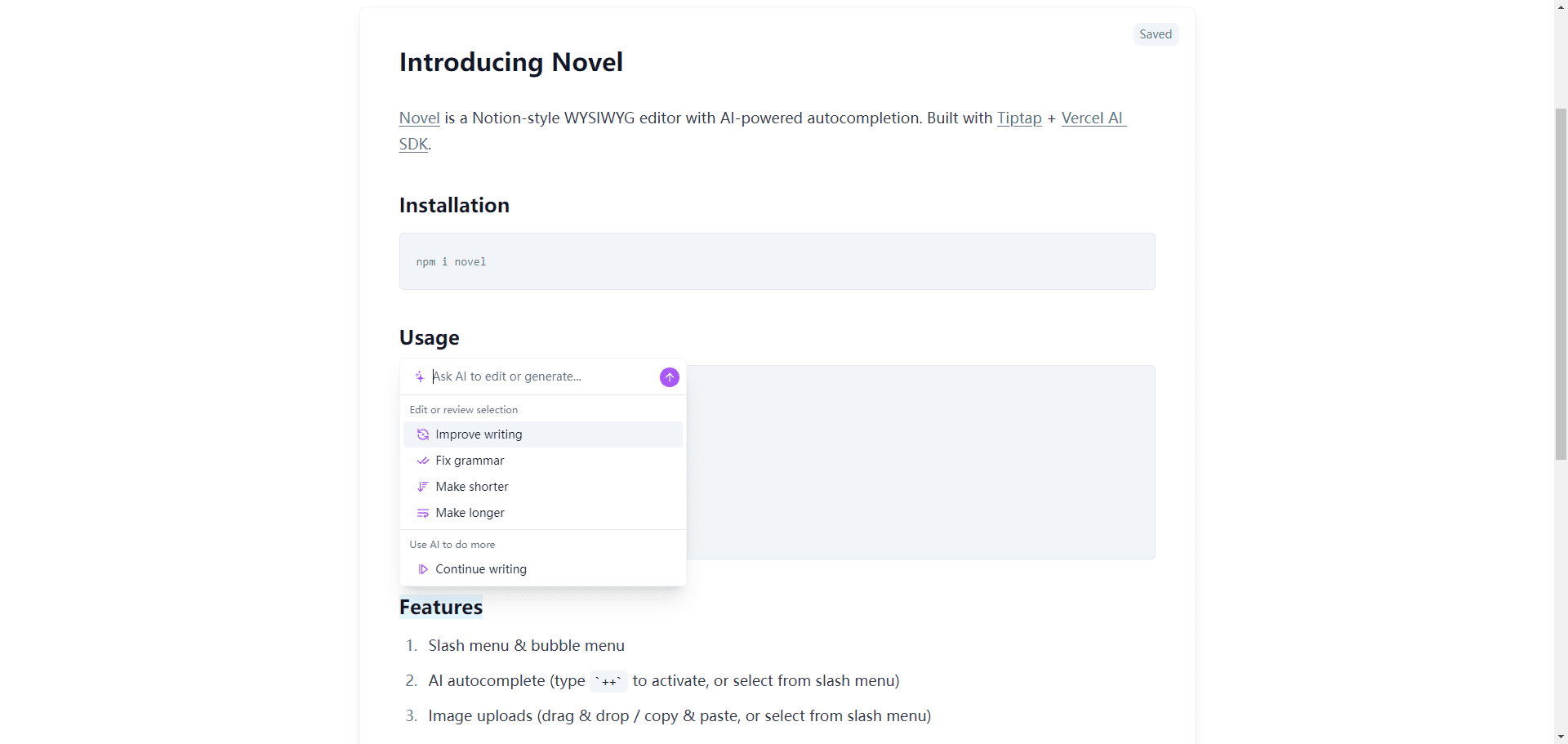

Guidelines for use

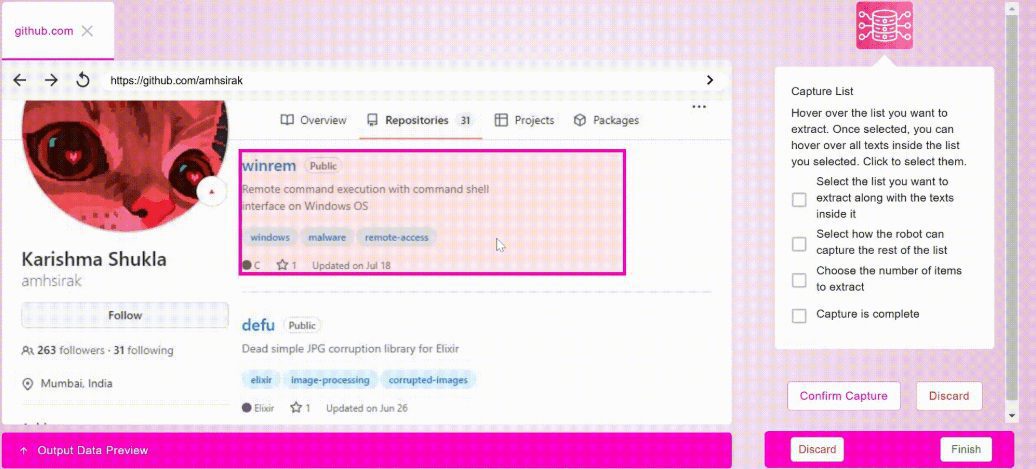

- Creating robots:

- After logging in to the platform, click the "Create Bot" button.

- Select the type of data to be captured (list, text or screenshot).

- Configure the crawling rules, such as the target URL and the crawling frequency.

- Save and start the robot, which will then perform the crawling task automatically.

- Exporting data:

- After the robot's task is completed, go to the task details page.

- Select the export format (API or spreadsheet).

- Click the "Export" button to download the data or get the API link.
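Once a spreadsheet export has been downloaded, it can be processed with ordinary tooling. The sketch below assumes the export is a CSV file with a header row, which the text above does not confirm. The column names `title` and `price` and the sample rows are invented for illustration; real columns depend on what the robot captured.

```python
import csv
import io


def load_rows(csv_text: str) -> list[dict[str, str]]:
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(csv_text)))


# Hypothetical export content, standing in for a downloaded file.
sample = "title,price\nWidget,9.99\nGadget,19.50\n"

rows = load_rows(sample)
# Every CSV value arrives as a string, so numeric columns need converting.
total = sum(float(row["price"]) for row in rows)
print(len(rows), "rows; total price:", round(total, 2))
```

For a real file, the same function can be fed the result of `open(path, newline="", encoding="utf-8").read()`.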

- Handling paging and scrolling:

- Configure the paging and scrolling options when creating a robot.

- The robot will automatically process multi-page data and long pages so that the captured data is complete.
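To make the idea of paging concrete, here is a small Python sketch of the general technique: keep following a "next page" link until there is none, collecting items along the way. This is not Maxun's implementation. The `fetch` callback, the page structure (`items` and `next` keys) and the in-memory `FAKE_SITE` are all assumptions made for the example.

```python
from typing import Callable, Iterator, Optional


def crawl_pages(
    start: str,
    fetch: Callable[[str], dict],
    max_pages: int = 100,
) -> Iterator[str]:
    """Yield items from each page, following "next" links until they run out."""
    url: Optional[str] = start
    seen: set[str] = set()
    # Stop on: no next link, a link we already visited (loop), or the page cap.
    while url is not None and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page = fetch(url)
        yield from page["items"]
        url = page.get("next")


# Hypothetical three-page site held in memory instead of being fetched over HTTP.
FAKE_SITE = {
    "/p1": {"items": ["a", "b"], "next": "/p2"},
    "/p2": {"items": ["c"], "next": "/p3"},
    "/p3": {"items": ["d", "e"], "next": None},
}

items = list(crawl_pages("/p1", FAKE_SITE.__getitem__))
print(items)  # ['a', 'b', 'c', 'd', 'e']
```

The `seen` set and the `max_pages` cap guard against sites whose "next" links loop back on themselves, which is one reason missing or repeated rows show up in naive crawlers.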

- Adapting to website layout changes:

- The platform has built-in algorithms that adapt automatically to changes in page layout.

- There is no need to adjust the crawling rules manually; the robot adapts to the changes on its own.

- Integration with Google Sheets:

- In Platform Settings, configure the Google Sheets integration.

- The data captured by the robot will be imported automatically into the specified Google Sheets spreadsheet.

- Using proxies:

- In Platform Settings, configure the external proxy.

- The robot will perform the crawling task through the proxy, bypassing anti-bot protection.
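For readers unfamiliar with what "performing a task through a proxy" means at the HTTP level, the following Python sketch shows the general idea using only the standard library. It does not reflect how Maxun configures proxies internally. The proxy address and credentials are placeholders, and `build_proxy_opener` is a helper name invented for this example.

```python
import urllib.request


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Create an opener that sends HTTP and HTTPS requests via `proxy_url`."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Placeholder address; replace with the details from your proxy provider.
PROXY_URL = "http://user:password@proxy.example.com:8000"

opener = build_proxy_opener(PROXY_URL)

# With a real proxy, the target site would see the proxy's IP address
# instead of yours. Left commented out because the placeholder does not exist:
# response = opener.open("https://example.com/", timeout=10)
```

The target site only sees the proxy's address, which is why rotating or external proxies are commonly used against IP-based blocking.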

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
