Marco-o1: An Open Source Version of the OpenAI o1 Model Based on Qwen2-7B-Instruct Fine-Tuning to Explore Open Inference Models for Solving Complex Problems

General Introduction

Marco-o1 is an open reasoning model developed by Alibaba International Digital Commerce Group (AIDC-AI) to solve complex real-world problems. The model combines Chain of Thought (CoT) fine-tuning, Monte Carlo Tree Search (MCTS), and innovative reasoning strategies to optimize complex problem solving tasks.Marco-o1 not only focuses on disciplines with standard answers such as mathematics, physics, and programming, but also aims to generalize to domains where there are no clear criteria and where it is difficult to quantify rewards. The goal of the project is to explore the potential of large-scale inference models for multilingual applications, and to enhance the reasoning power and application scope of the models through continuous optimization and improvement.

Function List

- Chain of Thought fine-tuning (CoT): Enhance the inference ability of the model by fine-tuning the base model with full parameters, combining open-source CoT datasets and self-developed synthetic data.

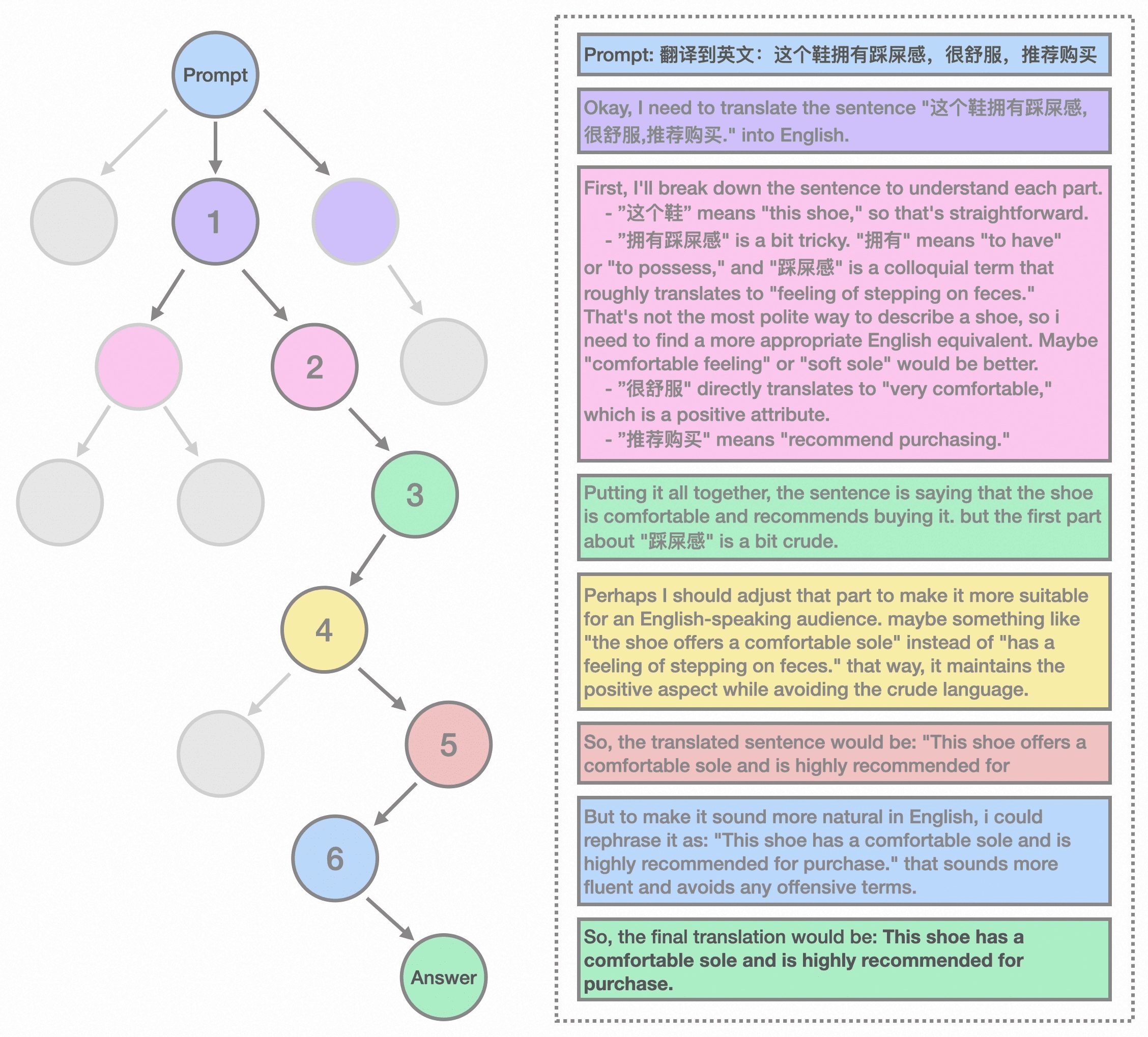

- Monte Carlo Tree Search (MCTS): Use the confidence of the model output to guide the search, expand the solution space, and optimize the inference path.

- Reasoning Action Strategy: Implement innovative reasoning action strategies and reflection mechanisms to explore actions at different levels of granularity and improve the ability of models to solve complex problems.

- Multilingual translation assignments: The first application of a large-scale inference model to a machine translation task, exploring inference time scaling laws in multilingual and translation domains.

- Reward model training: Develop Outcome Reward Modeling (ORM) and Process Reward Modeling (PRM) to provide more accurate reward signals and reduce randomness in tree search results.

- Intensive Learning Training: Optimize the decision-making process of the model through reinforcement learning techniques to further enhance its problem solving capabilities.

Using Help

Installation process

- Visit the GitHub page: Go toMarco-o1 GitHub pageThe

- clone warehouse: Use the command

git clone https://github.com/AIDC-AI/Marco-o1.gitClone the repository to local. - Installation of dependencies: Go to the project directory and run

pip install -r requirements.txtInstall the required dependencies.

Guidelines for use

- Loading Models: In a Python environment, use the following code to load the model:

from transformers import AutoModelForCausalLM, AutoTokenizer model_name = "AIDC-AI/Marco-o1" model = AutoModelForCausalLM.from_pretrained(model_name) tokenizer = AutoTokenizer.from_pretrained(model_name) - Example of reasoning: Reasoning with models, here is a simple example:

python

input_text = "How many 'r' are in strawberry?"

inputs = tokenizer(input_text, return_tensors="pt")

outputs = model.generate(**inputs)

print(tokenizer.decode(outputs[0], skip_special_tokens=True)) - multilingual translation: Marco-o1 performs well in multi-language translation tasks, and the following is an example translation:

python

input_text = "这个鞋拥有踩屎感"

inputs = tokenizer(input_text, return_tensors="pt")

outputs = model.generate(**inputs)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

Detailed function operation flow

- Chain of Thought fine-tuning (CoT)::

- Data preparation: Collect and organize open-source CoT datasets and self-research synthetic data.

- Model fine-tuning: Use the above data to fine-tune the base model with full parameters to improve its inference.

- Monte Carlo Tree Search (MCTS)::

- nodal representation: In the MCTS framework, each node represents a reasoning state in the problem solving process.

- Motion Output: The possible actions of a node are generated by the LLM and represent potential steps in the inference chain.

- Rollback and bonus calculation: During the rollback phase, the LLM continues the reasoning process up to the termination state.

- Bootstrap search: Use reward scores to evaluate and select promising paths to steer the search towards more reliable inference chains.

- Reasoning Action Strategy::

- Action Granularity: Explore actions at different granularities within the MCTS framework to improve search efficiency and accuracy.

- Reflection mechanisms: Prompting models to self-reflect significantly enhances their ability to solve complex problems.

- Multilingual translation assignments::

- mission application: Applying large inference models to machine translation tasks to explore inference time scaling laws in multilingual and translation domains.

- Example of translation: Demonstrate the superior performance of the model in translating slang expressions.

- Reward model training::

- Outcome Reward Modeling (ORM): Train the model to provide more accurate reward signals and reduce the randomness of tree search results.

- Process Reward Modeling (PRM): Further optimization of the model's inference paths through process reward modeling.

- Intensive Learning Training::

- Decision Optimization: Optimize the model's decision-making process and enhance its problem-solving capabilities through reinforcement learning techniques.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related articles

No comments...